How to Track AI Visibility for Small Businesses (2025)

Learn how to track brand visibility across Google AI Overviews, ChatGPT, Perplexity, and Gemini with a beginner-friendly workflow that takes under 60 minutes per week.

If customers ask AI systems for advice before they click a website, your brand needs to show up in those answers. This guide gives small businesses a repeatable, under-60-minutes-per-week workflow to measure and improve visibility across Google AI Overviews, ChatGPT, Perplexity, and Gemini—plus the exact KPIs to track and a one-slide report your stakeholders will understand.

Why this matters now: Google says AI Overviews surface when they’re “most helpful” and include prominent web links to sources. Trusted citations can therefore drive discovery even without classic rankings, per the Google Blog’s May 2025 AI Overviews update and the Google Search Central page on AI features and your website (2024–2025).

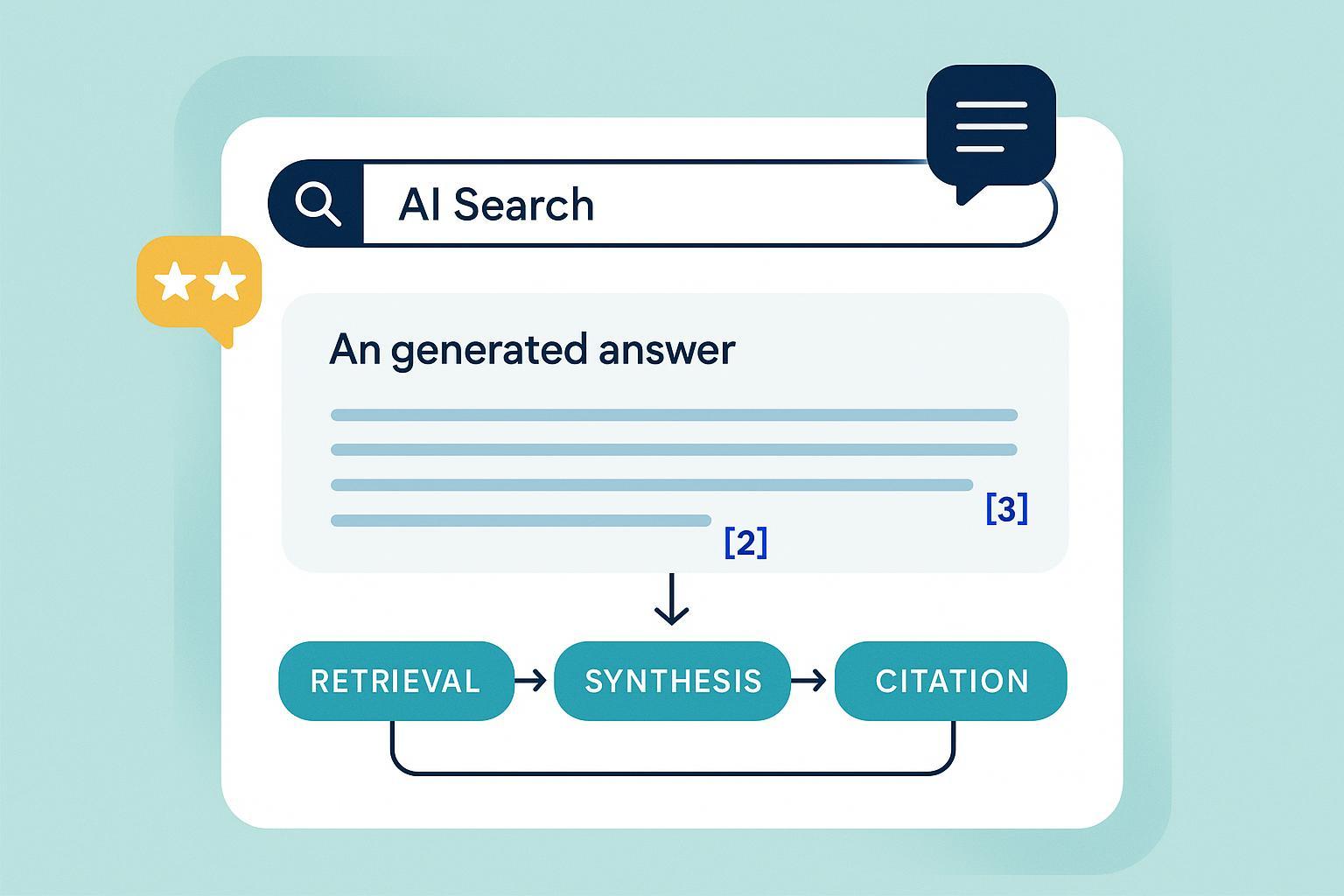

What “AI visibility” means: the five signals

Track these signals across each AI surface. Keep it simple and consistent.

Mentions: Your brand or product is named in the AI answer.

Citations: The AI links to your site as a source (a clickable URL). Perplexity shows explicit citations in each answer, as explained in the Perplexity “Getting started” post (2024–2025).

Placement: Where your brand appears in the answer UI (top card, inline paragraph, or buried below the fold). In Google AI Overviews, web links appear alongside the generated summary, per the Google AI Overviews explainer PDF (2025).

Sentiment: Positive, neutral, or negative language about your brand.

Share of Voice: Your citations versus competitors’ for the same prompt set.

Choose your priority AI surfaces (quick decision framework)

Pick 2–3 surfaces to track first, based on your business model.

Local/service-led (clinics, home services, restaurants): Prioritize Google AI Overviews for discovery and local questions; add Gemini for Google ecosystem reach. Google notes that AI Overviews (AIO) integrate with Search and link out to sources; see the May 2025 announcement. For local context and ecosystem shifts, see BrightLocal’s I/O 2025 roundup of AI and local search.

Research-heavy or niche B2B/ecommerce: Prioritize Perplexity (heavy citation behavior) and ChatGPT for research prompts; still sample Google AI Overviews. Perplexity emphasizes cited answers and premium sources in their Deep Research overview (2024–2025). OpenAI describes how ChatGPT Search rewrites queries and provides source links in its Help Center explainer (2025).

Android/YouTube-heavy audiences: Include Gemini (and AI Mode/Deep Search in the Google ecosystem). See Google’s coverage of AI Mode and Deep Search in the Gemini/AI Mode updates (2025).

Tip: Start narrow. Two engines × 10–25 prompts is enough for a stable baseline. Expand only after four weeks of consistent logging.

Build your tracking plan in under 60 minutes per week

Timebox your routine. Here’s a practical split.

10 minutes: Run your prompt set across priority surfaces

35 minutes: Log results (sheet), capture screenshots, note changes

15 minutes: Decide actions and assign owners

1) Set goals and KPIs (first week only, 10 minutes)

Define success for 30 days. Example targets: 40% visibility rate, 20% citation rate, an average sentiment score ≥ 0.2, and one new high-quality citation per week.
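To make the targets checkable, you can write them down as numbers and compare each week's metrics against them. The sketch below is a minimal illustration; the `TARGETS` keys and the `check_targets` helper are hypothetical names, and the "one new citation per week" goal is left out because it is a count, not a rate.

```python
# Example 30-day targets from this guide, expressed as fractions and scores.
# The key names are illustrative assumptions, not a standard schema.
TARGETS = {
    "visibility_rate": 0.40,
    "citation_rate": 0.20,
    "sentiment_score": 0.2,
}


def check_targets(metrics, targets=TARGETS):
    """Return True/False per KPI: did this period meet or beat its target?"""
    return {name: metrics.get(name, 0.0) >= goal for name, goal in targets.items()}
```

A missing metric counts as 0.0, so a KPI you forgot to log shows up as a miss instead of silently passing.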

2) Create a customer-intent prompt set (15 minutes)

Draft 10–25 prompts that mirror what real customers ask. Include:

Problem/solution: “how to fix a leaking faucet [city]”

Comparison: “best bookkeeping software for freelancers”

Local intent: “emergency dentist near me [city]”

Competitor/context: “alternatives to [competitor] for small teams”

Post-purchase: “how to maintain [product] safely”

Avoid brand-only prompts at first; they inflate results without reflecting discovery.
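If you serve several cities or sell several products, you may prefer to generate the prompt set from templates so the wording stays identical week to week. The following is a rough sketch, not a required tool: the `{city}`, `{competitor}`, and `{product}` placeholders, the `TEMPLATES` dictionary, and the `build_prompts` function are all assumptions made for illustration.

```python
from itertools import product
from string import Formatter

# Prompt templates by intent type. Placeholder names are illustrative.
TEMPLATES = {
    "problem_solution": "how to fix a leaking faucet {city}",
    "comparison": "best bookkeeping software for freelancers",
    "local_intent": "emergency dentist near me {city}",
    "competitor_context": "alternatives to {competitor} for small teams",
    "post_purchase": "how to maintain {product} safely",
}


def build_prompts(templates, values):
    """Expand each template with every combination of its placeholder values."""
    prompts = []
    for intent, template in templates.items():
        # Collect the distinct placeholder names used in this template.
        names = [name for _, name, _, _ in Formatter().parse(template) if name]
        fields = list(dict.fromkeys(names))
        # product() with no inputs yields one empty combination, so templates
        # without placeholders are emitted exactly once.
        for combo in product(*(values[field] for field in fields)):
            text = template.format(**dict(zip(fields, combo)))
            prompts.append({"intent": intent, "prompt": text})
    return prompts
```

For example, calling `build_prompts(TEMPLATES, {"city": ["Austin", "Denver"], "competitor": ["ExampleCo"], "product": ["a water heater"]})` yields seven prompts: two each for the city-based templates and one each for the rest. Keep the generated list fixed for the four-week baseline.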

3) Manual sampling routine (weekly, 10 minutes)

Open incognito/private mode to reduce personalization noise; keep your setup consistent week to week. For reproducibility, use the Chrome DevTools Sensors panel to set test locations for local queries, as documented in the Chrome DevTools Sensors guide (official docs).

Run each prompt on 2–3 priority surfaces. For Google, note whether an AI Overview appears and where links are displayed (see the AI Overviews explainer PDF, 2025). For ChatGPT, review the sources panel when browsing/search is active per OpenAI’s Help Center.

4) Logging template (15 minutes)

Create a simple sheet with these columns:

Date

Prompt/Query (verbatim)

Engine (Google AIO, ChatGPT, Perplexity, Gemini)

Location (city/state/country; lat/long if simulated)

Visibility (Y/N)

Brand Mention (Y/N)

Citation URL(s) to your site

Placement (Top/Inline/Below)

Sentiment (+/0/-)

Competitors cited

Screenshot link

Notes (format changes, anomalies)

This schema mirrors common reporting practices and can later feed a dashboard; see reporting guidance patterns from Semrush and Conductor, such as the Semrush reporting builder overview and Conductor’s tracking frameworks intro (2024–2025).
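A spreadsheet is enough, but if you would rather append rows from a script, the columns above map cleanly onto a CSV file. This is a minimal sketch under that assumption; the `LogRow` class, its field names, and the `append_row` helper are hypothetical and only mirror the column list in this section.

```python
import csv
from dataclasses import asdict, dataclass, fields
from pathlib import Path


@dataclass
class LogRow:
    """One logged prompt run; fields follow the sheet columns in order."""
    date: str               # e.g. "2025-06-02"
    prompt: str             # verbatim prompt/query
    engine: str             # Google AIO, ChatGPT, Perplexity, Gemini
    location: str           # city/state/country, or lat/long if simulated
    visibility: str         # "Y" or "N"
    brand_mention: str      # "Y" or "N"
    citation_urls: str      # your cited URLs, separated by ";"
    placement: str          # "Top", "Inline", "Below", or "" if absent
    sentiment: str          # "+", "0", or "-"
    competitors_cited: str  # competitor names, separated by ";"
    screenshot_link: str
    notes: str = ""


def append_row(path, row):
    """Append one row to the CSV log, writing the header if the file is new."""
    path = Path(path)
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(LogRow)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(row))
```

Appending instead of overwriting keeps every week's rows in one file, which makes the four-week baseline comparison straightforward later.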

5) Light automation options (optional, 10 minutes to set up; then passive)

Google AI Overviews: Use SerpApi’s AIO support; some queries need a follow-up request with a short-lived page_token to fetch the full Overview. See the SerpApi AIO guide (2024–2025). Respect rate limits and ToS.

Perplexity: Use the Sonar or Sonar Pro API to programmatically run queries with cited results, per the Perplexity API Terms of Service (updated May 23, 2025) and the Sonar Pro API overview.

ChatGPT/Gemini: There’s no official public “web browsing with citations” API for general web checks in ChatGPT; use RAG for your own corpus and comply with the OpenAI Usage Policies. For Gemini programmatic work, leverage Vertex AI quotas and RAG Engine as described in Vertex AI quotas and RAG Engine citations (2025).

Metrics that matter (and how to read them)

Use these lightweight formulas. Smooth week-to-week volatility with a 4-week moving average.

Visibility rate = Prompts with your brand mentioned ÷ Total prompts

Citation rate = Prompts where your site is linked ÷ Total prompts

Placement score = Average placement points, where Top = 3, Inline = 2, Below = 1

Sentiment score = Average after mapping positive = +1, neutral = 0, negative = -1

Share of Voice (SOV) = Your citations ÷ (Your citations + top competitors’ citations)

Movement vs. last period = This week’s metric − Last week’s metric
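The formulas above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a fixed method: each row is a dictionary with the hypothetical keys `brand_mention`, `own_citations`, `competitor_citations`, `placement`, and `sentiment`, and placement and sentiment are averaged only over rows where a value was logged.

```python
from statistics import mean

PLACEMENT_POINTS = {"Top": 3, "Inline": 2, "Below": 1}
SENTIMENT_POINTS = {"+": 1, "0": 0, "-": -1}


def weekly_metrics(rows):
    """Compute one week's KPIs from logged rows (one row per prompt run)."""
    if not rows:
        raise ValueError("need at least one logged prompt")
    total = len(rows)
    # Only rows with a recognized placement/sentiment value are averaged.
    placements = [PLACEMENT_POINTS[r["placement"]] for r in rows
                  if r.get("placement") in PLACEMENT_POINTS]
    sentiments = [SENTIMENT_POINTS[r["sentiment"]] for r in rows
                  if r.get("sentiment") in SENTIMENT_POINTS]
    own = sum(r["own_citations"] for r in rows)
    rivals = sum(r["competitor_citations"] for r in rows)
    return {
        "visibility_rate": sum(1 for r in rows if r["brand_mention"]) / total,
        "citation_rate": sum(1 for r in rows if r["own_citations"] > 0) / total,
        "placement_score": mean(placements) if placements else 0.0,
        "sentiment_score": mean(sentiments) if sentiments else 0.0,
        "share_of_voice": own / (own + rivals) if own + rivals else 0.0,
    }


def moving_average(values, window=4):
    """Average of the most recent `window` values (fewer if history is short)."""
    if not values:
        raise ValueError("need at least one value")
    recent = values[-window:]
    return sum(recent) / len(recent)


def movement(this_week, last_week):
    """Change in a metric versus the previous period."""
    return this_week - last_week
```

For example, four prompts with two brand mentions, one prompt citing your site once, and four competitor citations in total give a visibility rate of 0.5, a citation rate of 0.25, and a share of voice of 0.2.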

Reading tips:

Rising visibility without citations: Your brand is discussed but not credited—create citable assets (original stats, FAQs) and tighten entities/structured data.

Strong citations but low placement: Improve clarity and direct answer formatting so your brand appears higher in summaries.

Negative sentiment: Address product/service issues and publish clear, factual responses; improve reviews for local queries.

Turn insights into action (improve your presence)

Prioritize 2–3 actions per month; don’t try everything at once.

Content upgrades: Lead with concise answers to core questions; add bylines, bios, and sources to reinforce expertise. Google’s guidance emphasizes helpful, people-first content and E-E-A-T; see the Search Central helpful content guidance (ongoing).

Structured data and entity alignment: Add schema.org types (FAQPage, Article, Product, LocalBusiness, Review) and keep organization, product, and location entities consistent. This aligns with how AI systems parse relationships; see the combined advice in Search Engine Land’s citation optimization overview and the Semrush AI search optimization guide (2024–2025).

Acquire/strengthen citations: Publish original research, stats, or checklists others will cite; ensure crawl access (where appropriate, don’t block AI crawlers such as PerplexityBot or GPTBot) per general crawling guidance in Search Central and platform docs.

Local signals: Keep your Google Business Profile complete (categories, services, photos), build consistent NAP citations, and solicit quality reviews; see AI-and-local insights from BrightLocal’s I/O 2025 coverage.

Distribution: Seed your best answers on channels AI engines scan: reputable blogs, industry directories, Q&A/community posts, and press mentions.
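For the crawl-access point above, a quick way to see whether your robots.txt blocks the AI crawlers named in this guide is Python's standard `urllib.robotparser`. The sketch below is a rough check, assuming you paste in your robots.txt text; the `ai_bot_access` helper and the `AI_USER_AGENTS` list are hypothetical names, and the result reflects only robots.txt rules, not firewalls or CDN settings.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens mentioned in this guide; extend the list as needed.
AI_USER_AGENTS = ["GPTBot", "PerplexityBot"]


def ai_bot_access(robots_txt, url, agents=AI_USER_AGENTS):
    """Return {agent: allowed?} for one URL, based on robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Example robots.txt that blocks GPTBot but allows everyone else.
EXAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

access = ai_bot_access(EXAMPLE_ROBOTS, "https://example.com/pricing")
```

With the example file, `access` reports GPTBot as blocked and PerplexityBot as allowed, which is the kind of mismatch worth catching before you invest in citable content.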

Reporting: a one-slide monthly summary

Build a single slide stakeholders can read in 60 seconds.

KPIs: Visibility rate, Citation rate, Placement, Sentiment, SOV, Movement (4-week MA)

Highlights: Top new citations, notable competitor moves, queries where you broke through

Actions: The 2–3 changes you shipped and what’s next

Business tie-in: Any impact on leads, calls, or assisted conversions (annotate in GA4 if AI referrals are identifiable; see guidance on segmenting LLM traffic in GA4 from Search Engine Land, 2024).
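If you export referrer URLs from your analytics tool, a small classifier can label the visits that plausibly came from AI assistants. Treat this as a sketch: the hostname list is an assumption that will need updating, the `classify_referrer` helper is hypothetical, and many AI-driven visits arrive with no referrer at all, so the counts are a floor, not a total.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; verify against your own data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer_url):
    """Return the AI surface name for a referrer URL, or None if not matched."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    for known, label in AI_REFERRER_HOSTS.items():
        # Match the host itself or any subdomain of it.
        if host == known or host.endswith("." + known):
            return label
    return None
```

Matching on the parsed hostname avoids false positives from URLs that merely contain one of these names in a path or query string.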

Common pitfalls (and how to avoid them)

Tracking only rankings and ignoring AI answers: Add your weekly AI routine now; treat it as a separate channel.

Brand-heavy prompt sets: Shift to customer-intent prompts that match discovery.

Over-relying on one platform: Track at least two engines; variance is the norm.

Not logging prompt versions/locations: Always record exact phrasing and test location.

Confusing mentions with citations: Prioritize linked citations—they drive traffic and trust.

Ignoring sentiment and placement: Weight visibility by prominence and tone.

No baseline or control set: Run the same 10–25 prompts for four weeks before declaring victory.

Weekly and monthly cadence (cheat sheet)

Weekly (≤60 minutes):

Run your 10–25 prompts on 2–3 surfaces (incognito; set location if needed)

Log visibility, citations, placement, sentiment, SOV; attach screenshots

Flag top 3 action items

Monthly (≈90 minutes):

Expand prompts, compare against baseline, compute moving averages

Ship 2–3 improvements (content, schema, citations, local signals)

Present the one-slide summary and reset goals

Final note and CTA

You can run this workflow with a spreadsheet and light automation. If you want to centralize cross-engine tracking and alerts so you spend more time fixing issues than finding them, try Geneo.