How to Restructure Content for AI Search Optimization: Step-by-Step Guide

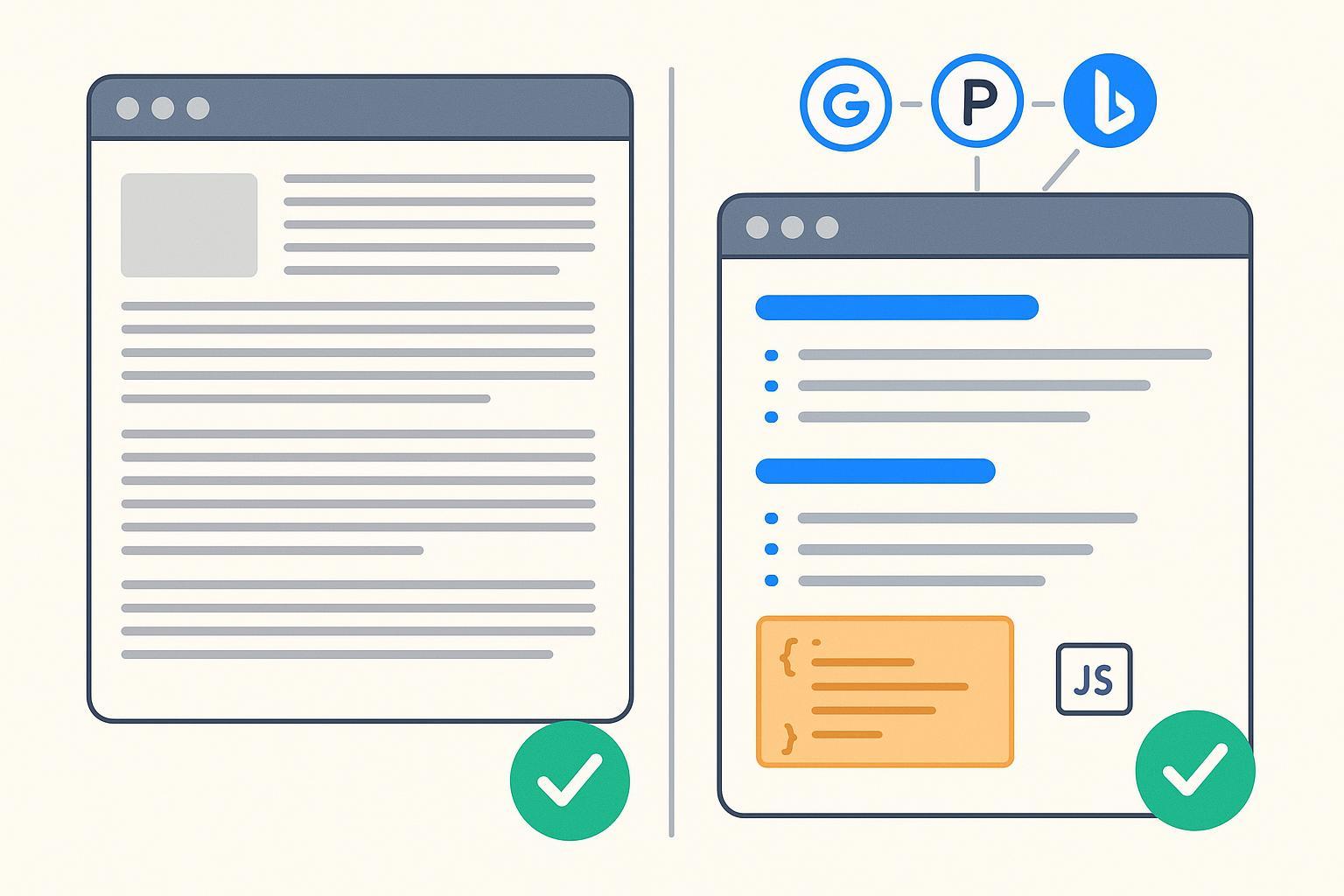

Learn how to restructure existing content for AI search optimization. Actionable steps help boost your chances of being cited in Google AI Overviews, Perplexity, and Bing Copilot.

Optimize your existing pages so they’re more likely to be cited by AI search experiences like Google’s AI Overviews, Perplexity, and Bing Copilot—without sacrificing classic SEO. You’ll leave with a repeatable workflow, validation steps, and a lightweight monitoring plan.

- Difficulty: Intermediate

- Time: 2–6 hours per page (complexity varies)

- Prerequisites: Access to your CMS, ability to edit HTML/JSON-LD, Search Console, and basic SEO tooling

Why this works: These AI systems summarize from the open web and attach references when grounded. Clear, answer-first structure, correct schema, and clean technical foundations increase your likelihood of being retrieved and cited, per official guidance from Google, Perplexity, and Microsoft Bing.

- Google describes AI Overviews as AI-powered results that synthesize information from multiple sources and include links for deeper exploration, emphasizing quality and helpfulness rather than special tricks, as noted in the Google AI Overviews update (May 2024) and in the Google Developers guidance on AI features. In May 2025, Google reiterated that “helpful, satisfying content” is the path to success in AI search, per Google Developers: Succeeding in AI Search (2025).

- Perplexity states it includes citations in each answer and grounds responses in trusted sources, per the Perplexity Publishers Program (2024) and Getting started with Perplexity.

- Microsoft explains Copilot responses are grounded in high‑ranking web results with references and a Learn more section, per Microsoft Support: Copilot in Bing – responsible AI approach and Microsoft Learn: Generative AI – public websites grounding.

Before you start: a quick transformation checklist

You’ll take a page from “topic essay” to “retrieval-friendly, answer-first” without turning it into thin FAQ spam.

- Map queries to intents (questions, tasks, definitions)

- Cover key entities and attributes succinctly

- Restructure headings into question-forward H2/H3s with concise answers up top

- Add copyable steps, checklists, and decision helpers

- Implement matching schema (HowTo, FAQPage, Article) that mirrors visible content

- Bind key claims to authoritative sources with descriptive anchor links

- Clean up technical foundations (indexability, performance, canonical)

- Validate across AI surfaces and set up monitoring

Verification at a glance:

- Structured data validates in Rich Results Test

- Manual checks show your URL as a citation in AI Overviews (when present), Perplexity, or Bing Copilot for target queries

- Headings, anchors, and Q/A blocks render correctly

Step 1: Build your query–intent map

Goal: Capture the real questions and tasks users ask so your page can answer them directly.

Do this:

- List the core topic and audience tasks. Expand into question-style variants (how, what, why, best way, steps, vs, checklist).

- Mine questions from SERP features and communities. Tools like People Also Ask, forum threads, and question datasets help.

- Cluster queries by intent:

  - Informational (how/what/why)

  - Task workflows (step-by-step)

  - Reference/definitions

- Prioritize queries where AI answers frequently include citations and where you can add unique value.
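The clustering step above can be roughed out in code before any tooling is involved. What follows is a minimal Python sketch, assuming simple keyword rules are good enough for a first pass. The rule list and the names `classify_intent` and `cluster_queries` are hypothetical, not taken from any SEO tool, and the patterns will need tuning for your topic.

```python
import re
from collections import defaultdict

# Hypothetical keyword rules, checked in order; the first match wins.
INTENT_RULES = [
    ("task", re.compile(r"\b(how to|step[- ]by[- ]step|steps|checklist|best way)\b", re.I)),
    ("reference", re.compile(r"\b(what is|what are|definition|meaning|vs)\b", re.I)),
    ("informational", re.compile(r"\b(how|what|why)\b", re.I)),
]


def classify_intent(query: str) -> str:
    """Return the first intent whose pattern matches, else 'unclassified'."""
    for intent, pattern in INTENT_RULES:
        if pattern.search(query):
            return intent
    return "unclassified"


def cluster_queries(queries):
    """Group queries by the intent assigned in classify_intent."""
    clusters = defaultdict(list)
    for query in queries:
        clusters[classify_intent(query)].append(query)
    return dict(clusters)


if __name__ == "__main__":
    sample = [
        "how to restructure content step by step",
        "what is an AI Overview",
        "why do AI answers cite sources",
    ]
    for intent, grouped in cluster_queries(sample).items():
        print(intent, grouped)
```

Anything that lands in `unclassified` is worth reviewing by hand, since it may point to an intent the rules do not yet cover.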

Why it matters: Studies show strong overlap between AI Overviews (AO) citations and top organic results, and AO triggers primarily on informational queries. For scale and overlap context, see the 2025 Semrush AI Overviews prevalence/overlap study and the comparative Semrush AI Mode analysis; both indicate high overlap with top 10 results and a predominance of informational intent.

Verify:

- For your top 3 intents, run the queries and record whether AO appears and how often Perplexity and Copilot show references.

Troubleshoot:

- If AO rarely appears, focus on Perplexity and Copilot queries or pick adjacent intents with steadier AO presence. External analyses also note volatility; see Search Engine Land’s 2025 brand/citation patterns.

Step 2: Map entities and coverage gaps

Goal: Ensure the page cleanly explains the core entities and attributes AI systems expect around the topic.

Do this:

- Extract entities: primary concept, subtopics, synonyms, acronyms, related tools, metrics, and constraints.

- For each entity, add a brief definition or description on-page.

- Add a concise FAQ that mirrors real questions (2–6 items). Avoid padding.
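A quick way to spot coverage gaps is to check which entities never appear in the page text. The sketch below is a rough first pass under that assumption; the function name `find_coverage_gaps` and the sample entity map are hypothetical. A mention is not the same as a definition, so treat the output as a list of places to look, not a verdict.

```python
import re


def find_coverage_gaps(page_text: str, entities: dict[str, list[str]]) -> list[str]:
    """Return entities whose name and synonyms are all absent from the text."""
    text = page_text.lower()
    gaps = []
    for name, synonyms in entities.items():
        terms = [name, *synonyms]
        # Whole-word match so that "AO" does not match inside another word.
        found = any(
            re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
            for term in terms
        )
        if not found:
            gaps.append(name)
    return gaps


if __name__ == "__main__":
    page = "AI Overviews cite sources. We use JSON-LD for structured data."
    entity_map = {
        "AI Overviews": ["AO"],
        "structured data": ["schema markup", "JSON-LD"],
        "canonical URL": ["rel=canonical"],
    }
    print(find_coverage_gaps(page, entity_map))
```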

Why it matters: Clear entity definitions and relationships improve disambiguation, making your content easier to ground and cite.

Verify:

- Each key entity has a short explanatory block or definition list.

- FAQ questions exist on the page and are user‑derived (no invented Qs).

Troubleshoot:

- If FAQs feel forced, remove them; Google limits FAQ rich results mostly to authoritative government and health sites since Aug 2023, per Google’s announcement on HowTo/FAQ changes (2023). Keep FAQs for user value, not for SERP decorations.

Step 3: Restructure headings and answer blocks

Goal: Convert narrative paragraphs into liftable Q/A sections that AI systems can quote and link.

Do this:

- Keep your H1 as the task/outcome.

- Add an H2 “Quick answer” for each major question: a 1–2 sentence direct answer, then detail.

- Use H2/H3 question-style headings that are self-contained (“How do you…?”, “What is…?”). Avoid vague headings.

- Provide copyable structures: checklists, numbered steps, decision trees.

Example pattern:

- H2: How do you migrate XYZ without downtime?

  - Direct answer (2 sentences)

  - Steps (1–7)

  - Common pitfalls

  - Evidence links

Verify:

- Skim just the headings and first sentences: can a reader extract the answers without reading everything? If so, AI systems are more likely to be able to lift them, too.
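The skim test can be partly automated. Here is a minimal sketch, assuming you can export each section as a heading plus its first paragraph; `audit_section` is a hypothetical helper, and its sentence splitter is deliberately crude (abbreviations such as "e.g." will be miscounted).

```python
import re

# Words that typically open a question-style heading.
QUESTION_START = re.compile(
    r"^(how|what|why|when|which|who|where|can|should|does|do|is|are)\b", re.I
)


def count_sentences(text: str) -> int:
    """Rough count: split after ., ! or ? when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([part for part in parts if part])


def audit_section(heading: str, first_paragraph: str, max_sentences: int = 2) -> list[str]:
    """Return a list of issues; an empty list means the section passes."""
    issues = []
    title = heading.strip()
    if not (QUESTION_START.match(title) and title.endswith("?")):
        issues.append("heading is not question-style")
    if count_sentences(first_paragraph) > max_sentences:
        issues.append(f"opening answer is longer than {max_sentences} sentences")
    return issues


if __name__ == "__main__":
    print(audit_section(
        "How do you migrate XYZ without downtime?",
        "Run both systems in parallel. Cut over after validation.",
    ))
    print(audit_section("Migration overview", "One. Two. Three."))
```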

Troubleshoot:

- If sections are too long, split into smaller H3s and add a 1–2 sentence summary up top.

Step 4: Implement structured data and semantic HTML

Goal: Align visible content with schema so machines can parse your steps and Q/A reliably.

Do this:

- Use JSON‑LD.

- Choose one or more schema types that match what’s on the page:

  - Article/BlogPosting: always for editorial content.

  - HowTo: when you include a step-by-step procedure.

  - FAQPage: when you have genuine Q/A visible on the page.

- Keep markup consistent with visible content; don’t mark up phantom FAQs.

- Use semantic HTML (main, article, section, hgroup, ol/ul) and clean ordered lists for steps.

Validate:

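- Run the Google Rich Results Test and fix errors. Google retired HowTo rich results in 2023, so that tool may not report on HowTo markup; the Schema Markup Validator (validator.schema.org) checks any schema.org type.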

- Confirm properties per docs: HowTo schema, FAQPage, and Article. Read the intro to structured data.

Sample HowTo JSON‑LD:

    {
      "@context": "https://schema.org",
      "@type": "HowTo",
      "name": "Restructure a page for AI search optimization",
      "totalTime": "PT3H",
      "step": [
        {
          "@type": "HowToStep",
          "name": "Build a query–intent map",
          "text": "Cluster user questions into informational, task, and definition intents."
        },
        {
          "@type": "HowToStep",
          "name": "Map entities and coverage gaps",
          "text": "Define key entities, attributes, and provide concise FAQs."
        },
        {
          "@type": "HowToStep",
          "name": "Restructure headings and answers",
          "text": "Use question-forward H2/H3s with a 1–2 sentence direct answer followed by detail."
        },
        {
          "@type": "HowToStep",
          "name": "Implement schema and semantic HTML",
          "text": "Add JSON-LD for Article/HowTo/FAQPage that mirrors on-page content; validate in Rich Results Test."
        }
      ]
    }
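One way to keep markup and visible content in sync is to generate the JSON-LD from the same step data that renders the page. The following is a minimal sketch of that idea, assuming your CMS can hand you the steps as name/text pairs; `build_howto_jsonld` is a hypothetical helper, not a CMS or library function.

```python
import json


def build_howto_jsonld(title: str, steps: list[tuple[str, str]]) -> str:
    """Serialize visible steps as schema.org HowTo JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": title,
        "step": [
            {"@type": "HowToStep", "name": name, "text": text}
            for name, text in steps
        ],
    }
    # ensure_ascii=False keeps characters such as en dashes readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(build_howto_jsonld(
        "Restructure a page for AI search optimization",
        [
            ("Build a query–intent map",
             "Cluster user questions into informational, task, and definition intents."),
            ("Map entities and coverage gaps",
             "Define key entities, attributes, and provide concise FAQs."),
        ],
    ))
```

The output still needs to be placed in a `script` element of type `application/ld+json` and validated like hand-written markup.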

Troubleshoot:

- If Rich Results Test passes but Search Console flags inconsistencies, ensure the visible Q/A or steps exactly match your JSON-LD and that markup loads server-side or early in the DOM.
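A mismatch between markup and visible text can also be caught before publishing. The sketch below assumes HowTo-style JSON-LD with a `step` array and a plain-text export of the rendered page; `missing_from_page` is a hypothetical name, and the comparison is a simple case-insensitive substring check after collapsing whitespace.

```python
import json


def _normalize(text: str) -> str:
    """Collapse whitespace and lowercase so layout differences are ignored."""
    return " ".join(text.split()).lower()


def missing_from_page(jsonld: str, visible_text: str) -> list[str]:
    """List step names/texts in the JSON-LD that do not appear on the page."""
    data = json.loads(jsonld)
    page = _normalize(visible_text)
    missing = []
    for step in data.get("step", []):
        for key in ("name", "text"):
            value = step.get(key, "")
            if value and _normalize(value) not in page:
                missing.append(f"{key}: {value}")
    return missing


if __name__ == "__main__":
    markup = json.dumps({
        "@type": "HowTo",
        "step": [
            {"@type": "HowToStep", "name": "Build a map", "text": "Cluster user questions."},
            {"@type": "HowToStep", "name": "Add schema", "text": "Add JSON-LD."},
        ],
    })
    print(missing_from_page(markup, "Build a map\nCluster   user questions."))
```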

Step 5: Bind claims with evidence and sources

Goal: Earn trust signals and give AI systems clean citations to attach.

Do this:

- For any stat, definition, or claim, link to a canonical source with descriptive anchor text.

- Prefer original docs or studies; include year and publisher in nearby text.

- Keep link density reasonable (about one external link per 120–150 words).
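The density guideline is simple arithmetic: divide the word count by the number of external links and compare the result with the 120–150 range. A minimal sketch follows, with hypothetical function names and labels; the range is this guide's rule of thumb, not a threshold published by any search engine.

```python
def words_per_external_link(word_count: int, external_links: int) -> float:
    """Average number of words between external links."""
    if external_links == 0:
        return float("inf")
    return word_count / external_links


def density_verdict(word_count: int, external_links: int,
                    low: int = 120, high: int = 150) -> str:
    """Compare the ratio with the guideline range (one link per low..high words)."""
    ratio = words_per_external_link(word_count, external_links)
    if ratio < low:
        return "dense"      # more links than the guideline suggests
    if ratio > high:
        return "sparse"     # fewer links than the guideline suggests
    return "in range"


if __name__ == "__main__":
    # 1,800 words with 13 external links is about 138 words per link.
    print(round(words_per_external_link(1800, 13), 1), density_verdict(1800, 13))
```

A "sparse" result is not a problem in itself; it only matters if key claims are left without a source.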

Examples used in this guide:

- Google’s stance on AO and eligibility: Google Developers – AI features and the May 2024 AI Overviews update.

- AO prevalence and overlap: 2025 Semrush study and 2025 Search Engine Land analysis.

- Perplexity’s citations-by-default: Publishers Program.

- Copilot grounding and references: Microsoft Support article.

Verify:

- Every key claim on your page has a relevant source with a descriptive anchor. No “click here” links.

Troubleshoot:

- If you can’t find a primary source, remove the claim or rephrase to be opinion, clearly marked as such.

Step 6: Technical cleanup and performance

Goal: Remove technical blockers that hinder retrieval and grounding.

Do this:

- Ensure indexability (200 status, no unintended noindex directives, robots.txt not blocking the page, correct canonical URL).

- Improve performance (fast LCP, low CLS), reduce script noise, and keep main content early in the DOM.

- Use clean, descriptive anchor links and avoid heavy interstitials.
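The indexability checks above can be scripted with the Python standard library. This sketch assumes you have already fetched the status code, the HTML, and the robots.txt body by whatever means you prefer; `HeadSignals` and `indexability_issues` are hypothetical names. It does not inspect the `X-Robots-Tag` HTTP header, which can also carry a noindex directive.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class HeadSignals(HTMLParser):
    """Collect the meta robots directive and canonical URL from raw HTML."""

    def __init__(self):
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.robots = (attributes.get("content") or "").lower()
        elif tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")


def indexability_issues(url, status, html, robots_txt, user_agent="*"):
    """Return a list of blockers found for one page; empty means none found."""
    issues = []
    if status != 200:
        issues.append(f"status is {status}, expected 200")

    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        issues.append("blocked by robots.txt")

    signals = HeadSignals()
    signals.feed(html)
    if "noindex" in signals.robots:
        issues.append("meta robots contains noindex")
    if signals.canonical and signals.canonical != url:
        issues.append(f"canonical points to {signals.canonical}")
    return issues


if __name__ == "__main__":
    page = '<head><link rel="canonical" href="https://example.com/guide"></head>'
    rules = "User-agent: *\nDisallow: /private/\n"
    print(indexability_issues("https://example.com/guide", 200, page, rules))
```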

Why it matters: All three systems pull from the open web and prefer accessible, fast, unambiguous pages. Google reiterates that there are no special AO technical tricks—classic SEO fundamentals still apply, per Google Developers: Succeeding in AI Search (2025).

Verify:

- Crawl the page and confirm indexable, canonical URLs; measure LCP/CLS; ensure structured data loads without JS errors.

Troubleshoot:

- If performance is poor, defer non-critical scripts and compress images; move JSON-LD server-side.

Step 7: Validate across AI surfaces

Goal: Confirm your restructuring shows up as citations and references where applicable.

Do this:

- Google: Run target queries periodically. Note whether an AI Overview appears and if your URL is cited among the links. Document date, location, and query phrasing to account for variability described in the AI features doc and acknowledged in the May 2024 AO update.

- Perplexity: Test queries and check inline citations. Try Focus options (All, Academic, Reddit) and observe whether your page is cited; the platform highlights citations by design, per Perplexity’s guides.

- Bing Copilot: Enter your queries and look for references in the Learn more section; Microsoft documents that web-grounded answers include references, per Microsoft Support.

Verify:

- Capture screenshots and URLs in a log with timestamps for before/after comparison.
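A plain CSV file is enough for this log. The sketch below shows one possible layout, assuming the column names in `FIELDS` (they are hypothetical, so rename them to suit your team); `make_row` and `write_log` are illustrative helpers.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical column layout for the citation log.
FIELDS = ["checked_at", "surface", "query", "location",
          "answer_shown", "url_cited", "screenshot"]


def make_row(surface, query, location, answer_shown, url_cited,
             screenshot="", checked_at=None):
    """Build one log row; booleans become Y/N to match the worksheet."""
    when = checked_at or datetime.now(timezone.utc)
    return {
        "checked_at": when.isoformat(timespec="seconds"),
        "surface": surface,
        "query": query,
        "location": location,
        "answer_shown": "Y" if answer_shown else "N",
        "url_cited": "Y" if url_cited else "N",
        "screenshot": screenshot,
    }


def write_log(rows, out):
    """Write a header plus all rows to any text file-like object."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    # Swap the buffer for open("citation_log.csv", "w", newline="") to write a file.
    buffer = io.StringIO()
    write_log([make_row("Perplexity", "how to restructure content", "US", True, False)], buffer)
    print(buffer.getvalue())
```

Recording the timestamp in UTC keeps before/after comparisons consistent when several people run checks from different locations.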

Troubleshoot:

- If no citations appear after several weeks, revisit Step 3 (shorten answer blocks), strengthen evidence links, and verify indexability. External context shows variability and alignment with top organic results; ensure your page ranks competitively for the target intents, supported by the 2025 Semrush overlap study.

Step 8: Monitor and maintain

Goal: Keep your content fresh and track changes in AI-surface behavior.

Do this:

- Refresh recency-sensitive sections quarterly or when facts change.

- Re-run validation checks after major edits.

- Maintain a simple dashboard/log for target queries and whether your page appears as a citation.
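For a lightweight dashboard, a per-surface citation rate is often enough to show a trend. This is a minimal sketch, assuming each log entry is a dictionary with hypothetical `surface` and `url_cited` keys where `url_cited` is "Y" or "N"; adapt the keys to whatever your log actually uses.

```python
from collections import defaultdict


def citation_rates(rows):
    """Share of logged checks per surface in which the page was cited."""
    totals = defaultdict(int)
    cited = defaultdict(int)
    for row in rows:
        surface = row["surface"]
        totals[surface] += 1
        if row["url_cited"] == "Y":
            cited[surface] += 1
    return {surface: cited[surface] / totals[surface] for surface in totals}


if __name__ == "__main__":
    log = [
        {"surface": "AI Overviews", "url_cited": "Y"},
        {"surface": "AI Overviews", "url_cited": "N"},
        {"surface": "Perplexity", "url_cited": "Y"},
    ]
    for surface, rate in citation_rates(log).items():
        print(f"{surface}: {rate:.0%}")
```

With only a handful of checks per surface the rates will swing widely, so compare them over comparable periods and query sets.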

Why it matters: AO, Perplexity, and Copilot behavior evolves. Ongoing monitoring helps you respond to the volatility noted in third‑party analyses such as Search Engine Land’s 2025 analysis of citation patterns.

Toolbox: Research and monitoring

Disclosure: Geneo is our product.

- Geneo: Multi‑platform AI search visibility monitoring across ChatGPT, Perplexity, and Google AI Overviews; tracks brand mentions/citations, sentiment, and historical queries; can suggest content strategy improvements for teams.

- BrightEdge: Enterprise SEO platform with AI search/Answer Engine tracking; keyword/topic research and reporting.

- seoClarity: Enterprise SERP intelligence with answer box and AI feature monitoring; People Also Ask insights.

- Authoritas: SERP and entity insights with question mining; AI feature tracking in studies.

- AlsoAsked or AnswerThePublic: Adjacent utilities for question mining and FAQ discovery.

How to choose:

- Coverage: Which AI surfaces does it monitor (AO, Perplexity, Copilot, others)?

- Focus: Research vs monitoring vs reporting depth.

- Practicality: Learning curve, data exports/APIs, collaboration, and cost.

Troubleshooting: If X, try Y

- Schema validates but no impact on AI citations

  - Tighten your question headings and put succinct answers first; ensure evidence links are present.

- AO appears but you’re never cited

  - Strengthen organic rankings for the intent, deepen entity coverage, and reduce ambiguity. Overlap with top organic results is high per the 2025 Semrush study.

- Perplexity ignores your page

  - Ensure the content is accessible (no paywall), clearly answers the question, and provides unique insights. Re-check after adding concise summaries; Perplexity emphasizes citations in every answer, per its Publishers Program.

- FAQ rich results missing

  - Expected for most sites since Aug 2023; keep FAQs if useful to readers, per Google’s FAQ changes announcement.

Verification worksheet (copy/paste)

- Page URL:

- Target query intents (3–5):

- Entities covered (list):

- H2/H3 question headings present? Y/N

- Each major question has a 1–2 sentence direct answer? Y/N

- Schema types used (Article/HowTo/FAQPage):

- Rich Results Test passed? Y/N Date:

- Evidence links added for key claims? Y/N

- Performance: LCP/CLS:

- Google AO shows for target queries? Y/N; Your URL cited? Y/N

- Perplexity cites your URL? Y/N

- Bing Copilot references your URL? Y/N

- Screenshots captured in log? Y/N

- Next review date:

Key sources and further reading

- Google’s positioning and eligibility for AI features: Google Developers – AI features in Search and the Google AI Overviews update (May 2024). See also Succeeding in AI Search (2025).

- AO prevalence and overlap with organic: 2025 Semrush AI Overviews study and 2025 Search Engine Land analysis.

- Perplexity citations: Perplexity’s Publishers Program and Getting started.

- Microsoft Copilot grounding and references: Microsoft Support explainer and Microsoft Learn guidance.

- Structured data specs and validation: Intro to structured data, HowTo, FAQPage, and Article. FAQ eligibility changes: Aug 2023 announcement.

You now have a practical, evidence-led workflow to refactor any page for AI search optimization while upholding content quality and classic SEO fundamentals. Apply it to a single high-impact page first, verify citations, then scale across your library.