How to Interview a GEO Strategist: Step-by-Step Guide for AI Search

Learn how to interview a GEO Strategist with a practical, structured process—scorecards, real exercises, red flags, and evidence-based evaluation steps.

A quick note on the role: here, GEO means Generative Engine Optimization—earning accurate inclusion, citations, and positive representation for your brand in AI-generated answers. Think ChatGPT Search, Perplexity, and Google AI Overviews. It is not a geospatial or real estate “geo” role. If you want a deeper contrast with classic SEO, see this comparison of Traditional SEO vs GEO.

What you’re actually hiring for

You’re hiring someone who can systematically increase your brand’s presence and accuracy in AI answers. Outcomes include: higher inclusion and citation share across engines, fewer hallucinations about your brand, and clear playbooks spanning content, structured data, and third‑party authority. Platform behaviors differ—Google notes there’s no special markup for AI features and standard quality + structured data applies; see Google’s “AI features and your website” guidance. OpenAI confirms that ChatGPT Search presents answers with a Sources button linking to references; see OpenAI’s ChatGPT Search announcement. Perplexity documents the PerplexityBot and appearance guidelines; see Perplexity’s bot documentation. For a practical definition of GEO itself, Search Engine Land’s overview of GEO is a solid primer.

The GEO competency model and scorecard

Use a structured scorecard. Calibrate in advance, score independently, and discuss later. Suggested weights below—adjust to your needs.

| Competency | Weight | What “meets” looks like (behavioral signals) |

|---|---|---|

| Strategy | 20% | Explains how LLMs retrieve/synthesize; sets entity‑first strategy; defines inclusion/citation goals by query cohorts; prioritizes based on impact and feasibility. |

| Semantic/Entity Optimization | 15% | Maps entities, aligns schema, drafts disambiguation; ensures author/org markup; proposes corroborating third‑party sources. |

| Technical GEO | 15% | Audits crawl/indexability; uses structured data; understands robots.txt and AI agents (GPTBot, Google‑Extended, PerplexityBot); flags nonstandard controls like “llms.txt” as unofficial. |

| Content for AI Extraction | 15% | Produces answer‑extractable structures (clear definitions, FAQs, tables, citations); writes evidence blocks with primary sources. |

| Measurement/Analytics | 15% | Defines inclusion rate, citation share, sentiment, platform mix; suggests reproducible prompt batteries and logging; proposes review cadence. |

| Communication & XFN Leadership | 10% | Translates GEO to execs and content teams; partners with PR/Comms, Legal, Product, Data; documents playbooks. |

| Adaptability/Experimentation | 10% | Designs small controlled tests; monitors model/policy changes; updates hypotheses responsibly. |

A structured, fair interview plan

Consistency protects both quality and fairness. Align with your HR and legal teams and apply the same process to all candidates for the same level. The EEOC’s Uniform Guidelines encourage job‑related, consistent selection procedures; treat them as north stars for process design and documentation. See the EEOC’s Uniform Guidelines on Employee Selection Procedures.

- Role calibration and scorecard finalization. Confirm business objectives, core query cohorts, priority engines, and the weighted scorecard. Define must‑haves vs nice‑to‑haves.

- Screen for fundamentals. Short call: GEO vs SEO differentiation, platform literacy (ChatGPT Search vs classic ChatGPT, Perplexity’s citation patterns, Google AI Overviews), and evidence practices.

- Work samples and exercises. Use standardized tasks (below). Provide accessible formats and equal time limits. Pay for substantial take‑homes.

- Panel interviews. Assign focus areas: strategy, semantic/technical, content ops, measurement/analytics, and stakeholder communication. Score independently using the same rubrics.

- Debrief with documentation. Compare notes against the scorecard, not persona fit. Summarize rationale and retain records per policy.

Fairness and privacy reminders: keep tasks job‑related and proportional; offer reasonable accommodations; avoid sensitive data in exercises (use synthetic or public sources). If you evaluate bot controls, remember that some crawlers have been observed bypassing robots rules—Cloudflare reported undeclared crawlers allegedly used by Perplexity; see Cloudflare’s analysis of stealth crawling. Evaluate candidate awareness without encouraging any policy‑violating tactics.

The exercise library (with rubrics)

Keep all instructions identical for each candidate at the same level. Require timestamped screenshots, prompts, and links. Define pass thresholds in advance (e.g., 3.0/5.0 on each competency within the task).

1) Live prompt‑debugging (Perplexity)

Scenario: Your brand is missing from a Perplexity answer for “best X platforms for Y.” The candidate has 20 minutes to improve inclusion or clarify citation.

What good looks like: Hypotheses are explicit; the candidate inspects entity ambiguity, adds or references corroborating sources, and suggests content/snippet adjustments. They document steps and capture before/after states. Grade on hypothesis clarity, entity handling, evidence quality, and transparency.

2) Mini AI‑visibility audit

Scenario: Provide a sample domain and existing structured data. Ask the candidate to identify missing entities, propose schema updates, and draft a short, answer‑extractable section (concise definition, 3–5 bullet summary, a small specs table, and 2–3 primary citations).

What good looks like: Prioritized, feasible fixes aligned with Google’s stated guidance that no special AIO markup exists; emphasis on quality content and appropriate structured data per Google’s AI features documentation. Grade on prioritization, feasibility, and alignment.

3) Metrics design task

Scenario: Define KPIs and instrumentation for AI visibility, including inclusion rate, citation share, sentiment, and platform mix. Outline a reproducible testing cadence (prompt batteries, locations, time windows).

What good looks like: Clear metric definitions, collection methods, and review cadence. Ethical guardrails (no policy tampering). For deeper literacy on measurement concepts, you can brief interviewers with LLMO metrics for accuracy, relevance, and personalization.

4) Policy‑aware disambiguation task

Scenario: Your brand name collides with an unrelated entity. Ask the candidate to propose a disambiguation block and citations policy that reduce hallucinations.

What good looks like: Precise language, explicit entity cues (schema, on‑page, and corroborating sources), and editorial fit. Grade on accuracy standards and clarity.

Portfolio and evidence verification

Request a small, well‑documented portfolio rather than big claims. Ask for: reproducible prompts, platform + date/time + location settings, screenshots with visible sources, and links to cited pages. Encourage archiving of key sources (e.g., via the Wayback Machine) to preserve the state of evidence. Favor examples where hypotheses connect to plausible mechanisms—entity fixes, schema updates, authoritative coverage—over “this tool did it” narratives.

When technical controls come up, probe for specific, correct knowledge. Candidates should understand robots.txt directives for GPTBot, Google‑Extended, and PerplexityBot. They should also note that proposed controls like “llms.txt/ai.txt” are unofficial as of today. Official bots and controls are documented by platforms and public guidance.

Red flags you can spot early

- Tool‑only thinking without an entity/semantic model of how inclusion happens.

- Guaranteed placements or promises of exact AIO slotting.

- No reproducible evidence (no prompts, no timestamps, no links/archives).

- Dismisses structured data, authorship, or corroboration from third‑party authorities.

- No plan to monitor inclusion, citations, and sentiment over time.

Practical example: reviewing a candidate’s KPI proposal with Geneo

Disclosure: Geneo is our product.

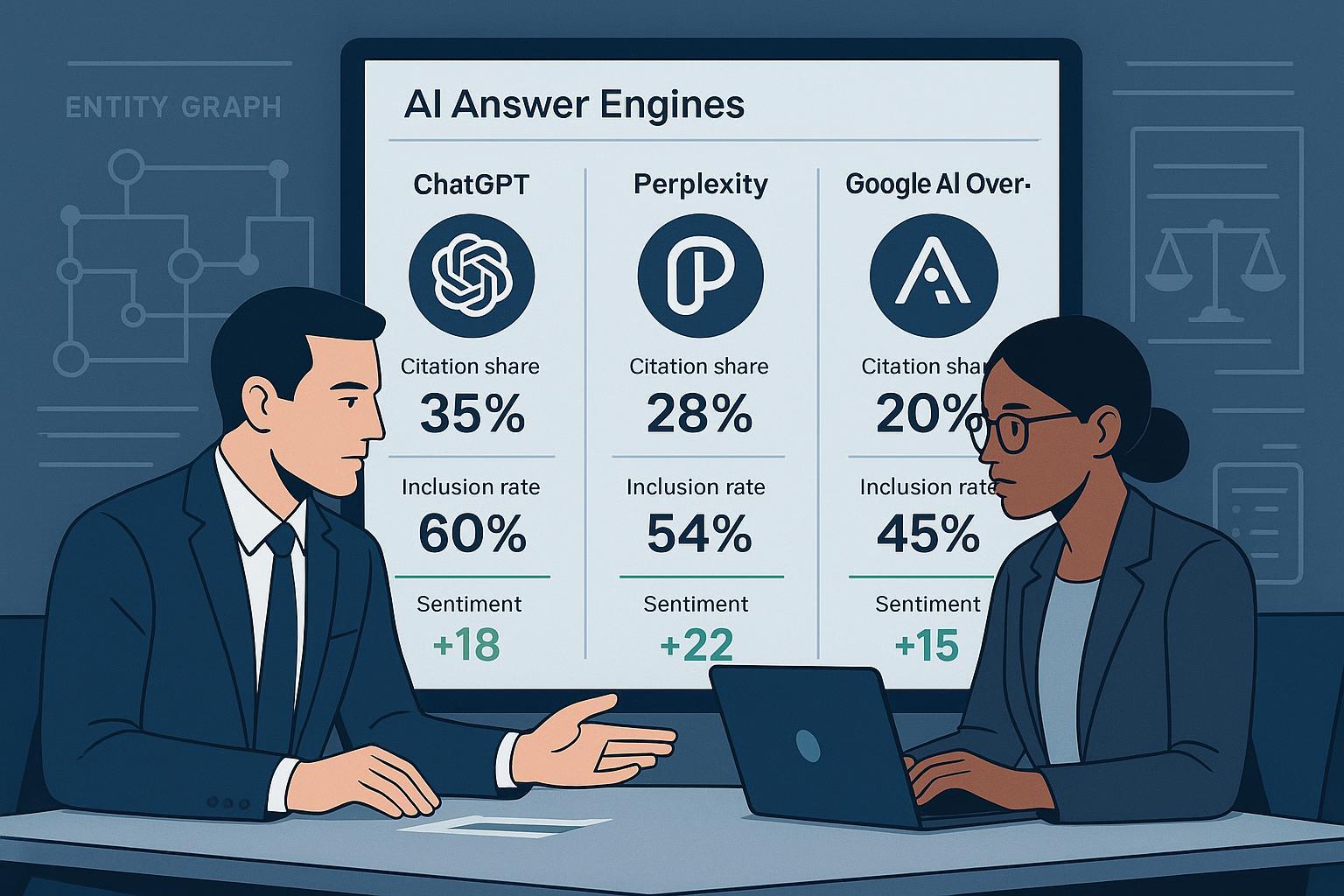

Here’s a simple, neutral workflow you can run during the debrief. Ask the finalist to walk through their KPI proposal—how they’d track inclusion rate and citation share across ChatGPT Search, Perplexity, and Google AI Overviews, and how they’d monitor sentiment over time. Geneo can be used to compare cohorts of tracked queries, see which engines cite your brand, and observe sentiment trends so you can validate whether the candidate’s measurement plan is practical. Keep the conversation focused on definitions, cadence, and stakeholder reporting. For a market view of tools that monitor AI Overviews, see this landscape of Google AI Overview tracking tools.

Post‑interview validation and documentation

Before making an offer, run two quick checks. First, reference calls focused on process and reproducibility (“How did they validate that inclusion gains stuck across time and locations?”). Second, a short, paid trial or a bounded pilot sprint using synthetic or public data. Document your final decision against the scorecard, not gut feel.

FAQs you can use during the interview

- How would you explain the difference between GEO and SEO to our CEO in 90 seconds?

- When an AI answer omits our brand, what are your top three hypotheses and how would you test them?

- Show me how you would structure a page so an answer engine can extract a clean, attributed snippet.

- What is your KPI set for AI visibility, and how often would you review it with stakeholders?

- How do you handle crawler controls for GPTBot, Google‑Extended, and PerplexityBot—and what do you do when crawlers don’t follow the rules?

Closing notes for interviewers

- Stay evidence‑first and process‑true. Score against behaviors you can observe, not charisma.

- Keep a living rubric. As models and policies shift, update what “good” looks like and communicate those changes to your panel.

- Avoid over‑engineering. Two to three well‑designed exercises plus a calibrated panel is usually enough.