How to Increase Brand Mentions in ChatGPT: A Practical Guide

Step-by-step AEO/GEO tactics to increase brand mentions in ChatGPT—entity, schema, content engineering, and measurement tips.

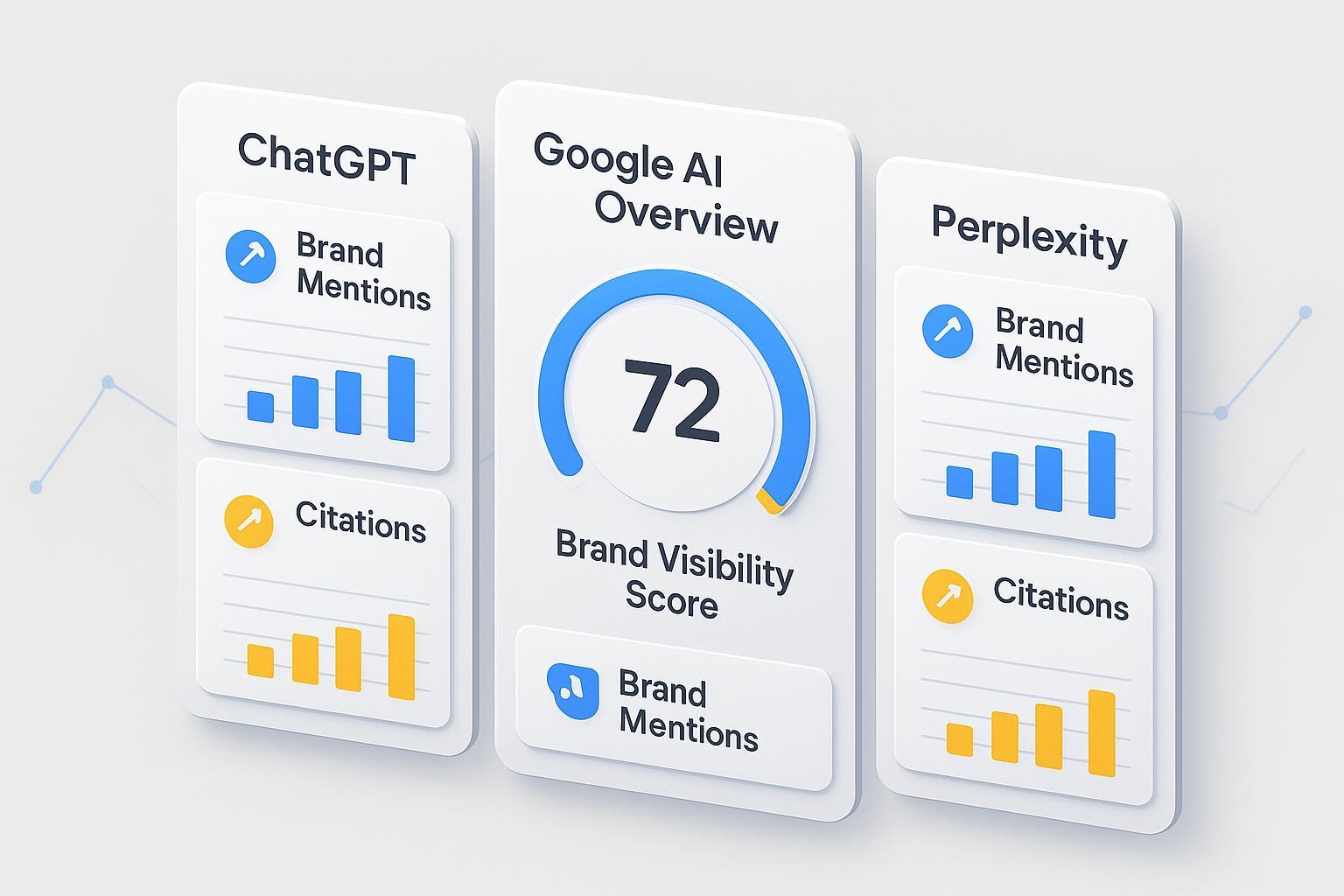

If you ask ChatGPT for recommendations, there’s a good chance you’ll get generalized guidance instead of named brands. For marketers, that omission hurts: fewer citations, weaker referrals, and lost mindshare. This how‑to guide explains why under‑mention happens and lays out a practical plan to increase brand mentions in ChatGPT and other answer engines—anchored by a Brand Visibility Score so you can measure real progress.

Why low mentions happen and why a score matters

Under‑mention isn’t random. It’s driven by training‑data patterns, conservative safety policies, mode‑dependent citation behavior, and inconsistent entity signals. ChatGPT typically shows citations when its browsing or Deep Research tools are active; in pure model mode, sources often aren’t displayed. OpenAI’s own pages on ChatGPT capabilities and Deep Research describe when and how sources appear, but not the internal ranking logic.

On Google’s side, AI Overviews synthesize across standard ranking systems and Gemini grounding, then present selected citations. Google reminds publishers that structured data aids interpretability but does not guarantee inclusion, per its “AI features and your website” documentation. Perplexity, by contrast, retrieves in real time and consistently shows numbered sources, emphasizing recency as described in Perplexity Deep Research.

Because you can’t directly control these engines, you need a north star metric. A Brand Visibility Score (for example, a 0–100 measure that rolls up brand mentions, link visibility, reference counts, and competitive positioning across engines) gives your team a single number to target while you execute improvements and track momentum. For background on KPI design and Share‑of‑Answer (SoA), see this AI Search KPI framework.
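To make the roll-up concrete, here is a minimal sketch of a 0–100 score built from the four components named above. The weights, the signal names, and the 0..1 scaling are all assumptions made for illustration; the article does not specify how any particular Brand Visibility Score is calculated.

```python
# Hypothetical weights: the article does not say how the score is computed.
WEIGHTS = {
    "mention_rate": 0.4,       # share of answers that name the brand
    "link_visibility": 0.2,    # share of answers that link to the brand's site
    "reference_rate": 0.2,     # share of answers that cite the brand as a source
    "competitive_share": 0.2,  # brand mentions / all tracked-brand mentions
}


def visibility_score(signals: dict[str, float]) -> float:
    """Roll four signals, each already scaled to 0..1, into a 0-100 score."""
    for name in WEIGHTS:
        value = signals[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    weighted = sum(WEIGHTS[name] * signals[name] for name in WEIGHTS)
    return round(100 * weighted, 1)


# Made-up example values, not measured data.
example = {
    "mention_rate": 0.30,
    "link_visibility": 0.10,
    "reference_rate": 0.25,
    "competitive_share": 0.20,
}
score = visibility_score(example)
print(score)
```

Whatever weights you choose, keep them fixed between measurement runs so that a change in the score reflects a change in visibility rather than a change in the formula.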

Quick diagnosis: find why you’re being replaced by generic advice

Use this short checklist to pinpoint the primary failure mode:

Your brand entity is unclear or inconsistent (different names, missing schema, no Wikidata/Wikipedia).

Your content is hard to quote (dense blocks, no answer‑first sections, few concise statements).

Structured data is sparse or invalid (no Organization/FAQPage/HowTo; broken JSON‑LD; missing sameAs/@id).

Freshness signals are weak (rare updates, undated stats, slow performance undermining crawl/extraction).

Off‑site corroboration is thin (few third‑party mentions or reviews; claims aren’t echoed elsewhere).

How to increase brand mentions in ChatGPT: the practitioner playbook

1. Clarify your entity and knowledge‑graph footprint

Define a canonical Organization entity with JSON‑LD on your homepage. Use a stable @id (for example, your root URL with a fragment), authoritative sameAs links (LinkedIn, X/Twitter, Crunchbase, Wikidata/Wikipedia), and a clean description. Schema.org’s Organization and sameAs are the core references. Create and maintain a Wikidata item, add reliable statements and sitelinks, and link it in sameAs. If Wikipedia notability is borderline, build independent coverage first; Wikipedia’s Notability guideline explains the threshold.
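As a rough illustration of the pattern described above, the following sketch builds an Organization node in Python and serializes it to a JSON‑LD script tag. The domain, company name, description, and every profile URL (including the Wikidata ID) are placeholders, not real values; `to_script_tag` is a hypothetical helper name.

```python
import json

SITE = "https://www.example.com"  # placeholder domain (assumption)

organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": f"{SITE}/#organization",  # stable identifier: root URL plus fragment
    "url": f"{SITE}/",
    "name": "Example Co",
    "description": "Example Co makes scheduling software for clinics.",
    "sameAs": [  # placeholder profile URLs; replace with your real ones
        "https://www.linkedin.com/company/example-co",
        "https://www.crunchbase.com/organization/example-co",
        "https://www.wikidata.org/wiki/Q00000000",
    ],
}


def to_script_tag(node: dict) -> str:
    """Serialize a JSON-LD node into the script tag that goes in the page head."""
    body = json.dumps(node, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(to_script_tag(organization))
```

Reusing the same `@id` value everywhere the organization is referenced is what lets other nodes on the site point back to this one entity.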

Expected effort: Moderate; 1–2 weeks for Wikidata, longer for Wikipedia depending on coverage.

2. Engineer answer‑first content that’s easy to cite

Refactor key pages so engines can lift clean passages. Use short paragraphs, explicit question‑answer blocks, numbered steps, and tables where appropriate. Add quote‑worthy lines (one‑sentence “TL;DRs”) that summarize a claim with a source. Place executive summaries at the top of long pages, then a deeper dive below. Keep HTML simple; avoid burying essential copy in complex JS.

Expected effort: Moderate; 1–3 weeks across priority pages.

3. Add structured data that matches visible copy

Implement FAQPage and HowTo where they reflect real on‑page content. Validate with Google’s Rich Results Test and a schema validator, and keep the markup in parity with the visible copy to avoid suppression. Google reiterates that markup helps understanding but isn’t a guarantee of display, as summarized in its “AI features and your website” documentation. Use Organization/Brand on the homepage and Product/Review on product pages, linking entities via @id and brand.
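One way to enforce parity between markup and visible copy is to generate the FAQPage markup only from question and answer pairs that actually appear on the page. The sketch below assumes you already have the page's visible text as a string; `build_faq_jsonld` and the sample content are hypothetical, and the substring match is a deliberately simple stand-in for a real parity check.

```python
def build_faq_jsonld(pairs: list[tuple[str, str]], visible_text: str):
    """Build FAQPage JSON-LD from pairs whose question and answer appear on the page.

    Returns the JSON-LD dict and the list of questions that were skipped.
    """
    def norm(text: str) -> str:
        # Collapse whitespace and ignore case so layout differences don't matter.
        return " ".join(text.split()).lower()

    page = norm(visible_text)
    entities, skipped = [], []
    for question, answer in pairs:
        if norm(question) in page and norm(answer) in page:
            entities.append({
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            })
        else:
            skipped.append(question)
    faq = {"@context": "https://schema.org", "@type": "FAQPage", "mainEntity": entities}
    return faq, skipped


# Made-up page text and Q&A pairs for illustration.
PAGE_TEXT = """
What does Example Co do?
Example Co makes scheduling software for clinics.
"""
PAIRS = [
    ("What does Example Co do?", "Example Co makes scheduling software for clinics."),
    ("Is there a free plan?", "Yes, for up to three users."),
]
faq, skipped = build_faq_jsonld(PAIRS, PAGE_TEXT)
print(len(faq["mainEntity"]), skipped)
```

Anything that lands in `skipped` is a prompt to either add the answer to the page or drop it from the markup.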

Expected effort: Moderate; 4–8 developer hours per template.

4. Strengthen technical inclusion signals

Maintain Core Web Vitals at healthy thresholds (LCP ≤ 2.5s, INP ≤ 200ms, CLS ≤ 0.1), in line with Google’s Search documentation updates, where INP replaces FID. Keep robots.txt clear for the AI user agents you want to allow (Google‑Extended, GPTBot, ClaudeBot); note that Google‑Extended is a robots.txt control token rather than a separate crawler. Consider an optional llms.txt to curate helpful resources; remember it’s advisory and doesn’t override robots.txt, as explained in Semrush’s llms.txt overview.
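A quick way to sanity-check robots.txt rules for the user agents named above is Python's standard-library `urllib.robotparser`. The robots.txt content and URL below are illustrative only, and `crawl_permissions` is a hypothetical helper. The result reflects how Python's parser reads the rules; individual crawlers may treat edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; in practice, load your own file.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""

AI_AGENTS = ["GPTBot", "ClaudeBot", "Google-Extended"]


def crawl_permissions(robots_txt: str, url: str) -> dict[str, bool]:
    """Report, per user agent, whether the given rules allow fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_AGENTS}


report = crawl_permissions(ROBOTS_TXT, "https://www.example.com/pricing")
for agent, allowed in report.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample, ClaudeBot is blocked site-wide, which is the kind of accidental exclusion the check is meant to surface.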

Expected effort: Moderate; ongoing.

5. Build off‑site corroboration and consistent anchors

Seed neutral, third‑party coverage that backs up your claims: industry directories, analyst notes, community platforms, and reputable blogs. Aim for consistent anchors (name, description, category) so engines can triangulate your entity. Consolidate key claims on canonical URLs and ensure external references point there.

Expected effort: Moderate; 4–12 weeks.

6. Add visible recency and update signals

Publish versioned updates with visible “last updated” notes, dated statistics, and changelogs on product pages and documentation. For long research pages, include a brief “What changed” section at the top. Engines that weight recency tend to favor content that shows clear signs of maintenance.
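To keep update signals from going stale, it can help to flag pages whose "last updated" date has aged past a threshold. This is a small sketch under assumed inputs: the page paths and dates are made up, `stale_pages` is a hypothetical helper, and the 180-day default is an arbitrary choice rather than a recommendation from any engine.

```python
from datetime import date


def stale_pages(last_updated: dict[str, date], today: date, max_age_days: int = 180) -> list[str]:
    """Return page paths whose last update is older than max_age_days."""
    return sorted(
        path
        for path, updated in last_updated.items()
        if (today - updated).days > max_age_days
    )


# Made-up inventory of pages and their visible "last updated" dates.
pages = {
    "/pricing": date(2025, 1, 10),
    "/docs/getting-started": date(2024, 3, 1),
}
flagged = stale_pages(pages, today=date(2025, 3, 1))
print(flagged)
```

Passing `today` explicitly keeps the check reproducible when you re-run it against an archived inventory.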

Expected effort: Easy; ongoing.

7. Measure with prompt panels and a Brand Visibility Score

Define AI KPIs: Share‑of‑Answer (SoA), citation rate, citation quality, freshness lag, and sentiment markers. Run a standard set of queries across ChatGPT (Search or Deep Research modes), Google AI Overviews, and Perplexity; capture screenshots, sources, and wording. Track changes monthly and quarterly.
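The prompt-panel results can be summarized with a short script. In this sketch, Share‑of‑Answer is taken to mean the fraction of panel answers that name the brand, and citation rate the fraction whose sources include the brand's domain; both definitions are assumptions, as are the record layout, the brand and domain names, and the `panel_metrics` helper.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse


def panel_metrics(records: list[dict], brand: str, domain: str) -> dict[str, dict[str, float]]:
    """Per engine: share of answers naming the brand, and share citing its domain."""
    name = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    counts = defaultdict(lambda: {"answers": 0, "mentions": 0, "cited": 0})
    for rec in records:
        c = counts[rec["engine"]]
        c["answers"] += 1
        if name.search(rec["answer"]):
            c["mentions"] += 1
        hosts = [urlparse(url).hostname or "" for url in rec["sources"]]
        if any(h == domain or h.endswith("." + domain) for h in hosts):
            c["cited"] += 1
    return {
        engine: {
            "share_of_answer": c["mentions"] / c["answers"],
            "citation_rate": c["cited"] / c["answers"],
        }
        for engine, c in counts.items()
    }


# Made-up panel records; real ones would come from your captured outputs.
records = [
    {"engine": "chatgpt", "answer": "For small teams, Acme and Globex are common picks.",
     "sources": ["https://www.acme.example/compare"]},
    {"engine": "chatgpt", "answer": "Look for tools with SSO and audit logs.",
     "sources": []},
    {"engine": "perplexity", "answer": "Globex leads on price; Acme on support.",
     "sources": ["https://reviews.example.org/top-tools"]},
    {"engine": "perplexity", "answer": "Acme offers a free tier.",
     "sources": ["https://acme.example/pricing"]},
]
metrics = panel_metrics(records, brand="Acme", domain="acme.example")
print(metrics)
```

Simple name matching will miss misspellings and product-only mentions, so treat the numbers as a floor and spot-check them against the captured screenshots.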

Disclosure: Geneo is our product. It can be used to unify this measurement across engines, offering a Brand Visibility Score and dashboards for SoA, brand mentions, link visibility, and reference counts. For KPI context, see Brand Visibility Score for agencies and the AI Search KPI framework. You can also assemble a manual prompt panel with spreadsheets and screenshots if you prefer.

Expected effort: Moderate; initial setup 1–2 weeks, then monthly iterations.

8. Iterate quarterly with a focused improvement loop

Every quarter, review SoA and citation quality. Identify top gaps: pages that are never cited, entities that aren’t recognized, or engines where you lag. Ship targeted fixes—new FAQ blocks, clearer schema, better off‑site corroboration—and re‑run the prompt panel. Treat improvements like releases with owners and dates.
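The quarterly review can start from a simple diff of two panel runs. The sketch below assumes each run is a mapping from query to whether the brand was mentioned; the queries are invented and `panel_gaps` is a hypothetical helper.

```python
def panel_gaps(previous: dict[str, bool], current: dict[str, bool]) -> dict[str, list[str]]:
    """Compare two panel runs and group queries by what changed for the brand."""
    never = sorted(q for q in current if not current[q] and not previous.get(q, False))
    lost = sorted(q for q in current if previous.get(q, False) and not current[q])
    gained = sorted(q for q in current if current[q] and not previous.get(q, False))
    return {"never_mentioned": never, "lost": lost, "gained": gained}


# Made-up results from two quarterly runs of the same prompt panel.
last_quarter = {
    "best clinic scheduling software": True,
    "clinic scheduling pricing": False,
    "hipaa scheduling tools": False,
}
this_quarter = {
    "best clinic scheduling software": False,
    "clinic scheduling pricing": True,
    "hipaa scheduling tools": False,
}
gaps = panel_gaps(last_quarter, this_quarter)
print(gaps)
```

Queries under `never_mentioned` and `lost` are natural candidates for the targeted fixes, each with an owner and a date.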

Expected effort: Moderate; recurring.

Engine playbooks: adapt your tactics by surface

ChatGPT: mention mechanics and prompts

Mentions rise when your content is cleanly quotable and when browsing/Deep Research is used. If your topic is evaluative (e.g., best tools), aim for comparison pages with neutral criteria, clear pros/cons, and links to third parties.

Capture outputs with sources when possible. OpenAI’s docs explain how Deep Research presents citations; avoid asking for citations in pure model mode since accuracy can drift. A 2025 PMC review of LLM citation accuracy in biomedical contexts highlights the risk of fabricated references when answers lack grounded sources.

Google AI Overviews: structured signals and authority

Ensure strong traditional SEO: topical authority, high‑quality references, and clean structured data. Google states that AI features rely on its standard ranking and quality systems; inclusion remains volatile.

Expect concentration effects on big brands; compete with depth, originality, and maintenance. Keep Core Web Vitals within target and schema validated.

Perplexity: recency and clarity

Perplexity consistently shows sources. Publish clear, up‑to‑date summaries and research pages that map claims to references. Keep titles literal and avoid ambiguous branding.

Use canonical URLs and stable anchors; Perplexity’s layout favors concise claims with straightforward citations.

Technical annex: schema and inclusion cues

Organization JSON‑LD pattern: stable @id, url, name, description, logo as ImageObject, sameAs (LinkedIn, X/Twitter, Wikidata, Wikipedia, Crunchbase), contactPoint, and location details when relevant. Validate using Google’s Rich Results Test and a schema validator.

Brand vs Product linking: if your brand differs from the company name, add a Brand node and link products via brand with the Brand’s @id.

Robots vs llms.txt: robots.txt is authoritative for crawler permissions; llms.txt is advisory guidance to curate resources for LLMs.

Core Web Vitals targets: LCP ≤ 2.5s, INP ≤ 200ms, CLS ≤ 0.1.
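For the Brand and Product linking case in this annex, a sketch of the JSON‑LD graph may help. It places an Organization, a Brand, and a Product in one `@graph` and links the product to the brand by `@id`, then checks that every such reference resolves. All names, URLs, and the `dangling_refs` helper are placeholders chosen for illustration.

```python
import json

SITE = "https://www.example.com"  # placeholder domain (assumption)
ORG_ID = f"{SITE}/#organization"
BRAND_ID = f"{SITE}/#brand"

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {"@type": "Organization", "@id": ORG_ID, "name": "Example Holdings", "url": f"{SITE}/"},
        {"@type": "Brand", "@id": BRAND_ID, "name": "ExampleApp"},
        {
            "@type": "Product",
            "@id": f"{SITE}/products/widget#product",
            "name": "ExampleApp Widget",
            "brand": {"@id": BRAND_ID},  # link by reference instead of repeating the Brand
        },
    ],
}


def dangling_refs(doc: dict) -> list[str]:
    """Return @id references that point at no node defined in the @graph."""
    defined = {node["@id"] for node in doc["@graph"] if "@id" in node}
    missing = []
    for node in doc["@graph"]:
        for value in node.values():
            is_ref = isinstance(value, dict) and set(value) == {"@id"}
            if is_ref and value["@id"] not in defined:
                missing.append(value["@id"])
    return missing


print(json.dumps(graph, indent=2))
print(dangling_refs(graph))
```

The check only covers references inside a single graph; if the Brand node lives on the homepage and the Product on another page, the `@id` strings still need to match exactly across pages.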

Troubleshooting: quick fixes for common failure modes

Wikipedia rejected: strengthen independent coverage with reliable sources and revisit later; maintain Wikidata in the meantime.

Misattribution to a competitor: consolidate claims on canonical pages, align schema, and ensure off‑site references use your exact name.

No citations despite strong content: check robots.txt, sitemaps, CWVs; simplify HTML; add clearer Q&A blocks and summaries.

Schema warnings: fix required properties and ensure on‑page parity; invalid or mismatched markup is often suppressed.

What success looks like and how to keep momentum

When you put these steps together, you’ll see cleaner mentions, more consistent citations, and fewer generic answers that skip your brand. The Brand Visibility Score becomes your progress beacon, rolling up presence and quality across engines. Keep the improvement loop tight: re‑measure monthly, review quarterly, and double down on pages and queries where you’re gaining traction.

If you want a unified way to run prompt panels and track a Brand Visibility Score across ChatGPT, Google AI Overviews, and Perplexity, Geneo supports multi‑platform monitoring, competitive benchmarking, and white‑label reporting for agencies. Or build your own spreadsheet workflow—the key is to measure, iterate, and keep publishing answer‑first content that engines can confidently cite.