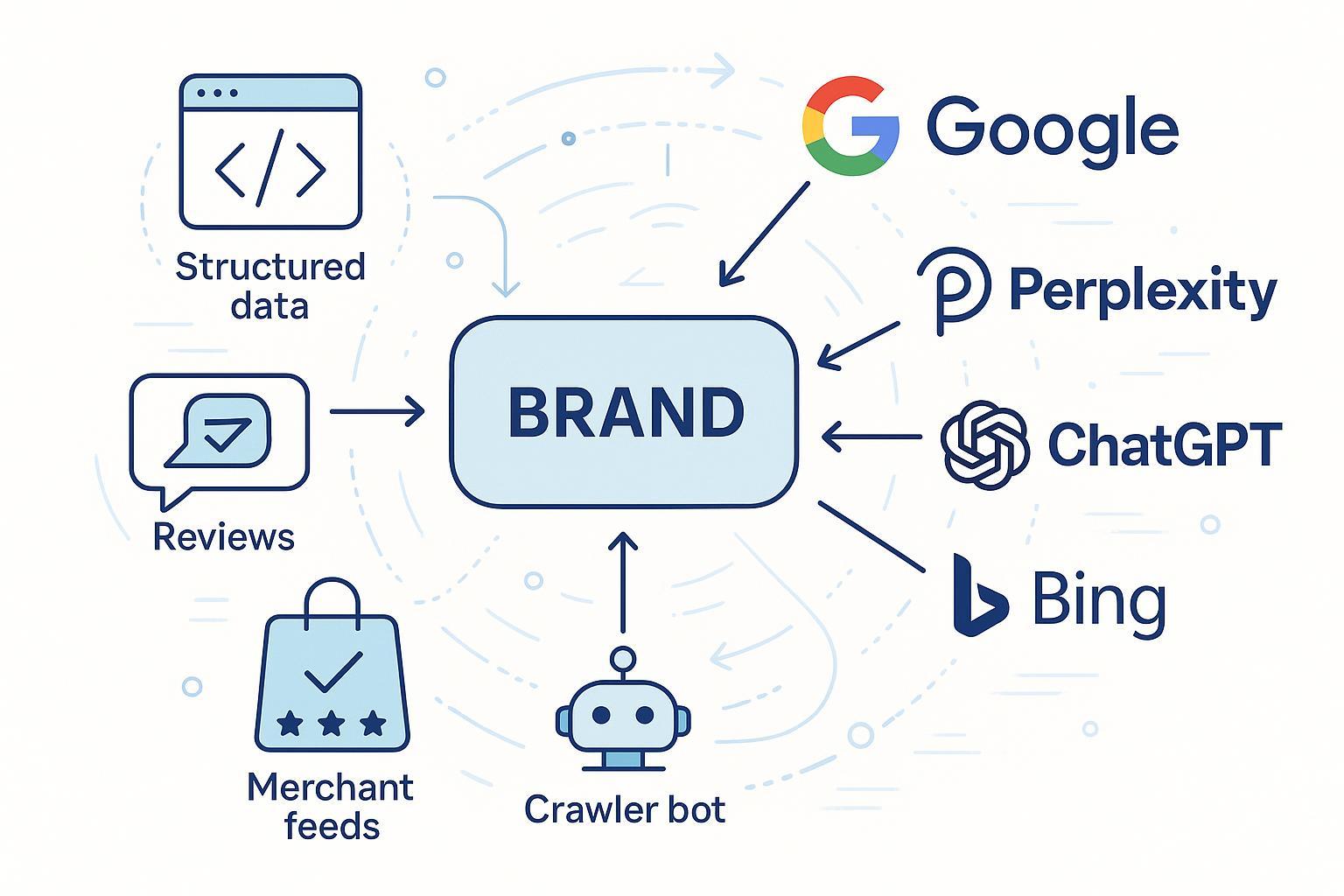

How to Get Your Brand Into Generative AI Product Recommendations

Step-by-step guide to earn brand inclusion in Google AI Overviews, Perplexity, ChatGPT, and Bing Copilot. Covers platform workflows, verification, and troubleshooting.

Updated for 2025. This practical playbook shows you how to earn inclusion and citations in AI-driven recommendations on Google AI Overviews, Perplexity, ChatGPT Search/Browsing, and Bing Copilot. Expect intermediate difficulty and a multi‑quarter effort spanning technical hygiene, structured data, feeds, authoritative content, and reviews. We’ll give you step-by-step actions, verification methods, KPIs, and a troubleshooting matrix.

Why this works: AI answer engines tend to cite crawlable, high‑quality, clearly‑structured information from trusted publishers and merchant data feeds. Google’s guidance for AI features emphasizes helpfulness, structured data alignment, and crawlability (Google Search Central: AI features in Search, 2024–2025). For review and product content, align with Google’s helpful content principles and reviews guidance to maximize eligibility and trust signals (Google: Creating helpful, people‑first content).

Before you start: prerequisites checklist

- Technical health

  - Your key pages are indexable (no accidental noindex/nosnippet) and fast

  - Robots.txt allows Googlebot; decide policy for GPTBot and PerplexityBot (see Step 1)

  - XML sitemaps are current; canonical tags are consistent

- Data fidelity

  - Product, Review, and Organization schema is present on relevant pages and reflects on‑page content

  - For variants, implement ProductGroup to consolidate signals (Google Product variants update, 2024)

- Merchant/retail readiness (if ecommerce)

  - Google Merchant Center account verified; feeds valid and up‑to‑date (GMC get started)

  - If applicable, Microsoft Merchant Center is set up and healthy (Microsoft Product Ads guide)

- Content & authority

  - Publish hands‑on reviews, comparisons, specs, and FAQs with clear authorship and sources

  - Maintain legitimate reviews on trusted platforms; avoid incentivized or fake reviews

Time expectations: Initial setup 2–6 weeks; inclusion can take additional weeks as systems recrawl and refresh.

Tools and stack (neutral options)

Use a combination of official validation utilities and third‑party visibility monitors:

Monitoring AI visibility (neutral list)

- Geneo — monitor brand visibility and citations across AI answer engines. Disclosure: Geneo is our product.

- seoClarity: AI Overviews tracking — detect AI Overviews triggers and cited domains at scale.

- SISTRIX: AI Overviews resource — monitor AI Overviews appearances and visibility trends.

- STAT Search Analytics — commonly used for SERP feature tracking; verify AI features tracking directly with the vendor.

Official validation utilities

- Rich Results Test — validate Product, Review, Organization schema

- Google Search Console URL Inspection — indexation and structured data surfacing

- Google Merchant Center Diagnostics — feed issues and disapprovals

- Microsoft Merchant Center & Shopping setup — catalog ingestion and troubleshooting

Step 1) Make your site and data crawlable

Actions (15–60 minutes):

- Ensure robots.txt allows essential bots on public content that you want cited:

  - Always allow Googlebot on product, review, and documentation pages

  - Decide whether to allow GPTBot (OpenAI) and PerplexityBot on those same public pages

- Keep XML sitemaps updated; submit in Search Console/Bing Webmaster Tools

- Fix canonical inconsistencies and remove accidental noindex/nosnippet directives

Example robots.txt rules:

```
User-agent: Googlebot
Allow: /

User-agent: GPTBot
Allow: /
Disallow: /private/

User-agent: PerplexityBot
Allow: /
Disallow: /private/
```
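
One way to confirm that rules like these behave as intended is to evaluate them offline before deploying. The sketch below uses Python's standard-library `urllib.robotparser`; the example.com URLs and the `crawl_policy` helper are illustrative assumptions, not part of any vendor tooling. It lists `Disallow` before `Allow` because that parser applies the first matching rule, while Google documents most-specific-match precedence, and this ordering gives the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Same policy as the example rules. Disallow comes first so that a
# first-match parser and a longest-match parser agree on the outcome.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: GPTBot
Disallow: /private/
Allow: /

User-agent: PerplexityBot
Disallow: /private/
Allow: /
"""


def crawl_policy(robots_txt, user_agents, urls):
    """Return {(user_agent, url): allowed} for every combination."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        (agent, url): parser.can_fetch(agent, url)
        for agent in user_agents
        for url in urls
    }


if __name__ == "__main__":
    agents = ["Googlebot", "GPTBot", "PerplexityBot"]
    urls = [
        "https://www.example.com/products/smart-kettle",  # hypothetical URL
        "https://www.example.com/private/roadmap",        # hypothetical URL
    ]
    for (agent, url), allowed in crawl_policy(ROBOTS_TXT, agents, urls).items():
        print(f"{agent:14} {'allow' if allowed else 'block':5} {url}")
```

An offline check like this only tells you what the file permits; server logs remain the evidence that a bot actually crawled.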

Verify

- Check server logs for “Googlebot,” “GPTBot,” and “PerplexityBot” crawling target URLs

- Use Search Console URL Inspection to confirm indexability and last crawl

- For GPTBot controls and syntax, see OpenAI GPTBot documentation. For overall behavior in ChatGPT Search, review OpenAI’s ChatGPT Search announcement (2024–2025).

Common mistakes

- Blocking critical resources (JS/CSS) or review/product pages

- UA‑based allowlisting without validating actual crawls in logs
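
To validate actual crawls rather than trusting an allowlist, you can tally bot requests from your access logs. The following is a minimal sketch that assumes logs in the common "combined" format; the `bot_hits` helper and the path prefixes are hypothetical. User-agent strings can be spoofed, so treat the counts as a first pass and confirm important hits with each vendor's published verification method.

```python
import re
from collections import Counter

# Substrings that identify each crawler in the User-Agent header.
BOT_TOKENS = ("Googlebot", "GPTBot", "PerplexityBot")

# Combined Log Format:
# ip - - [time] "METHOD path proto" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def bot_hits(log_lines, target_prefixes):
    """Count successful (2xx) bot requests per (bot, path) on target paths."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match or not match["status"].startswith("2"):
            continue  # skip unparseable lines, redirects, and errors
        path = match["path"]
        if not any(path.startswith(prefix) for prefix in target_prefixes):
            continue
        agent = match["agent"].lower()
        for token in BOT_TOKENS:
            if token.lower() in agent:
                hits[(token, path)] += 1
    return hits


if __name__ == "__main__":
    # Assumes a local file named access.log; adjust to your log location.
    with open("access.log", encoding="utf-8", errors="replace") as handle:
        for (bot, path), count in bot_hits(handle, ["/products/", "/reviews/"]).most_common():
            print(f"{count:5} {bot:14} {path}")
```

Target URLs that never appear in the output are the ones to investigate first.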

Step 2) Ship crystal‑clear entities with structured data

Actions (1–3 hours per template):

- Add/validate Organization, Product, and Review schema. Ensure values match visible page content and authorship

- For multi‑variant products, implement ProductGroup to consolidate product signals and variesBy attributes

- Include GTIN/brand where applicable; keep pricing/availability current for offers

Minimal JSON‑LD example (Product + Review):

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "Acme Smart Kettle",
  "brand": "Acme",
  "sku": "KETTLE-9000",
  "gtin13": "0123456789012",
  "offers": {
    "@type": "Offer",
    "price": "79.99",
    "priceCurrency": "USD",
    "availability": "https://schema.org/InStock"
  },
  "review": {
    "@type": "Review",
    "author": { "@type": "Person", "name": "Jane Rivera" },
    "reviewRating": { "@type": "Rating", "ratingValue": "4", "bestRating": "5" },
    "reviewBody": "Hands-on test: boiled 1L in 2:45, consistent temperature hold."
  }
}
```

If you have variants, group them using ProductGroup per Google’s 2024 update (Google: Product variants and ProductGroup) and implement per the Product structured data spec (Google Product structured data).
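
As a rough illustration of variant grouping, the sketch below builds a `ProductGroup` object in Python and serializes it as JSON-LD. The schema.org terms (`ProductGroup`, `productGroupID`, `variesBy`, `hasVariant`) are real vocabulary, but the `product_group_jsonld` helper, the SKUs, and the prices are hypothetical, and the output is not guaranteed to include every property Google requires; check it against the Product variants documentation and the Rich Results Test.

```python
import json


def product_group_jsonld(name, group_id, brand, varies_by, variants):
    """Build a ProductGroup dict with each variant nested under hasVariant."""
    return {
        "@context": "https://schema.org",
        "@type": "ProductGroup",
        "name": name,
        "productGroupID": group_id,
        "brand": {"@type": "Brand", "name": brand},
        # variesBy lists the properties that differ between variants.
        "variesBy": [f"https://schema.org/{prop}" for prop in varies_by],
        "hasVariant": [
            {
                "@type": "Product",
                "name": f"{name} ({variant['color']})",
                "sku": variant["sku"],
                "color": variant["color"],
                "offers": {
                    "@type": "Offer",
                    "price": variant["price"],
                    "priceCurrency": "USD",
                    "availability": "https://schema.org/InStock",
                },
            }
            for variant in variants
        ],
    }


if __name__ == "__main__":
    group = product_group_jsonld(
        name="Acme Smart Kettle",
        group_id="KETTLE-9000",
        brand="Acme",
        varies_by=["color"],
        variants=[  # hypothetical variant data
            {"sku": "KETTLE-9000-BLK", "color": "Black", "price": "79.99"},
            {"sku": "KETTLE-9000-RED", "color": "Red", "price": "84.99"},
        ],
    )
    print(json.dumps(group, indent=2))
```

Generating the markup from the same data that renders the page is one way to keep structured data and visible content from drifting apart.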

Verify

- Validate in the Rich Results Test and iterate until it reports zero critical errors

- Check Search Console Enhancements for Products/Reviews surfacing

Common mistakes

- Markup not matching visible content (violates guidelines)

- Forgetting variant grouping, which splits signals
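
A lightweight pre-flight check can catch some of these mistakes before you run the Rich Results Test. The sketch below assumes the Product markup is already loaded as a Python dict and that you can supply the price shown on the page; `product_markup_problems` is a hypothetical helper, and the field list is a small illustrative subset rather than Google's full requirements. The GTIN check uses the standard GS1 mod-10 check digit.

```python
def gtin13_is_valid(gtin):
    """Check the GS1 mod-10 check digit of a 13-digit GTIN string."""
    if len(gtin) != 13 or not (gtin.isascii() and gtin.isdigit()):
        return False
    digits = [int(ch) for ch in gtin]
    # Weights alternate 1, 3, 1, 3, ... across the first 12 digits.
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    return (10 - total % 10) % 10 == digits[12]


def product_markup_problems(product, visible_price):
    """Return a list of human-readable problems found in Product markup."""
    problems = []
    for field in ("name", "brand", "offers"):
        if not product.get(field):
            problems.append(f"missing {field}")
    gtin = product.get("gtin13")
    if gtin is not None and not gtin13_is_valid(gtin):
        problems.append("gtin13 fails check-digit validation")
    offer = product.get("offers") or {}
    if offer and offer.get("price") != visible_price:
        problems.append("offer price differs from the price shown on the page")
    return problems


if __name__ == "__main__":
    kettle = {
        "name": "Acme Smart Kettle",
        "brand": "Acme",
        "gtin13": "0123456789012",
        "offers": {"price": "79.99", "priceCurrency": "USD"},
    }
    # visible_price would come from your page template or a scrape.
    print(product_markup_problems(kettle, visible_price="79.99"))
```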

Step 3) Publish experience‑rich reviews and comparisons

Actions (2–8 hours/page, ongoing):

- Create hands‑on reviews and comparisons with test data, photos, pros/cons, and clear bylines

- Add scannable summaries and FAQs that LLMs can cite cleanly

- Cite your sources and keep timestamps current; update high‑intent pages quarterly

Why this matters: Google’s helpful content and reviews guidance rewards original, people‑first content with transparent expertise and evidence (Google helpful content guidance). Research in 2025 also shows AI systems tend to link to credible, non‑spammy sources, elevating experienced, authoritative pages (arXiv 2025: News Source Citing Patterns).

Verify

- Your reviews earn organic citations or mentions in AI answers for target queries over time

- Pages carry author bios, sources, and updated dates

Common mistakes

- Thin reviews with stock images and no firsthand detail

- Missing author identity and expertise signals

Step 4) Optimize for Google AI Overviews

Actions (1–3 days setup, ongoing):

- Ensure pages meet Search Essentials and AI features guidance; avoid deceptive or unsupported claims

- Keep structured data accurate and aligned; fix errors flagged in Enhancements

- For ecommerce: Set up and maintain Google Merchant Center feeds (price, availability, returns) and resolve disapprovals quickly (GMC get started; Product data spec)

Why this matters: AI Overviews pull from diverse, relevant sources when they can add value beyond classic results (Google AI features overview). Product data freshness and fidelity help eligibility in shopping‑related experiences.

Verify

- Use real queries to check if AI Overviews appear and whether your pages or trusted third‑party reviews are cited

- Track structured data health in Search Console; validate with the Rich Results Test

Common mistakes

- Stale price/availability in feeds; missing GTINs

- Product pages thin on specs, FAQs, or comparisons
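
Stale feed data and missing GTINs are easy to flag automatically. Here is a minimal sketch, assuming you can export feed items as dicts; the `id`, `gtin`, and `last_updated` keys and the 48-hour threshold are assumptions about your own export, not Merchant Center field definitions.

```python
from datetime import datetime, timedelta, timezone


def feed_issues(items, now, max_age=timedelta(hours=48)):
    """Return {item_id: [issues]} for items that are stale or lack a GTIN."""
    issues = {}
    for item in items:
        found = []
        if not item.get("gtin"):
            found.append("missing gtin")
        if now - item["last_updated"] > max_age:
            found.append("stale price/availability")
        if found:
            issues[item["id"]] = found
    return issues


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    items = [  # hypothetical feed export
        {"id": "KETTLE-9000", "gtin": "0123456789012",
         "last_updated": now - timedelta(hours=6)},
        {"id": "KETTLE-9000-RED", "gtin": "",
         "last_updated": now - timedelta(days=5)},
    ]
    for item_id, found in feed_issues(items, now).items():
        print(item_id, "->", ", ".join(found))
```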

Step 5) Optimize for Perplexity

Actions (1–2 days setup, ongoing):

- Allow PerplexityBot on public docs/reviews you want cited (policy permitting). See Perplexity’s bot guidance for identifiers and controls (Perplexity Bots guide)

- Pursue citations from reputable, topic‑authentic publishers (gov/edu/major industry media) with digital PR and expert content

- Consider applying to the Perplexity Publishers Program if you’re a suitable publisher

Why this matters: Perplexity typically shows explicit source links and rewards clear, well‑sourced, recent information.

Verify

- Run commercial‑intent queries in Perplexity and log whether your brand appears in the answer or sources

- Review which domains are cited; prioritize outreach to those that influence your niche
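
If you log the source URLs from each test query, ranking the cited domains takes only a few lines. This sketch assumes a hand-maintained list of audit rows with a `sources` key; the row shape, the `outreach_targets` name, and the example domains are all hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def outreach_targets(audit_rows, own_domain):
    """Rank third-party domains by how often they appear as cited sources."""
    counts = Counter()
    for row in audit_rows:
        for url in row["sources"]:
            host = (urlsplit(url).hostname or "").removeprefix("www.")
            if host:
                counts[host] += 1
    # Drop your own domain; what remains are candidates for outreach.
    return [(domain, n) for domain, n in counts.most_common() if domain != own_domain]


if __name__ == "__main__":
    rows = [  # hypothetical audit log
        {"query": "best smart kettles",
         "sources": ["https://www.reviewsite.example/best-kettles",
                     "https://acme.example/kettle"]},
        {"query": "acme smart kettle reviews",
         "sources": ["https://reviewsite.example/kettle-test",
                     "https://news.example/roundup"]},
    ]
    for domain, n in outreach_targets(rows, own_domain="acme.example"):
        print(f"{n:3} {domain}")
```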

Common mistakes

- Blocking PerplexityBot unintentionally

- Over‑indexing on first‑party pages when third‑party reviews dominate citations

Step 6) Optimize for ChatGPT Search/Browsing

Actions (1–2 days setup, ongoing):

- Ensure public product/docs/review pages are scannable with concise summaries, specs, and FAQs

- Keep official docs, changelogs, and comparison pages current; include clear anchors and headings

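- Decide which OpenAI crawlers to allow on target sections and control them via robots.txt: per OpenAI's crawler documentation, OAI-SearchBot is the agent used for ChatGPT Search results, while GPTBot collects content that may be used for model training (OpenAI GPTBot docs)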

Why this matters: OpenAI indicates ChatGPT Search surfaces answers with links to original sources and a Sources view, favoring clear, citable pages (Introducing ChatGPT Search, 2024–2025).

Verify

- Test your priority queries in ChatGPT Search/Browsing; check the Sources pane for your brand or key third‑party citations

- Monitor server logs for OAI-SearchBot and GPTBot on target URLs

Common mistakes

- Docs without summaries or headings, making them hard to cite

- Out‑of‑date changelogs or specs
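
To spot pages that lack headings or anchors, you can extract a heading outline from the rendered HTML. The sketch below uses Python's standard-library `html.parser`; the `citability_warnings` helper and its two rules (at least one heading, an `id` on every subheading) are illustrative assumptions about what "scannable" means, not criteria published by OpenAI.

```python
from html.parser import HTMLParser


class OutlineParser(HTMLParser):
    """Collect (level, anchor_id, text) for every h1-h3 element."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._current = None  # [level, anchor_id, text] while inside a heading

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._current = [int(tag[1]), dict(attrs).get("id"), ""]

    def handle_data(self, data):
        if self._current is not None:
            self._current[2] += data

    def handle_endtag(self, tag):
        if self._current is not None and tag == f"h{self._current[0]}":
            level, anchor, text = self._current
            self.outline.append((level, anchor, text.strip()))
            self._current = None


def citability_warnings(html):
    """Return (outline, warnings) for one HTML document."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    warnings = []
    if not parser.outline:
        warnings.append("no headings found")
    for level, anchor, text in parser.outline:
        if level > 1 and anchor is None:
            warnings.append(f"heading without id anchor: {text}")
    return parser.outline, warnings


if __name__ == "__main__":
    page = '<h1>Acme Smart Kettle</h1><h2 id="specs">Specs</h2><h2>FAQ</h2>'
    outline, warnings = citability_warnings(page)
    print(outline)
    print(warnings)
```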

Step 7) Optimize for Bing Copilot (shopping)

Actions (1–2 days setup, ongoing):

- Set up Microsoft Merchant Center, verify your site, and submit a complete product catalog with required attributes (Microsoft Product Ads guide)

- Keep feeds fresh (at least every 30 days; more frequent for dynamic catalogs); resolve Diagnostics issues promptly

- Build legitimate review coverage on trusted platforms; ensure PDPs are complete and authoritative

Why this matters: Bing’s shopping experiences and Copilot leverage catalog data and authoritative sources to assemble recommendations.

Verify

- Check Merchant Center Diagnostics and campaign performance where applicable (Shopping content get started)

- Run target queries in Copilot and note inclusion/citations over time

Common mistakes

- Incomplete attributes (e.g., missing brand or category)

- Stale pricing/availability

Step 8) Earn and maintain third‑party authority

Actions (ongoing):

- Digital PR for editorial reviews and expert roundups on trusted, topical sites

- For developer/SaaS products: keep GitHub READMEs, docs, and integration pages current

- Encourage customers to leave honest, policy‑compliant reviews on high‑trust platforms

Why this matters: Studies and field evidence show answer engines often lean on reputable, informational domains for citations in commercial contexts (Search Engine Land 2024 analysis).

Verify

- Track which external domains most often appear in AI citations for your space; prioritize relationships with those publications

- Maintain a quarterly refresh cadence for your top 20% of pages by commercial intent

Common mistakes

- One‑off PR spikes without maintaining freshness or relationships

- Review gating or incentives that violate platform policies

Step 9) Verify inclusion and troubleshoot systematically

Weekly inclusion audit (30–60 minutes):

- Query test set: “best [category],” “[brand] vs [competitor],” “top [use case] tools,” “[product] reviews” across Google, Perplexity, ChatGPT Search, and Copilot

- Record whether your brand appears in the AI answer and which domains are cited

- Check Search Console Enhancements and Merchant Center Diagnostics (Google and Microsoft) for new issues

- Sample server logs for GPTBot/PerplexityBot/Googlebot on target pages
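
The query test set above can be generated from templates so that every platform is checked against the same list each week. This is a minimal sketch: the template wording mirrors the audit list, while the `build_audit_rows` helper, the row fields, and the sample values are hypothetical. Results still have to be filled in by hand or by a monitoring tool.

```python
from itertools import product

TEMPLATES = [
    "best {category}",
    "{brand} vs {competitor}",
    "top {use_case} tools",
    "{product} reviews",
]
PLATFORMS = ["Google AI Overviews", "Perplexity", "ChatGPT Search", "Bing Copilot"]


def build_audit_rows(values, templates=TEMPLATES, platforms=PLATFORMS):
    """Expand templates into one blank audit row per (platform, query)."""
    queries = [template.format(**values) for template in templates]
    return [
        {
            "platform": platform,
            "query": query,
            "brand_in_answer": None,  # fill in True/False during the audit
            "cited_domains": [],      # fill in the domains shown as sources
        }
        for platform, query in product(platforms, queries)
    ]


if __name__ == "__main__":
    rows = build_audit_rows({  # hypothetical brand and category values
        "category": "smart kettles",
        "brand": "Acme",
        "competitor": "Globex",
        "use_case": "tea brewing",
        "product": "Acme Smart Kettle",
    })
    for row in rows:
        print(f"{row['platform']:20} {row['query']}")
```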

If you’re not appearing

- Re‑validate structured data health in the Rich Results Test

- Improve depth/experience in reviews and comparisons; add specs, test data, and FAQs

- Strengthen third‑party coverage in your niche

Step 10) Monitor KPIs and set a refresh cadence

Core KPIs

- Inclusion rate by platform (percent of tracked queries where your brand is cited/linked)

- Citation share by domain type (first‑party vs third‑party)

- Structured data error rate (target: zero critical errors)

- Merchant feed health (disapprovals, freshness ≤ 24–48 hours for dynamic catalogs)

- Review freshness (≥ 1 updated review/quarter for key products)
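
The first two KPIs are simple ratios over your audit records. The sketch below assumes each record has `platform`, `brand_in_answer`, and `cited_domains` fields; that record shape, the function names, and the sample data are assumptions for illustration.

```python
from collections import defaultdict


def inclusion_rate_by_platform(rows):
    """Share of tracked queries per platform where the brand appeared."""
    tracked = defaultdict(int)
    included = defaultdict(int)
    for row in rows:
        tracked[row["platform"]] += 1
        included[row["platform"]] += bool(row["brand_in_answer"])
    return {platform: included[platform] / tracked[platform] for platform in tracked}


def citation_share(rows, own_domain):
    """Split all cited domains into first-party and third-party shares."""
    first = third = 0
    for row in rows:
        for domain in row["cited_domains"]:
            if domain == own_domain:
                first += 1
            else:
                third += 1
    total = first + third
    if total == 0:
        return {"first_party": 0.0, "third_party": 0.0}
    return {"first_party": first / total, "third_party": third / total}


if __name__ == "__main__":
    rows = [  # hypothetical audit records
        {"platform": "Perplexity", "brand_in_answer": True,
         "cited_domains": ["acme.example", "reviewsite.example"]},
        {"platform": "Perplexity", "brand_in_answer": False,
         "cited_domains": ["reviewsite.example", "news.example"]},
        {"platform": "Bing Copilot", "brand_in_answer": True,
         "cited_domains": []},
    ]
    print(inclusion_rate_by_platform(rows))
    print(citation_share(rows, own_domain="acme.example"))
```

Tracking both numbers over time shows whether gains come from your own pages or from third-party coverage.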

Cadence

- Weekly: Inclusion audit and Diagnostics checks

- Monthly: Structured data and feed health sweep; update top pages

- Quarterly: Content refresh on high‑intent reviews/comparisons; outreach for new third‑party coverage

Troubleshooting matrix

| Symptom | Likely cause | Fix |
|---|---|---|
| AI answers omit your brand | Thin or outdated reviews; weak third‑party coverage | Publish hands‑on, updated reviews with evidence; pursue reputable editorial reviews and list inclusions |
| Wrong variant/URL cited | Missing ProductGroup or inconsistent canonicals | Implement ProductGroup; fix canonicals; consolidate signals |
| No citations from Google AI Overviews | Structured data errors; low page helpfulness | Pass Rich Results Test; align content with helpful content guidance |
| No Perplexity mentions | PerplexityBot blocked; weak reputable citations | Allow PerplexityBot; earn coverage from trusted publishers; consider Publishers Program |
| ChatGPT Search doesn’t show your pages | OpenAI crawlers blocked (OAI-SearchBot for search, GPTBot for training); docs not scannable | Allow OAI-SearchBot on target pages, and GPTBot if your policy permits; add summaries, FAQs, and clear headings (GPTBot docs) |
| Shopping visibility is low | Feed disapprovals; stale price/availability | Fix GMC/MMC Diagnostics issues; update feeds more frequently (GMC spec) |

Verification and KPI checklist (copy/paste)

- Robots & crawlability

  - [ ] Googlebot allowed on key pages; GPTBot/PerplexityBot policy decided and implemented

  - [ ] Logs show recent crawls by target bots

- Structured data

  - [ ] Product/Review/Organization schema valid; no critical errors

  - [ ] ProductGroup implemented for variants

- Feeds (if ecommerce)

  - [ ] GMC and MMC set up; zero critical disapprovals in Diagnostics

  - [ ] Price/availability freshness ≤ 24–48 hours

- Content & authority

  - [ ] Hands‑on reviews/comparisons updated in last 90 days

  - [ ] Third‑party reviews or editorial coverage secured on trusted sites

- Inclusion tracking

  - [ ] Inclusion rate measured weekly by platform

  - [ ] Citation share by domain type tracked and improving

Pro tip: Prioritize clarity of entities, structured data correctness, reputable third‑party coverage, and feed freshness. Most inclusion issues trace back to one of these four levers.