How to Diagnose and Fix Low Brand Mentions in ChatGPT: Actionable GEO Guide

Learn to audit, benchmark, and improve your brand mentions in ChatGPT with a practical diagnostic workflow and multi-engine monitoring using GEO tools.

If your brand rarely shows up in ChatGPT answers, especially on non‑branded, decision‑stage questions, you’re invisible where your buyers increasingly do their research. The good news: you can measure it, find root causes, and fix it with a repeatable workflow. To set expectations, ChatGPT tends to surface sources that look trustworthy, current, and concise. According to Fortis Media’s 2025 analysis of ChatGPT ranking factors, accuracy, freshness, recognizable authority, and solid off‑site signals increase the odds of being mentioned or cited.

What “low brand mentions” really means (and why ChatGPT chooses certain sources)

“Low brand mentions” means your brand appears in a smaller share of relevant AI answers than your peers do. Three signals are worth tracking separately:

Mentions: Your brand name appears in the answer.

Citations: The answer references or quotes your content.

Links: The answer includes a correct, owned‑domain link.

These signals feed your broader AI exposure. For foundations and stakeholder context, see this primer on AI visibility.

Why does ChatGPT pick certain sources? In 2025, volatility has increased, with referral traffic dropping and citations consolidating toward hubs like Reddit and Wikipedia. That pattern points to recency and community signals gaining weight. See the mid‑year coverage from PPC Land in “ChatGPT referral traffic drops 52% as citation patterns shift” (2025).

Build a replicable diagnostic (prompt set, logging, cross‑engine snapshots)

You need a measurement loop you can run today and again next month.

Step 1: Design a prompt set

Create 20–50 non‑branded prompts that match buyer research: “best X for Y,” “alternatives to Z,” “how to…” scenarios.

Document engine, model/mode, and date for each run.

Step 2: Log the outcomes

For every prompt, capture: mentioned (Y/N), cited (Y/N), linked (Y/N), position (first/second/lower), sentiment (positive/neutral/negative), competitors present, and cited domains.

Save the full answer and citation list as evidence.
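One way to keep the log consistent from run to run is a fixed record per prompt. The sketch below is a minimal illustration, not a prescribed schema: the `PromptResult` class, its field names, the `write_log` helper, and the sample values are all hypothetical, chosen to mirror the fields listed above.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class PromptResult:
    """One logged answer for one prompt on one engine (hypothetical schema)."""
    prompt: str
    engine: str          # e.g. "ChatGPT", "Perplexity"
    model_mode: str      # model or mode used for the run
    run_date: str        # ISO date string
    mentioned: bool
    cited: bool
    linked: bool
    position: str        # "first", "second", "lower", or "" if not mentioned
    sentiment: str       # "positive", "neutral", "negative", or ""
    competitors: str     # semicolon-separated competitor names
    cited_domains: str   # semicolon-separated domains
    answer_file: str     # path to the saved full answer and citation list


def write_log(rows, handle):
    """Write results as CSV with one column per PromptResult field."""
    writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(PromptResult)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))


# Placeholder values for illustration only.
example = PromptResult(
    prompt="best X for Y",
    engine="ChatGPT",
    model_mode="default",
    run_date="2025-01-15",
    mentioned=True,
    cited=False,
    linked=False,
    position="second",
    sentiment="neutral",
    competitors="Competitor A;Competitor B",
    cited_domains="example.org",
    answer_file="answers/best-x-for-y.txt",
)

buffer = io.StringIO()  # swap for open("log.csv", "w", newline="") to write a file
write_log([example], buffer)
```

A spreadsheet with the same columns works just as well; what matters is that every run records the same fields so the runs stay comparable.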

Step 3: Cross‑platform snapshots

Repeat the set in ChatGPT, Perplexity, and Google AI Overviews. Differences are normal; Perplexity often shows explicit citations while Google emphasizes structured snippets. If you’re new to the drivers behind brand selection, this explainer helps: Why ChatGPT mentions certain brands.

Step 4: Compute your baseline

Calculate mention rate, link attribution rate, position distribution, sentiment mix, and competitive presence.

Build a position‑weighted Share of Voice (SOV) per engine.

KPIs you can trust (with formulas)

Below are practical KPIs to quantify low mentions and track improvement.

| KPI | How to calculate | What it tells you |
|---|---|---|
| Mention rate | Prompts with a brand mention ÷ total prompts | Overall presence across decision‑stage queries |
| Link attribution rate | Correct owned‑domain links ÷ total brand mentions | Whether AI answers point to your site, not third parties |
| Position‑weighted SOV | Sum of per‑prompt weights (1.0 first; 0.5 second; 0.25 third) ÷ total prompts | Visibility adjusted for prominence |
| Sentiment mix | Count of positive/neutral/negative mentions per engine | Reputation signal within answers |
| Competitive benchmarking | Same KPIs for key competitors | Your relative footing and priorities |

Position weighting makes SOV more meaningful by valuing top placements. Practitioners widely use SOV to represent the percentage of AI answers that include your brand; weighting improves signal quality, as discussed in 2025 measurement guides like NAV43’s ethical GEO recommendations.
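To make the table's formulas concrete, here is a minimal sketch that computes three of the KPIs from a list of logged rows. The row keys (`mentioned`, `linked`, `position`) and function names are hypothetical. It also assumes that a logged position of "lower" gets the same 0.25 weight as "third", which the table does not specify.

```python
# Weights from the KPI table. Treating "lower" like "third" is an assumption.
POSITION_WEIGHTS = {"first": 1.0, "second": 0.5, "third": 0.25, "lower": 0.25}


def mention_rate(rows):
    """Prompts with a brand mention divided by total prompts."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r["mentioned"]) / len(rows)


def link_attribution_rate(rows):
    """Correct owned-domain links divided by total brand mentions."""
    mentions = [r for r in rows if r["mentioned"]]
    if not mentions:
        return 0.0
    return sum(1 for r in mentions if r["linked"]) / len(mentions)


def position_weighted_sov(rows):
    """Sum of per-prompt position weights divided by total prompts."""
    if not rows:
        return 0.0
    total = sum(
        POSITION_WEIGHTS.get(r["position"], 0.0) for r in rows if r["mentioned"]
    )
    return total / len(rows)


# Four illustrative prompts for one engine (made-up data).
sample = [
    {"mentioned": True, "linked": True, "position": "first"},
    {"mentioned": True, "linked": False, "position": "second"},
    {"mentioned": False, "linked": False, "position": ""},
    {"mentioned": True, "linked": True, "position": "lower"},
]

print(mention_rate(sample))           # 3 of 4 prompts mention the brand
print(link_attribution_rate(sample))  # 2 of 3 mentions carry an owned link
print(position_weighted_sov(sample))  # (1.0 + 0.5 + 0.25) / 4
```

Run the same functions once per engine and once per competitor to get the per‑engine SOV and the competitive benchmark.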

Additional validation and benchmarks: Position‑weighted visibility and SOV are well‑established in effectiveness and measurement literature. For context on how excess share of voice relates to market share growth (and its modern limitations), see IPA’s 2025 “From McCain to the mainstream”. Weighted visibility scoring is also standard practice in search tracking, as outlined in SISTRIX’s 2025 IndexWatch methodology where higher positions contribute more to a domain’s Visibility Index. And tooling commonly exposes a visibility percentage at the keyword‑set level—see Semrush’s 2025 Position Tracking API documentation for how “visibility” is calculated and returned across tracked terms.

Root causes and fixes you can implement

Once you see where you’re absent, map causes to targeted actions.

Likely root causes:

Weak presence in hubs ChatGPT leans on (encyclopedias, high‑authority lists, reputable community threads).

Outdated or non‑citable content: no crisp definitions, original data, or answer‑first formatting.

Poor structure for machines: thin headers, no tables or FAQs, missing schema.

Misaligned coverage: you don’t address popular evaluation prompts directly.

Negative or ambiguous sentiment from reviews and third‑party coverage.

Platform variance: strong in Perplexity but not in ChatGPT due to retrieval/reranking differences.

Targeted fixes:

Seed authority: pursue inclusion in reputable directories, databases, and credible “best‑of” lists. The goal is not to game these lists; aim for genuine qualifications and references.

Publish citable artifacts: original data, method notes, expert quotes, and concise definitions. Use answer‑first sections and scannable formatting.

Strengthen structure: add tables, FAQs, and schema; ensure clear headings and internal coherence.

Expand query‑specific content: produce comparison pages (X vs Y), alternatives, and step‑by‑step guides for common decision flows.

Manage reputation: respond to reviews; normalize brand messaging across third‑party profiles.

Distribute where engines look: contribute responsibly to communities (e.g., Reddit) and topic‑relevant publications. PPC Land’s 2025 report on citation shifts observed consolidation toward these hubs.

Want engine‑by‑engine nuances? This overview compares monitoring across ChatGPT, Perplexity, Gemini, and Bing: AI engine monitoring comparison.

Monitoring cadence and attribution (GA4 + CRM)

Think of this as a program, not a one‑off.

Daily: Spot‑check 5–10 top prompts.

Weekly: Run your full prompt set; update KPIs and note significant citation changes.

Monthly: Deep‑dive on slipping topics; refresh content and outreach.

Quarterly: Re‑map prompts to reflect emerging questions and platform behavior.

For analytics, standardize UTM tagging in any links you share publicly so AI‑sourced clicks can be attributed:

utm_source=chatgpt or perplexity.ai; utm_medium=ai_chatbot; utm_campaign=ai_referral; utm_term=prompt_topic.
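If you tag links in bulk, a small helper keeps the parameters consistent. This is a sketch using Python's standard `urllib.parse`. The function name `add_utm` and the example URL are hypothetical; the parameter values are the ones listed above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url, source, topic):
    """Return url with the AI-referral UTM parameters appended.

    Existing non-UTM query parameters are kept; any of the four UTM
    parameters already on the URL are replaced.
    """
    utm = {
        "utm_source": source,           # e.g. "chatgpt" or "perplexity.ai"
        "utm_medium": "ai_chatbot",
        "utm_campaign": "ai_referral",
        "utm_term": topic,              # the prompt topic
    }
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in utm
    ]
    return urlunsplit(parts._replace(query=urlencode(kept + list(utm.items()))))


tagged = add_utm("https://example.com/pricing?ref=1", "chatgpt", "crm_alternatives")
print(tagged)
```

Keeping the values lowercase and free of spaces avoids the same source showing up under several spellings in reports.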

Create a GA4 custom channel group like “AI Chatbots” using source/medium rules, and sync leads to your CRM via Measurement Protocol or pipelines. Google’s documentation explains common tagging pitfalls and custom channel setup in GA4 help (2025).
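GA4 channel groups are configured in the GA4 interface, not in code, but it can help to prototype the source/medium rule against exported session data before you set it up. The sketch below is one possible rule, not GA4's own logic: the pattern, the function name `channel_for`, and the "Other" fallback are assumptions for illustration.

```python
import re

# Hypothetical rule: match the sources named above anywhere in the source string.
AI_SOURCE_PATTERN = re.compile(r"chatgpt|perplexity", re.IGNORECASE)


def channel_for(source, medium):
    """Classify a session into "AI Chatbots" or "Other" from source/medium."""
    if medium == "ai_chatbot":
        return "AI Chatbots"
    if AI_SOURCE_PATTERN.search(source or ""):
        return "AI Chatbots"
    return "Other"


sessions = [
    ("chatgpt", "ai_chatbot"),
    ("perplexity.ai", "referral"),
    ("google", "organic"),
]
for source, medium in sessions:
    print(source, medium, channel_for(source, medium))
```

Matching on source as well as medium catches untagged referrals, where the medium is typically "referral" and only the source identifies the AI engine.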

Practical micro‑example (with disclosure)

Disclosure: Geneo is our product.

Here’s how an in‑house team can use it without changing the core methodology:

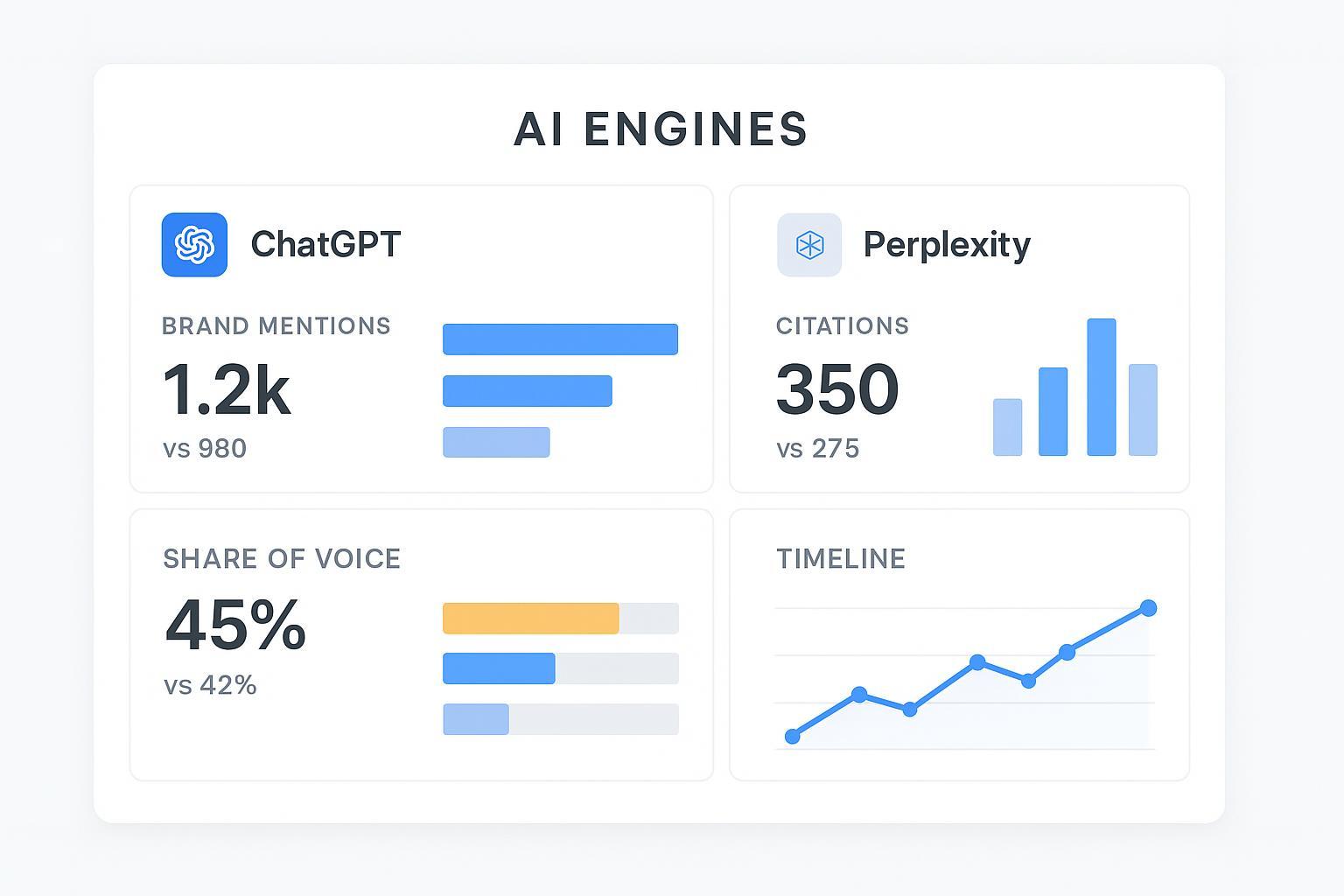

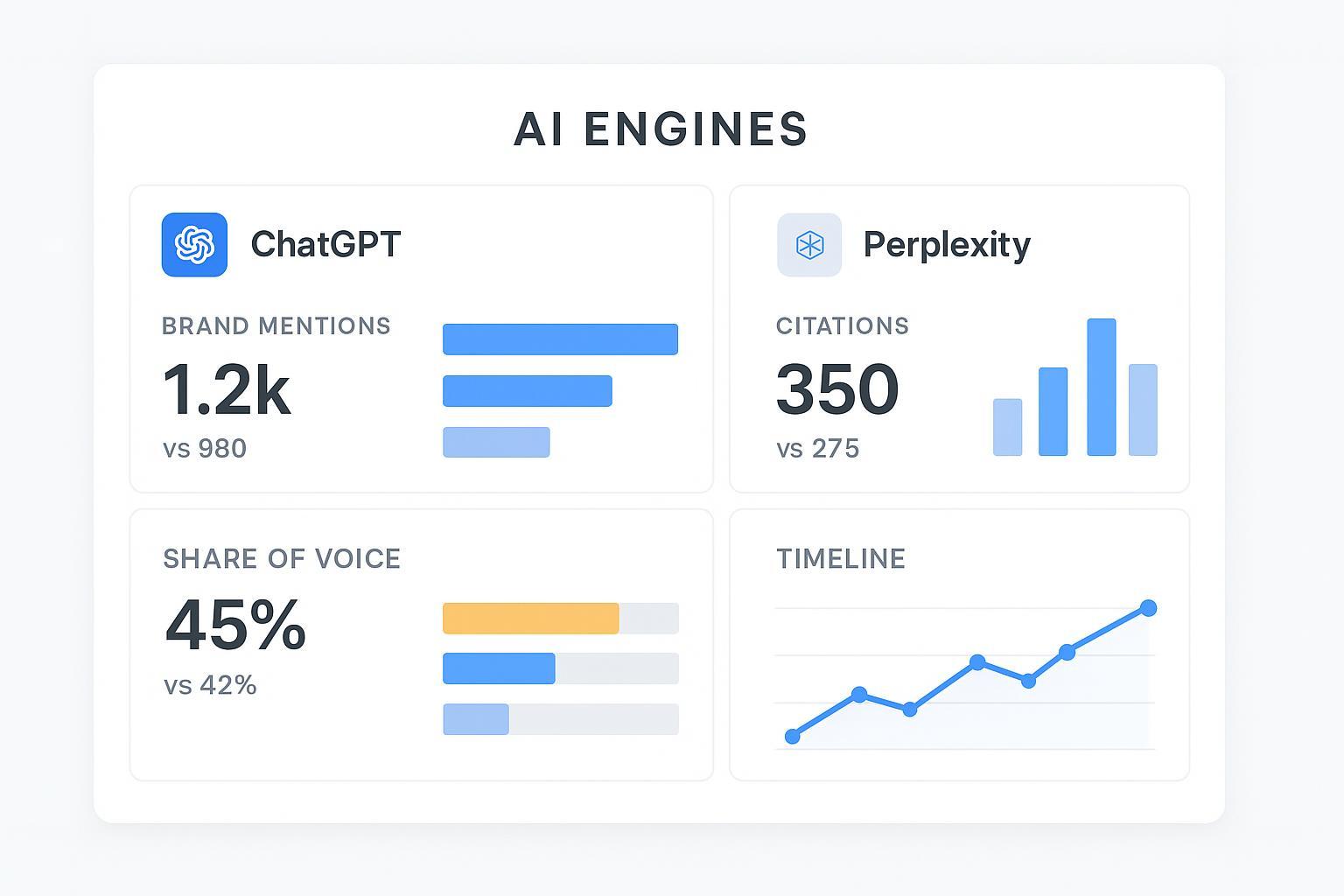

Run a baseline across ChatGPT, Perplexity, and Google AI Overviews, logging mention rate, link attribution, position, and sentiment per prompt.

Use competitive dashboards to see which rivals appear on “best X for Y” prompts and which sources are cited most often.

Track weekly changes via a visibility score that blends frequency, sentiment, and ranking, then prioritize topics where mentions dip or citations shift.

This example simply consolidates the workflow and makes multi‑engine monitoring and benchmarking easier; it doesn’t change the underlying audit logic.

Next steps

Build your prompt set and logging sheet; capture model/mode/date on every run.

Calculate KPIs, identify root causes, and align fixes to the most valuable prompts.

Operationalize your monitoring cadence and attribution so improvements are measured and traced back to the changes that caused them.

When you’re ready to formalize this program, initiate a free AI visibility audit to establish your baseline and monitoring plan.