How to Boost Google AI Overviews Citations Fast in 6 Weeks

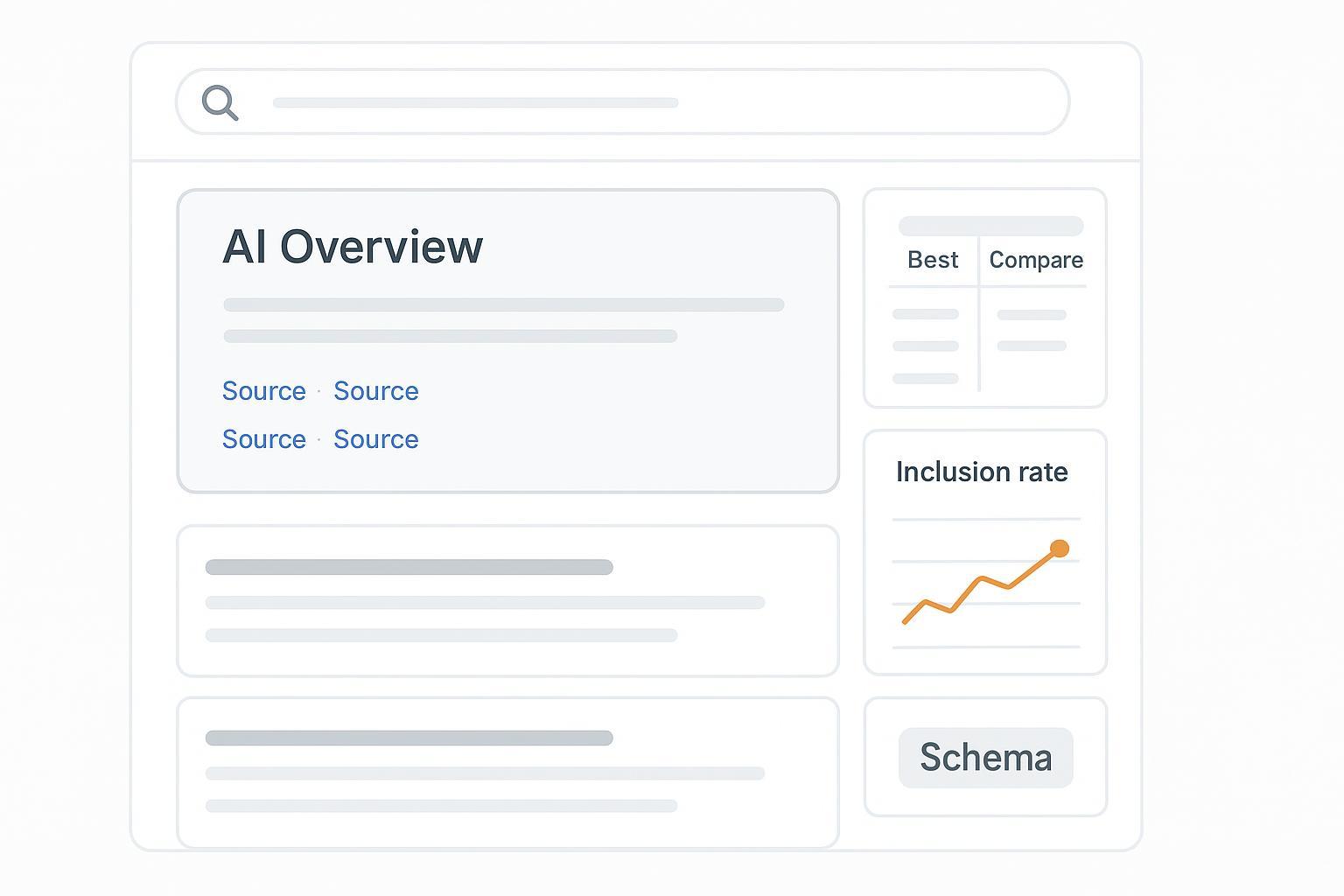

A practical 6-week guide to raising inclusion rate and Google AI Overviews citations for high-intent “best vs compare” pages, covering steps, schema, and monitoring.

If your clients compete on “best vs compare” terms, winning visibility in Google’s AI Overviews starts with a single north-star KPI: inclusion rate, the percentage of target queries for which the AI Overview cites your brand. Optimize that first, then expand to share of voice, sentiment, and pipeline attribution.
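As a concrete definition, inclusion rate is the number of target queries that cite you divided by all target queries. The sketch below assumes a hypothetical input that maps each query to the domains cited in its AI Overview, or to None when no overview appeared. The domains are placeholders.

```python
from typing import Dict, List, Optional


def inclusion_rate(citations: Dict[str, Optional[List[str]]], brand_domain: str) -> float:
    """Percentage of target queries whose AI Overview cites brand_domain.

    citations maps each target query to the domains cited in its AI Overview,
    or to None when no AI Overview appeared for that query (hypothetical shape).
    """
    if not citations:
        return 0.0
    cited = sum(1 for domains in citations.values() if domains and brand_domain in domains)
    return 100.0 * cited / len(citations)


# Hypothetical weekly snapshot for four target queries.
observed = {
    "best b2b crm for smbs": ["example.com", "g2.com"],
    "hubspot vs salesforce": ["salesforce.com"],
    "crm pricing comparison": None,  # no AI Overview shown for this query
    "best crm for startups": ["example.com"],
}
print(inclusion_rate(observed, "example.com"))  # 50.0
```

Queries without an AI Overview stay in the denominator, which matches the definition above. To measure inclusion only where an overview triggers, filter out the None entries first.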

How AI Overviews select and cite sources

Google describes AI Overviews as selectively triggered answers that synthesize information and display supporting links to indexed pages. From a site owner’s perspective, eligibility comes down to helpful content, crawlability, and standard technical hygiene. A page must be indexed and eligible to appear in Search with a snippet, and it needs no special tags or markup beyond what normal Search uses. See Google’s guidance in AI Features and Your Website.

The likely technical underpinnings are retrieval-augmented generation and query fan-out. Google patent US11769017B1, which covers generative summaries for search results, describes a four-step flow:

1. Search the original query and related queries.
2. Gather documents.
3. Generate a summary.
4. “Linkify” portions of the summary to sources.

That flow aligns with how visible citations appear.
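The retrieve, summarize, and linkify flow is easier to picture with a toy Python model. This is not Google's implementation. The corpus, the fictional products "Alpha" and "Beta", the fan-out rule, and the token-overlap scoring are all assumptions chosen for illustration.

```python
import re

# Hypothetical mini-corpus: URL -> page text about fictional products.
CORPUS = {
    "https://example.com/alpha-vs-beta": "Alpha suits small teams. Beta suits complex sales operations.",
    "https://example.org/alpha-pricing": "Alpha pricing is billed per seat.",
    "https://example.net/beta-pricing": "Beta pricing scales with add-ons.",
    "https://example.net/bread": "A recipe for sourdough bread.",
}


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def fan_out(query):
    # Hypothetical expansion: the original query plus a pricing sub-query per compared entity.
    entities = [e.strip() for e in query.split(" vs ")]
    return [query] + [f"{e} pricing" for e in entities]


def retrieve(query, k=2):
    # Score each sentence-level passage by token overlap with the query; keep the top k.
    q = tokens(query)
    scored = []
    for url, doc in CORPUS.items():
        for passage in re.split(r"(?<=\.)\s+", doc):
            overlap = len(q & tokens(passage))
            if overlap:
                scored.append((overlap, url, passage))
    scored.sort(key=lambda t: t[0], reverse=True)  # stable sort: ties keep corpus order
    return [(url, passage) for _, url, passage in scored[:k]]


def summarize_with_citations(query):
    # Merge deduplicated passages from every fanned-out query, each tied ("linkified") to its source.
    seen, summary = set(), []
    for variant in fan_out(query):
        for url, passage in retrieve(variant):
            if passage not in seen:
                seen.add(passage)
                summary.append((passage, url))
    return summary


for passage, url in summarize_with_citations("alpha vs beta"):
    print(f"{passage} [{url}]")
```

In this toy model, the pricing pages reach the summary only through the fanned-out sub-queries. That is one way to read why self-contained answers to sub-queries matter.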

What tends to correlate with citations across engines? Authority, clear passage-level answers, and freshness. Search Engine Land summarizes these patterns in its insights from 8,000 AI citations.

The 6‑week quick‑win playbook for “best vs compare” pages

Week 0: Audit and setup (2–6 hours per site)

Build a target query set (e.g., “best B2B CRM for SMBs,” “HubSpot vs Salesforce”). Create a tracker for inclusion rate and annotate weekly changes.
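A target query set can be generated from templates rather than typed by hand. This sketch assumes the two patterns used in this guide. The third brand, "ExampleCRM", is a hypothetical placeholder.

```python
from itertools import combinations


def build_query_set(brands, categories, segments):
    # "X vs Y" for every unordered pair of brands (one ordering only).
    queries = [f"{a} vs {b}" for a, b in combinations(brands, 2)]
    # "best [category] for [segment]" for every category/segment combination.
    queries += [f"best {c} for {s}" for c in categories for s in segments]
    # Normalize case and drop duplicates so the tracker has one row per query.
    return sorted({q.lower() for q in queries})


queries = build_query_set(["HubSpot", "Salesforce", "ExampleCRM"], ["CRM"], ["SMBs", "startups"])
for q in queries:
    print(q)
```

Only one ordering of each pair is produced. Add the reversed pair if you track “Salesforce vs HubSpot” separately.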

Verify indexing health, canonicalization, robots, and snippet controls. Fix crawlability issues and submit updated sitemaps.
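Part of this check can be scripted with Python's standard `urllib.robotparser` and `html.parser`. The sketch flags robots.txt blocks and robots meta directives that prevent indexing or snippets. Treat it as a first pass only:

- Python's robots parser does not implement Google's wildcard and longest-match rules.
- `X-Robots-Tag` headers and `data-nosnippet` attributes are not checked.
- The robots.txt and HTML are passed in as strings, so fetching them is left to you.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Directives that stop indexing or suppress snippets (and with them, passage extraction).
BLOCKING = {"noindex", "nosnippet", "none", "max-snippet:0"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in {"robots", "googlebot"}:
            for directive in (attrs.get("content") or "").split(","):
                self.directives.add(directive.strip().lower())


def audit_page(url, robots_txt, html):
    issues = []
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    if not rp.can_fetch("Googlebot", url):
        issues.append("blocked by robots.txt")
    meta = RobotsMetaParser()
    meta.feed(html)
    issues += [f"meta robots: {d}" for d in sorted(meta.directives & BLOCKING)]
    return issues


robots = "User-agent: *\nDisallow: /private/"
html = '<head><meta name="robots" content="index, max-snippet:0"></head>'
print(audit_page("https://example.com/compare/hubspot-vs-salesforce", robots, html))
# ['meta robots: max-snippet:0']
```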

Record baseline metrics: inclusion rate, citation share of voice, sentiment context, and CTR deltas when AI Overviews appear.
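Once clicks and impressions are split by whether an AI Overview appeared, the CTR delta is simple arithmetic. How you obtain that split is assumed here, for example by joining query-level performance data with your own SERP snapshots. The numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def ctr_delta(with_aio: Segment, without_aio: Segment) -> float:
    """Percentage-point change in CTR for impressions with an AI Overview vs. without one."""
    return 100 * (with_aio.ctr - without_aio.ctr)


# Hypothetical baseline numbers for one query cluster.
delta = ctr_delta(Segment(clicks=30, impressions=1000), Segment(clicks=50, impressions=1000))
print(f"{delta:+.1f} pp")  # -2.0 pp
```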

Weeks 1–2: Content refresh and structure (1–4 hours per page)

Add 50–120-word fact blocks that answer sub-queries directly (“X vs Y pricing,” “best for SMBs,” “pros and cons”). Include spec tables and a TL;DR verdict.
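The word range is easy to enforce during editing. This sketch assumes blank-line-separated paragraphs and the 50–120-word range above. Run it on answer paragraphs rather than headings, since headings will always read as short.

```python
import re


def check_fact_blocks(text, low=50, high=120):
    """Return (block_index, word_count) for blank-line-separated blocks outside [low, high] words."""
    blocks = [b for b in re.split(r"\n\s*\n", text.strip()) if b.strip()]
    flagged = []
    for i, block in enumerate(blocks):
        n = len(block.split())
        if not low <= n <= high:
            flagged.append((i, n))
    return flagged


page = ("word " * 80).strip() + "\n\n" + "Too short to answer anything."
print(check_fact_blocks(page))  # [(1, 5)]
```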

Refresh dated figures with current (2024–2026) data, remove outdated claims, and cite reputable third-party sources where appropriate.
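To find claims that need refreshing, flag sentences that cite years before a cutoff. The 2024 default mirrors the window above and is an assumption to adjust. A year in a sentence is only a rough staleness signal, so an editor still makes the call.

```python
import re

YEAR = re.compile(r"\b(19\d{2}|20\d{2})\b")


def stale_sentences(text, cutoff=2024):
    """Return sentences that mention any year earlier than cutoff."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if any(int(y) < cutoff for y in YEAR.findall(s))]


sample = "Pricing was updated in 2025. The 2021 survey found teams preferred Alpha. Setup takes a day."
print(stale_sentences(sample))  # ['The 2021 survey found teams preferred Alpha.']
```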

Map sections to query variants (“X vs Y,” “best [category] for [segment]”) to improve passage retrievability.
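Comparing section headings with the variant list exposes coverage gaps. Two simplifying assumptions apply:

- A variant counts as covered when every non-stopword token in it appears in some heading.
- The stopword list is illustrative and should be tuned.

```python
import re

STOPWORDS = {"vs", "for", "the", "a", "and", "of"}


def content_tokens(text):
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def uncovered_variants(headings, variants):
    """Return variants whose content tokens are not all present in any single heading."""
    heading_tokens = [content_tokens(h) for h in headings]
    return [v for v in variants if not any(content_tokens(v) <= h for h in heading_tokens)]


headings = ["HubSpot vs Salesforce: pricing", "Best CRM for SMBs", "Pros and cons"]
variants = ["hubspot vs salesforce pricing", "best crm for smbs", "best crm for startups"]
print(uncovered_variants(headings, variants))  # ['best crm for startups']
```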

Weeks 1–2: Schema hygiene (0.5–1.5 hours per page)

Add JSON-LD for Product, Review, FAQPage, and Article where the visible page content supports those types. Include Organization and Person markup for brand and author identity.
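Generating markup from page data keeps it consistent across a portfolio. This sketch emits an Article block with Person (author) and Organization (publisher) identity. Every name, URL, and date is a placeholder, and the properties should mirror what the page visibly shows.

```python
import json


def article_jsonld(headline, author_name, author_url, org_name, org_url, same_as, date_modified):
    """Return a <script> tag containing Article JSON-LD with author and publisher identity."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "dateModified": date_modified,
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "publisher": {"@type": "Organization", "name": org_name, "url": org_url, "sameAs": same_as},
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


snippet = article_jsonld(
    headline="HubSpot vs Salesforce for SMBs",
    author_name="Jane Doe",
    author_url="https://example.com/authors/jane-doe",
    org_name="Example Agency",
    org_url="https://example.com",
    same_as=["https://www.linkedin.com/company/example-agency"],
    date_modified="2025-01-15",
)
print(snippet)
```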

Validate with Google’s Rich Results Test; fix errors; verify via URL Inspection.
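Before pasting pages into the Rich Results Test, a local pre-check can catch JSON syntax errors in bulk. This sketch only confirms that each `application/ld+json` block parses and declares `@context` and `@type` (or `@context` alone for `@graph` documents). It does not evaluate Google's rich-result requirements.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_ld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_ld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_ld = False

    def handle_data(self, data):
        if self._in_ld:
            self.blocks[-1] += data


def precheck_jsonld(html):
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    problems = []
    for i, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        for item in (data if isinstance(data, list) else [data]):
            if not isinstance(item, dict):
                problems.append(f"block {i}: expected a JSON object")
                continue
            # @graph documents carry @type on the nested nodes, not at the top level.
            required = ("@context",) if "@graph" in item else ("@context", "@type")
            problems += [f"block {i}: missing {key}" for key in required if key not in item]
    return problems


page_html = """
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "FAQPage"}</script>
<script type="application/ld+json">{"@type": "Article",}</script>
<script type="application/ld+json">{"@type": "Article"}</script>
"""
for problem in precheck_jsonld(page_html):
    print(problem)
```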

Weeks 3–4: External corroboration and mentions (3–8 hours per page or brand)

Secure third‑party reviews or mentions; ensure consistent naming and entity disambiguation (consider Wikipedia/Wikidata when appropriate).
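Inconsistent naming is easy to measure across crawled page text. The brand “Example CRM” and its variant pattern are hypothetical, so swap in your own regular expression.

```python
import re
from collections import Counter


def name_variants(pages, pattern):
    """Count each distinct spelling matched by pattern across {url: page_text}."""
    rx = re.compile(pattern, re.IGNORECASE)
    return Counter(m.group(0) for text in pages.values() for m in rx.finditer(text))


pages = {
    "/compare/a-vs-b": "Example CRM offers a free tier. ExampleCRM also integrates with email.",
    "/best/crm-smb": "Many teams pick Example-CRM for onboarding.",
}
print(name_variants(pages, r"example[\s-]?crm"))
```

More than one variant suggests picking a canonical form and using it everywhere, including in Organization markup.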

Strengthen internal links to related guides/FAQs to improve topical completeness and passage discovery.
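Pages that few related guides or FAQs link to are candidates for new internal links. This sketch assumes you already have (source, target) edges from a site crawl. The paths and the threshold of two inbound links are hypothetical.

```python
from collections import defaultdict


def weakly_linked(edges, targets, min_inbound=2):
    """Return {target: inbound_count} for targets with fewer than min_inbound linking pages."""
    inbound = defaultdict(set)
    for src, dst in edges:
        if src != dst:  # ignore self-links
            inbound[dst].add(src)
    return {t: len(inbound[t]) for t in targets if len(inbound[t]) < min_inbound}


edges = [
    ("/guides/crm-basics", "/compare/hubspot-vs-salesforce"),
    ("/faq/crm-pricing", "/compare/hubspot-vs-salesforce"),
    ("/guides/crm-basics", "/best/crm-for-smbs"),
    ("/best/crm-for-smbs", "/best/crm-for-smbs"),
]
targets = ["/compare/hubspot-vs-salesforce", "/best/crm-for-smbs"]
print(weakly_linked(edges, targets))  # {'/best/crm-for-smbs': 1}
```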

Weeks 5–6: Measure, iterate, and do outreach (1–2 hours per week across the portfolio)

Track inclusion rate weekly for your target set. Benchmark citation share of voice vs named competitors and note sentiment context.
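Weekly tracking is more useful when each rate change sits next to the changes shipped that week. The rates and notes below are hypothetical. A coincident change is a lead to investigate, not proof of cause.

```python
def weekly_report(rates, notes):
    """rates: {week: inclusion rate in %}; notes: {week: content change shipped that week}."""
    lines = []
    prev = None
    for week in sorted(rates):
        rate = rates[week]
        change = "" if prev is None else f" ({rate - prev:+.1f} pp)"
        lines.append(f"Week {week}: {rate:.1f}%{change} {notes.get(week, '')}".rstrip())
        prev = rate
    return lines


rates = {0: 10.0, 1: 10.0, 2: 15.0, 3: 20.0}
notes = {2: "fact blocks added to 8 pages", 3: "schema fixed"}
for line in weekly_report(rates, notes):
    print(line)
```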

Troubleshoot non‑inclusion: indexing/canonicals, weak passages, ambiguous entities, insufficient corroboration, or poor freshness. Iterate content and schema.

Note: Speed depends on authority, competition, and query selection. Treat any expected uplift with caution. Tie each change to an evidence log entry that records what changed, when, and which citations followed.

Google AI Overviews citations: deep techniques

For “best vs compare” content, prioritize passage-level clarity. Keep answers tight, scannable, and backed by specifics: short verdict lines, price bands, and feature comparisons. Use internal links to connect adjacent topics (FAQs, how-tos) so engines can retrieve the relevant passages and cite them in AI Overviews.

Implement JSON‑LD with valid types. Here’s a compact FAQ snippet pattern to anchor comparison sub‑queries:

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [{
    "@type": "Question",
    "name": "Which CRM is best for SMBs: HubSpot or Salesforce?",
    "acceptedAnswer": {
      "@type": "Answer",
      "text": "For SMBs prioritizing ease of setup and bundled marketing features, HubSpot typically fits better at lower seat counts; Salesforce suits complex sales ops and deeper customization at higher seat counts."
    }
  }, {
    "@type": "Question",
    "name": "What’s the typical pricing difference between HubSpot and Salesforce?",
    "acceptedAnswer": {
      "@type": "Answer",
      "text": "Entry tiers can look similar, but total cost diverges with add-ons and automation volume. [Add your price bands and disclaimers, and link to the official pricing pages.]"
    }
  }]
}
```

Validate the markup with Google’s Rich Results Test, and confirm indexing and canonicals with Search Console’s URL Inspection tool. Google’s SEO Starter Guide covers the underlying basics.

Monitoring and measurement: track inclusion rate and benchmark

A simple KPI table keeps teams aligned in weeks 1–6.

| KPI | Definition | How to use in weeks 1–6 |
|---|---|---|
| Inclusion rate | % of target queries where your brand is cited in the AI Overview (AIO) | Track weekly; annotate content changes and newly detected citations |
| Citation share of voice | % of citations vs named competitors | Benchmark to spot gap topics and opportunities |
| Sentiment context | Positive/neutral/negative framing in answers | Aim to shift neutral → positive with clearer evidence |
| CTR delta | Change in CTR when AIO appears | Use directionally to prioritize queries and reporting |
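Citation share of voice can be computed from the same per-query citation lists used for inclusion rate. This sketch makes three assumptions:

- Share of voice is each tracked domain's citations divided by all citations to the tracked set (your domain plus named competitors).
- A domain counts at most once per overview.
- The domains are placeholders.

```python
from collections import Counter


def share_of_voice(cited_per_query, tracked):
    """Percentage of tracked-set citations earned by each tracked domain."""
    # set(domains): count presence per AI Overview, not repeated mentions.
    counts = Counter(d for domains in cited_per_query for d in set(domains) if d in tracked)
    total = sum(counts.values())
    return {d: (100 * counts[d] / total if total else 0.0) for d in tracked}


cited = [
    ["example.com", "rival-a.com"],
    ["rival-a.com", "g2.com"],  # g2.com is not in the tracked set, so it is ignored
    ["example.com", "rival-b.com", "rival-a.com"],
]
tracked = ["example.com", "rival-a.com", "rival-b.com"]
sov = share_of_voice(cited, tracked)
print({d: round(v, 1) for d, v in sov.items()})
# {'example.com': 33.3, 'rival-a.com': 50.0, 'rival-b.com': 16.7}
```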

Programmatic detection helps at scale. DataForSEO’s update explains the ai_overview item type and its expansion in the SERP API; see AI Overview in Google SERP API.
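Once a SERP API returns the AI Overview, extracting cited domains is a small parsing step. The field names here are assumptions loosely modeled on DataForSEO-style output, not a documented schema:

- an `items` list in which the overview has `"type": "ai_overview"`;
- a `references` list whose entries carry `domain` or `url`.

Confirm the field names against the provider's current documentation, including how results are nested inside the response envelope.

```python
from typing import List, Optional
from urllib.parse import urlparse


def aio_cited_domains(result: dict) -> Optional[List[str]]:
    """Return domains cited in the AI Overview, or None if no AI Overview item exists.

    ASSUMED shape (verify against the provider's docs): result["items"] is a list of
    SERP items; the AI Overview item has "type": "ai_overview" and a "references"
    list whose entries carry "domain" and/or "url".
    """
    for item in result.get("items") or []:
        if item.get("type") == "ai_overview":
            domains = []
            for ref in item.get("references") or []:
                domain = ref.get("domain") or urlparse(ref.get("url") or "").netloc
                if domain and domain not in domains:
                    domains.append(domain)
            return domains
    return None


# Hypothetical result object shaped like the assumption above.
serp_result = {
    "items": [
        {"type": "organic", "url": "https://rival-a.com/crm"},
        {"type": "ai_overview", "references": [
            {"url": "https://www.example.com/compare/hubspot-vs-salesforce"},
            {"domain": "g2.com", "url": "https://www.g2.com/compare"},
        ]},
    ]
}
print(aio_cited_domains(serp_result))  # ['www.example.com', 'g2.com']
```

Normalize hosts (for example, stripping `www.`) before comparing them with your tracked domains.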

Disclosure: Geneo is our product. For agencies, Geneo can be used to configure a client portfolio of target “best vs compare” queries, monitor weekly inclusion rate and citation share of voice across Google AI Overviews, ChatGPT, and Perplexity, annotate content changes, and export white‑label dashboards. For definitions and KPI frameworks, see AI search KPI frameworks and platform nuances in ChatGPT vs Perplexity vs Google AI Overviews.

Troubleshooting: why you’re not being cited

Indexing and canonicals: Confirm pages are indexed and not canonicalized away; review robots/snippet controls that might limit passage extraction.

Weak passages: Replace generic copy with 50–120 word fact blocks, explicit verdicts, specs tables, and price bands.

Ambiguous entities: Harmonize brand/product names, add Organization/Person schema, and link author bios; consider reputable knowledge base entries for disambiguation when appropriate.

Insufficient corroboration or freshness: Add third-party references, refresh data points, and remove outdated claims. After updating, resubmit sitemaps and request recrawls of key pages through URL Inspection.

Behavior context: Studies indicate that users click through less often when AI summaries appear, so plan reporting accordingly.
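The checks above can be turned into a rough per-page triage. The signal fields and thresholds are hypothetical stand-ins for whatever your audits collect. The output only points to which bucket to investigate first.

```python
from dataclasses import dataclass


@dataclass
class PageSignals:
    # Hypothetical audit fields; populate them from your own crawls and reviews.
    indexed: bool
    canonical_is_self: bool
    snippet_blocked: bool
    fact_blocks_in_range: int
    brand_name_variants: int
    third_party_refs: int
    newest_data_year: int


def triage(s: PageSignals, current_year: int) -> list:
    causes = []
    if not s.indexed or not s.canonical_is_self or s.snippet_blocked:
        causes.append("Indexing and canonicals")
    if s.fact_blocks_in_range == 0:
        causes.append("Weak passages")
    if s.brand_name_variants > 1:
        causes.append("Ambiguous entities")
    if s.third_party_refs == 0 or s.newest_data_year < current_year - 1:
        causes.append("Insufficient corroboration or freshness")
    return causes


page = PageSignals(indexed=True, canonical_is_self=True, snippet_blocked=False,
                   fact_blocks_in_range=0, brand_name_variants=3,
                   third_party_refs=2, newest_data_year=2022)
print(triage(page, current_year=2025))
# ['Weak passages', 'Ambiguous entities', 'Insufficient corroboration or freshness']
```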

Tools and templates

SERP APIs: Programmatically detect AI Overviews and parse citations with DataForSEO (link above) or SerpApi.

Validation: Use Google’s Rich Results Test for JSON‑LD and Search Console’s URL Inspection for indexing and structured data reports.

Internal trackers: Create a weekly inclusion‑rate sheet and dashboard; store prompts/screenshots when AI Overviews appear and log cited sources.
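A minimal version of the weekly tracker is an append-only CSV log with one row per query check. Column names, file names, and the screenshot path are hypothetical, and the demo writes to a temporary directory.

```python
import csv
import os
import tempfile
from datetime import date

FIELDS = ["date", "query", "aio_present", "brand_cited", "cited_domains", "screenshot"]


def log_observation(path, query, cited_domains, brand_domain, screenshot=""):
    """Append one observation; cited_domains is None when no AI Overview appeared."""
    new_file = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if new_file:
            writer.writeheader()
        writer.writerow({
            "date": date.today().isoformat(),
            "query": query,
            "aio_present": cited_domains is not None,
            "brand_cited": bool(cited_domains) and brand_domain in cited_domains,
            "cited_domains": ";".join(cited_domains or []),
            "screenshot": screenshot,
        })


# Demo: write two rows to a temporary file and read them back.
path = os.path.join(tempfile.mkdtemp(), "aio_log.csv")
log_observation(path, "hubspot vs salesforce", ["example.com", "g2.com"], "example.com",
                screenshot="screenshots/week1-hubspot-vs-salesforce.png")
log_observation(path, "best crm for smbs", None, "example.com")
with open(path, newline="", encoding="utf-8") as f:
    rows = list(csv.DictReader(f))
print(rows[0]["brand_cited"], rows[1]["aio_present"])  # True False
```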

Next steps

Operationalize the 6‑week playbook across your “best vs compare” portfolio and standardize the KPI cadence.

Build an internal SOP for passage‑level edits and schema validation, then scale reporting to clients with competitive benchmarking.

If you need deeper definitions and dashboards, explore Geneo resources like KPI frameworks and platform comparisons linked above.