How AI Search Platforms Choose Brands: Mechanics & Strategies

Explore how AI search platforms choose brands. Learn eligibility, citation mechanics, and monitoring strategies to boost brand visibility in AI answers.

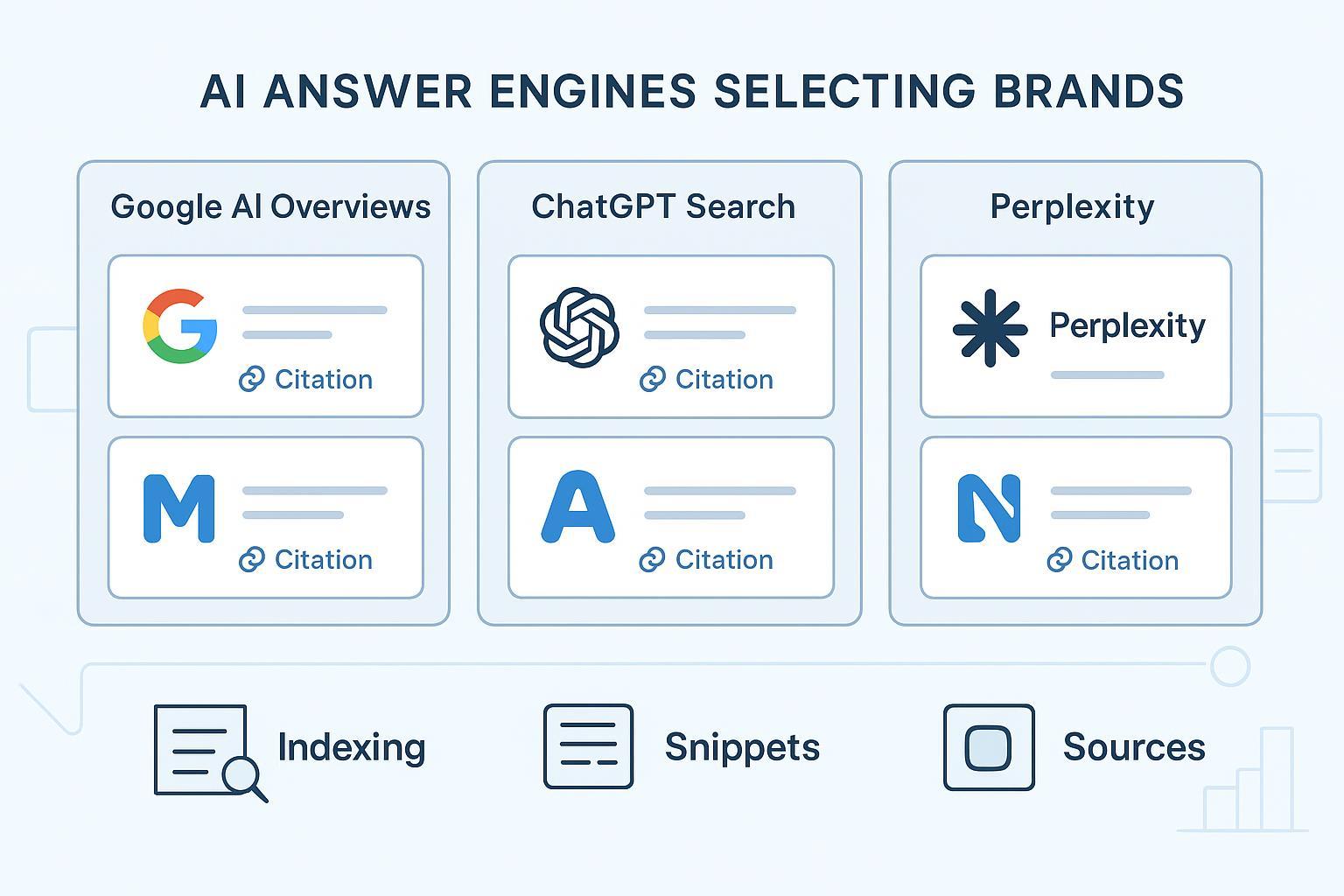

Which brands get cited by AI search platforms, and why? If you care about visibility in answers from Google’s AI Overviews, ChatGPT Search, or Perplexity, understanding how these engines select sources can be the difference between being referenced and being invisible.

What “How AI Search Platforms Choose Brands” means

At its core, this term describes how AI answer engines decide which brand sources to include as citations or supporting links in generated responses. Two behaviors matter:

- Retrieval-based answers: The system performs a web search and incorporates external sources. Citations and links usually appear.

- Model-only answers: The system relies on internal knowledge without retrieving new sources. Links may not appear.

If you’re optimizing for visibility in AI answers, you’re optimizing for retrieval and citation. For foundational context on AI visibility, see “What Is AI Visibility? Brand Exposure in AI Search Explained.”

Google AI Overviews and AI Mode: Eligibility and how supporting links appear

Google’s guidance is explicit on eligibility. According to Google’s “AI features and your website” (Search Central), to be eligible as a supporting link in AI Overviews or AI Mode, a page must be indexed and eligible to be shown in Google Search with a snippet. Google also emphasizes that AI Overviews are grounded in top web results and provide supporting links to help users verify information.

Implications for brands:

- If your page isn’t indexable or snippet-eligible, it cannot appear as a supporting link.

- Beyond baseline eligibility, selection is influenced by Google’s broader ranking and quality systems; there isn’t a special AI-only checklist. Google’s guidance on creating helpful, reliable, people-first content still applies.
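Eligibility depends on a page being indexable and snippet-eligible. A quick pre-check can therefore catch accidental blocks before you investigate anything else. The sketch below is a hypothetical helper (`find_preview_blocks` is our name, not a Google tool). It scans a page’s HTML and any `X-Robots-Tag` header values for directives that Google’s robots meta documentation describes as blocking indexing (`noindex`, `none`) or snippets (`nosnippet`, `max-snippet:0`). It assumes you have already fetched the page. It is a simplification and does not replace the URL Inspection tool in Search Console.

```python
from html.parser import HTMLParser

# Directives Google documents as blocking indexing (noindex, none)
# or text snippets (nosnippet, max-snippet:0).
BLOCKING = {"noindex", "none", "nosnippet", "max-snippet:0"}
# Directives whose own syntax contains a colon (not a user-agent prefix).
COLON_DIRECTIVES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


def split_directives(value):
    """Split 'index, max-snippet: 0' into ['index', 'max-snippet:0']."""
    return [d.replace(" ", "").lower() for d in value.split(",") if d.strip()]


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives.extend(split_directives(attr.get("content", "")))


def find_preview_blocks(html, x_robots_tag_values=()):
    """Return the sorted blocking directives found in the HTML or the headers."""
    parser = RobotsMetaParser()
    parser.feed(html)
    found = list(parser.directives)
    for value in x_robots_tag_values:
        for d in split_directives(value):
            # Simplification: strip a user-agent prefix such as "googlebot:"
            # and treat the directive as applying to all crawlers.
            head, _, tail = d.partition(":")
            if tail and head not in COLON_DIRECTIVES:
                d = tail
            found.append(d)
    return sorted(set(found) & BLOCKING)


if __name__ == "__main__":
    page = '<head><meta name="robots" content="index, max-snippet: 0"></head>'
    print(find_preview_blocks(page, ["googlebot: nosnippet"]))
    # ['max-snippet:0', 'nosnippet']
```

An empty result means no blocking directive was found in what you passed in. Canonicalization, robots.txt and server errors still need their own checks.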

ChatGPT Search: When citations appear and how sources are shown

OpenAI describes ChatGPT Search as providing timely answers with links to relevant web sources, including inline attribution and a Sources sidebar. See OpenAI’s “Introducing ChatGPT Search.”

Practically:

- Retrieval-based behavior: When ChatGPT determines a query needs up-to-date information or verification, it performs a web search and displays citations and links.

- Model-only behavior: When no search is performed, the answer is generated from internal knowledge and may not include external links.

For brands, this means your content must be discoverable and relevant at retrieval time. Crawlable pages, comprehensive topical coverage, clear headings and trustworthy information all increase the likelihood of being cited.
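“Crawlable” can be checked per engine. Each vendor publishes its own crawler token: Googlebot for Google Search, OAI-SearchBot for surfacing sites in ChatGPT search, and PerplexityBot for Perplexity. The sketch below uses Python’s standard `urllib.robotparser` to test one URL against a robots.txt file for those tokens. The `crawler_access` name and the sample rules are illustrative assumptions. Python’s parser applies the first matching rule in a group, while Google uses the most specific match, so results can differ when Allow and Disallow rules overlap. Robots.txt also governs crawling, not indexing, so this complements an index-status check rather than replacing it.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens published by each vendor for its search/answer crawler.
CRAWLERS = ("Googlebot", "OAI-SearchBot", "PerplexityBot")


def crawler_access(robots_txt: str, url: str, crawlers=CRAWLERS) -> dict:
    """Return {crawler: allowed} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return {bot: parser.can_fetch(bot, url) for bot in crawlers}


if __name__ == "__main__":
    # Hypothetical rules: everyone is kept out of /private/, and
    # OAI-SearchBot is (perhaps accidentally) blocked site-wide.
    robots = """User-agent: *
Disallow: /private/

User-agent: OAI-SearchBot
Disallow: /
"""
    print(crawler_access(robots, "https://example.com/blog/post"))
    # {'Googlebot': True, 'OAI-SearchBot': False, 'PerplexityBot': True}
```

In this example, the page stays reachable for Google and Perplexity but not for ChatGPT search. That is exactly the kind of silent gap worth catching before you analyze citations.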

Perplexity: Capabilities, limits of disclosure, and observed patterns

Perplexity differentiates Quick Search (fast default) from Pro Search (deeper, available to Pro users) and offers a Search Guide and API documentation with domain filters and recency controls. See Perplexity’s Search Guide.

Perplexity does not publish a detailed ranking formula (e.g., domain weighting, reranking specifics, or time decay). Industry analyses speculate about likely influences and about answer-engine optimization tactics. Treat any observed patterns as directional rather than definitive.

In practice, brands appear to fare better with:

- Clean structure: Pages with clear headings, organized sections, and FAQs are easier to retrieve and cite.

- Freshness signals: Up-to-date content, visible update timestamps, and change logs align with recency filters and user expectations.

Cross-platform E‑E‑A‑T signals that raise your odds

Google doesn’t publish a checklist specific to AI Overviews. The same ranking and quality systems that power Search influence whether your pages are trusted as sources. A pragmatic approach that carries across engines:

- Experience and expertise: Use bylines, show credentials, and have qualified practitioners author content.

- Authoritativeness: Earn references from reputable sites and maintain organizational transparency.

- Trustworthiness: Cite original sources and explain your methodology; avoid vague claims.

- Freshness and coverage: Keep high-velocity topics updated and cover subtopics comprehensively.

Structure and technical hygiene: A concise checklist

A small set of non-negotiables boosts eligibility and retrieval quality:

- Indexation and snippet eligibility: Confirm Googlebot access, remove unintended noindex/nosnippet controls, and verify indexing with the URL Inspection tool in Search Console.

- Crawl-friendly pages: Clean canonicalization, logical internal linking, submitted sitemaps, and stable HTTP 200 responses.

- Structured content: Use descriptive headings, summaries, and tables. Apply appropriate schema (Article, FAQPage, Product, Organization) that matches visible content. Schema isn’t required for AI Overviews eligibility, but it helps Google understand your pages in Search generally.

- Evidence-forward writing: Add sources, bylines, update stamps, and change logs on high-velocity topics.
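The schema and update-stamp advice can be combined: generate JSON-LD from the same values readers see, so the markup cannot drift from the visible byline and dates. The sketch below builds a schema.org `Article` object with `headline`, `author`, `datePublished`, `dateModified` and `mainEntityOfPage`. The function name and sample values are hypothetical. Check Google’s Article structured data documentation for its current recommended properties.

```python
import json
from datetime import date


def article_jsonld(headline: str, author_name: str, published: date,
                   modified: date, url: str) -> str:
    """Return a <script> tag with schema.org Article markup.

    Pass the same headline, byline, and dates that are visible on the page,
    so the structured data matches what readers see.
    """
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        "mainEntityOfPage": url,
    }
    # Escape "<" so text such as "</script>" cannot close the tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(article_jsonld("How AI Search Platforms Choose Brands", "Jane Doe",
                         date(2025, 1, 10), date(2025, 3, 2),
                         "https://example.com/ai-search"))
```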

Monitoring and evidence logging: Make selection measurable

If selection depends on eligibility, structure, and quality, measurement needs to reflect those variables. Here’s a compact monitoring schema teams can implement to capture citations and mentions across engines.

| Field | Why it matters |
|---|---|
| Query and page URL (canonical) | Ties citations to intents and the exact source page. |
| Timestamp and engine (Google AI Overviews, ChatGPT Search, Perplexity) | Establishes when and where you were cited. |
| AI output excerpt and mention text | Captures the wording for sentiment and accuracy analysis. |
| Supporting link displayed (Y/N) and link type | Distinguishes linked citations from unlinked mentions. |
| Index status and preview controls (noindex, nosnippet, max-snippet) | Validates eligibility and flags potential blocks. |
| Structured data types present | Lets you check that markup matches visible content. |
| Screenshot or HTML snapshot | Provides audit evidence for later comparison. |
| Notes and remediation actions | Drives iterative improvements. |
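If you store these logs in code rather than a spreadsheet, a typed record keeps the fields consistent across engines and team members. The sketch below is one possible shape that mirrors the table above. The field names, the engine labels in `ENGINES`, and the `to_row` helper are hypothetical choices, not a standard format.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical engine labels; standardize on whatever identifiers your team uses.
ENGINES = {"google_aio", "chatgpt_search", "perplexity"}


@dataclass
class CitationRecord:
    """One captured AI answer, mirroring the monitoring table's fields."""
    query: str
    page_url: str                     # canonical URL of the cited (or expected) page
    engine: str                       # one of ENGINES
    observed_at: datetime             # capture time, ideally timezone-aware UTC
    output_excerpt: str               # AI answer text around the mention
    mention_text: str                 # exact wording used for your brand
    link_displayed: bool              # False means an unlinked mention
    link_type: Optional[str] = None   # free-form label, e.g. "inline" or "sources panel"
    index_status: str = "unknown"     # e.g. outcome of a URL Inspection check
    preview_controls: list[str] = field(default_factory=list)       # e.g. ["nosnippet"]
    structured_data_types: list[str] = field(default_factory=list)  # e.g. ["Article"]
    snapshot_path: Optional[str] = None  # screenshot or saved HTML
    notes: str = ""                   # remediation actions

    def __post_init__(self):
        # Reject misspelled engine names so per-engine reports stay consistent.
        if self.engine not in ENGINES:
            raise ValueError(f"unknown engine: {self.engine!r}")

    def to_row(self) -> dict:
        """Flatten to a JSON/CSV-friendly dict with an ISO 8601 timestamp."""
        row = asdict(self)
        row["observed_at"] = self.observed_at.isoformat()
        return row


if __name__ == "__main__":
    record = CitationRecord(
        query="best crm for startups",
        page_url="https://example.com/crm-guide",
        engine="perplexity",
        observed_at=datetime(2025, 3, 3, 9, 0, tzinfo=timezone.utc),
        output_excerpt="Example CRM is often recommended for early-stage teams",
        mention_text="Example CRM",
        link_displayed=True,
        link_type="inline",
    )
    print(record.to_row()["observed_at"])  # 2025-03-03T09:00:00+00:00
```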

For measurement frameworks that pair well with this schema, see “LLMO Metrics: Measuring Accuracy, Relevance, Personalization in AI” and our blog hub, which includes a GEO audit checklist.

Example workflow: Monitoring AI citations with Geneo

Disclosure: Geneo is our product.

Here’s a practical way brand teams can capture AI citations without reinventing their stack:

- Define priority queries and URLs per product or category.

- Monitor Google AI Overviews supporting links, ChatGPT Search citations, and Perplexity answers weekly; log query, engine, timestamp, and link details.

- Record sentiment and accuracy using consistent tags; flag issues for editorial updates.

- Align findings to index status, snippet eligibility, and structured data on each page; remediate blockers.
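To turn weekly checks into a trend you can report, roll each engine’s observations into counts of linked citations, unlinked mentions and absences. The tool-agnostic sketch below is not how Geneo or any other product computes its metrics. The `Observation` shape and the `citation_rate` definition (linked citations divided by queries checked) are assumptions made for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Observation(NamedTuple):
    engine: str
    query: str
    mentioned: bool  # brand named anywhere in the answer
    linked: bool     # one of your URLs shown as a citation or supporting link


def weekly_summary(observations):
    """Per engine: how many checked queries produced a link, an unlinked mention, or neither."""
    counts = defaultdict(lambda: {"checked": 0, "linked": 0, "unlinked": 0, "absent": 0})
    for obs in observations:
        c = counts[obs.engine]
        c["checked"] += 1
        if obs.linked:
            c["linked"] += 1
        elif obs.mentioned:
            c["unlinked"] += 1
        else:
            c["absent"] += 1
    return {
        engine: {**c, "citation_rate": round(c["linked"] / c["checked"], 2)}
        for engine, c in counts.items()
    }


if __name__ == "__main__":
    week = [
        Observation("perplexity", "q1", mentioned=True, linked=True),
        Observation("perplexity", "q2", mentioned=True, linked=False),
        Observation("perplexity", "q3", mentioned=False, linked=False),
        Observation("chatgpt_search", "q1", mentioned=False, linked=False),
    ]
    print(weekly_summary(week))
```

Unlinked mentions are worth separating from absences. A mention without a link often points to a retrievability or eligibility issue on your page rather than a relevance gap.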

Geneo supports multi-engine monitoring and evidence capture—query-level tracking, engine-specific citation logs, and sentiment tagging—to help teams operationalize this workflow.

A pragmatic wrap: Eligibility first, monitoring always

Will selection mechanics change? Yes—these systems evolve. But the fundamentals hold: eligibility (indexable, snippet-eligible content), retrieval-friendly structure, and trustworthy writing. Build a monitoring habit and keep iterating.

If you’re ready to make AI citations measurable across Google, ChatGPT, and Perplexity, Geneo can centralize tracking and evidence logging for your brand teams. No hype, just a practical way to see what’s working and fix what isn’t.