GEO Success Stories 2025: How Brands Win AI Search Visibility

Discover 2025 GEO best practices powering brands in AI search. Practitioner case studies, actionable dashboards, KPI frameworks, and sentiment tracking for leaders.

AI-driven answers now sit in front of traditional blue links. When ChatGPT, Perplexity, or Google’s AI Overview summarizes a query, your brand either appears in the answer—or it’s invisible. Generative Engine Optimization (GEO) is how teams earn citations, positive sentiment, and downstream conversions inside these answers. Let’s dig in.

GEO vs. SEO vs. AEO: What’s different—and why it matters

Traditional SEO still helps, but AI answer engines add new factors: how credible a source appears to an LLM, how well content is structured for context, and how the brand is framed inside summaries. Think of SEO as optimizing the library catalog, AEO (Answer Engine Optimization) as shaping the librarian’s reply, and GEO as influencing how a team of expert librarians (multiple AI systems) cites and frames your brand across contexts.

| Practice | Primary goal | Where it shows | Core signals | Example KPIs |
|---|---|---|---|---|
| SEO | Rank web pages | SERP blue links | Technical health, content relevance, backlinks | Organic clicks, impressions, CTR |
| AEO | Earn direct answer placement | Single-engine answer modules | Entity clarity, schema, concise Q&A content | Answer inclusion rate, featured answer share |
| GEO | Win citations and sentiment across multiple AI engines | ChatGPT, Perplexity, Google AI Overview | Credible sources, clean facts, brand mentions, sentiment, multi-format proofs | AI citations, visibility score, sentiment index, assisted conversions |

Case snapshots: How brands earned AI search wins in 2025

The strongest proof comes from practitioner-led stories that show tactics and measurable changes.

- Xponent21 documented a hands-on approach to engineering top positions in AI-led results, detailing content structuring, entity reinforcement, and validation cycles in their AI SEO case study (2025). Their playbook emphasized precise question-led content, verifiable sources, and iterative testing in answer engines, elements that consistently drive GEO citations.

- Conductor’s customers highlighted methods that align with AEO/GEO (tight intent matching, schema discipline, and answer-ready content) through its award program, summarized in Conductor’s “Searchies” winners overview. While results vary by brand, the shared pattern is clear: content designed for answers, not just pages.

- Contently’s tool review explored the real stacks teams use to operationalize GEO, including auditing, citation tracking, and testing workflows. See Contently’s 2025 roundup of top GEO tools for how marketers assemble platforms to measure and improve AI visibility.

- Alpha P Tech collected real-world GEO examples with step-by-step frameworks (entity mapping, source standardization, and multi-engine validation) in their case study series (2025). Practitioners can mirror these steps to shift from “hope” to repeatable wins.

These examples share a playbook: structure content around explicit questions, cite authoritative sources, reinforce entities, and validate how AI answers render your brand. Do you see your current content doing all four?

The GEO KPI framework marketers actually use

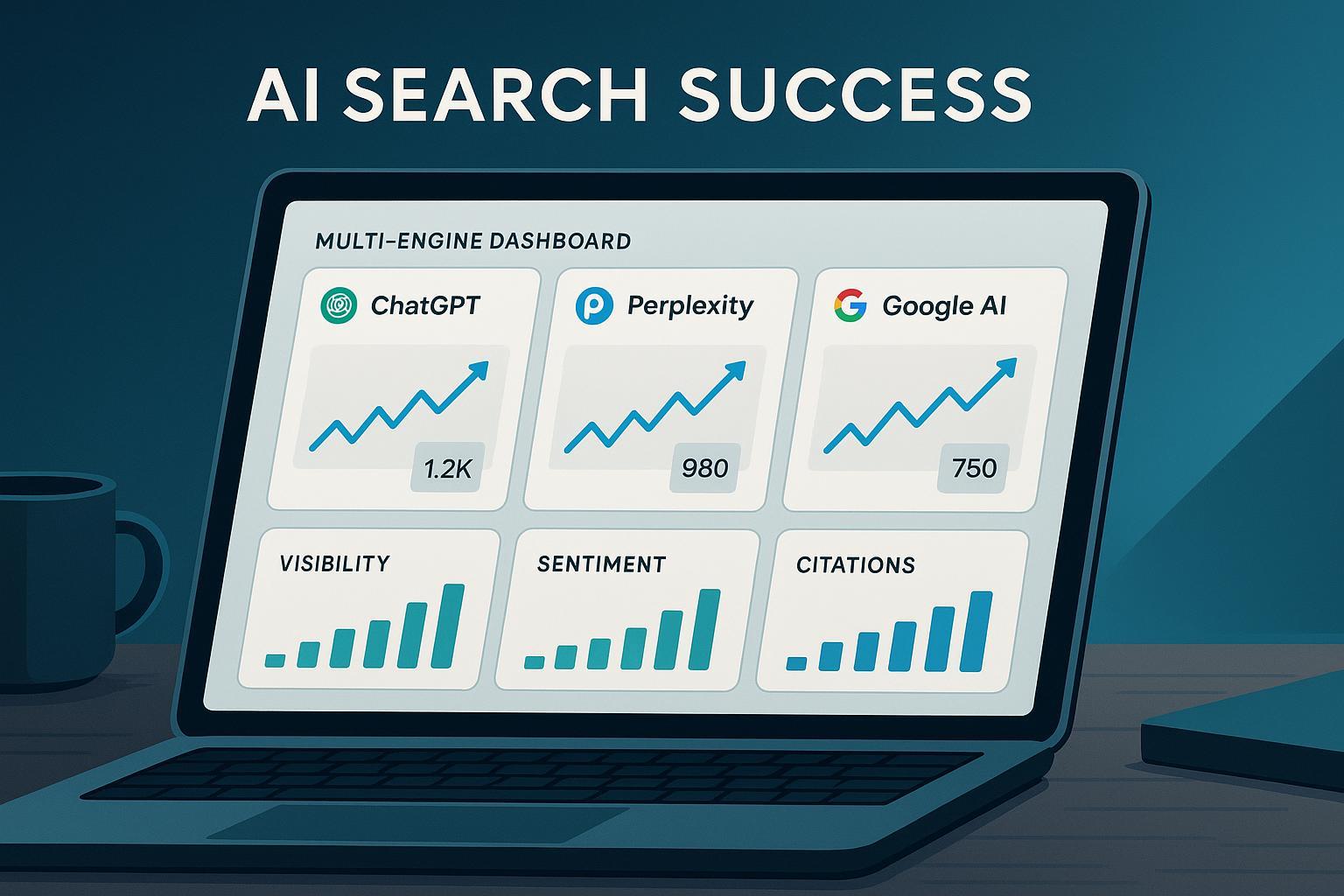

Winning AI search requires KPIs that map to answer engines, not just web pages. A practical set:

- Visibility: Share of queries where your brand appears in ChatGPT, Perplexity, and Google AI Overview answers.

- Citations: The number and quality of brand mentions and linked references inside AI answers.

- Sentiment: Polarity of how AI frames your brand (positive, neutral, negative), tracked over time.

- Accuracy and relevance: Whether answers describe your products/services correctly and match user intent.

- Assisted conversions: Downstream actions correlated with AI visibility (e.g., demo requests, sign-ups).
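
To make the first three KPIs concrete, here is a minimal Python sketch of how they might be computed from a log of observed AI answers. Everything in it is an assumption for illustration: the `AnswerLog` record, its field names, the sample rows, and the choice to score sentiment as +1/0/-1 and average it over answers that mention the brand. Your own definitions may differ.

```python
from dataclasses import dataclass

# Hypothetical record of one observed AI answer; field names are illustrative.
@dataclass
class AnswerLog:
    engine: str            # e.g. "ChatGPT", "Perplexity", "Google AI Overview"
    query: str
    brand_mentioned: bool  # did the brand appear in the answer at all?
    linked_citations: int  # links to your own pages inside the answer
    sentiment: str         # "positive", "neutral", or "negative"

# Assumed scoring scheme: one simple way to turn polarity into a number.
SENTIMENT_SCORE = {"positive": 1, "neutral": 0, "negative": -1}

def visibility_share(logs):
    """Fraction of logged answers in which the brand appears."""
    if not logs:
        return 0.0
    return sum(log.brand_mentioned for log in logs) / len(logs)

def citation_count(logs):
    """Total linked references to the brand across all logged answers."""
    return sum(log.linked_citations for log in logs)

def sentiment_index(logs):
    """Mean sentiment score over answers that mention the brand, in [-1, 1]."""
    mentioned = [log for log in logs if log.brand_mentioned]
    if not mentioned:
        return 0.0
    return sum(SENTIMENT_SCORE[log.sentiment] for log in mentioned) / len(mentioned)

# Made-up sample rows, not real measurements.
logs = [
    AnswerLog("ChatGPT", "best crm for startups", True, 1, "positive"),
    AnswerLog("Perplexity", "best crm for startups", True, 2, "neutral"),
    AnswerLog("Google AI Overview", "best crm for startups", False, 0, "neutral"),
    AnswerLog("ChatGPT", "example crm pricing", True, 0, "negative"),
]

print(f"visibility: {visibility_share(logs):.2f}")
print(f"citations:  {citation_count(logs)}")
print(f"sentiment:  {sentiment_index(logs):+.2f}")
```

Accuracy and assisted conversions are left out on purpose: the first needs human or model review of each answer, and the second depends on your analytics setup.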

For deeper measurement ideas and a working cadence, see Geneo’s guide “AI Search KPI Frameworks for Visibility, Sentiment, Conversion (2025)” and our comparison “Traditional SEO vs GEO: A 2025 Marketer’s View.”

A repeatable workflow for GEO programs

Here’s a field-tested flow you can adapt across industries:

- Define queries and intents: Build a list of answer-focused questions (core, comparative, objection-handling). Map engines: ChatGPT, Perplexity, Google AI Overview.

- Audit current answers: Check what each engine says today. Note citations, tone, and gaps.

- Structure “answer-first” content: Create Q&A pages, comparison sheets, and proofs (data, customer quotes, third-party links). Keep facts clean.

- Reinforce entities and references: Ensure schema, consistent naming, and canonical links. Publish source-backed facts with date/context.

- Validate across engines: Test prompts and queries; capture examples; update content where answers fall short.

- Track KPIs: Visibility, citations, sentiment, and accuracy; set monthly reviews.

- Iterate with playbooks: Expand query sets, add multimedia proofs, and refine copy to improve tone and clarity.
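
For the "structure answer-first content" and "reinforce entities" steps, one common way to express Q&A content as structured data is schema.org `FAQPage` markup in JSON-LD. The sketch below builds that markup in Python. The helper name `faq_jsonld`, the brand "Example Co", and the sample question are hypothetical, and whether a given AI engine actually reads this markup is an assumption, not something the sources above confirm.

```python
import json

def faq_jsonld(qa_pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }

# Placeholder content; replace with your own verified, dated facts.
pairs = [
    (
        "What does Example Co do?",
        "Example Co provides project management software for agencies.",
    ),
]

markup = faq_jsonld(pairs)

# Paste the output into a <script type="application/ld+json"> tag on the Q&A page.
print(json.dumps(markup, indent=2))
```

Generating the markup from the same Q&A list you use for auditing keeps page copy and structured data from drifting apart.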

To measure answer quality more precisely, marketers can adapt LLM evaluation concepts. Geneo’s primer “LLMO Metrics for accuracy, relevance, personalization” outlines practical dimensions you can score for ongoing QA.

Sentiment optimization: Making sure answers favor your brand

Even when you appear in an answer, the framing matters. A dismissive or inaccurate summary can undo months of work. Practical steps:

- Clarify differentiators with plain language and evidence (customer quotes, analyst notes).

- Publish trust signals on canonical pages—pricing clarity, policies, awards—so engines can cite them.

- Address common objections with calm, verified explanations.

- Use consistent names, product terms, and structured data so engines don’t mix entities.

- Monitor sentiment shifts and update copy where tone skews negative.

Think of sentiment like the “voice” of the librarian explaining your brand. You want a clear, fair, and confident answer, never a hedging or vague one.

Practical example (disclosure): Setting up an AI search visibility dashboard

Disclosure: The following example references Geneo, a platform focused on AI search visibility.

A lightweight dashboard helps teams see where they stand and what to fix next:

- Connect tracked queries: import your Q&A list for ChatGPT, Perplexity, and Google AI Overview.

- Log current answers: record whether your brand is cited, how, and with which links.

- Score sentiment and accuracy: tag answers positive/neutral/negative; flag misstatements.

- Set alerts: watch for brand mentions gained/lost and notable tone changes.

- Review monthly: compare trend lines and plan content updates.
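
The "set alerts" step can be sketched as a comparison between two snapshots of logged answers. This is a minimal illustration, not how Geneo or any other platform implements alerts: the snapshot shape (a dict keyed by engine and query), the `diff_snapshots` helper, and the sample data are all assumptions.

```python
def diff_snapshots(previous, current):
    """Compare two snapshots and list mention and tone changes.

    Each snapshot maps (engine, query) -> {"cited": bool, "sentiment": str}.
    """
    alerts = []
    for key in sorted(previous.keys() | current.keys()):
        before = previous.get(key)
        after = current.get(key)
        was_cited = bool(before and before["cited"])
        is_cited = bool(after and after["cited"])
        if is_cited and not was_cited:
            alerts.append((key, "mention gained"))
        elif was_cited and not is_cited:
            alerts.append((key, "mention lost"))
        elif was_cited and is_cited and before["sentiment"] != after["sentiment"]:
            change = f"tone changed: {before['sentiment']} -> {after['sentiment']}"
            alerts.append((key, change))
    return alerts

# Made-up snapshots for one tracked query across three engines.
query = "best crm for startups"
last_month = {
    ("ChatGPT", query): {"cited": True, "sentiment": "positive"},
    ("Perplexity", query): {"cited": False, "sentiment": "neutral"},
    ("Google AI Overview", query): {"cited": True, "sentiment": "neutral"},
}
this_month = {
    ("ChatGPT", query): {"cited": True, "sentiment": "negative"},
    ("Perplexity", query): {"cited": True, "sentiment": "neutral"},
    ("Google AI Overview", query): {"cited": False, "sentiment": "neutral"},
}

for (engine, q), message in diff_snapshots(last_month, this_month):
    print(f"{engine} | {q} | {message}")
```

Tone changes are only reported when the brand is cited in both snapshots, so a lost mention is not also counted as a sentiment shift.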

If you want to test-drive this workflow, you can explore the Geneo platform, which supports multi-engine tracking, sentiment analysis, and historical query comparisons.

Putting it all together: Your cross-engine checklist

Use this as your weekly QA checklist (the final item runs on a monthly cadence):

- Identify 25–50 priority questions; include “why choose,” “best for,” and competitor comparisons.

- Publish answer-first pages with clean facts, schema, and canonical references.

- Validate rendering in ChatGPT, Perplexity, and Google AI Overview; capture screenshots.

- Track visibility, citations, sentiment, and accuracy; adjust copy and sources where needed.

- Run monthly sprints to add proofs (case quotes, awards, third-party reviews) and expand queries.
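
The first and third checklist items can be turned into a simple test matrix: generate the priority questions from a few patterns, then pair each one with every engine you validate against. The sketch below assumes three phrasing patterns taken from the checklist; the `build_queries` helper, the brand, audiences, and competitor names are hypothetical placeholders.

```python
from itertools import product

# Engines named in this article.
ENGINES = ["ChatGPT", "Perplexity", "Google AI Overview"]

def build_queries(brand, category, audiences, competitors):
    """Generate priority questions from three assumed phrasing patterns."""
    queries = [f"why choose {brand}"]
    queries += [f"best {category} for {audience}" for audience in audiences]
    queries += [f"{brand} vs {competitor}" for competitor in competitors]
    return queries

# Placeholder inputs; substitute your own brand, segments, and rivals.
queries = build_queries(
    brand="Example Co",
    category="project management tool",
    audiences=["agencies", "startups"],
    competitors=["Rival A", "Rival B"],
)

# Every (engine, query) pair is one answer to check and log.
test_matrix = list(product(ENGINES, queries))

print(f"{len(queries)} queries x {len(ENGINES)} engines = {len(test_matrix)} checks")
for engine, q in test_matrix[:3]:
    print(f"{engine}: {q}")
```

Template-generated questions are only a starting point; real customer phrasing from sales calls and support tickets usually belongs in the list too.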

Want more detailed playbooks? Compare frameworks in Traditional SEO vs GEO (2025) and operationalize KPIs via AI Search KPI Frameworks.

Sources and further reading

- Practitioner case playbook: Xponent21 AI SEO case study (2025)

- Award program methods: Conductor’s “Searchies” winners overview

- Tool-stack roundup: Contently’s top GEO tools (2025)

- Framework examples: Alpha P Tech real-world GEO cases (2025)