25 Essential GEO Resources for Learners in 2025 | Top Reading List

Unlock 25 top GEO resources for 2025—guides, playbooks, and research to master Generative Engine Optimization. Stay ahead and start optimizing now!

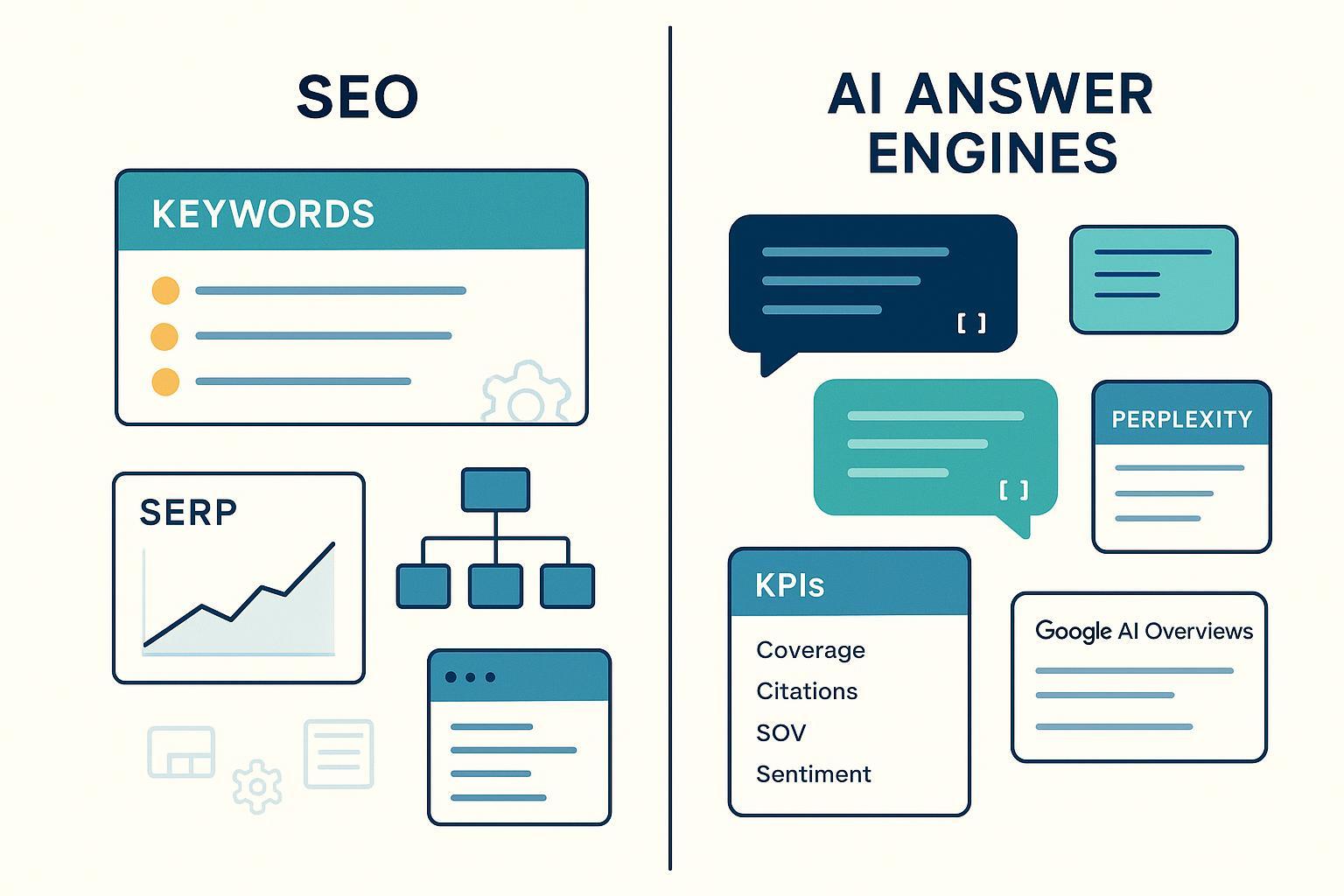

If you’re shifting from classic SEO to Generative Engine Optimization (GEO), you don’t need a thousand bookmarks—you need a reliable stack you can use this quarter. This curated list focuses on current (2024–2025) resources that help you earn citations in AI answers, structure content for model consumption, track AI visibility, and report outcomes stakeholders actually care about. Think of it as a syllabus you can act on right away.

Start Here: Foundational Overviews

What is generative engine optimization (GEO)? — Search Engine Land (2024; updated 2025)

- Positioning: The clearest mainstream definition of GEO and how it differs from SEO.

- Why read: It explains how to research AI answer structures, use schema for clarity, and track AI referrals/citations with examples and up-to-date guidance. See: Search Engine Land’s “What is generative engine optimization (GEO)?” (2024/2025).

- Best for / Not for: Best for teams aligning on terminology; not for deep technical tactics.
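To make "track AI referrals" concrete, here is a minimal sketch that labels referrer URLs by AI engine. The hostname table and the function names are our own assumptions, not something taken from the Search Engine Land guide; check the hosts against what actually shows up in your analytics.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed mapping of referrer hostnames to engine labels (hypothetical and
# incomplete); extend or correct it from your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Return an engine label if the referrer host is in the table, else None."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]  # treat www.perplexity.ai like perplexity.ai
    return AI_REFERRER_HOSTS.get(host)


def count_ai_referrals(referrers):
    """Count visits per engine, ignoring referrers that are not in the table."""
    counts = {}
    for url in referrers:
        engine = classify_referrer(url)
        if engine is not None:
            counts[engine] = counts.get(engine, 0) + 1
    return counts
```

Feed `count_ai_referrals` the referrer column from a log export to get a rough per-engine tally. Visits without a referrer header will not be counted, so treat the numbers as a lower bound.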

Generative Engine Optimization (GEO): What to Know in 2025 — Walker Sands (2025)

- Positioning: Agency perspective on brand representation inside AI answers.

- Why read: Covers entity clarity, citations, and monitoring—useful framing for execs and clients. Link: Walker Sands: “GEO: What to Know in 2025”.

- Best for / Not for: Best for stakeholder education; not for code-level implementation.

Generative Engine Optimization (GEO): Is It the New SEO? — Built In (2025)

- Positioning: Accessible primer that contrasts GEO’s “earn citations in AI answers” with SEO’s “earn clicks from SERPs.”

- Why read: Good examples and straightforward language. Link: Built In’s “Generative Engine Optimization (GEO): Is It the New SEO?” (2025).

- Best for / Not for: Best for newcomers; not for advanced practitioners.

Practitioner Guides & Playbooks (2024–2025)

10-Step GEO Framework — TryProfound (2025)

- Positioning: A stepwise, auditable system you can run in a quarter.

- Why read: Maps objectives to KPIs, includes prompt mapping, AI-friendly summaries (TL;DR, bullets, schema), and quarterly iteration. See TryProfound’s “Generative Engine Optimization (GEO) Guide 2025”.

- Best for / Not for: Best for program owners; not for readers who want strictly vendor-neutral guidance (the guide discloses ties to its own tooling).

Generative Engine Optimization (GEO): How-To — Backlinko (2025)

- Positioning: A structured, example-rich action plan for content and distribution.

- Why read: Emphasizes building mentions/co-citations, multi-platform presence, and niche source checks, plus tracking AI citations. Link: Backlinko’s “Generative Engine Optimization (GEO)” (2025).

- Best for / Not for: Best for content teams; not for heavy technical deep dives.

The Hidden Playbook for Generative Engine Optimization — Torro (2025)

- Positioning: A concise checklist for quick wins.

- Why read: Focuses on citation audits, sitewide structured data, semantic coverage, and tracking—strong for getting momentum fast. Link: Torro’s “Hidden Playbook for GEO” (2025).

- Best for / Not for: Best for time-strapped leads; not for comprehensive strategy.

Generative Engine Optimization: Explanation & Strategy — FirstPageSage (2025)

- Positioning: Strategy framing with KPIs and CAC considerations.

- Why read: Ties authoritative content and structured data to business outcomes and co-citations. Link: FirstPageSage’s “GEO: Explanation & Strategy” (2025).

- Best for / Not for: Best for exec buy-in; not for hands-on configuration.

Technical Deep Dives: Schema, Entities, Crawl Directives

Structured data documentation — Google Developers (updated 2025)

- Positioning: Canonical guidance for JSON-LD patterns and validation.

- Why read: The Google Developers structured data page cited here (2025) focuses on Events, but it models current JSON-LD guidance, validation, and eligibility logic that you can apply across types (Article, FAQPage, HowTo, Organization, Product, Review). Validate with the Rich Results Test, and remember that rich results eligibility ≠ AI citation guarantee.

- Best for / Not for: Best for technical implementers; not for strategic planning.
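Before pasting a page into the Rich Results Test, a quick local check can catch malformed JSON-LD. The sketch below uses only the Python standard library; the class and function names are hypothetical, and it checks syntax and two basic keys only, so it is no substitute for Google's validator.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []  # successfully parsed JSON-LD values
        self.errors = []  # parse error messages for malformed blocks

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError as exc:
                self.errors.append(str(exc))


def extract_json_ld(html):
    """Return (parsed_blocks, error_messages) for one HTML document."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.blocks, parser.errors


def missing_keys(block, required=("@context", "@type")):
    """List the required top-level keys that a JSON-LD object lacks."""
    return [key for key in required if key not in block]
```

Run `extract_json_ld` on a saved copy of a page, then call `missing_keys` on each parsed block. An empty error list only means the JSON parses; eligibility rules still need the official tool.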

Schema Markup: What It Is and Why It Matters — Backlinko (2025)

- Positioning: Clear, up-to-date schema implementation guide.

- Why read: Advocates JSON-LD with @id-connected entities, internal linking between Organization/Person/Product, and practical validation steps. Link: Backlinko’s “Schema Markup Guide” (2025).

- Best for / Not for: Best for dev/SEO collaboration; not for policy discussions.
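As an illustration of "@id-connected entities", the sketch below builds a small JSON-LD `@graph` in which an Article points to its author and publisher by `@id`, then checks that every reference resolves. The domain, names, and helper functions are placeholders we made up; this is not Backlinko's code.

```python
import json

BASE = "https://example.com"  # placeholder domain, replace with your own


def build_entity_graph():
    """Build a JSON-LD graph whose nodes reference each other by @id."""
    org_id = f"{BASE}/#organization"
    person_id = f"{BASE}/about/jane-doe#person"
    article_id = f"{BASE}/blog/sample-post#article"
    return {
        "@context": "https://schema.org",
        "@graph": [
            {"@type": "Organization", "@id": org_id,
             "name": "Example Co", "url": BASE},
            {"@type": "Person", "@id": person_id,
             "name": "Jane Doe", "worksFor": {"@id": org_id}},
            {"@type": "Article", "@id": article_id,
             "headline": "Sample post",
             "author": {"@id": person_id},
             "publisher": {"@id": org_id}},
        ],
    }


def dangling_references(doc):
    """Return @id values that are referenced but never defined in the graph."""
    defined = {node["@id"] for node in doc["@graph"] if "@id" in node}
    referenced = set()

    def walk(value):
        if isinstance(value, dict):
            if set(value) == {"@id"}:  # a bare {"@id": ...} is a reference
                referenced.add(value["@id"])
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for item in value:
                walk(item)

    for node in doc["@graph"]:
        walk(node)
    return sorted(referenced - defined)


if __name__ == "__main__":
    print(json.dumps(build_entity_graph(), indent=2))
```

The printed JSON can go inside a `<script type="application/ld+json">` tag. Defining each entity once and referencing it by `@id` keeps Organization and Person details from drifting apart across pages.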

GEO Technical Foundations (schema, architecture, llms.txt) — iFactory (2025)

- Positioning: Practical technical patterns with llms.txt suggestions.

- Why read: Promotes consistent JSON-LD, site performance, and a thoughtful llms.txt layout (note: experimental; no official standard). Link: iFactory’s “GEO Technical Foundations” (2025).

- Best for / Not for: Best for teams managing both content and infra; not for academic research.
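For a sense of what an llms.txt file might look like, here is a small generator. It loosely follows the markdown layout of the community llms.txt proposal (an H1 title, a blockquote summary, then H2 sections of links); that layout is our assumption, not iFactory's template, and no AI engine is confirmed to read the file.

```python
def render_llms_txt(title, summary, sections):
    """Render an experimental llms.txt document as a markdown string.

    sections maps a heading to a list of (link_name, url, note) tuples;
    note may be an empty string.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            entry = f"- [{name}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # All names and URLs below are placeholders.
    print(render_llms_txt(
        "Example Co",
        "Example Co sells widgets and publishes guides about widget care.",
        {
            "Guides": [
                ("Widget care", "https://example.com/guides/care", "maintenance basics"),
            ],
            "Company": [
                ("About", "https://example.com/about", ""),
            ],
        },
    ))
```

Keep the link list short and point at your most citable pages. Because the format is experimental, treat the file as a low-cost bet and not as a ranking lever.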

Structured Data in 2024: Key Patterns — Search Engine Journal (2024)

- Positioning: Trend analysis of structured data adoption and knowledge graph usage.

- Why read: Aligns your schema roadmap with broader adoption patterns and emerging types. Link: Search Engine Journal’s “Structured Data in 2024: Key Patterns” (2024).

- Best for / Not for: Best for planning and prioritization; not for code snippets.

Courses & Workshops

Optimize Pages for AI Search with GEO/AEO (Live Cohort) — CXL Institute (2025)

- Positioning: A reputable cohort course focused on AI search visibility.

- Why read/watch: Topics include structuring citable content, tracking AI citations, and contrasting AI user behavior with classic SERPs. Pricing: from ~$499 (subject to change). See CXL Institute’s “Optimize pages for AI search with AEO/GEO” (2025).

- Best for / Not for: Best for practitioners wanting guided assignments; not for those seeking a free option.

GEO Workshops / Consulting — TryProfound (2025)

- Positioning: Vendor-led enablement focused on prompt mapping and analytics.

- Why read/watch: Useful for teams that want to operationalize a framework with external facilitation. Pricing varies (contact sales). Reference framework: TryProfound’s “GEO Guide 2025”.

- Best for / Not for: Best for orgs with budget; not for self-serve learners.

Research & Industry Tracking

GEO: Generative Engine Optimization (paper + GEO-bench) — arXiv (preprint 2023; widely referenced 2024–2025)

- Positioning: The most cited neutral baseline for GEO testing.

- Why read: Introduces GEO-bench, a benchmark of 10,000 queries, and visibility metrics such as Position-Adjusted Word Count; useful for designing experiments and thinking about evaluation. Link: arXiv: “GEO: Generative Engine Optimization” (2023 preprint).

- Best for / Not for: Best for method-minded teams; not for light reading.
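To get a feel for a position-adjusted word count style metric, the sketch below scores each cited source by the words in the sentences that cite it, down-weighted the later a sentence appears. The exponential decay, the equal split between co-cited sources, and the normalization by total answer length are our simplifying assumptions; consult the paper for the exact definition before reporting numbers.

```python
import math
from collections import defaultdict


def position_adjusted_word_share(sentences):
    """Score sources in one AI answer.

    sentences: list of (sentence_text, [source_ids]) in answer order.
    Returns {source_id: score}; higher means more, and earlier, cited text.
    """
    n = len(sentences)
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return {}

    scores = defaultdict(float)
    for position, (text, sources) in enumerate(sentences):
        if not sources:
            continue  # uncited sentences add to the total but credit no source
        weight = math.exp(-position / n)  # assumed decay: earlier counts more
        words = len(text.split())
        for source in sources:
            # Assumption: co-cited sources split the sentence's credit equally.
            scores[source] += words * weight / len(sources)

    return {source: score / total_words for source, score in scores.items()}
```

To use it, split an AI answer into sentences, note which sources each sentence cites, and compare your domain's score before and after a content change on the same prompt.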

Technology & Media Outlook 2025 — Activate Consulting (2024)

- Positioning: Macro adoption signals for generative AI in search behavior.

- Why read: Forecasts growth in generative platforms as a starting point for information discovery, which gives you market context for your GEO investment. Link: Activate Consulting’s “Technology & Media Outlook 2025” (2024).

- Best for / Not for: Best for market context slides; not for how-to.

Artificial Intelligence Index Report — Stanford HAI (2025)

- Positioning: Broad, data-rich overview of AI’s usage and impact.

- Why read: Use it to frame executive narratives around AI reliance and information-seeking behavior. Link: Stanford HAI’s “AI Index Report 2025”.

- Best for / Not for: Best for executive decks; not for GEO tactics.

awesome-generative-engine-optimization — GitHub (updated 2025)

- Positioning: Curated repository of GEO links.

- Why read: Helpful for discovery and keeping current, though curation quality varies. Link: GitHub’s “awesome-generative-engine-optimization” (2025).

- Best for / Not for: Best for ongoing exploration; not a vetted curriculum.

GEO Best Practices Guide — Orange 142 (2024–2025)

- Positioning: Tactics aligned with agency execution.

- Why read: A pragmatic checklist you can adapt quickly; complements the playbooks above. Link: Orange 142’s “GEO Best Practices Guide” (2024–2025).

- Best for / Not for: Best for fast-start teams; not a deep research source.

Tools & Internal Resources (neutral module)

- Also useful for monitoring your GEO progress: Geneo, an AI visibility platform (disclosure: Geneo is our product). It tracks brand mentions, AI citations, sentiment, and multi-engine visibility so you can check whether the resources in this list move your metrics.

- Concept explainer to pair with metrics: What Is AI Visibility? Brand Exposure in AI Search Explained (Geneo, 2025) — clarifies AI visibility vs SEO visibility, including how to think about citations and sentiment.

How We Chose These Resources (methodology)

We evaluated resources against five criteria:

- Authority and independence (peer review, recognized outlet): 30%

- Practical applicability (steps, examples, templates): 25%

- Recency (2024–2025 updates): 20%

- Evidence quality (citations, disclosed methods): 15%

- Accessibility (free/low friction; clarity): 10%

We favored original/primary sources and publisher pages, limited vendor claims unless auditable, and avoided stale posts. Where concepts are emerging (for example, llms.txt), we label them experimental rather than prescriptive.
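If you want to reuse this rubric on your own candidate resources, the weighting can be sketched as follows. The weights come from the list above; the 0 to 5 rating scale and the dictionary keys are our assumptions for illustration.

```python
# Weights from the methodology above; they sum to 1.0.
WEIGHTS = {
    "authority": 0.30,
    "applicability": 0.25,
    "recency": 0.20,
    "evidence": 0.15,
    "accessibility": 0.10,
}


def weighted_score(ratings):
    """Combine per-criterion ratings (assumed 0-5 scale) into one score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


if __name__ == "__main__":
    # Hypothetical ratings for one resource.
    example = {
        "authority": 5,
        "applicability": 4,
        "recency": 3,
        "evidence": 2,
        "accessibility": 1,
    }
    print(round(weighted_score(example), 2))
```

Scoring a shortlist this way makes trade-offs visible, for example a highly practical vendor guide that loses points on independence.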

30-Day Action Plan to Use This Stack

- Days 1–3: Align on definitions. Read the foundational explainers from Search Engine Land, Walker Sands, and Built In. Draft your working definition of GEO, success metrics, and AI engines to prioritize.

- Days 4–7: Audit your AI visibility. Run a citation/sentiment spot-check across Gemini, ChatGPT, and Perplexity. Document which sources those answers cite for top prompts in your category.

- Days 8–14: Implement technical basics. Add or refine JSON-LD with @id-connected entities (Organization, Person, Product, Article) using Google’s docs and Backlinko’s schema guide. Validate with Google’s Rich Results Test. Create or review an experimental llms.txt following iFactory’s guidance.

- Days 15–20: Execute a focused play. Pick one TryProfound or Torro checklist and apply it to 3–5 high-potential pages. Structure AI-friendly summaries (TL;DR, bullet answers, FAQs) and tighten citations to authoritative sources.

- Days 21–25: Measure. Re-run AI answer checks. Track citations gained/lost, sentiment shifts, and any AI referral traffic. If you need a KPI framework for evaluation dimensions, see the “AI visibility” explainer above.

- Days 26–30: Institutionalize. Capture what worked into a repeatable workflow, set a quarterly review cadence, and plan one training slot (e.g., CXL’s cohort) for your team.
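The audit (Days 4–7) and re-measurement (Days 21–25) steps can be compared with a small script. This sketch assumes you log, by hand or by export, the URLs each engine cites for each prompt; the snapshot structure and function names are hypothetical.

```python
from urllib.parse import urlparse


def cited_domains(urls):
    """Normalize cited URLs to a set of bare hostnames (without www.)."""
    domains = set()
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host:
            domains.add(host)
    return domains


def diff_citations(before, after, own_domain):
    """Compare two audit snapshots for one domain.

    before/after: {(engine, prompt): [cited URLs]}.
    Returns (gained, lost) lists of (engine, prompt) keys.
    """
    gained, lost = [], []
    for key in sorted(set(before) | set(after)):
        was_cited = own_domain in cited_domains(before.get(key, []))
        is_cited = own_domain in cited_domains(after.get(key, []))
        if is_cited and not was_cited:
            gained.append(key)
        elif was_cited and not is_cited:
            lost.append(key)
    return gained, lost
```

AI answers vary between runs, so a single gained or lost citation is weak evidence. Repeat each prompt a few times per snapshot before drawing conclusions.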

Notes, Caveats, and Next Steps

- Rich results vs AI citations: Implementing structured data increases clarity but does not guarantee inclusion in AI answers. Treat it as necessary but not sufficient.

- Evidence mix: Where vendor content appears, it’s because the methods are transparent and actionable; use your own testing to confirm fit.

- Research gaps: Neutral, data-heavy comparisons of AI citation rates across engines are still limited. The arXiv GEO paper (2023 preprint), which introduces GEO-bench, offers methods you can adapt for internal testing.

Want a simple way to track whether your next sprint actually shows up in AI answers? Consider adding a lightweight monitoring layer so you can see citations and sentiment across engines over time—start with your top 25 prompts and expand from there.