How GEO Works in AI Search: Latest 2025 Analysis & Citation Trends

Discover how Generative Engine Optimization (GEO) shapes AI search in 2025, with data-backed strategies for Google, ChatGPT, and Perplexity.

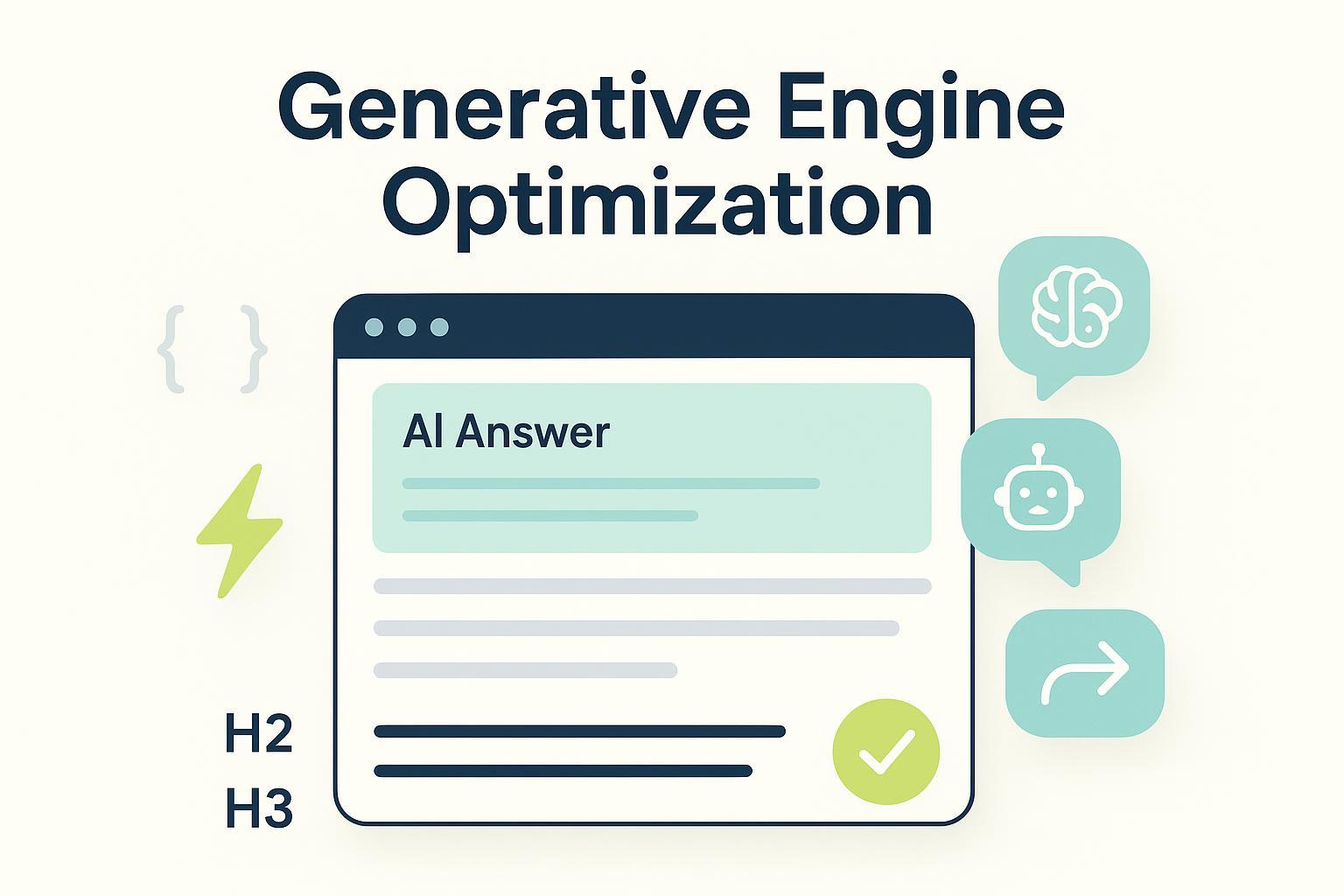

If your organic clicks have slipped while AI answers take center stage, you’re not imagining it. Answer engines—Google’s AI Overviews, ChatGPT’s Search/Browse, and Perplexity—now synthesize results into a single response, often with supporting links. Generative Engine Optimization (GEO) is the discipline of making your content easy for these systems to find, interpret, and confidently cite.

What GEO Is—and How It Diverges from SEO

GEO focuses on inclusion and citation within AI-generated answers, while traditional SEO centers on ranking pages in SERPs. Practically, GEO emphasizes answer-friendly structures (clear Q&A, concise steps, explicit sources) and entity clarity so models can unambiguously understand who or what you’re describing.

Authoritative explainers align on these points. HubSpot defines GEO as optimizing for AI-powered search and answer engines that synthesize multiple sources, and its Generative engine optimization overview (2025) stresses structured content and consistency for machine interpretation. Search Engine Land’s foundational GEO guide (2024) and Built In’s generative search overview (2025) likewise frame GEO as a complement to SEO, one that aligns content with how LLMs assemble answers.

Think of it this way: SEO wins the blue links; GEO earns the mention inside the answer box. That shifts your working metrics from clicks and CTR toward citations, co-mentions, and sentiment in AI outputs—plus AI-sourced referral traffic.

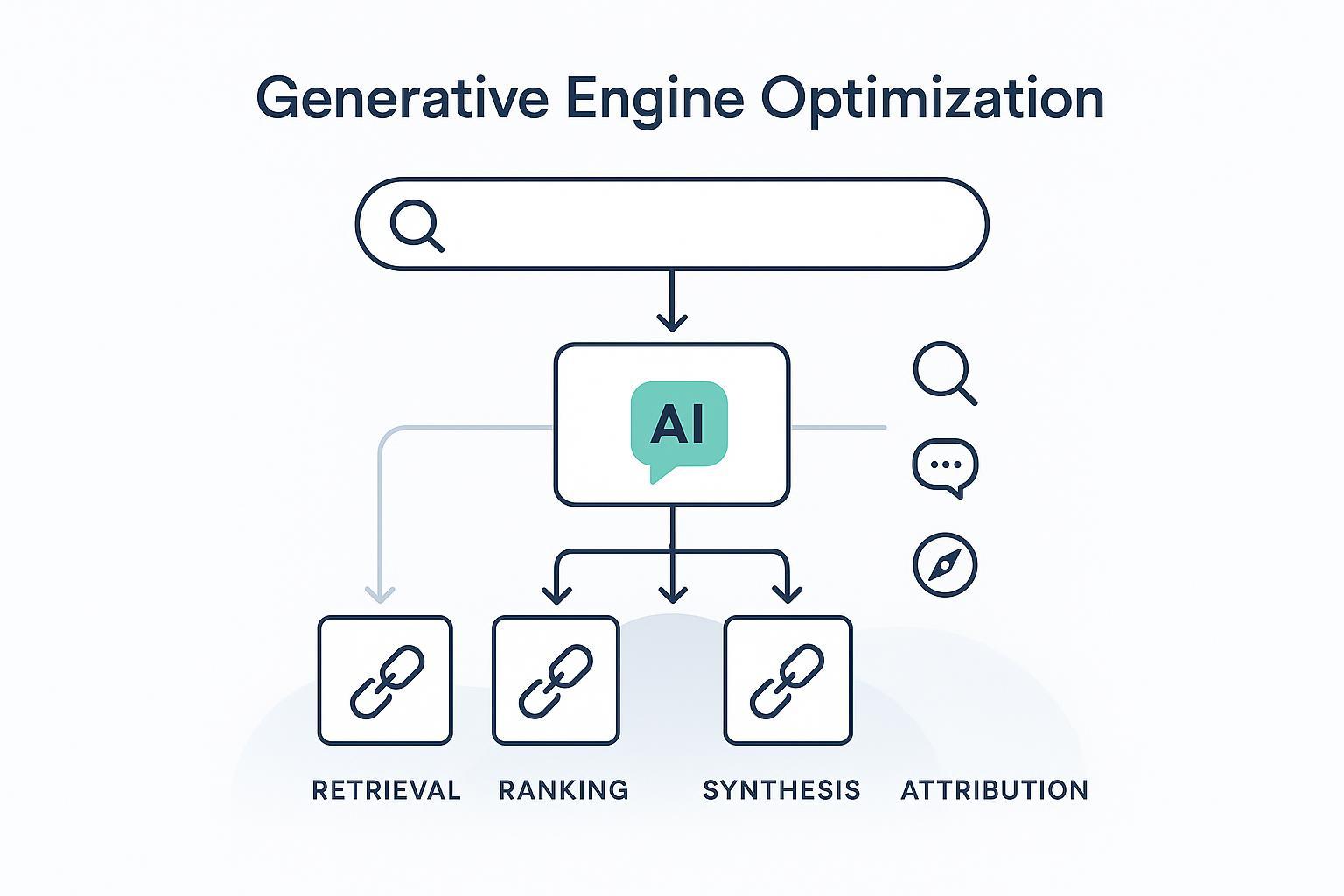

How AI Engines Actually Assemble Answers

Although each platform differs, the general pipeline runs through five stages. First, the engine interprets intent and often expands the query into sub-questions. Second, it retrieves relevant sources from the live web or indexes. Third, it ranks and filters evidence by authority, freshness, semantic coverage, and consistency. Fourth, it synthesizes a response by summarizing or quoting multiple sources. Finally, it attributes supporting links or inline citations.
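To make the five stages concrete, here is a toy sketch in Python. It is not how any of these engines is implemented: the query-expansion template, term-overlap retrieval, the 0.5/0.3/0.2 scoring weights, the `authority` field, and the example URLs are all hypothetical stand-ins, chosen only to show where coverage, authority, and freshness could enter the ranking step.

```python
from dataclasses import dataclass
from datetime import date

STOPWORDS = {"a", "an", "the", "of", "for", "in", "is", "to", "not"}

@dataclass
class Source:
    url: str
    text: str
    authority: float  # hypothetical 0..1 trust score
    published: date

def terms(text: str) -> set[str]:
    # Lowercase, strip trailing punctuation, drop a few stopwords.
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}

def expand(query: str) -> list[str]:
    # Stage 1: naive sub-question expansion (real engines use models, not templates).
    return [query, f"{query} definition", f"{query} examples"]

def retrieve(subqueries: list[str], corpus: list[Source]) -> tuple[list[Source], set[str]]:
    # Stage 2: keep sources sharing at least one term with any sub-question.
    wanted = set().union(*(terms(q) for q in subqueries))
    return [s for s in corpus if terms(s.text) & wanted], wanted

def rank(candidates: list[Source], wanted: set[str], today: date, k: int = 2) -> list[Source]:
    # Stage 3: hypothetical blend of semantic coverage, authority, and freshness.
    def score(s: Source) -> float:
        coverage = len(terms(s.text) & wanted) / len(wanted)
        freshness = 1 / (1 + (today - s.published).days / 365)
        return 0.5 * coverage + 0.3 * s.authority + 0.2 * freshness
    return sorted(candidates, key=score, reverse=True)[:k]

def answer(query: str, corpus: list[Source], today: date) -> tuple[str, list[str]]:
    candidates, wanted = retrieve(expand(query), corpus)
    top = rank(candidates, wanted, today)
    # Stages 4 and 5: stitch snippets together, then attach numbered citations.
    body = " ".join(f"{s.text} [{i}]" for i, s in enumerate(top, 1))
    refs = [f"[{i}] {s.url}" for i, s in enumerate(top, 1)]
    return body, refs

corpus = [
    Source("https://a.example/geo", "GEO structures content for AI answers.", 0.9, date(2025, 6, 1)),
    Source("https://b.example/seo", "SEO ranks pages, not AI answers.", 0.8, date(2024, 1, 1)),
    Source("https://c.example/cooking", "Bake bread at high heat.", 0.5, date(2025, 1, 1)),
]
body, refs = answer("GEO AI answers", corpus, today=date(2025, 7, 1))
print(body)
print(refs)
```

In this toy, the off-topic page is never retrieved, and the page that covers more of the expanded terms with a more recent date is ranked first and cited as [1].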

Google documents AI Overviews and AI features for site owners in its Developers AI Features page (May 2025), while the product blog (May 2024) confirms expanded AI-organized results powered by Gemini. Observational analyses indicate Overviews appear when reliability thresholds are met and can be suppressed for sensitive YMYL queries; see SEOZoom’s overview mechanics analysis (Mar 2025) and Cyber‑Duck’s rollout notes (Feb 2025). Where specifics exceed official docs, treat them as informed observations.

Perplexity is notable for citation-first answers with visible source links. Third-party audits report strong preferences for trusted, fresh domains and broad domain diversity. See Keyword.com’s ranking factors synthesis (Oct 2025) and Profound’s comparative citation patterns (Jun 2025). Because Perplexity provides limited official technical detail, treat granular ranking claims as observed patterns rather than confirmed policy.

OpenAI’s Introducing ChatGPT Search (Oct 2024) confirms improved browsing/search with linked sources, and the ChatGPT release notes (Nov 2025) document ongoing product changes. The consumer products surface citations in the UI; the API does not browse by default, so developers either enable search tooling where the API provides it or implement retrieval and attribution themselves.

A compact view of platform behaviors (late 2024–2025)

| Platform | Browsing/live web | Citation display | Official documentation | Notes |
|---|---|---|---|---|
| Google AI Overviews (Gemini) | Yes; AI Overviews in Search | Supporting links in Overviews | Developers AI Features page; product blog | Inclusion and suppression behaviors partly observational; evolving |
| Perplexity | Yes; real-time retrieval | Inline citations; visible source list | Limited official detail; third-party audits (2025) | Audits report trust and freshness patterns; treat specifics as observed |
| ChatGPT (consumer) | Yes; Search/Browse | Links alongside answers | OpenAI announcement; release notes | API does not browse by default; citations are UI-dependent |

Make Your Content Citation-Ready

Answer engines reward content that’s easy to parse and triangulate. Structure for parsing by adding Q&A sections marked up with FAQPage schema; use compact steps for process guides with HowTo schema; and write claim-backed paragraphs with inline references to primary sources, keeping claims scoped and dated. Strengthen entity clarity with unambiguous “About” components using Organization or Product schema—include canonical name, alternates, URL, logo, founding date, and social profiles—and keep naming consistent across your site and profiles. Encourage triangulation by citing authoritative external references within your content and varying evidence types, such as official docs, trade press, and standards bodies, to support synthesis.
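As one way to express the entity guidance above in markup, the sketch below builds schema.org `Organization` JSON-LD in Python. The property names (`name`, `alternateName`, `url`, `logo`, `foundingDate`, `sameAs`) come from the schema.org vocabulary; the helper functions and every value shown are hypothetical placeholders to replace with your own canonical details.

```python
import json

def organization_jsonld(name, alternate_names, url, logo, founding_date, profiles):
    # schema.org Organization; keys follow the schema.org vocabulary.
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,                      # canonical name, used identically everywhere
        "alternateName": alternate_names,  # abbreviations, former names
        "url": url,
        "logo": logo,
        "foundingDate": founding_date,     # ISO 8601 date string
        "sameAs": profiles,                # official social and directory profiles
    }

def as_script_tag(data: dict) -> str:
    # Wrap the object in a JSON-LD <script> element for embedding in the page.
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

org = organization_jsonld(
    name="Example Labs Inc.",  # hypothetical values throughout
    alternate_names=["Example Labs"],
    url="https://www.example.com",
    logo="https://www.example.com/logo.png",
    founding_date="2015-03-01",
    profiles=["https://www.linkedin.com/company/example-labs"],
)
print(as_script_tag(org))
```

Generating the markup from one source of truth, rather than hand-editing it per page, is one way to keep naming consistent across your site and profiles.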

A quick micro-example: For “OEM private-label supplement manufacturing,” include a short Q&A block answering capacity, certifications, lead times, and pricing models, each with concise, sourced statements. This pattern improves machine readability and citation confidence. For practical illustrations of prompt-level visibility analysis, see Geneo’s query report example page.
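The micro-example above could be marked up as schema.org `FAQPage` JSON-LD along these lines. This is a sketch: the `faq_jsonld` helper is hypothetical, and the bracketed answers are placeholders rather than real figures; each should become a concise statement carrying its source and date.

```python
import json

def faq_jsonld(pairs):
    # schema.org FAQPage: each Question carries exactly one acceptedAnswer.
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Placeholder answers: replace each with a concise, sourced, dated statement.
pairs = [
    ("What is your monthly production capacity?", "[Capacity figure, source, date]"),
    ("Which certifications do you hold?", "[Certifications with issuing body and date]"),
    ("What are typical lead times?", "[Lead-time range and as-of date]"),
    ("Which pricing models do you offer?", "[Pricing models and minimum order terms]"),
]
print(json.dumps(faq_jsonld(pairs), indent=2))
```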

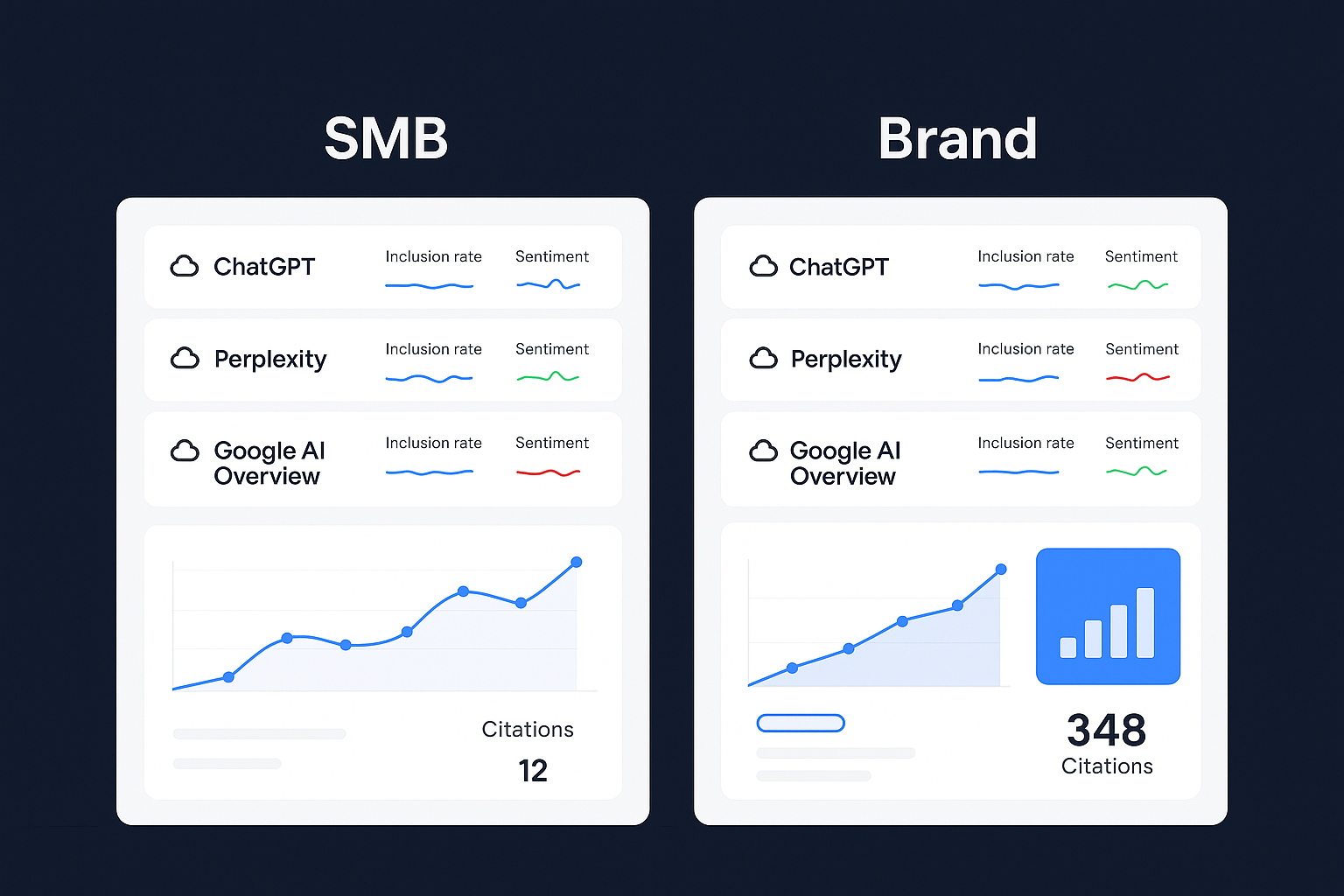

Measure AI Visibility, Citations, Sentiment, and Referrals

You can’t improve what you don’t instrument. Start by building a query library from customer language, tagged by cluster (branded, category, problem-solution). Audit Google AI Overviews, ChatGPT, and Perplexity weekly, and keep a short daily list for priority queries. For each engine, log presence, mentions versus citations, source URLs, co-mentions, and sentiment context, then summarize the results in a visibility scorecard. In GA4, segment AI-sourced traffic by Source/Medium (for example, sessions whose source is chatgpt.com, perplexity.ai, or gemini.google.com) and visualize it in Looker Studio. Finally, maintain a change-log: mark evolving statements with “Updated on {date},” note platform policy changes, and track shifts in citation frequency.
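A minimal sketch of the logging and scorecard step, assuming audit results are recorded by hand or by your own tooling as simple rows. The `AuditRow` fields, engine labels, and rate definitions are hypothetical choices, and the `AI_SOURCES` patterns are assumptions about how AI referrals show up in GA4’s session source; check them against the Source/Medium values your property actually records.

```python
import re
from collections import Counter, defaultdict
from dataclasses import dataclass

@dataclass
class AuditRow:
    engine: str      # hypothetical labels, e.g. "google_aio", "chatgpt", "perplexity"
    query: str
    cluster: str     # "branded", "category", or "problem-solution"
    mentioned: bool  # brand named in the answer text
    cited: bool      # one of our URLs linked as a source
    sentiment: str   # hand-labeled: "positive", "neutral", or "negative"

def scorecard(rows: list[AuditRow]) -> dict:
    by_engine = defaultdict(list)
    for row in rows:
        by_engine[row.engine].append(row)
    card = {}
    for engine, rs in by_engine.items():
        present = [r for r in rs if r.mentioned or r.cited]
        card[engine] = {
            "queries": len(rs),
            "presence_rate": len(present) / len(rs),
            "citation_rate": sum(r.cited for r in rs) / len(rs),
            "mention_only": sum(r.mentioned and not r.cited for r in rs),
            # Sentiment is only counted where the brand actually appears.
            "sentiment": dict(Counter(r.sentiment for r in present)),
        }
    return card

# Assumed patterns for GA4 session source values; verify against your own reports.
AI_SOURCES = {
    "chatgpt": re.compile(r"chatgpt|openai", re.IGNORECASE),
    "perplexity": re.compile(r"perplexity", re.IGNORECASE),
    "gemini": re.compile(r"gemini", re.IGNORECASE),
}

def classify_source(source: str) -> str:
    # Map a raw GA4 source value to an AI engine label, or "other".
    for label, pattern in AI_SOURCES.items():
        if pattern.search(source):
            return label
    return "other"

rows = [
    AuditRow("perplexity", "q1", "category", True, True, "positive"),
    AuditRow("perplexity", "q2", "category", True, False, "neutral"),
    AuditRow("chatgpt", "q1", "category", False, False, "neutral"),
]
print(scorecard(rows))
print(classify_source("perplexity.ai"))
```

Separating “mentioned” from “cited” keeps the mentions-versus-citations distinction visible in the scorecard instead of collapsing both into a single presence number.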

For measurement perspectives and templates, Search Engine Land offers a brand visibility benchmarking framework (Nov 2025), and Team Lewis shows GA4/Looker dashboards for AI visibility (Jul 2025). To compare how platforms differ in monitoring and citation behavior, see our contextual explainer: ChatGPT vs Perplexity vs Gemini vs Bing monitoring comparison.

Ethics, Governance, and Non‑Manipulative Practices

GEO should raise the quality and transparency of the ecosystem, not game it. Expert sources consistently favor structured content, consistency, and authoritative contributions over ranking schemes. HubSpot’s GEO overview (2025) and Backlinko’s action plan (2025) agree on avoiding manipulative tactics.

Practical governance starts with source hygiene: cite canonical documents with their publication years, and prefer primary sources. Review bot directives (robots.txt rules for AI crawlers, plus emerging proposals such as llms.txt) and monitor unusual crawler behavior. Publish and update editorial standards for claims, sources, and refresh cadence, with a visible change-log. Avoid over-claiming performance, and when you use heuristics or proxies, label them clearly.
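For the bot-directive side of governance, Python’s standard `urllib.robotparser` can check offline how a robots.txt file treats specific AI crawlers. The user-agent tokens listed (`GPTBot`, `OAI-SearchBot`, `PerplexityBot`) are ones their vendors have published, but the list is an assumption you should keep current; the log counter is a deliberately naive substring match, and the sample robots.txt is hypothetical.

```python
from collections import Counter
from urllib.robotparser import RobotFileParser

# AI crawler tokens published by their vendors at the time of writing;
# confirm against each vendor's current documentation before relying on them.
AI_AGENTS = ["GPTBot", "OAI-SearchBot", "PerplexityBot"]

def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    # Evaluate the rules from a string, without fetching robots.txt over the network.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_AGENTS}

def count_ai_hits(log_lines) -> Counter:
    # Naive substring match on raw access-log lines. User agents can be spoofed,
    # so treat spikes as prompts for investigation, not as proof of identity.
    hits = Counter()
    for line in log_lines:
        for agent in AI_AGENTS:
            if agent in line:
                hits[agent] += 1
    return hits

ROBOTS = """User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""
print(crawler_access(ROBOTS, "https://www.example.com/guide"))
```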

Example Workflow: Cross‑Engine Monitoring and Iteration

Disclosure: Geneo is our product.

Here’s a neutral workflow you can adapt to your stack. Ingest a list of target queries across branded, category, and problem-solution clusters. Run scheduled audits across Google AI Overviews, ChatGPT (Search/Browse), and Perplexity to capture presence, mentions vs citations, link destinations, and sentiment snippets. Tag anomalies (misattributions, outdated facts) and feed them into content fixes: add Q&A modules, tighten entity schema, and insert dated references; then re-audit after changes. Report weekly with visibility scorecards, citation deltas, and sentiment distribution by engine. For inspiration, see prompt-level views such as the query report page for real-time analytics databases. Geneo can be used in this workflow to centralize multi-platform monitoring, track citations and sentiment, and generate content strategy suggestions based on observed gaps. Keep usage descriptive and auditable; avoid attributing outcomes without data.
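The citation-delta step of this workflow can be sketched, independently of any particular tool, as a comparison of two audit runs. The data shape (a mapping from `(engine, query)` to the list of cited URLs) and the helper names are hypothetical; queries that lose a citation to your domain are natural candidates for anomaly review and a re-audit.

```python
from collections import defaultdict
from urllib.parse import urlparse

def cites_domain(urls: list[str], domain: str) -> bool:
    # True if any cited URL is on the domain or one of its subdomains.
    for url in urls:
        host = urlparse(url).hostname or ""
        if host == domain or host.endswith("." + domain):
            return True
    return False

def citation_deltas(previous: dict, current: dict, domain: str) -> dict:
    # previous/current map (engine, query) -> list of cited URLs from one audit run.
    report = defaultdict(lambda: {"gained": [], "lost": []})
    for engine, query in previous.keys() | current.keys():
        before = cites_domain(previous.get((engine, query), []), domain)
        after = cites_domain(current.get((engine, query), []), domain)
        if after and not before:
            report[engine]["gained"].append(query)
        elif before and not after:
            # Lost citations are the first place to look for misattribution or stale facts.
            report[engine]["lost"].append(query)
    for lists in report.values():
        lists["gained"].sort()
        lists["lost"].sort()
    return dict(report)

last_week = {("perplexity", "q1"): ["https://www.example.com/guide"], ("chatgpt", "q2"): []}
this_week = {("perplexity", "q1"): ["https://other.example.org/post"], ("chatgpt", "q2"): ["https://example.com/faq"]}
print(citation_deltas(last_week, this_week, "example.com"))
```

Engines with no change are simply absent from the result, which keeps the weekly report focused on movement.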

What to Do Next

Refactor one high-impact page per week: add Q&A, steps, explicit sources, and entity schema. Stand up a cross-engine audit and measurement loop, and in your own content keep outbound links few and descriptively labeled. Establish governance for source hygiene, refresh cadence, and a change-log. One question to ponder as you start: which claims on your site lack dated, canonical sources, and would you trust an answer engine to repeat them?

If you want a practical way to monitor AI visibility across engines, you can explore Geneo and compare it with other options using tool-neutral criteria in our market landscape note. No matter your stack, the goal is consistent: make your content citation-ready and keep measuring so you can iterate as AI search evolves.