Ultimate Guide to Generative Engine Optimization (GEO) for Enterprise Brands

Discover the complete guide to Generative Engine Optimization (GEO) for enterprise brands: earn citations, improve AI visibility, and master cross-platform governance.

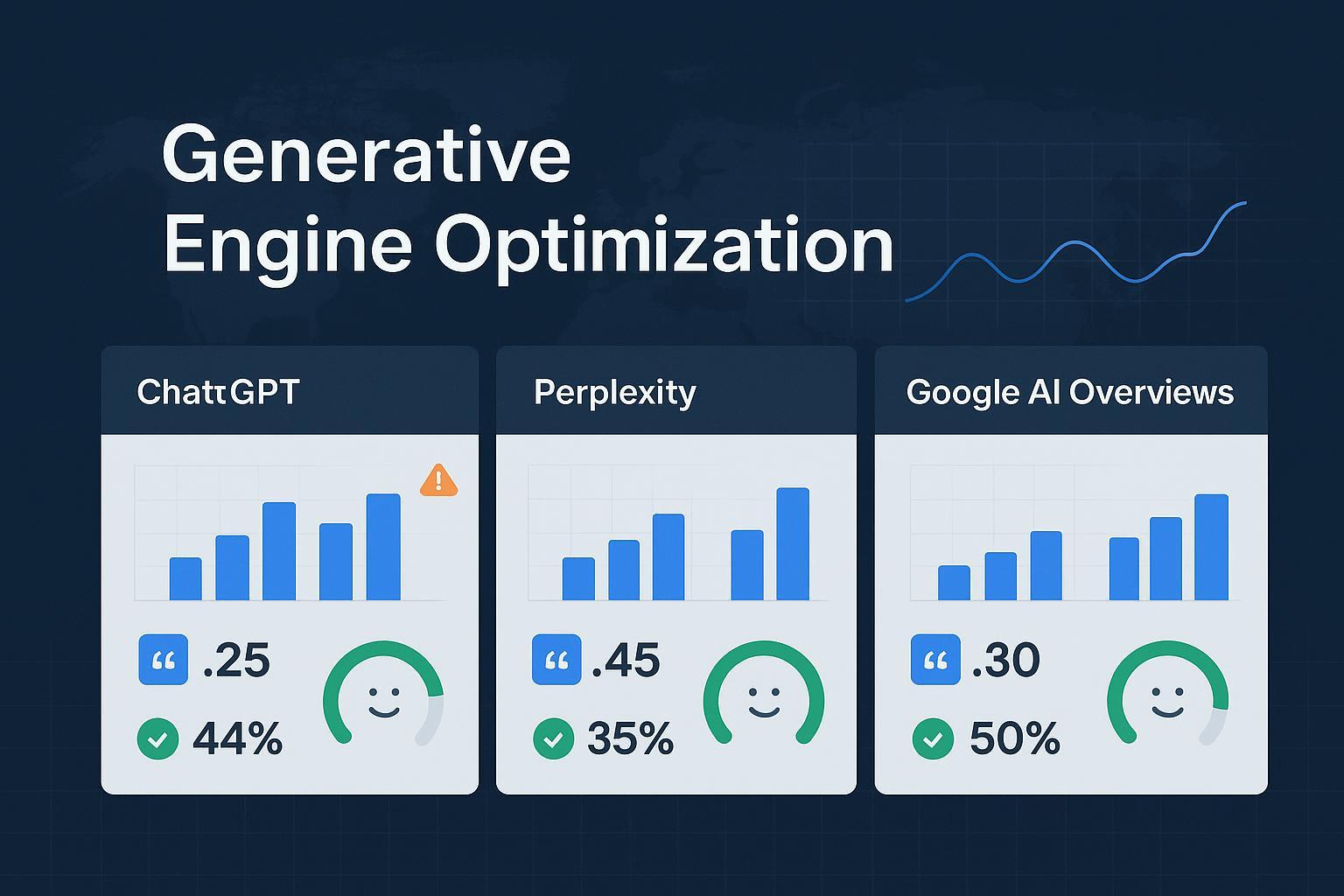

If your brand isn’t being cited in AI answers, you’re invisible where your customers increasingly decide. Generative Engine Optimization (GEO) is the discipline of earning accurate presence and citations in responses produced by engines like ChatGPT, Perplexity, and Google’s AI Overviews—complementing, not replacing, traditional SEO. For a deeper primer on why AI answer visibility matters and how it differs from classic rankings, see the internal explainer on AI visibility and brand exposure in AI search.

GEO vs. SEO for enterprises: different outcomes, overlapping inputs

SEO optimizes for ranked listings on search engine results pages (SERPs). GEO optimizes for inclusion and correct attribution inside AI-generated answers across multiple engines. Authoritative sources converge on this distinction. Search Engine Land’s GEO explainer (2024) describes GEO as “boosting visibility in AI-driven search engines,” including Google AI Overviews and ChatGPT, and frames it as complementary to SEO. AIOSEO’s beginner’s guide to GEO (2025) offers a similar definition that emphasizes citations in AI answers, not just SERP links.

For enterprises, this practical difference changes operations:

- SEO measures rank, traffic, and conversions from traditional listings.

- GEO measures whether your brand appears, is cited correctly, and is described accurately inside AI answers for priority intents.

- Investment decisions shift toward authoritative, extractable passages; structured data; unique facts and studies; and cross-platform monitoring.

Think of GEO as the “presence layer” across AI answers. The inputs overlap with SEO—E-E-A-T, structured data, performance—but the outcome you’re optimizing for is a cited, accurate mention inside the generated response.

How AI engines choose citations (and what that means for you)

No platform fully discloses how it selects sources for AI answers, but observable behavior and third-party studies point to some consistent patterns:

- ChatGPT often surfaces established, encyclopedic sources; citations appear when external grounding is used. Third-party analyses note heavy Wikipedia presence and selective citation behavior. See overview discussions in Profound’s citation patterns roundup and a recap from Search Engine Roundtable.

- Perplexity includes citations in almost every response and runs its own crawler (PerplexityBot). Studies suggest its cited URLs overlap with Google’s top results more than other engines’ citations do, and that it frequently pulls from community sources such as Reddit and YouTube. See Ahrefs’ 2025 analysis of AI search overlap.

- Google describes AI Overviews as “backed by top web results,” which implies that traditional ranking signals strongly influence which sources become candidates. Google’s public posts focus on accuracy and safety without disclosing detailed weights. See Google’s AI Overviews update (2024) and a practitioner explainer on source mix, such as ClickGuard’s overview of where Google gets AI Overview info.

Implications for enterprise programs: Be among the “top results” candidates for Google by maintaining technical eligibility and extractable facts, and provide concise, verifiable passages and data that can be lifted cleanly—think Q&A blocks and summary tables. Expect platform-specific preferences and tailor examples accordingly without fragmenting your editorial standards.

Inclusion signals and technical implementation

Enterprise GEO succeeds when your content is authoritative, machine-readable, and easy for retrieval systems to extract. Prioritize:

- E-E-A-T and source credibility: Clear authorship, credentials, citations, and transparent corrections increase selection likelihood. Google underscores that AI Overviews are grounded in high-quality web results; see Google’s overview post (2024) and practitioner guidance such as Proceed Innovative’s E-E-A-T alignment for AI search.

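- Structured data and entity clarity: Use Schema.org types (Organization, Article, FAQPage, QAPage, Product, HowTo, Review) and include the properties Google’s documentation marks as required for each feature. Validate with Google’s Rich Results Test and fix issues reported in Search Console. For types that no longer produce rich results in Google Search (Google restricted FAQ rich results and retired HowTo rich results in 2023), use the Schema Markup Validator to check syntax. See Google Developers structured data docs and Search updates.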

- Passage-level extractability: Practitioner guidance suggests that retrieval pipelines favor compact, self-contained answers (roughly 40–80 words), question-led headings, and tables for comparisons. See SEOSLY’s AI and SEO checklist.
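The passage-level guidance above can be turned into a simple pre-publish check. The sketch below is a minimal heuristic. It assumes that a “question-led” heading either ends in a question mark or starts with a common interrogative word. It also treats the 40–80 word range as a configurable threshold, not a documented engine rule. The names `check_passage` and `count_words` are hypothetical.

```python
import re

# Assumed thresholds: taken from practitioner guidance, not from any engine's docs.
MIN_WORDS, MAX_WORDS = 40, 80

# Hypothetical list of words that mark a heading as question-led.
QUESTION_STARTERS = ("what", "how", "why", "which", "when", "where", "who",
                     "can", "does", "is", "are", "should")


def count_words(text: str) -> int:
    """Count whitespace-separated tokens that contain at least one word character."""
    return len([tok for tok in text.split() if re.search(r"\w", tok)])


def check_passage(heading: str, lead_answer: str) -> list[str]:
    """Return extractability issues for a heading plus lead answer; empty means it passes."""
    issues = []
    h = heading.strip()
    first_word = h.split()[0].lower() if h else ""
    if not (h.endswith("?") or first_word in QUESTION_STARTERS):
        issues.append("heading is not question-led")
    n = count_words(lead_answer)
    if n < MIN_WORDS:
        issues.append(f"lead answer too short ({n} words)")
    elif n > MAX_WORDS:
        issues.append(f"lead answer too long ({n} words)")
    return issues
```

For example, `check_passage("Overview", "Too short.")` reports both a non-question heading and a two-word lead answer. Treat the output as an editorial prompt, not a hard gate.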

Here’s a compact JSON-LD example for an FAQ section designed for clean extraction:

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is Generative Engine Optimization?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "GEO is the practice of earning accurate citations and presence in AI-generated answers across engines like ChatGPT, Perplexity, and Google AI Overviews."
      }
    },
    {
      "@type": "Question",
      "name": "Which schema types improve extractability?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Organization, Article, FAQPage, QAPage, HowTo, Product, and Review, implemented with required properties and validated in Google’s Rich Results Test."
      }
    }
  ]
}
```

Validate your markup with Google’s tools, monitor structured data warnings in Search Console, and request indexing through the URL Inspection tool when you deploy meaningful changes.
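If FAQ content lives in a CMS, you can generate the JSON-LD from the same question-and-answer pairs that render on the page. That helps keep markup and visible text in sync. This Python sketch assumes a hypothetical list of `(question, answer)` tuples as input and uses only the standard `json` module. It does not replace validation with Google’s tools.

```python
import json


def build_faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a Schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    if not pairs:
        raise ValueError("an FAQPage needs at least one question")
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question.strip(),
                "acceptedAnswer": {"@type": "Answer", "text": answer.strip()},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps curly quotes and other non-ASCII text readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


def wrap_in_script_tag(jsonld: str) -> str:
    """Embed JSON-LD in a script tag, escaping "</" so answer text cannot close the tag early."""
    # "\/" is a valid JSON escape for "/", so parsers still read the original string.
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'
```

Generating markup from one source of truth is a design choice, not a requirement. It mainly guards against the FAQ text on the page drifting away from the structured data.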

The enterprise GEO operations playbook

GEO is not a one-off tactic; it’s an operating model. Build the following program components so execution is reliable at scale:

- Governance and roles: Assign an executive sponsor (CMO/VP), a GEO lead (Head of SEO/Content), technical owners (SEO engineering, web platform), editorial owners (managing editors), PR/comms partners, and legal/compliance reviewers for regulated topics.

- Editorial standards: Define answer-nugget patterns, citation rules, author bios and credentials, conflict-of-interest disclosures, and correction workflows. Keep a style guide for question-led headings and 40–80 word lead answers.

- Technical hygiene: Maintain structured data coverage and entity clarity across Organization, Product, and FAQ content; ensure performance, accessibility, and canonical URL integrity.

- Cadence: Quarterly GEO reviews; monthly measurement reports; sprint-level updates tied to new campaigns or product launches.

- International operations: Localize facts and examples; adapt schema and legal disclaimers per market; track per-engine behavior variance across languages.

A printable governance diagram should map owners to surfaces (homepage, product pages, blogs, docs, FAQs) and list handoffs to Legal and Comms for sensitive topics. If you manage many brands, formalize approvals via an agency ops framework—see multi-brand workflows and white-label collaboration for context.

Measurement and KPIs that matter

Optimize for presence and correctness, then measure change over time. Vendor-agnostic KPIs that enterprise teams commonly track include:

- Share-of-answer: Percentage of tested prompts where your brand is mentioned or cited vs. competitors across engines.

- Citation coverage: Count and distribution of explicit citations by engine and intent category.

- Attribution accuracy: Rate of correct URL/entity attribution (your canonical pages vs. syndications or third-party summaries).

- Sentiment of mentions: Polarity and subjectivity scores attached to passages about your brand.

- Change-over-time: Time series for all above metrics, with alerts for spikes/drops.
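The sketch below makes the KPI definitions above concrete. It computes share-of-answer, citation coverage, attribution accuracy, and a simple change-over-time alert from sampled answers. Several details are assumptions for illustration: the `Observation` record shape, the 5-percentage-point alert threshold, and the `.example` URLs. Sentiment scoring is left out because it depends on whichever classifier you choose.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Observation:
    """One sampled answer for one prompt on one engine (hypothetical record shape)."""
    engine: str                 # e.g. "perplexity"
    intent: str                 # e.g. "comparison"
    brands_present: set[str]    # brands mentioned or cited in the answer
    our_citations: list[str] = field(default_factory=list)  # cited URLs about our brand


def share_of_answer(obs: list[Observation], brand: str) -> float:
    """Fraction of sampled answers in which `brand` is mentioned or cited."""
    if not obs:
        return 0.0
    return sum(brand in o.brands_present for o in obs) / len(obs)


def citation_coverage(obs: list[Observation]) -> Counter:
    """Count of our explicit citations per (engine, intent) pair."""
    counts: Counter = Counter()
    for o in obs:
        counts[(o.engine, o.intent)] += len(o.our_citations)
    return counts


def attribution_accuracy(obs: list[Observation], canonical: set[str]) -> float:
    """Fraction of our citations that point at canonical URLs rather than copies."""
    cited = [url for o in obs for url in o.our_citations]
    if not cited:
        return 0.0
    return sum(url in canonical for url in cited) / len(cited)


def change_alert(previous: float, current: float, threshold: float = 0.05) -> Optional[str]:
    """Return "spike" or "drop" when a metric moves by at least `threshold` (absolute)."""
    delta = current - previous
    if abs(delta) >= threshold:
        return "spike" if delta > 0 else "drop"
    return None
```

The usage example below uses four samples. The brand appears in two of them. Three citations about the brand exist, and two of them point to the canonical page.

```python
obs = [
    Observation("perplexity", "comparison", {"Acme", "Rival"}, ["https://acme.example/p"]),
    Observation("perplexity", "how-to", {"Rival"}),
    Observation("ai_overviews", "comparison", {"Acme"},
                ["https://acme.example/p", "https://mirror.example/p"]),
    Observation("chatgpt", "brand", set()),
]
share_of_answer(obs, "Acme")                           # 0.5
attribution_accuracy(obs, {"https://acme.example/p"})  # 2 of 3 citations are canonical
```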

Search Engine Journal (2025) described visibility metrics that resemble the internal reporting constructs model vendors use; see its analysis of ChatGPT’s confidential report visibility metrics. For a deeper dive into evaluating AI outputs on accuracy, relevance, and personalization, review the internal framework on LLMO metrics. When citing sources in your own content, keep link density tight: one authoritative external link per claim is generally enough.

Risk management and crisis response

Enterprise brands must prepare for hallucinations, misattribution, and regional variances.

- Hallucination mitigation: Ground sensitive content in curated data sources, and implement human-in-the-loop review for YMYL-adjacent (Your Money or Your Life) material. For complex domains, consider retrieval-augmented generation (RAG) or GraphRAG. See governance discussions in Menlo VC’s state of enterprise generative AI (2024) and legal context in IAPP’s GDPR note on AI hallucinations.

- Misattribution response: Document a correction playbook—capture the AI output, trace sources, submit corrections to platforms or publishers, and update your own canonical pages with clear facts and citations. Train comms teams for rapid, factual responses.

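- Robots and crawler controls: Use robots.txt directives to manage AI crawlers, recognizing that compliance varies by operator. You can restrict GPTBot, Google-Extended, PerplexityBot, and CCBot with standard User-agent and Disallow rules; see Cloudflare’s guidance on controlling content use for AI training and its overview of who’s crawling your site in 2025. Weigh the trade-offs. Google-Extended is a control token rather than a separate crawler, and it does not affect inclusion in Google Search, including AI Overviews. Blocking search-oriented crawlers such as PerplexityBot can reduce your chances of being cited. Network-level bot management and log monitoring provide additional control.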

- Regional variance: Maintain market-specific editorial notes, legal disclaimers, and escalation paths. Track engine behavior by language; don’t assume one market’s gains translate directly to another.
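Before deploying crawler rules, it can help to check how a policy resolves for each user-agent token. This sketch uses Python’s standard `urllib.robotparser` with a hypothetical policy that blocks GPTBot, CCBot, and Google-Extended while leaving other agents allowed. It is not a recommendation about which bots to block. `urllib.robotparser` applies simpler matching than some crawlers: it uses the first matching rule rather than Google’s most-specific-match precedence. Also remember that robots.txt is advisory.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy for illustration: opt out of GPTBot, CCBot, and Google-Extended,
# and allow every other agent (including search-oriented crawlers).
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def allowed(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return whether `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    url = "https://www.example.com/products/widget"
    for agent in ("GPTBot", "CCBot", "Google-Extended", "PerplexityBot", "Googlebot"):
        print(f"{agent:16} {'allowed' if allowed(agent, url) else 'blocked'}")
```

A check like this is most useful as a regression test in CI, so an edit to robots.txt cannot silently block a crawler you depend on for citations.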

For teams tracking Google AI Overviews globally, review practical nuances discussed in this AI Overview tracking guide for GEO teams.

Composite enterprise case example (illustrative)

A global CPG company manages five master brands across 30 markets. The team identifies 120 priority intents (FAQs, comparisons, and “how it works”) and rewrites lead paragraphs into 40–80 word answer nuggets with question-led headings. They deploy Organization, Product, and FAQ schema and add provenance citations to each fact block. Quarterly reviews pair editorial updates with technical fixes.

After 12 weeks of a focused GEO sprint, their tracked basket shows:

- Share-of-answer across Perplexity and AI Overviews rising from 11% to 26% for priority queries.

- Correct attribution improving from 68% to 84%.

- Negative-sentiment mentions dropping by 14% after updating outdated data and adding authoritative references.

These figures are illustrative benchmarks tied to the measurement definitions above, not guarantees. The gains follow from cleaner extractability, stronger E-E-A-T signals, and consistent monitoring rather than a single tactic.

Practical workflow: monitoring citations and sentiment across engines

Disclosure: Geneo is our product.

For enterprise teams, a repeatable monitoring workflow looks like this:

- Define a query basket by intent (brand, product, category, how-to) and markets.

- Sample prompts across ChatGPT, Perplexity, and Google AI Overviews; record whether the brand appears and whether citations point to canonical URLs.

- Score sentiment on passages mentioning the brand; flag misattributions and inaccuracies for editorial/legal review.

- Track change-over-time and annotate major engine updates or content releases.
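The monitoring steps above can be sketched as a small pipeline. It expands a query basket across intents, markets, and engines, collects answers, and flags samples for review. How each engine is queried is deliberately left abstract: `ask` is a hypothetical adapter you would implement per engine and market. The engine labels are placeholders, and the flag rules are simple heuristics, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import product
from typing import Callable
from urllib.parse import urlparse

# Placeholder engine labels; how each engine is actually queried is out of scope here.
ENGINES = ("chatgpt", "perplexity", "google_ai_overviews")


@dataclass
class Sample:
    engine: str
    market: str
    intent: str
    prompt: str
    answer_text: str
    cited_urls: list[str]


def build_basket(prompts_by_intent: dict[str, list[str]],
                 markets: list[str]) -> list[tuple[str, str, str, str]]:
    """Expand intents x prompts x markets x engines into (engine, market, intent, prompt) jobs."""
    return [
        (engine, market, intent, prompt)
        for intent, prompts in prompts_by_intent.items()
        for prompt, market, engine in product(prompts, markets, ENGINES)
    ]


def run_basket(jobs: list[tuple[str, str, str, str]],
               ask: Callable[[str, str, str], tuple[str, list[str]]]) -> list[Sample]:
    """Call the hypothetical `ask(engine, market, prompt)` adapter for every job."""
    samples = []
    for engine, market, intent, prompt in jobs:
        text, urls = ask(engine, market, prompt)
        samples.append(Sample(engine, market, intent, prompt, text, urls))
    return samples


def is_our_host(url: str, our_domain: str) -> bool:
    """True if the URL's host is our domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == our_domain or host.endswith("." + our_domain)


def review_flags(s: Sample, brand: str, our_domain: str, canonical: set[str]) -> list[str]:
    """Return reasons to route a sample to editorial/legal review (empty list = no flags)."""
    flags = []
    mentioned = brand.lower() in s.answer_text.lower()
    ours = [u for u in s.cited_urls if is_our_host(u, our_domain)]
    if mentioned and not ours:
        flags.append("brand mentioned without a citation to our site")
    if any(u not in canonical for u in ours):
        flags.append("citation points to a non-canonical URL")
    return flags
```

Keeping the engine adapter separate from scoring and flagging means a change in how one engine is sampled does not alter the metric definitions. That helps when you annotate engine updates on the time series.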

A platform that supports cross-engine citation tracking, sentiment scoring, and multi-brand reporting can reduce manual effort and improve auditability. In our experience, Geneo helps teams operationalize these steps with entity-level tracking and reporting without locking you into a single metric set.

Next steps for enterprise rollout

- Align leadership and assign owners for GEO across SEO, Content, PR/Comms, and Legal.

- Standardize answer-nugget patterns, provenance citations, and author credentials. Validate structured data across core surfaces.

- Establish KPIs: share-of-answer, citation coverage, attribution accuracy, sentiment, and change-over-time.

- Set a monthly reporting cadence; review by market; adjust content and schema based on findings.

- Prepare crisis playbooks for hallucinations and misattribution; rehearse internal and external responses.

If you manage multiple brands or regions, consider formalizing collaboration via the agency and white-label workflows. And if Google AI Overviews are a key surface for your category, bookmark this practical guide to tracking AI Overviews for your technical leads.

GEO is the operating system for enterprise visibility inside AI answers. Build authoritative, extractable content; mark it up well; measure presence and correctness across engines; and run a steady governance cadence. Do that, and your brand won’t just show up—it’ll be cited accurately where decisions are being made.