How Generative AI Is Transforming SEO & Search Visibility (2025 Report)

Discover how Generative AI shifts SEO in 2025: new AI Overviews, citation-first visibility, and CTR drops. Expert data and action steps—read now!

Updated on: October 10, 2025

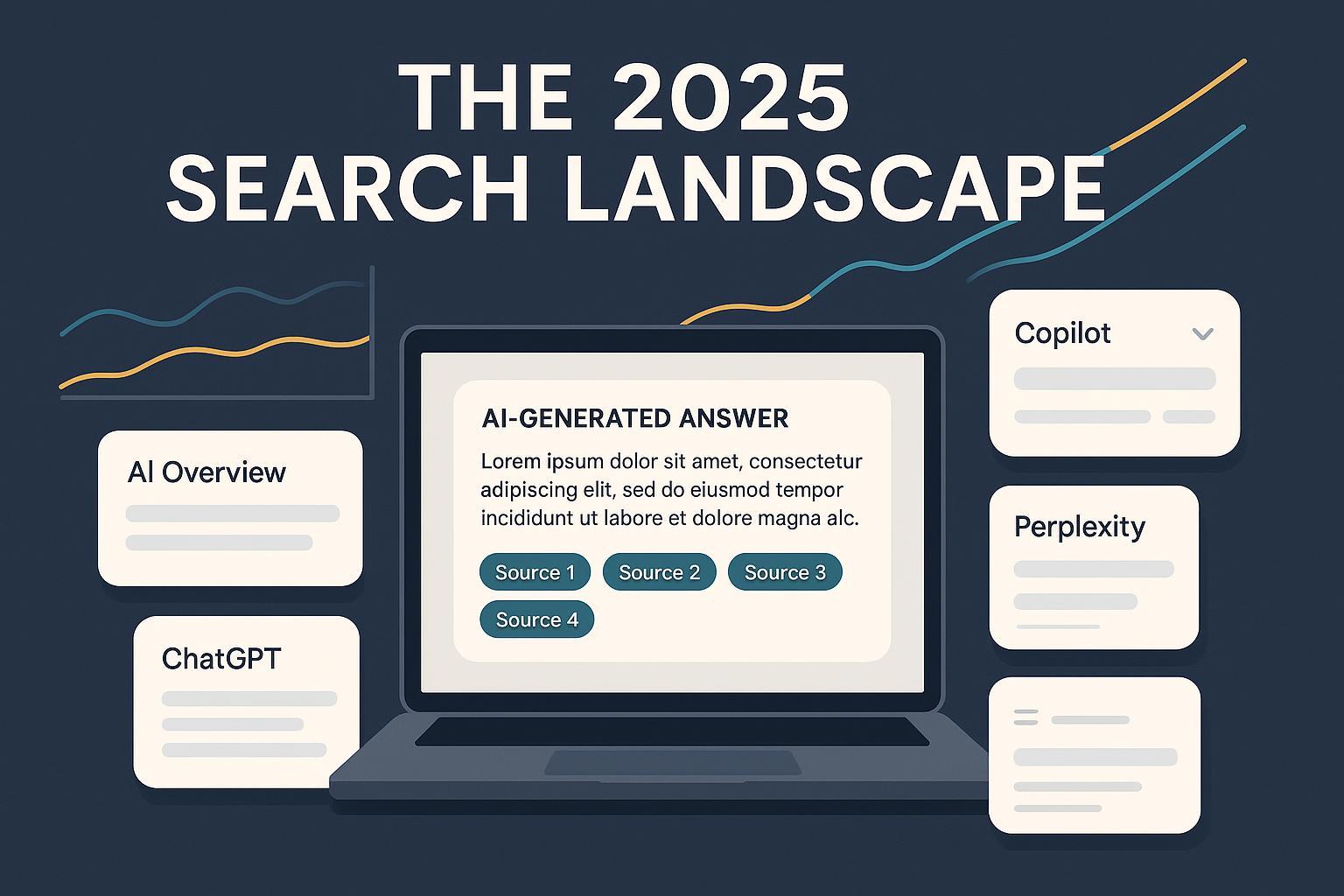

Generative AI has turned search from a page of blue links into an answer surface. In 2025, success is no longer defined solely by where you rank—it’s whether your brand is present, cited, and accurately represented inside AI-generated answers across Google, ChatGPT, Perplexity, and Microsoft Copilot. This piece breaks down what changed, what you can still control, and how to measure visibility in this new environment.

What changed in 2025 (and why it matters)

- Google expanded AI Overviews/AI Mode globally and emphasized usage growth for queries that trigger Overviews. According to the Google Search blog’s May 20, 2025 AI Mode/Overviews update, AI Overviews had rolled out to 200+ countries and 40+ languages, and Google reported a “10%+ increase in usage… for the types of queries that show AI Overviews.” The same update described a custom Gemini 2.5 model powering these features in the U.S.

- User behavior shifted. A July 2025 Pew Research Center study of browsing by 900 U.S. adults found that AI summaries appeared in 18% of their Google searches. Users clicked a traditional result link in 8% of visits that showed a summary versus 15% of visits that did not, and only 1% of visits with a summary included a click on a link inside it. Read Pew’s findings in the 2025 Google search behavior study with AI summaries.

- Trigger rates climbed through early 2025. Semrush’s March 2025 analysis estimated AI Overviews appeared for 13.14% of queries (up from 6.49% in January), with most being informational. See their methodology and trendline in the Semrush 2025 AI Overviews trigger study.

The takeaway: attention is compressed into AI answer modules. Your goal is to be included and accurately cited where those answers appear, and to design content that still earns the click when users choose to go deeper.
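
To put the figures above on a common footing, the short sketch below converts them to relative changes. It uses only the Pew and Semrush numbers already cited; the helper name `relative_change` is illustrative, and the two studies use different samples, so the results are indicative rather than directly comparable.

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a fraction of `before`."""
    return (after - before) / before


# Pew: share of visits with a traditional-link click, without vs. with an AI summary.
ctr_change = relative_change(0.15, 0.08)

# Semrush: share of queries triggering AI Overviews, January vs. March 2025.
trigger_change = relative_change(0.0649, 0.1314)

print(f"Traditional-link click rate with an AI summary: {ctr_change:+.0%}")
print(f"AI Overviews trigger rate, January to March 2025: {trigger_change:+.0%}")
```

In other words, the click rate on traditional links roughly halves when a summary is present, while the trigger rate roughly doubled over the two months Semrush measured.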

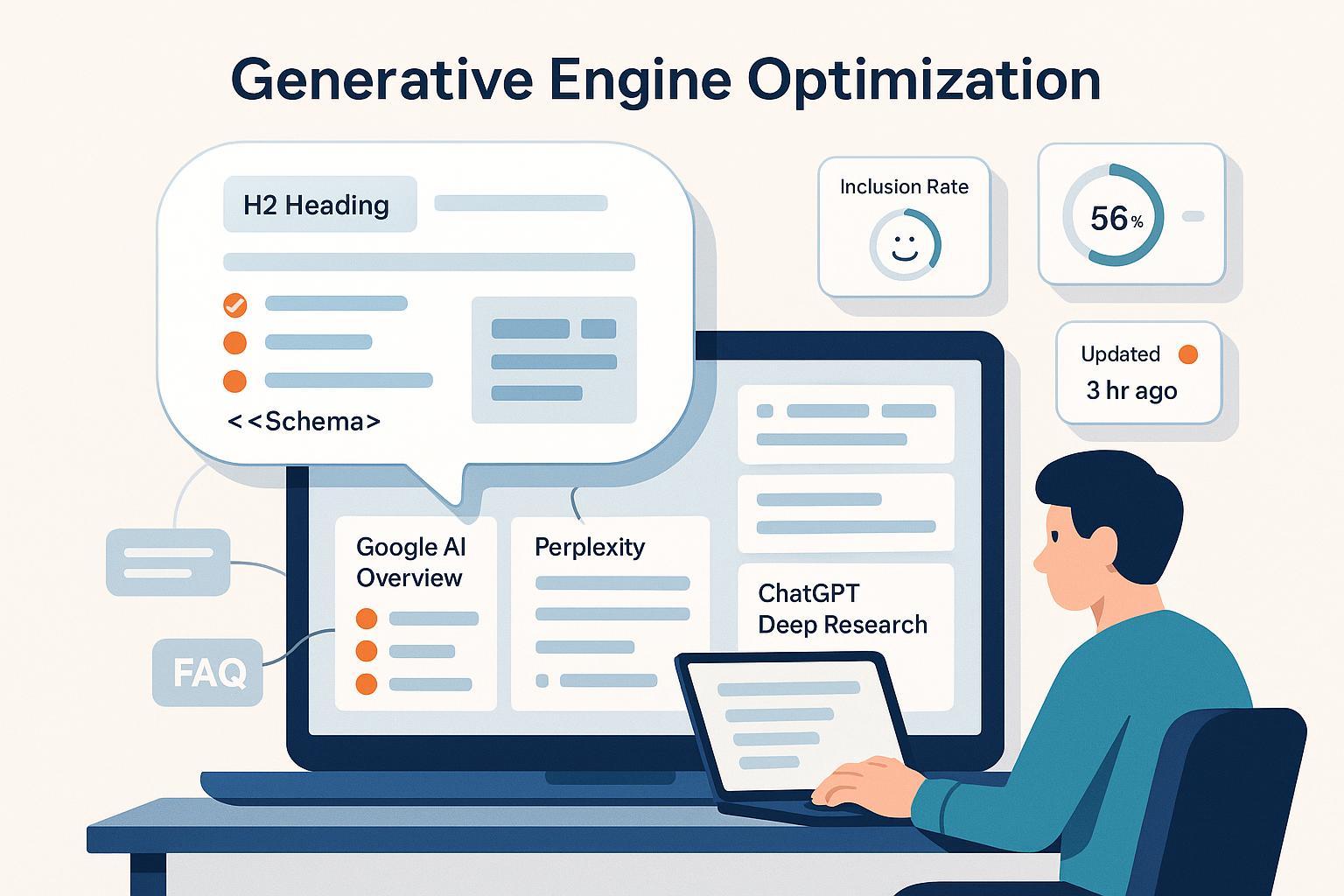

From rankings to citations: new levers for inclusion

Search is now a citation game as much as a rankings game. Generative engines prefer sources that are:

- Evidence-dense: original data, clearly attributed quotes, dated methodology, and outbound links to authoritative references.

- Extractive-friendly: concise definitions, FAQs, and step-by-step blocks that models can summarize without ambiguity.

- Entity-strong: consistent Organization/Person identity across the web, supported by structured data and third-party profiles.

Tactics you can deploy now:

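- Structure pages for answers: Use clear H2/H3 question formats, concise definitions, and tabled data with source notes. Add appropriate schema (Organization/Person, Article, FAQPage, HowTo, WebSite; Speakable where eligible) following Google Search Central’s structured data guidance. Google has retired the HowTo and Sitelinks Search Box rich results and shows FAQ rich results only for a narrow set of sites, so treat that markup as machine-readable context rather than a route to rich results.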

- Publish “evidence pages”: statistics hubs, benchmarks, and glossaries with transparent methods and downloadables. Time-stamp everything.

- Build entity authority: maintain consistent org/person profiles, and seek expert quotes and reputable third‑party mentions that LLMs can corroborate.

- Leverage communities to seed credible citations: high-quality, non-promotional contributions in communities like Reddit can influence what AI engines surface. For an extended walkthrough of practical tactics, see Driving AI Search Citations Through Reddit Communities (owned resource).
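
As one way to act on the schema and entity tactics above, here is a minimal sketch that builds Article markup with an embedded Organization publisher as JSON-LD. The schema.org types and properties are real; the function names, the "Example Co" organization, and every URL are hypothetical placeholders, and the output should be checked against Google Search Central’s guidance before use.

```python
import json


def build_article_jsonld(headline, date_published, date_modified,
                         author_name, org_name, org_url, same_as):
    """Return a schema.org Article dict with Person author and Organization publisher."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Explicit dates support the "time-stamp everything" tactic.
        "datePublished": date_published,
        "dateModified": date_modified,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {
            "@type": "Organization",
            "name": org_name,
            "url": org_url,
            # sameAs links tie the entity to its third-party profiles.
            "sameAs": list(same_as),
        },
    }


def to_script_tag(data: dict) -> str:
    """Serialize the dict into a JSON-LD script tag for the page head."""
    # Escape "<" so a string value can never close the script element early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical example values, for illustration only.
article = build_article_jsonld(
    headline="Industry Benchmarks 2025",
    date_published="2025-10-10",
    date_modified="2025-10-10",
    author_name="Jane Doe",
    org_name="Example Co",
    org_url="https://example.com",
    same_as=["https://www.linkedin.com/company/example-co"],
)
print(to_script_tag(article))
```

Generating the markup from one function keeps the organization name, URL, and profile links identical on every page, which is the consistency the entity-authority tactic depends on.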

Platform-by-platform: what changed and what you control

- Google AI Overviews/AI Mode: Global coverage expanded with iterative presentation tweaks. You can’t force inclusion, but you can improve odds by supplying extractive-friendly, well-cited content and robust schema. Google also documents how AI features select supporting sources for summaries; review guidance in Google’s “AI features and your website” documentation.

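- OpenAI/ChatGPT with web results: Chat answers increasingly show transparent citations for licensed and crawled sources. Crawler governance matters for both discovery and training posture: OpenAI documents separate user agents, with GPTBot covering training crawls and OAI-SearchBot covering search results, and each can be allowed or blocked in robots.txt as described in OpenAI’s GPTBot documentation.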

- Perplexity: Source-forward by design, with “Comet Plus” rolling out in late 2025 to compensate publishers based on usage and citations. See the Perplexity “Introducing Comet Plus” announcement (2025) for how the program is framed. Participation is a strategic choice; weigh visibility gains versus licensing terms and operational overhead.

- Microsoft Copilot Search (Bing): Copilot integrates AI summaries with clearly cited sources and a “See all links” control, as outlined in the Bing team’s April 2025 Copilot Search introduction. While official referral metrics are limited, citation transparency and link modules create click opportunities when you supply distinct value (original data, tools, calculators, visuals).

Measurement reset: build an AI visibility scorecard

Classic SEO dashboards don’t capture AI presence. Add a layer that measures:

- Inclusion rate by engine: Are you cited in AI answers on Google, ChatGPT, Perplexity, and Copilot for your priority queries?

- Citation prominence and link style: Above/below the fold; inline vs expandable sources; direct link vs hover-only.

- Sentiment and accuracy: Is the model describing your brand/product correctly? Is sentiment neutral/positive/negative?

- Referral quality: Track LLM-origin traffic and assisted conversions from AI engines and chat surfaces (UTM conventions plus landing-page patterns).

- Query-class coverage: Segment informational vs commercial vs local. Expect lower clicks on purely informational terms; double down on high-intent classes where users still click.
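
The inclusion-rate and query-class metrics above reduce to simple ratios once observations are logged. The sketch below shows one possible shape for that log; the `Observation` fields, engine labels, and sample rows are all hypothetical and stand in for whatever your own tracking produces.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One check of one priority query on one engine (hypothetical schema)."""
    engine: str
    query: str
    query_class: str  # "informational", "commercial", or "local"
    cited: bool       # True if the brand's URL appeared as a cited source


def inclusion_rate(observations, key):
    """Return the share of cited observations per group, grouped by key(obs)."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for obs in observations:
        group = key(obs)
        totals[group] += 1
        hits[group] += int(obs.cited)
    return {group: hits[group] / totals[group] for group in totals}


# Made-up sample rows, for illustration only.
observations = [
    Observation("google_ai_overviews", "what is geo", "informational", True),
    Observation("google_ai_overviews", "best seo tool", "commercial", False),
    Observation("chatgpt", "what is geo", "informational", True),
    Observation("chatgpt", "best seo tool", "commercial", True),
    Observation("perplexity", "what is geo", "informational", False),
    Observation("perplexity", "best seo tool", "commercial", False),
]

by_engine = inclusion_rate(observations, key=lambda o: o.engine)
by_class = inclusion_rate(observations, key=lambda o: o.query_class)
print(by_engine)
print(by_class)
```

Passing the grouping as a function means the same helper covers inclusion by engine, by query class, or by any combination of the two.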

Neutral example workflow (mid-funnel): For weekly tracking across engines, some teams use cross‑engine monitors to log which queries surface citations, which URLs are referenced, and how sentiment trends over time. A tool like Geneo supports multi-engine citation tracking and sentiment logging for AI answers across ChatGPT, Perplexity, and Google AI Overviews. Disclosure: Geneo is our product.

If you want to compare options in the monitoring landscape, see this owned but objective overview: Profound review 2025 with alternative recommendation (contextual reading; not evidentiary).

To visualize what a cross-engine AI visibility output can look like, here’s a labeled example of an owned reporting page: AI Search Visibility Report (sample query view) — use it purely as an illustrative format reference.

Operational cadence

- Weekly: Run priority queries across engines; capture screenshots; log inclusion, citation style, and sentiment. Note deltas after you publish new evidence-dense assets.

- Monthly: Update your scorecard; correlate AI presence with assisted conversions, branded search lift, and PR pickups.

- Quarterly: Reassess licensing/opt-out posture and structured data coverage; refresh cornerstone evidence pages with new data.
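
The monthly correlation step depends on attributing sessions to AI engines in the first place. A minimal sketch of that attribution follows, assuming sessions expose a referrer and a landing URL. The hostname list and the idea that `utm_source` carries the engine’s domain are assumptions to verify against your own logs, and the sketch makes no attempt to separate Google AI Overviews clicks from ordinary Google referrals.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumed mapping of hostnames to engine labels; adjust to what your logs show.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "chatgpt",
    "chat.openai.com": "chatgpt",
    "perplexity.ai": "perplexity",
    "copilot.microsoft.com": "copilot",
}


def _match_host(host: str) -> Optional[str]:
    """Map a hostname (or subdomain of a known host) to an engine label."""
    host = host.lower()
    for known, engine in AI_REFERRER_HOSTS.items():
        if host == known or host.endswith("." + known):
            return engine
    return None


def classify_session(referrer: str, landing_url: str) -> Optional[str]:
    """Return the AI engine a session came from, or None if it is not recognized."""
    # Check utm_source first, since referrer headers are often stripped.
    utm_source = parse_qs(urlparse(landing_url).query).get("utm_source", [""])[0]
    engine = _match_host(utm_source)
    if engine:
        return engine
    # Fall back to the referrer hostname.
    return _match_host(urlparse(referrer).hostname or "")


print(classify_session("https://www.perplexity.ai/search?q=x", "https://example.com/"))
print(classify_session("", "https://example.com/pricing?utm_source=chatgpt.com"))
print(classify_session("https://www.google.com/", "https://example.com/"))
```

Sessions labeled this way can be joined to conversion data in whatever analytics tool you already use.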

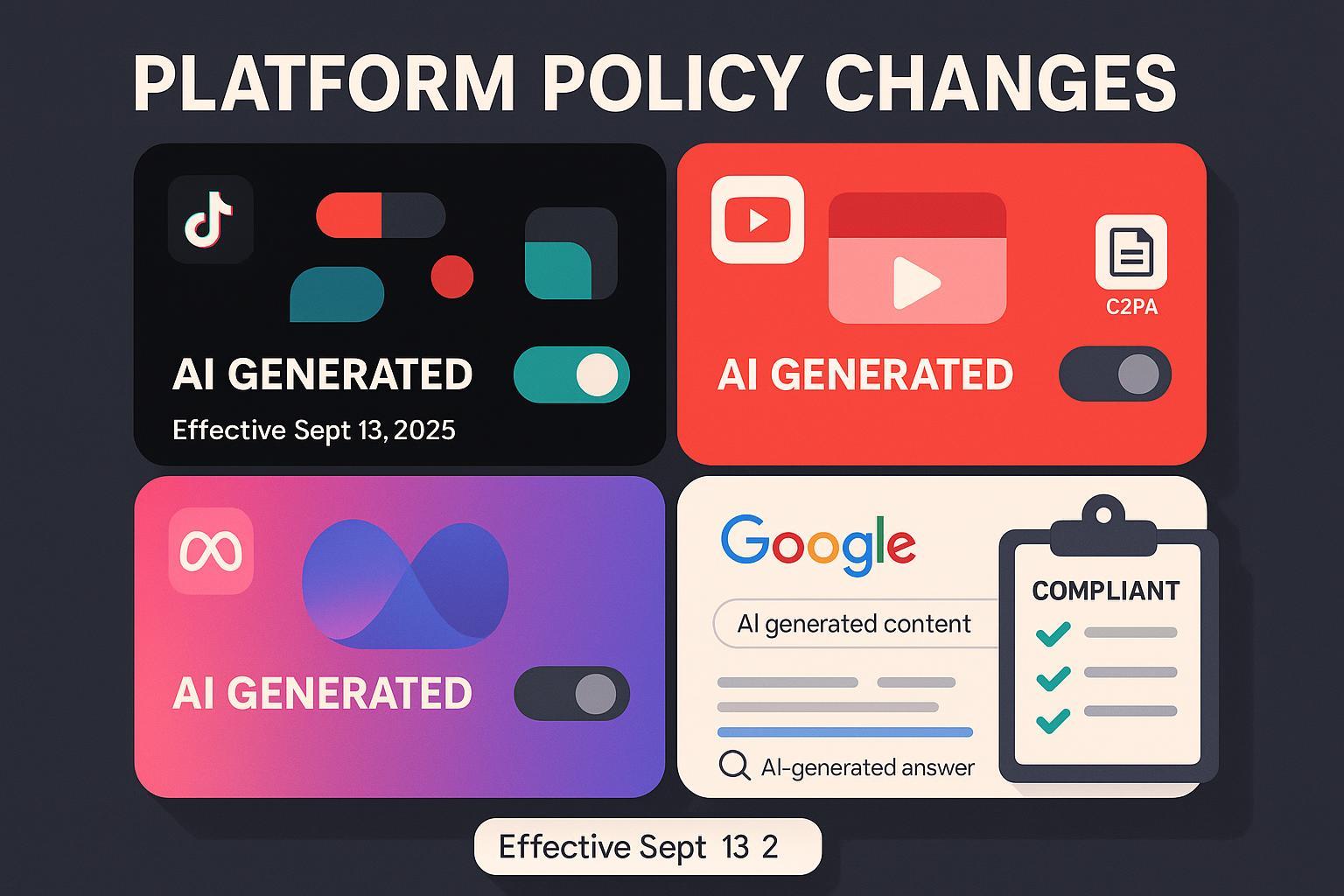

Governance and risk: licensing, opt‑outs, and misattribution

- Licensing posture: Evaluate participation in publisher programs (e.g., Perplexity Comet Plus) and potential OpenAI partnerships via newsroom or publisher-announced deals. Model the trade-offs between broader inclusion and content control.

- Robots and crawlers: Make an intentional decision about allowing GPTBot (OpenAI) and PerplexityBot; manage via robots.txt and server rules. Start with OpenAI’s GPTBot controls and Perplexity’s bot documentation (and published IP ranges) and monitor server logs for anomalies.

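- Google-Extended: If you need to opt out of Google’s generative model training while remaining indexable in Search, disallow the Google-Extended user agent token in robots.txt, following the developer guidance on Google Search Central. Google-Extended is a control token rather than a separate crawler, and Google states that it does not affect inclusion or ranking in Search.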

- Misattribution defense: Maintain a corrections workflow—capture the AI answer, note the incorrect claim, and submit feedback through platform channels. Track incidents and resolutions internally; publish a public corrections page for material errors that impact users.
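
Before deploying crawler rules like those described above, it can help to confirm that they behave as intended. The sketch below checks a sample robots.txt with Python’s standard `urllib.robotparser`. The policy shown is purely illustrative rather than a recommendation, the example.com URL is a placeholder, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy only: block training-related agents, allow everything else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: PerplexityBot
Allow: /

User-agent: *
Allow: /
"""


def crawler_access(robots_txt: str, agents, url: str) -> dict:
    """Return {agent: allowed} for the given URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


access = crawler_access(
    ROBOTS_TXT,
    agents=["GPTBot", "Google-Extended", "PerplexityBot", "Googlebot"],
    url="https://example.com/research/benchmarks",
)
for agent, allowed in access.items():
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Under this sample policy, Googlebot falls through to the wildcard group and stays allowed, which matches the goal of opting out of training while remaining indexable.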

What to track next

- Trigger rates and coverage by query class across all engines (informational vs commercial vs local)

- CTR deltas when AI answers display, with an eye on where clicks still flow (product, local, and transactional intent)

- Publisher program shifts and their inclusion/attribution norms

- Changes to link presentation (inline vs expandable panels) that alter click behavior

Conclusion

Generative AI has compressed attention into answer surfaces, shifting the game from pure rankings to presence and precision. Brands that win in 2025 will publish evidence‑dense, extractive‑friendly content; reinforce entity authority; and add an AI visibility measurement layer tied to business outcomes. If you need a lightweight way to track AI citations and visibility, you can evaluate Geneo alongside other monitoring options.

Revision log

- 2025-10-10: Initial publication with 2025 data (Google global rollout context; Pew behavior study; Semrush trigger rates; Perplexity Comet Plus; Microsoft Copilot Search overview).