Geneo vs Brandlight (2025): Tone-of-Voice Training & AI Visibility

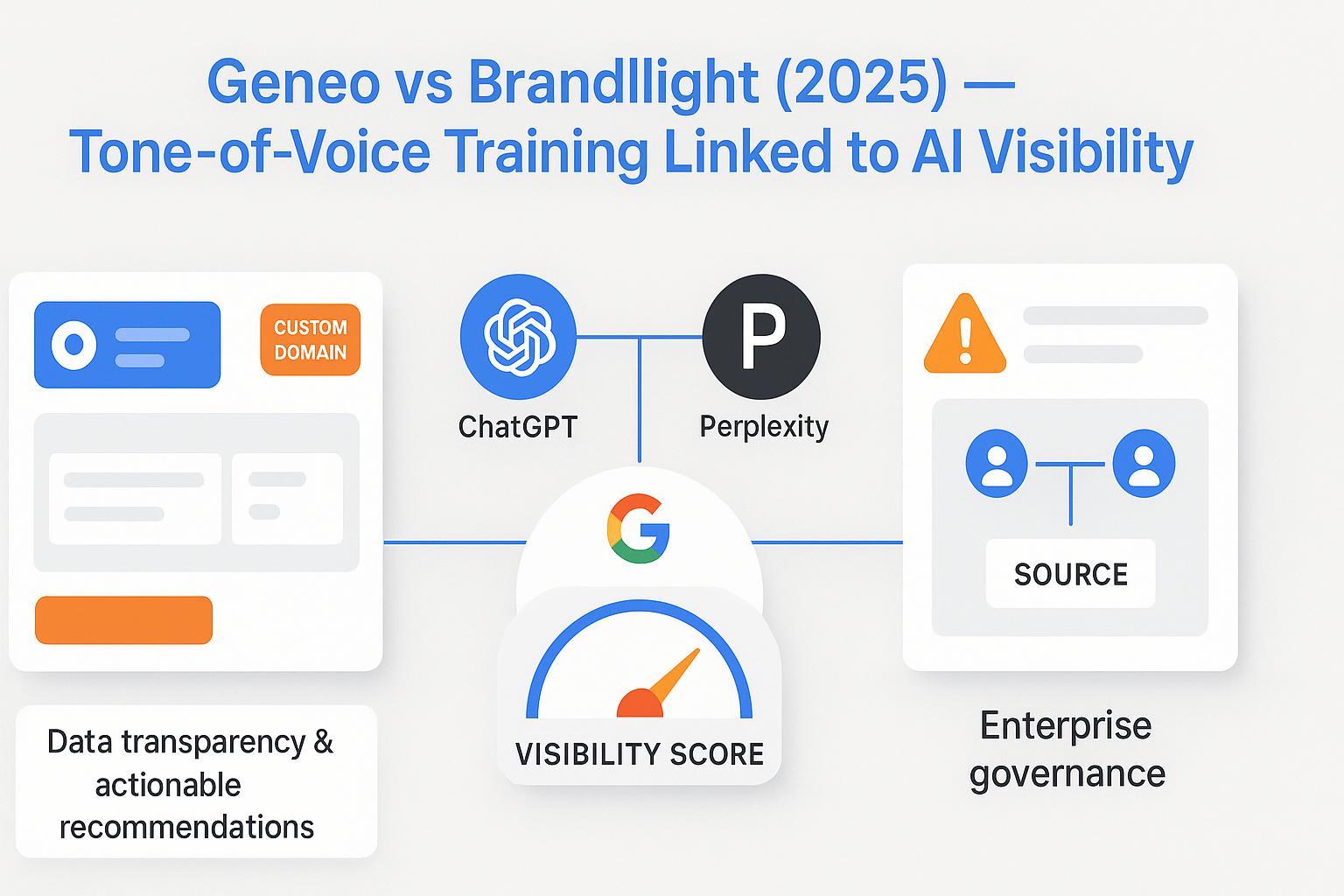

Compare Geneo vs Brandlight for 2025. See how each platform links tone-of-voice training to measurable AI visibility, covering engine coverage, reporting, and transparency.

If your team is trying to prove that tone‑of‑voice consistency actually moves the needle in AI‑generated answers, you’ve probably hit a wall. Across engines like ChatGPT, Perplexity, and Google AI Overviews, we found no public, empirical study in 2024–2025 that isolates tone consistency as a causal driver of inclusion or position. That doesn’t mean it has no effect—it means you need a controlled, evidence‑first workflow to test it.

Disclosure: Geneo is our product. This guide aims to stay neutral and supports every key claim with dated, official sources. For agency readers, the suggested next step is to click “Start Free Analysis” and instrument your own test on Geneo.

Who Each Platform Fits (at a Glance)

Geneo: Built for agencies that need white‑label dashboards, client‑ready reports, and transparent, actionable recommendations tied to a Brand Visibility Score. It covers ChatGPT, Perplexity, and Google AI Overviews and leans into Generative Engine Optimization (GEO) workflows with competitive analysis and CNAME branding. See the Agency page for white‑label specifics.

Brandlight: Positioned for enterprise CMOs/PR teams with broader claimed engine coverage, source attribution, sentiment/share‑of‑voice analytics, and governance‑style alerting. Official pages describe tracking across “11 top AI engines” and emphasize traceability and benchmarking. See Brandlight’s homepage and Solutions (updated 2025‑12‑18).

Side‑by‑Side Snapshot (as of late 2025)

| Dimension | Geneo | Brandlight |
|---|---|---|
| Engine coverage (official) | ChatGPT, Perplexity, and Google AI Overviews, as stated on the Geneo homepage and reinforced in the engine comparison blog post (2025‑08‑04; updated 2025‑12‑19). | “11 top AI engines,” including Google AI, Gemini, ChatGPT, and Perplexity, per Brandlight’s homepage and Solutions page (2025‑12‑18). The full list is not publicly enumerated. |
| Metric transparency | Brand Visibility Score with defined measurement fields (mentions, citations, sentiment, inclusion), documented across the Agency page and KPI posts (2025). Formula weights aren’t public, but the pillars and fields are. | Sentiment, share of voice, and source attribution are described on official pages. Public scoring formulas and per‑engine field lists aren’t published; the emphasis is on governance and traceability. |
| Actionability | Actionable recommendations for content improvements tied to visibility gaps; agency‑ready QBR narratives. KPI pillars are explained in the LLMO metrics post (2025‑08‑30; updated 2025‑12‑19). | Recommendations and a distribution/governance posture are referenced on the site and in SAT articles (e.g., predictive scoring for AI messaging, 2025‑12‑13). |
| Reporting & governance | White‑label reporting on a custom domain (CNAME), competitive benchmarking, and client‑facing dashboards; see the Agency page (2025‑12‑05 update). | Enterprise workflows with alerts, remediation, and source‑level traceability; SLA and workflow UI details are not fully public. See the Enterprise page (updated 2025‑12‑18). |
| Pricing posture | “Start free” motion with credits and short‑trial language in 2025 site content; no public tier table was captured during research. | No public self‑serve pricing; enterprise/white‑glove sales motion. |

Product Capsule: Geneo (Agency‑First GEO)

Specs

Coverage: ChatGPT, Perplexity, and Google AI Overviews, per official positioning on the homepage, reinforced in the engine comparison post (2025‑12‑19 update).

Reporting: White‑label dashboards on custom domains (CNAME) with client‑ready QBR modules; competitive analysis and branded exports via the Agency page (2025‑12‑05 update).

Metrics: A Brand Visibility Score consolidating mentions, citations, sentiment, and inclusion signals; KPI pillars are documented in the LLMO metrics post and the AI visibility explainer.

Evidence notes

Geneo publishes definitional posts for AI visibility and operational KPI pillars (2025). Score weightings aren’t public, but measurement fields and method intent are.

Pros

Transparent measurement fields and report‑ready narratives for agencies; actionable recommendations that map observed gaps to content changes.

White‑label/CNAME setup streamlines multi‑client delivery and differentiates agency offerings.

Who it’s for

Growth‑focused agencies that need repeatable QBRs, competitive benchmarks, and a way to link interventions (including voice alignment) to visibility trends across engines.

Constraints & Pricing‑as‑of

As of 2025‑12‑19: “Start free” posture; confirm credits and any tiering at onboarding. No external proof that tone consistency alone drives ranking; use controlled testing.

Product Capsule: Brandlight (Enterprise Visibility & Governance)

Specs

Coverage: Claimed tracking across “11 top AI engines” including Google AI, Gemini, ChatGPT, Perplexity — per homepage and Solutions (updated 2025‑12‑18). The complete engine list isn’t public in our sources.

Reporting/Governance: Sentiment, share‑of‑voice, source attribution, dashboards, and alerting/remediation workflows; see the Enterprise page and SAT articles such as the one on real‑time AI brand visibility (2025‑10‑31).

Metrics: Emphasis on traceability and governance; public scoring formulas and per‑engine field enumerations aren’t provided.

Evidence notes

Brandlight’s SAT subdomain discusses API‑enabled dashboards and governance templates; a public endpoint‑level API reference wasn’t retrieved.

Pros

Enterprise posture with source‑level traceability and alerting; sentiment/share‑of‑voice analytics for PR/brand teams.

Broad claimed engine coverage suitable for risk and reputation monitoring.

Cons

No public pricing; limited third‑party validation and incomplete public metric definitions.

Public API spec and full engine list not surfaced in our research.

Who it’s for

Enterprise brand, PR, and comms teams needing cross‑engine risk monitoring, attribution, and incident‑style workflows.

Constraints & Pricing‑as‑of

As of 2025‑12‑18: Enterprise sales motion without public pricing. No empirical proof that tone consistency alone drives ranking; run controlled studies and include null results.

How to Run a Controlled Tone‑of‑Voice Experiment (Works in Either Platform)

Here’s the deal: to judge whether voice alignment affects AI visibility, design a small, disciplined study.

Baseline (week 0)

For each engine (ChatGPT, Perplexity, Google AI Overviews), define 10–20 priority prompts your clients care about.

Capture: inclusion/position in answers; named brand mentions; citation/source mix; sentiment/tone descriptors; context (“recommended,” “consider,” etc.). Timestamp logs and screenshots.
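To keep the baseline auditable, it helps to log every capture in one fixed schema. Below is a minimal Python sketch, assuming you record observations yourself; the `Observation` class, its field names, and the engine labels are hypothetical and are not an export format of either platform.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Observation:
    """One captured answer for one prompt on one engine (hypothetical schema)."""
    engine: str                    # e.g. "chatgpt", "perplexity", "google_aio" (our own labels)
    prompt_id: str                 # your own ID for the priority prompt
    included: bool                 # did the brand appear in the answer at all?
    position: Optional[int]        # 1-based rank of the first brand mention; None if absent
    mentions: int                  # number of named brand mentions
    cited_domains: List[str] = field(default_factory=list)     # citation/source mix
    tone_descriptors: List[str] = field(default_factory=list)  # e.g. "authoritative"
    context_label: str = ""        # e.g. "recommended", "consider"
    screenshot_path: str = ""      # where the matching screenshot is stored
    captured_at: str = field(      # UTC timestamp, set automatically at capture time
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_csv(rows: List[Observation]) -> str:
    """Serialize observations to CSV so the log can be diffed and audited later."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(Observation)])
    writer.writeheader()
    for row in rows:
        record = asdict(row)
        # Flatten list fields into one pipe-separated cell each.
        record["cited_domains"] = "|".join(row.cited_domains)
        record["tone_descriptors"] = "|".join(row.tone_descriptors)
        writer.writerow(record)
    return buf.getvalue()
```

A flat CSV is a deliberate choice here: it diffs cleanly week to week and can be attached to a client report without extra tooling.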

Use background reading on AI visibility KPIs and engine differences to refine which fields you capture.

Intervention (weeks 1–2)

Select a cohort of pages for tone‑of‑voice alignment (e.g., clarity, authority cues, consistent descriptors) while keeping control pages unchanged.

Keep non‑voice variables stable (URLs, schema, facts). If they change, annotate precisely.
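Assigning pages to cohorts at random, rather than hand‑picking the pages you expect to improve, reduces selection bias. A minimal sketch, assuming you start from a list of candidate page URLs; `assign_cohorts` and its parameters are hypothetical.

```python
import random
from typing import Dict, Iterable, List


def assign_cohorts(page_urls: Iterable[str], treatment_share: float = 0.5,
                   seed: int = 2025) -> Dict[str, List[str]]:
    """Randomly split candidate pages into a treatment cohort (voice-aligned) and a control cohort.

    A fixed seed makes the split reproducible, so it can be re-derived during an audit.
    """
    if not 0.0 < treatment_share < 1.0:
        raise ValueError("treatment_share must be strictly between 0 and 1")
    pages = sorted(set(page_urls))   # dedupe and fix the order before shuffling
    rng = random.Random(seed)        # local RNG; leaves the global random state untouched
    rng.shuffle(pages)
    cut = round(len(pages) * treatment_share)
    return {"treatment": sorted(pages[:cut]), "control": sorted(pages[cut:])}
```

Store the seed and the resulting lists next to the baseline log, and annotate any page that later moves between cohorts.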

Observation window (weeks 2–4)

Log daily/weekly deltas: inclusion/position shifts, mention frequency, citation patterns, sentiment/tone descriptors.
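Week‑over‑week inclusion deltas per engine can be derived directly from the raw log. A sketch, assuming each observation has been reduced to an `(engine, capture_date, included)` tuple; the function name and tuple shape are illustrative, not part of either product.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Optional, Tuple

WeekRow = Tuple[str, float, Optional[float]]  # (ISO week label, inclusion rate, delta vs previous week)


def weekly_inclusion_deltas(
    observations: Iterable[Tuple[str, date, bool]]
) -> Dict[str, List[WeekRow]]:
    """Group observations by engine and ISO week, then compute week-over-week changes.

    Weeks with no observations are skipped, so each delta is relative to the
    previous *observed* week for that engine.
    """
    buckets: Dict[str, Dict[str, List[int]]] = defaultdict(lambda: defaultdict(list))
    for engine, captured_on, included in observations:
        iso_year, iso_week, _ = captured_on.isocalendar()
        buckets[engine][f"{iso_year}-W{iso_week:02d}"].append(1 if included else 0)

    result: Dict[str, List[WeekRow]] = {}
    for engine, weeks in buckets.items():
        series: List[WeekRow] = []
        prev: Optional[float] = None
        for label in sorted(weeks):  # zero-padded labels sort chronologically
            rate = sum(weeks[label]) / len(weeks[label])
            series.append((label, rate, None if prev is None else rate - prev))
            prev = rate
        result[engine] = series
    return result
```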

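Annotate external events (competitor updates, model releases). Where feasible, use difference‑in‑differences logic: compare the change on treated pages with the change on control pages over the same window, so shifts that hit both cohorts (such as a model release) largely cancel out.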
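The difference‑in‑differences estimate itself is simple arithmetic: the change in the treatment cohort's inclusion rate minus the change in the control cohort's rate. A minimal sketch, assuming each logged answer is tagged with a `group` ("treatment" or "control") and a `period` ("pre" or "post"); these keys and the helper names are hypothetical, and the numbers below are illustrative, not measured results.

```python
from typing import Dict, List


def inclusion_rate(records: List[Dict], group: str, period: str) -> float:
    """Share of answers in one group/period cell that included the brand."""
    cell = [r["included"] for r in records if r["group"] == group and r["period"] == period]
    if not cell:
        raise ValueError(f"no observations for {group}/{period}")
    return sum(cell) / len(cell)


def diff_in_diff(records: List[Dict]) -> float:
    """(treatment post - pre) minus (control post - pre), as a change in inclusion rate."""
    treated_change = inclusion_rate(records, "treatment", "post") - inclusion_rate(records, "treatment", "pre")
    control_change = inclusion_rate(records, "control", "post") - inclusion_rate(records, "control", "pre")
    return treated_change - control_change


def make_cell(group: str, period: str, included: int, total: int) -> List[Dict]:
    """Build `total` synthetic records, the first `included` of which mention the brand."""
    return [{"group": group, "period": period, "included": i < included} for i in range(total)]


# Illustrative numbers only: treatment goes 2/10 -> 5/10, control goes 2/10 -> 3/10.
records = (make_cell("treatment", "pre", 2, 10) + make_cell("treatment", "post", 5, 10)
           + make_cell("control", "pre", 2, 10) + make_cell("control", "post", 3, 10))
print(round(diff_in_diff(records), 2))
```

In this made‑up example the treated cohort gains 30 points and the control gains 10, so the estimate is 0.2; the 10‑point rise shared by both cohorts is attributed to background drift rather than to the voice changes.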

Analysis & reporting

Compute visibility deltas with confidence intervals, and report null or negative results alongside positive ones.
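One way to attach a confidence interval to a difference‑in‑differences delta is a percentile bootstrap: resample each cohort/period cell with replacement, recompute the estimate many times, and read off the middle 95%. This is a standard statistical technique swapped in for illustration, not a feature of either platform; the `cells` structure and the default parameters are assumptions.

```python
import random
from typing import Dict, List, Tuple

Cell = Tuple[str, str]  # (group, period), e.g. ("treatment", "post")
REQUIRED: List[Cell] = [("treatment", "pre"), ("treatment", "post"),
                        ("control", "pre"), ("control", "post")]


def _rate(outcomes: List[int]) -> float:
    return sum(outcomes) / len(outcomes)


def _did(cells: Dict[Cell, List[int]]) -> float:
    return ((_rate(cells[("treatment", "post")]) - _rate(cells[("treatment", "pre")]))
            - (_rate(cells[("control", "post")]) - _rate(cells[("control", "pre")])))


def bootstrap_did_ci(cells: Dict[Cell, List[int]], n_boot: int = 2000,
                     alpha: float = 0.05, seed: int = 7) -> Tuple[float, float, float]:
    """Return (point estimate, lower bound, upper bound) for the DiD in inclusion rates.

    `cells` maps each (group, period) to a list of 0/1 inclusion outcomes.
    Each cell is resampled independently, with replacement, at its original size.
    """
    for key in REQUIRED:
        if not cells.get(key):
            raise ValueError(f"missing or empty cell: {key}")
    rng = random.Random(seed)
    estimates = sorted(
        _did({key: rng.choices(outcomes, k=len(outcomes)) for key, outcomes in cells.items()})
        for _ in range(n_boot)
    )
    # Nearest-rank percentiles of the sorted bootstrap distribution.
    lower = estimates[round((alpha / 2) * (n_boot - 1))]
    upper = estimates[round((1 - alpha / 2) * (n_boot - 1))]
    return _did(cells), lower, upper
```

If the interval spans zero, report the result as null rather than as a small win. With only 10–20 prompts per engine, wide intervals are likely.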

Produce a white‑label QBR report that maps interventions to score changes, with caveats. If you use Geneo, the Brand Visibility Score can serve as a consistent, comparable metric for trend tracking.

This workflow is platform‑agnostic and directly addresses the pain point: you’ll have a time‑stamped, auditable record to discuss with clients—even if the effect is small or indirect.

Decision Checklist and Risks to Watch

Fit

Agency with many clients and QBR cadence → Geneo’s white‑label/CNAME, competitive benchmarks, and actionable guidance.

Enterprise PR/brand with governance/alerts needs → Brandlight’s traceability and incident‑style workflows.

Evidence

Neither platform nor independent sources prove tone‑of‑voice alone drives rankings. Treat it as a hypothesis; measure rigorously.

Transparency

Confirm which fields are logged per engine, export options, and how recommendations tie to observed gaps.

Operations

Ask about multi‑account management, annotation features, and how to track external events.

What to Do Next

If you’re an agency ready to run the controlled test above and need report‑ready dashboards, start by instrumenting prompts, baselines, and annotations. Then run a 2–4 week intervention window and package the findings.

Want to set this up quickly? Click “Start Free Analysis” to get a baseline on Geneo and spin up a white‑label client view. It’s not a promise that tone alone will move rankings, but it’s the fastest way to turn a hunch into evidence.