Geneo vs AEO/GEO Tools: Measure AI Answer Visibility 2026

Compare Geneo, Authoritas, Semrush, BrightEdge, seoClarity, Similarweb and DIY to find the best Answer Engine Optimization tools for measuring brand citations, share of answers, and white-label reporting in 2026.

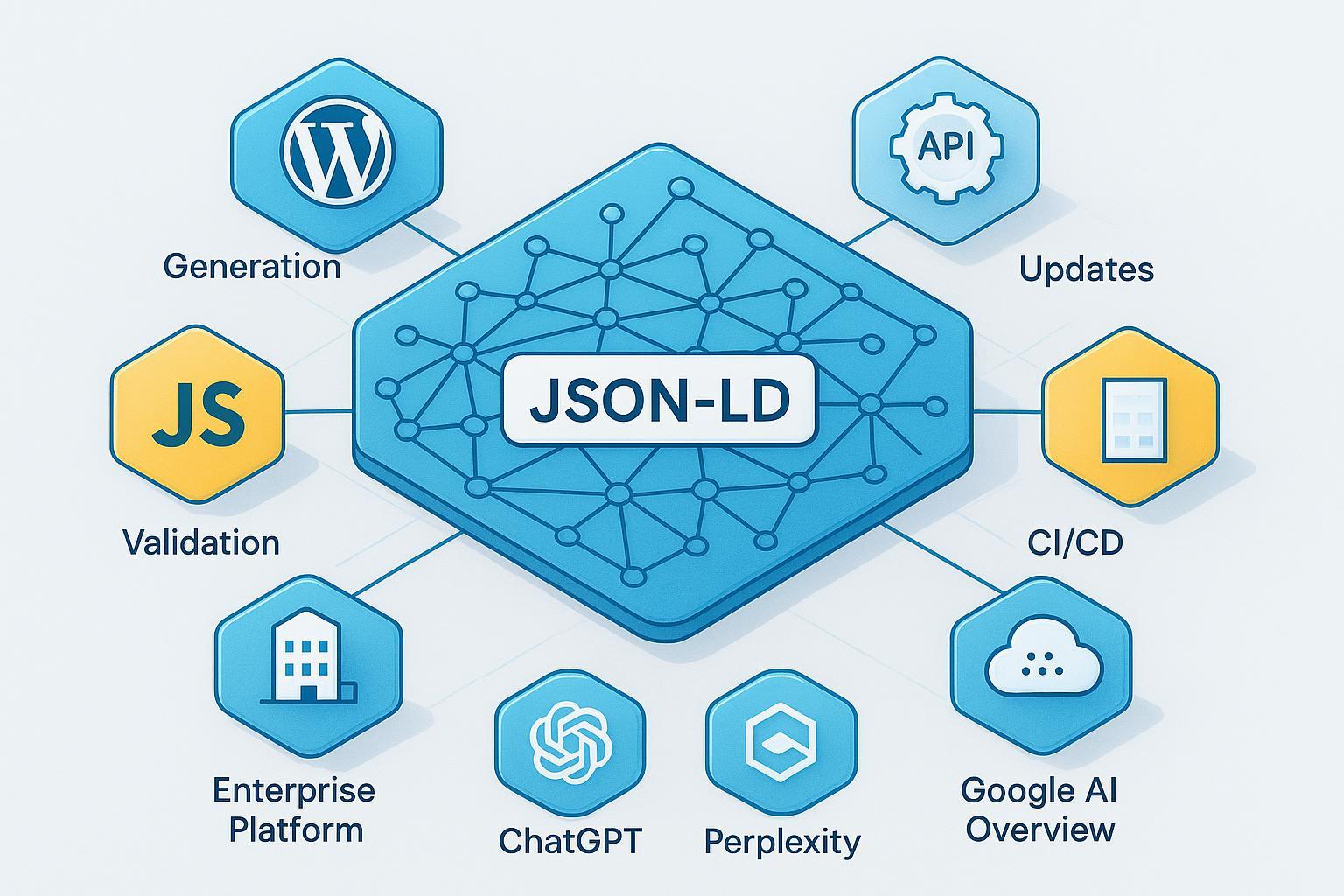

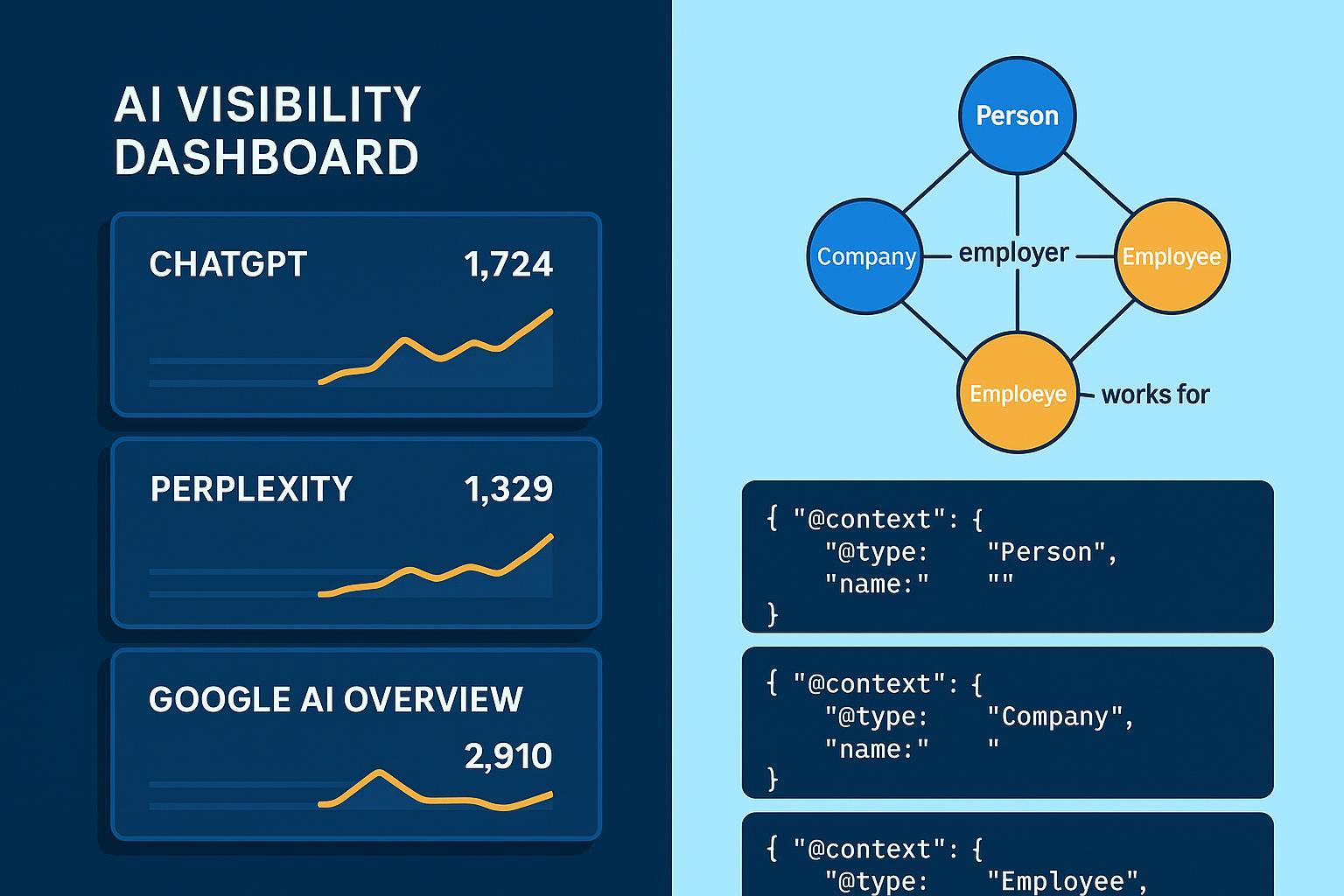

When AI engines summarize the web, most teams still ask a basic question: “Are we even visible inside those answers?” In 2026, the priority isn’t just rank—it’s whether your brand is cited, mentioned, or referenced inside ChatGPT, Perplexity, and Google’s AI Overview/AI Mode. This comparison focuses on the tools that help you measure brand exposure and citation share across engines, and on agency-ready white-label reporting.

If you need a fast primer on engine differences and measurement pitfalls, see the comparative guide to ChatGPT vs Perplexity vs Google AI Overview on Geneo’s blog: GEO comparison of AI engines.

Quick-scan comparison table (2026)

| Product | Cross-engine coverage | Measurement focus | White-label signal | Pricing (as of 2026-01) |
|---|---|---|---|---|
| Authoritas | ChatGPT, Perplexity, Gemini, Claude, Google AIO (public pages) | AI Share of Voice; citations/mentions | Looker Studio templating (branding via dashboards) | Sales-led (no public tiers) |
| BrightEdge | Google AI Overviews (research + platform) | Generative Parser-based analysis | Enterprise reporting; no explicit agency white-label emphasis | Sales-led enterprise |
| Geneo | ChatGPT, Perplexity, Google AI Overview | Brand Visibility Score; mentions/citations; competitive benchmarking | Native white-label: custom domain (CNAME), branded dashboards, multi-client workspaces | Free/Pro/Enterprise; credits-based (site indicates tiers) |
| Semrush | Google AI Mode + AI Overviews | AI Mode presence in Position Tracking; citation/visibility trends | In-suite reporting; agency white-label via workflows | Toolkit add-on on top of a paid plan (public materials show $99/month) |
| seoClarity (ArcAI) | Google AIO & AI Mode; chat engines referenced | AIO/AI Mode triggers; domain/URL citations; optimization insights | Enterprise dashboards; white-label not a core public pitch | Sales-led enterprise |
| Similarweb Rank Ranger | Google AI Overviews as a SERP feature | AIO presence, citations, competitive share over time | Agency-friendly white-label reports and scheduling | Similarweb bundle-based; Team plan widely cited at $14k/year |
| DIY (GA4 + GSC + scripts) | Indirect only (no native AI answer visibility) | Traffic proxies; no share-of-answers | Custom Looker Studio; indirect metrics | “Free” tools, but engineering/maintenance cost |

Last checked: 2026-01. Where pricing is sales-led or add-on based, confirm current packaging during procurement.

How we evaluated Answer Engine Optimization tools

We weighted measurement depth and cross-engine visibility most heavily, while also scoring practical delivery for agencies and enterprises. Criteria weights (summing to 1.0):

Measurement depth: 0.35

Cross-engine coverage: 0.20

Reporting/white-label: 0.15

Competitive intelligence: 0.15

Ops/integration: 0.10

Cost/complexity: 0.05
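To make the weighting concrete, here is a minimal Python sketch of how these weights combine into a single score. The 0-5 per-criterion scale, the key names, and the example scores are assumptions for illustration; they are not the scores assigned to any product in this comparison.

```python
import math

# Criteria weights from the methodology above (they sum to 1.0).
WEIGHTS = {
    "measurement_depth": 0.35,
    "cross_engine_coverage": 0.20,
    "reporting_white_label": 0.15,
    "competitive_intel": 0.15,
    "ops_integration": 0.10,
    "cost_complexity": 0.05,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-criterion scores (assumed 0-5 scale) into one weighted total."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())


# Hypothetical scores for an unnamed tool, for illustration only.
example = {
    "measurement_depth": 4,
    "cross_engine_coverage": 3,
    "reporting_white_label": 5,
    "competitive_intel": 3,
    "ops_integration": 2,
    "cost_complexity": 4,
}
print(round(weighted_score(example), 2))  # 3.6
```

Because measurement depth carries 0.35, a one-point gain there moves the total seven times as much as a one-point gain in cost/complexity (0.05).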

Authoritas

Coverage: Authoritas publicly highlights multi-engine tracking across ChatGPT, Perplexity, Gemini, Claude, and Google AI Overviews. See the platform’s AI brand visibility pages and updates, including its AI Share of Voice framing: Authoritas AI brand visibility tracking.

Measurement: The core lens is AI Share of Voice, meaning how often a brand appears and is cited across engines, with branded and unbranded views. Authoritas content also emphasizes real-time alerting and multi-engine presence.

Competitive intel: Authoritas surfaces the dominant brands per topic and supports persona/prompt sets for discovery.

Reporting/white-label: Public materials emphasize Looker Studio dashboard templates and combined AI+SEO reporting; native in-app white-label specifics aren’t prominently documented.

Integrations/APIs: References to BigQuery and Looker Studio indicate support for BI-style reporting.

Pricing (as of 2026-01): No public pricing table; sales-led.

Constraints/Evidence

White-label appears to rely primarily on Looker Studio branding rather than native in-app white-label.

Pricing undisclosed; evaluate total cost via sales quotes.

Sources: Authoritas site and blogs; last checked 2026-01.
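Authoritas doesn’t publish an exact formula for AI Share of Voice, so the sketch below assumes one simple definition: each sampled answer contributes at most one appearance per tracked brand, and a brand’s share is its appearances divided by all tracked-brand appearances. The input shape and the brand names are hypothetical, not a vendor export format.

```python
from collections import Counter
from typing import Iterable


def share_of_voice(answers: Iterable[set[str]]) -> dict[str, float]:
    """Share of tracked-brand appearances per brand across sampled answers.

    Each element of `answers` is the set of tracked brands that one AI answer
    mentions or cites (an assumed input shape).
    """
    counts: Counter[str] = Counter()
    for brands in answers:
        counts.update(brands)  # a set counts each brand at most once per answer
    total = sum(counts.values())
    if total == 0:
        return {}
    return {brand: n / total for brand, n in counts.items()}


# Four hypothetical answers; the last one mentions no tracked brand.
sampled = [{"acme", "globex"}, {"acme"}, {"initech"}, set()]
for brand, share in sorted(share_of_voice(sampled).items()):
    print(brand, share)
```

Here acme accounts for 2 of the 4 brand appearances, so its share is 0.5. Under this definition an answer that mentions no tracked brand adds nothing to the denominator; a variant that divides by the number of answers instead would report how often each brand appears at all.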

BrightEdge

Coverage: BrightEdge’s research references a proprietary Generative Parser used to analyze AI Overviews and the evolution of AI search results. See the official 2025 report: BrightEdge AI Overviews One Year Review (PDF).

Measurement: Parser-based detection of AI Overviews and analysis of their implications across industries and query types, backed by published research.

Competitive intel: Competitive insights are a historical strength (e.g., Data Cube), though public details on competitive dashboards specific to AI answers are thin.

Reporting/white-label: Enterprise reporting is robust; explicit agency-oriented white-label isn’t emphasized on public pages.

Integrations/APIs: Typical enterprise connectors; confirm details with sales.

Pricing (as of 2026-01): Sales-led enterprise.

Constraints/Evidence

Public product docs for live citation dashboards are limited; rely on demos.

Implementation can be resource-intensive for large organizations.

Source: BrightEdge research PDF; last checked 2026-01.

Geneo

Disclosure: Geneo is our product.

Coverage: Geneo focuses on cross-engine monitoring across ChatGPT, Perplexity, and Google AI Overview, tracking mentions, citations, and references. Public positioning for agencies is here: Geneo’s agency overview.

Measurement: Geneo exposes a Brand Visibility Score (0–100), plus counts for brand mentions, link visibility, and citations. It includes competitive benchmarking and sentiment views to help teams understand narrative accuracy.

Competitive intel: Benchmark your brand against named competitors inside AI answers and monitor shifts over time.

Reporting/white-label: Native white-label features include custom domains (CNAME), branded dashboards, multi-client workspaces, and scheduled exports designed for agency delivery. The agency page shows branding controls and client-ready reporting views.

Integrations/APIs: Public API/export documentation is limited on-site; if BI pipelines are required, evaluate needs during onboarding.

Pricing (as of 2026-01): Tiered (Free, Pro, Enterprise) with a credits-based model; confirm exact allowances and rates on purchase pages.

Constraints/Evidence

API/export specs are not extensively documented publicly; confirm prior to BI integration.

Credits-based pricing requires usage planning for high-volume sampling.

Source: Geneo site (agency page) and product positioning; last checked 2026-01.
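Because Geneo’s pricing is credits-based, a rough usage forecast helps before committing. The sketch below assumes one credit-consuming check per prompt, per engine, per run; `credits_per_check` and the 25% re-run buffer are placeholders, not published Geneo rates, so substitute the figures from the purchase page.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SamplingPlan:
    prompts: int       # size of the tracked prompt set
    engines: int       # e.g. ChatGPT, Perplexity, Google AI Overview -> 3
    runs_per_day: int  # repeated runs per prompt per engine per day
    days: int          # length of the sampling window

    def checks(self) -> int:
        """Total prompt-engine runs the plan will execute."""
        return self.prompts * self.engines * self.runs_per_day * self.days


def credits_needed(plan: SamplingPlan, credits_per_check: float, buffer: float = 0.25) -> int:
    """Estimate credits, padded by `buffer` for re-runs (both inputs are assumptions)."""
    return math.ceil(plan.checks() * credits_per_check * (1 + buffer))


# 80 prompts, 3 engines, one run per day, 14 days.
plan = SamplingPlan(prompts=80, engines=3, runs_per_day=1, days=14)
print(plan.checks())                                # 3360
print(credits_needed(plan, credits_per_check=1.0))  # 4200
```

Doubling `runs_per_day` to capture intra-day volatility doubles the bill, so it is worth deciding the sampling cadence before picking a tier.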

Semrush (AI Visibility Toolkit)

Coverage: Semrush added AI Mode as a selectable engine in Position Tracking and also tracks AI Overviews. See the platform’s news post: Semrush adds AI Mode in Position Tracking.

Measurement: Per-keyword monitoring of AI Mode presence and visibility; Semrush materials also show citation and trend views, and toolkit content discusses AI visibility indices.

Competitive intel: Competitive comparisons and gap identification within AI Overviews and AI Mode.

Reporting/white-label: Strong reporting inside the suite; agency white-label is typically achieved through workflows rather than native custom domains.

Integrations/APIs: Broad ecosystem integrations; toolkit-specific APIs are less visible in public docs.

Pricing (as of 2026-01): Public materials widely cite the AI Visibility Toolkit as a paid add-on (often noted as $99/month) on top of a Semrush subscription. Confirm packaging at purchase.

Constraints/Evidence

Add-on pricing stacks on top of the base plan; total cost depends on your subscription tier.

Cross-engine scope beyond Google varies by module.

Source: Semrush news/blogs; last checked 2026-01.

seoClarity (ArcAI)

Coverage: ArcAI covers Google AI Overviews and AI Mode, with references to chat platforms as well. See the official pages: seoClarity AI Overviews tracking and track Google AI Mode.

Measurement: Identifies AI Overview triggers and cited domains/URLs and monitors presence; ArcAI Insights prioritizes fixes across visibility, accuracy, and sentiment.

Competitive intel: Cross-engine comparisons and demand modeling help surface gaps and opportunities.

Reporting/white-label: Enterprise dashboards and action layers; white-label is not part of its core public positioning.

Integrations/APIs: Enterprise-grade integrations and security posture; validate export/API specifics in demos.

Pricing (as of 2026-01): Sales-led enterprise.

Constraints/Evidence

Validate how often, and with what latency, non-Google chat engines are covered.

White-label specifics for agencies are not emphasized.

Sources: seoClarity product pages and news; last checked 2026-01.

Similarweb Rank Ranger

Coverage: Rank Ranger tracks Google AI Overviews as a SERP feature, capturing presence, citations, and ownership over time. Similarweb’s broader Gen AI visibility offering adds prompt and citation views. See a public guide: Rank Ranger on Similarweb packaging.

Measurement: AIO presence rates, tracked citations, and competitive share across keyword sets, with correlation to traffic signals via Similarweb datasets where applicable.

Competitive intel: Time-series visibility and tracking of which competitors own AIO blocks enable practical gap analysis.

Reporting/white-label: Agency-friendly reporting with white-label options and automated scheduling inside the Similarweb/Rank Ranger environment.

Integrations/APIs: API access varies by Similarweb plan; Rank Ranger integrates into the broader SEO stack.

Pricing (as of 2026-01): Sales-led; the Similarweb Team plan is widely cited at $14k/year (confirm current bundles and add-ons).

Constraints/Evidence

Cross-engine visibility beyond Google AIO depends on add-ons.

Pricing and scope depend on enterprise bundles.

Source: RankRanger.com guide and Similarweb docs; last checked 2026-01.

DIY stack (GA4 + GSC + scripts)

Coverage: Google Search Console includes traffic from AI features such as AI Overviews in its Performance report, but folds it into overall Web search data, so you can’t isolate AI Overview clicks/impressions or see per-answer inclusion. See Google’s developer overview: Google’s AI features documentation.

Measurement: These tools provide indirect proxies only, with no direct measurement of share of answers or citation frequency at scale. Search Engine Land cautions against over-interpreting proxy data: Hard truths about measuring AI visibility (2026).

Competitive intel: Not available natively.

Reporting/white-label: Looker Studio dashboards are feasible, but they reflect traffic proxies rather than direct answer inclusion.

Integrations/APIs: Broad connectors exist, but they lack data fields for AI answers.

Pricing (as of 2026-01): Tooling is free; the real cost is analyst/engineering time and maintenance.

Constraints/Evidence

No AI-only segmentation in GSC; GA4 can’t distinguish AI Overview clicks from other Google organic clicks.

AI answers vary from run to run, which makes homegrown tracking scripts brittle.

Sources: Google developer docs; Search Engine Land analysis (2026-01); last checked 2026-01.
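For the DIY route, the closest proxy is counting referral visits from AI assistants, which still measures clicks rather than answer inclusion. The sketch below assumes you have exported a list of referrer URLs from your analytics; the hostname list is an assumption to verify against your own data. Google AI Overview clicks arrive as ordinary Google referrals, so they can’t be separated this way.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlparse

# Assumed referrer hostnames for AI assistants; verify against your own data.
AI_REFERRER_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer: str) -> Optional[str]:
    """Return the AI assistant label for a referrer URL, or None if not matched."""
    host = (urlparse(referrer).hostname or "").lower()
    for domain, label in AI_REFERRER_DOMAINS.items():
        # Match the exact host or a subdomain (www.perplexity.ai), not lookalikes.
        if host == domain or host.endswith("." + domain):
            return label
    return None


def ai_referral_counts(referrers: Iterable[str]) -> Counter:
    """Count referral visits per AI assistant; unmatched referrers are ignored."""
    counts: Counter = Counter()
    for referrer in referrers:
        label = classify_referrer(referrer)
        if label is not None:
            counts[label] += 1
    return counts


visits = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=best+crm",
    "https://www.google.com/",
    "",
]
print(ai_referral_counts(visits))  # Counter({'ChatGPT': 1, 'Perplexity': 1})
```

The suffix check with a leading dot is deliberate: a plain `endswith("chatgpt.com")` would also match an unrelated host such as `notchatgpt.com`.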

Choosing Answer Engine Optimization tools: scenario-based recommendations

Best for agencies managing many clients

Geneo: Native white-label (custom domains, branded dashboards) and multi-client workspaces make recurring client delivery straightforward.

Similarweb Rank Ranger: Mature white-label reporting and scheduling; strong for Google AIO-centric programs.

Best for enterprise SEO operations

BrightEdge: Enterprise analytics depth and parser-based measurement; aligns with centralized SEO programs.

seoClarity (ArcAI): Enterprise dashboards and an insights layer, with governance controls.

Similarweb: Broad data ecosystem and APIs when bundled at enterprise tiers.

Best for rank-tracking-centric workflows

Similarweb Rank Ranger: AIO tracked as a SERP feature with competitive ownership and trends.

Best for teams standardized on Semrush

Semrush: Pragmatic add-on for AI Mode/AIO visibility alongside existing suite workflows.

Baseline/traffic attribution only

DIY (GA4 + GSC): Useful for traffic context; insufficient for measuring share-of-answers or per-answer citations.

How to choose, practically

Anchor on the measurement gap: Do you need per-answer citations, mentions, and share-of-answers across multiple engines, or only Google AIO visibility? Choose Answer Engine Optimization tools that can show inclusion inside answers, not just rank proxies.

Confirm agency delivery: If you report to many clients, look for native white-label (custom domains, branded dashboards, scheduled exports) rather than stitched-together workflows. Several Answer Engine Optimization tools claim white-label; verify whether it’s in-app branding or external dashboards.

Validate evidence: Ask vendors for live examples of citation capture and volatility handling; request last-checked dates and retention windows.

Test cross-engine stability: Run a fixed set of 60–100 prompts repeatedly over 14 days on ChatGPT, Perplexity, and Google AI Overview, then compare presence rates and citation frequency per engine.

Plan integrations: If BI pipelines matter, confirm APIs/exports and governance before procurement.

Model costs: For add-ons or credits, forecast sampling volume and reporting cadence to avoid surprises.
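The 14-day sampling test reduces to two per-engine rates. The sketch below assumes each run records whether the brand name appears in the answer text (a mention) and whether a brand-owned URL appears among the answer’s sources (a citation); the `Run` structure, the naive substring match, and the example brand and domain are hypothetical, not any vendor’s method.

```python
from collections import defaultdict
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Run:
    engine: str      # e.g. "chatgpt", "perplexity", "google_aio"
    prompt_id: str
    day: int
    mentioned: bool  # brand name appears in the answer text
    cited: bool      # a brand-owned URL appears among the answer's sources


def is_brand_url(url: str, domain: str) -> bool:
    """True if the URL's host is the brand domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def make_run(engine: str, prompt_id: str, day: int, answer_text: str,
             source_urls: list[str], brand: str, domain: str) -> Run:
    """Build a Run with a naive case-insensitive mention check."""
    mentioned = brand.lower() in answer_text.lower()
    cited = any(is_brand_url(url, domain) for url in source_urls)
    return Run(engine, prompt_id, day, mentioned, cited)


def engine_metrics(runs: list[Run]) -> dict[str, dict[str, float]]:
    """Presence rate (mentioned or cited) and citation rate per engine."""
    totals: dict[str, int] = defaultdict(int)
    present: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for run in runs:
        totals[run.engine] += 1
        if run.mentioned or run.cited:
            present[run.engine] += 1
        if run.cited:
            cited[run.engine] += 1
    return {
        engine: {
            "presence_rate": present[engine] / n,
            "citation_rate": cited[engine] / n,
        }
        for engine, n in totals.items()
    }


runs = [
    make_run("perplexity", "p1", 1, "Acme and Globex both fit.",
             ["https://docs.acme.com/guide"], "Acme", "acme.com"),
    make_run("perplexity", "p1", 2, "Globex is the usual pick.",
             ["https://globex.com/"], "Acme", "acme.com"),
    make_run("chatgpt", "p1", 1, "Try Acme.", [], "Acme", "acme.com"),
]
print(engine_metrics(runs))
```

Substring matching over-counts brands whose names are common words; a real test would likely need word-boundary matching and an alias list per brand.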

For KPI context on visibility, sentiment, and conversion in AI search, see AI Search KPI frameworks (2025).

Methods and sources

Criteria weights: Measurement depth (0.35), Cross-engine coverage (0.20), Reporting/white-label (0.15), Competitive intel (0.15), Ops/integration (0.10), Cost/complexity (0.05).

Volatility note: AI answers are non-deterministic; assess visibility via repeated sampling and time-series views.

Evidence links: Vendor pages and recognized industry publications (checked 2026-01). Link density is kept low, favoring canonical sources.
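To act on the volatility note, one option (an assumption here, not a documented vendor method) is to report each presence rate with a confidence interval rather than as a single number. The sketch below uses the Wilson score interval for a binomial proportion and treats each sampled run as an independent yes/no trial, which is itself a simplification because repeated runs of the same prompt are correlated.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95% coverage)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical: the brand appeared in 42 of 80 sampled runs on one engine.
low, high = wilson_interval(42, 80)
print(f"presence 52.5%, 95% CI {low:.1%} to {high:.1%}")
```

With 80 runs, a 52.5% presence rate comes with an interval of roughly 42% to 63%, so day-to-day swings inside that band are weak evidence of real change; the interval narrows as the number of sampled runs grows.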

Note for agencies: If native white-label with custom domains and multi-client workspaces is central to your workflow, Geneo is designed for that scenario; evaluate it alongside Similarweb Rank Ranger to match your engine coverage and budget.

Visit product sites for current capabilities, packaging, and pricing. Confirm scope during demos and run a short sampling test before rollout.