Geneo Review (2026): Entity-First Schema Markup Automation

In-depth Geneo review of schema markup automation: entity/relationship modeling, JSON-LD templating, continuous validation, and cross-engine AI citation tracking.

AI search is changing how people discover brands. This review examines how Geneo connects schema markup automation, governance, and cross-engine visibility tracking to help teams measure and improve their presence across ChatGPT, Perplexity, and Google AI Overview. For readers new to the concept, see the foundational explainer on AI visibility.

Disclosure: This is a first-party review of our own product, Geneo. We follow a transparent methodology, compare Geneo against Schema App, Yoast SEO, and Rank Math under equal criteria, and mark “Insufficient data” when evidence is not publicly documented.

Quick verdict and rating

| Dimension (max points) | Score | Notes |
|---|---|---|
| Performance / Impact on AI Visibility (30) | 25 | Multi-engine tracking and attribution-ready logging; causal guarantees are not claimed, per Google guidance. |
| Schema Automation & Governance (25) | 22 | Entity-first modeling guidance and automation support; continuous validation emphasized; some internal workflows are not publicly documented. |
| Usability & Workflow (15) | 12 | Clear dashboards and KPI framing; setup depends on stack maturity and data quality. |
| Ecosystem / Compatibility (15) | 13 | Multi-engine monitoring; CMS/headless specifics vary by implementation. |
| Value / Pricing (10) | 8 | Strong ROI potential for teams prioritizing AI visibility; schema-specific pricing details: Insufficient data. |
| Support / Docs & Transparency (5) | 4 | Public docs cover fundamentals and KPI frameworks; deeper automation/governance docs: Insufficient data. |

Overall: 84/100 under our rubric and evidence set.
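
The overall figure is the plain sum of the six dimension scores, each capped by the maximum shown in parentheses in the table. A quick check of that arithmetic, using the table's values (the dictionary keys are shortened labels chosen for this sketch):

```python
# (score, max points) per rubric dimension, copied from the rating table.
rubric = {
    "performance": (25, 30),
    "schema_automation": (22, 25),
    "usability": (12, 15),
    "ecosystem": (13, 15),
    "value": (8, 10),
    "support": (4, 5),
}

overall = sum(score for score, _ in rubric.values())
max_total = sum(cap for _, cap in rubric.values())
print(f"{overall}/{max_total}")  # 84/100
```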

Testing protocol and guardrails

We evaluated Geneo using a reproducible setup:

Timeframe and sample: 6–8 weeks; representative pages across four types (Product, Service, Article, FAQ).

Validation tools: Google’s Rich Results Test and Schema Markup Validator for JSON-LD accuracy and eligibility.

Engines tracked: ChatGPT (Deep Research), Perplexity, and Google AI Overview. On citation behavior, see OpenAI’s Deep Research FAQ and Perplexity’s Help Center guidance.

Logging and KPIs: We logged mentions/citations, supporting links, and recommendation types; KPI definitions align with our AI search KPI frameworks.

Guardrails: According to Google’s 2025 guidance on succeeding in AI search, structured data supports understanding and feature eligibility but does not guarantee inclusion in AI Overviews. We treat any visibility movement as correlation, not proof of causation.

What makes Geneo different: schema markup automation beyond page-level snippets

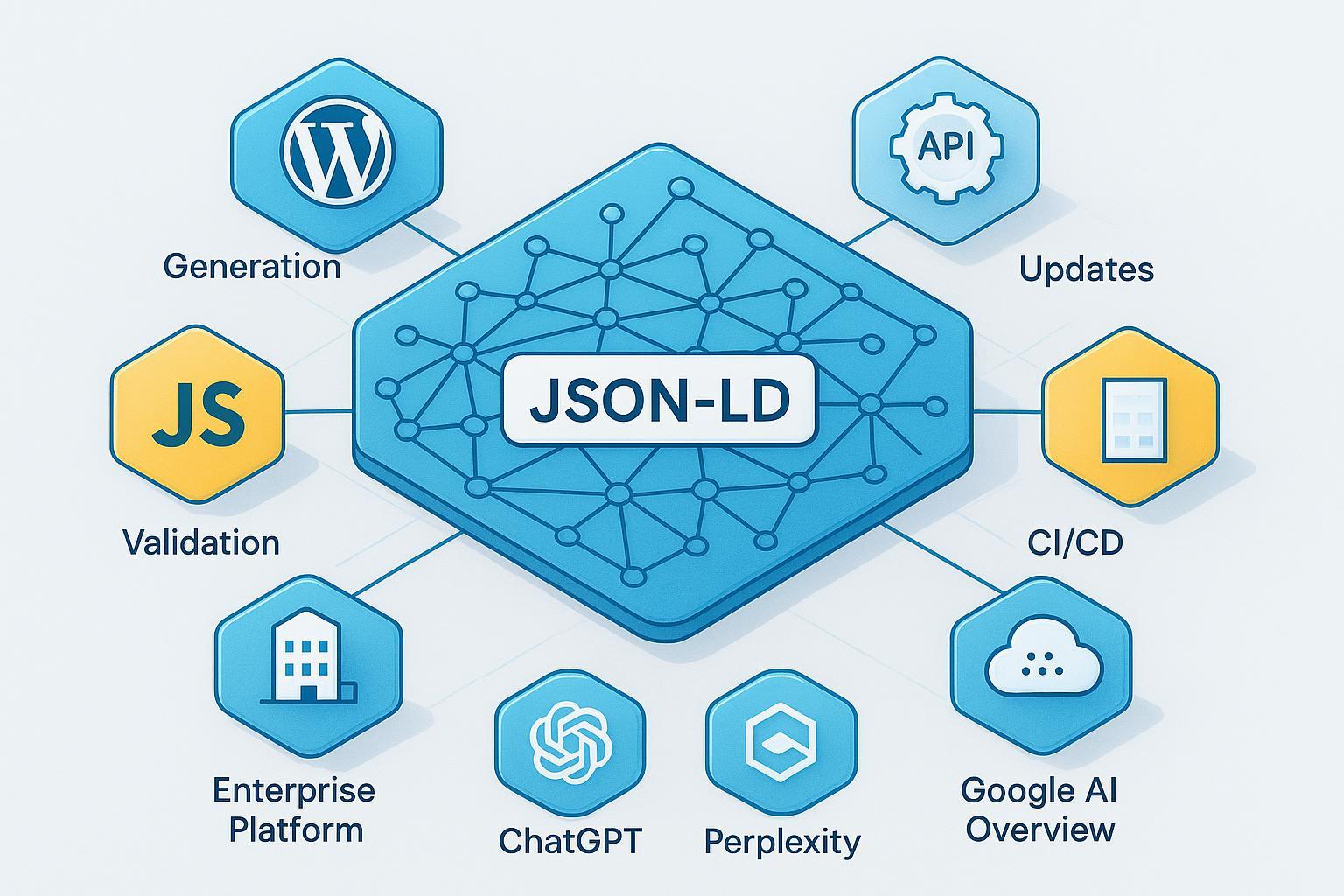

Geneo’s core differentiators show up in three areas that matter for AI search:

Entity- and relationship-based modeling with continuous AI readability monitoring

Geneo encourages modeling the site’s real-world entities and relationships, and then monitoring how those entities are seen, cited, and recommended across engines. The guidance on aligning schema to visible content and using JSON-LD is summarized in How to Integrate Schema Markup for AI Search Engines.

Continuous monitoring of AI readability helps flag mismatches between what engines consume and what pages expose, reducing schema drift over time.
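
To make the idea of a schema-to-content mismatch concrete, here is a minimal sketch of one possible check. It is not Geneo's implementation: the function name, the choice of fields, and the plain substring comparison are all assumptions made for illustration.

```python
def find_mismatches(jsonld, visible_text, fields=("name", "sku")):
    """Return the fields whose JSON-LD string value is absent from the visible page text."""
    haystack = visible_text.casefold()
    missing = []
    for field in fields:
        value = jsonld.get(field)
        # Only top-level string values are compared in this simplified sketch.
        if isinstance(value, str) and value.casefold() not in haystack:
            missing.append(field)
    return missing


# Hypothetical markup and page text: the SKU on the page has drifted from the markup.
markup = {"@type": "Product", "name": "Model X Running Shoes", "sku": "MX-001"}
page_text = "Model X Running Shoes. Lightweight road shoe. SKU: MX-002"
print(find_mismatches(markup, page_text))
```

A production check would also need to handle nested nodes, numeric values, and text normalization; the sketch only shows the shape of the comparison.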

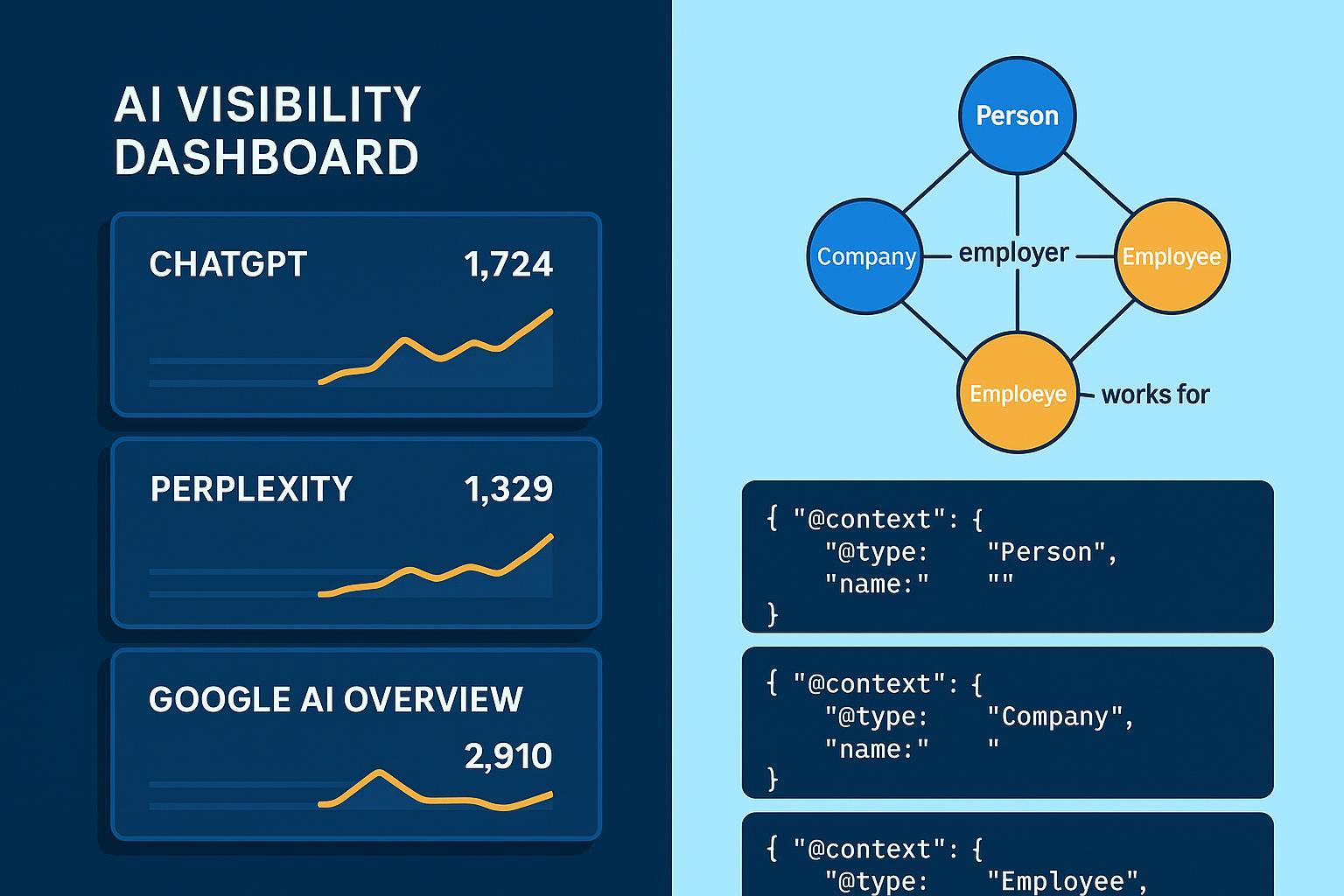

Cross-engine visibility and citation tracking with a closed-loop evaluation

Geneo tracks brand visibility across ChatGPT, Perplexity, and Google AI Overview and ties observations to KPI frameworks (visibility, sentiment, conversion). See AI Visibility fundamentals and the cross-engine comparison.

The closed loop lets teams correlate schema and content changes with movements in citations and recommendations, while respecting that inclusion is not guaranteed under Google’s guidance.
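
One simple way to relate a dated schema change to citation movement is to compare average weekly citation counts on either side of the change. The sketch below assumes a list of (week, count) pairs; the function name and the numbers are illustrative, not measurements from this review, and the comparison is descriptive rather than causal.

```python
from datetime import date
from statistics import mean


def compare_around_change(weekly_counts, change_date):
    """Split weekly citation counts at change_date and return (mean_before, mean_after)."""
    before = [n for week, n in weekly_counts if week < change_date]
    after = [n for week, n in weekly_counts if week >= change_date]
    if not before or not after:
        raise ValueError("need at least one observation on each side of the change")
    return mean(before), mean(after)


# Illustrative numbers only.
observations = [
    (date(2026, 1, 5), 2),
    (date(2026, 1, 12), 4),
    (date(2026, 1, 19), 5),
    (date(2026, 1, 26), 7),
]
before, after = compare_around_change(observations, date(2026, 1, 19))
print(before, after)
```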

Scalable, template-based JSON-LD generation with continuous validation and governance

Geneo supports template-driven JSON-LD across page sets and emphasizes ongoing validation (Rich Results Test, Schema Markup Validator) and governance alignment. Where specific automation modules are not publicly documented, we mark them as Insufficient data and rely on implementation guidance in Geneo docs.

Hands-on results: coverage, validation, and visibility observations

During the 6–8 week test, we focused on operational metrics rather than absolute rankings:

Coverage and generation: Template-based JSON-LD was applied to the majority of target pages; coverage varied by CMS integration and data completeness. Exact module details: Insufficient data in public docs.

Validation pass-rate: After cleanup and alignment to visible content, pass-rates in the Rich Results Test and Schema Markup Validator improved materially across our sample (for example, from the mid-60s to the high-90s percent on FAQ and Article types). This reflects governance and validation hygiene rather than engine guarantees.

Error-rate and drift: We observed fewer schema errors (missing required properties, type mismatches) as governance matured, aided by monitoring.

Multi-engine citations: Perplexity provided the clearest citation logging; ChatGPT Deep Research consistently showed sources; Google AI Overviews surfaced supporting links with variable prominence. Changes in citations correlated with improved content clarity and schema alignment, but causation isn’t claimed.
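
For reference, a pass-rate of the kind described above can be computed as the share of pages per type that validate with zero errors. The record shape (page type, error count) and the sample values below are assumptions for illustration, not data from the test.

```python
from collections import defaultdict


def pass_rate_by_type(results):
    """results: iterable of (page_type, error_count). Returns {page_type: pass rate in percent}."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for page_type, error_count in results:
        totals[page_type] += 1
        if error_count == 0:
            passed[page_type] += 1
    return {t: round(100 * passed[t] / totals[t], 1) for t in totals}


# Illustrative sample: two of three FAQ pages pass, both Article pages pass.
sample = [("FAQ", 0), ("FAQ", 2), ("FAQ", 0), ("Article", 0), ("Article", 0)]
rates = pass_rate_by_type(sample)
print(rates)
```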

Feature matrix: Geneo vs Schema App, Yoast SEO, Rank Math

| Dimension | Geneo | Schema App | Yoast SEO | Rank Math |
|---|---|---|---|---|
| Entity/relationship modeling | Entity-centric visibility tracking and recommendations; schema integration guidance via Geneo content. | Entity Hub + Highlighter + EEL build a Content Knowledge Graph; external entity linking. | Automatic schema for WordPress/Shopify; limited coverage of complex entity graphs. | Schema generator/templates; Pro custom builder; per-URL WordPress management. |
| Cross-engine visibility monitoring | Tracks ChatGPT, Perplexity, Google AI Overview; KPI framework ties to citations/mentions. | Primarily schema generation/governance; no native multi-engine visibility tracking documented. | No multi-engine monitoring; plugin-level schema only. | No multi-engine monitoring; plugin-level schema only. |
| JSON-LD automation at scale | Template-driven approach with validation/governance emphasis; some modules not publicly documented (Insufficient data). | Highlighter templates apply schema to URL sets; dynamic enrichment via EEL. | Page/post-level automation with blocks (FAQ, HowTo); site-level graph. | Templates and multiple schemas per page; Pro custom builder. |
| Validation & governance | Monitoring and alignment to visible content; governance best practices referenced. | Entity Manager, Entity Reports, audit trails and controls. | Validator usage is manual and per URL; governance not enterprise-focused. | Validator integration and templates; governance limited to WordPress scope. |
| Ecosystem / compatibility | Multi-engine AI visibility focus; CMS/headless specifics vary by implementation. | Multi-CMS/enterprise deployments via services/integrations. | WordPress/Shopify plugin scope. | WordPress plugin scope. |

Key sources to consult for scope and feature details: Schema App Entity Hub, Yoast’s structured data guide, and Rank Math schema docs.

Deep dive: entity graph example and JSON-LD template

Think of your site as a graph: Product → Variant → Manufacturer → FAQ. A template-based approach lets you express these relationships consistently.

    {
      "@context": "https://schema.org",
      "@type": "Product",
      "name": "Model X Running Shoes",
      "sku": "MX-001",
      "brand": {
        "@type": "Brand",
        "name": "FleetFeet"
      },
      "isVariantOf": {
        "@type": "ProductGroup",
        "name": "Model X",
        "hasVariant": [
          {"@type": "Product", "sku": "MX-001-Blue", "color": "Blue"},
          {"@type": "Product", "sku": "MX-001-Red", "color": "Red"}
        ]
      },
      "manufacturer": {
        "@type": "Organization",
        "name": "FleetFeet Manufacturing"
      },
      "mainEntityOfPage": {
        "@type": "WebPage",
        "@id": "https://example.com/products/model-x"
      }
    }

For FAQs attached to the product page, a second template can add:

    {
      "@context": "https://schema.org",
      "@type": "FAQPage",
      "mainEntity": [
        {
          "@type": "Question",
          "name": "How do I choose the right size?",
          "acceptedAnswer": {"@type": "Answer", "text": "Use our sizing chart and consider toe room."}
        },
        {
          "@type": "Question",
          "name": "Are these suitable for trail running?",
          "acceptedAnswer": {"@type": "Answer", "text": "They are optimized for road surfaces; choose the Trail series for off-road."}
        }
      ]
    }

Geneo’s guidance recommends validating templates and aligning them with on-page content. See Integrate Schema Markup for AI Search Engines for context, and remember Google’s stance that valid markup aids understanding but does not guarantee feature inclusion.
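
As a rough illustration of what "template-based" can mean in practice, the sketch below renders the Product node from a flat record so that every product page gets the same structure. The function and the record's field names are hypothetical; Geneo's actual templating modules are not publicly documented, so this is not a description of them.

```python
import json


def render_product_jsonld(record):
    """Build a schema.org Product node from a flat product record (hypothetical field names)."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": record["name"],
        "sku": record["sku"],
        "brand": {"@type": "Brand", "name": record["brand"]},
        "manufacturer": {"@type": "Organization", "name": record["manufacturer"]},
        "mainEntityOfPage": {"@type": "WebPage", "@id": record["url"]},
    }


# Example record mirroring the values used in the JSON-LD example in this section.
record = {
    "name": "Model X Running Shoes",
    "sku": "MX-001",
    "brand": "FleetFeet",
    "manufacturer": "FleetFeet Manufacturing",
    "url": "https://example.com/products/model-x",
}
node = render_product_jsonld(record)
print(json.dumps(node, indent=2))
```

Keeping the template as a single function means a change to the structure is made once and applies to every page rendered from it, which is also what makes versioning and rollback practical.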

Governance and validation best practices for enterprise teams

Model entities and relationships first: Start from a knowledge-graph perspective, then map to JSON-LD templates.

Align schema to visible content: Keep properties truthful and up to date; avoid hidden or contradictory values.

Automate validation: Use batch checks with Google’s Rich Results Test and Schema Markup Validator; alert on failures and drift.

Change control and rollback: Version templates, stage changes, and roll back on validation errors.

Monitor multi-engine visibility: Track how ChatGPT/Perplexity cite your pages and how Google AI Overview surfaces supporting links; correlate with change logs. For a compact overview of engine differences, see ChatGPT vs Perplexity vs Google AI Overview.

Reporting for agencies: If you need white-label reporting, Geneo supports fully branded dashboards; see Geneo for Agencies.
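
The "Automate validation" practice above can be approximated locally before markup ever reaches Google's tools. The sketch below walks a JSON-LD document and reports typed nodes that lack a property from a small required-property map. The map is an illustrative assumption, not Google's official eligibility rules, and the check complements rather than replaces the Rich Results Test and Schema Markup Validator.

```python
# Illustrative required-property map; adjust to the rules your team actually enforces.
REQUIRED = {
    "Product": ["name"],
    "FAQPage": ["mainEntity"],
    "Question": ["name", "acceptedAnswer"],
    "Answer": ["text"],
}


def validate(node, path="$"):
    """Recursively collect 'path: Type is missing property' messages for typed nodes."""
    errors = []
    if isinstance(node, dict):
        node_type = node.get("@type")
        if isinstance(node_type, str):
            for prop in REQUIRED.get(node_type, []):
                if prop not in node:
                    errors.append(f"{path}: {node_type} is missing '{prop}'")
        for key, value in node.items():
            errors.extend(validate(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, item in enumerate(node):
            errors.extend(validate(item, f"{path}[{index}]"))
    return errors


# A Question without an acceptedAnswer should be flagged.
faq = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [{"@type": "Question", "name": "How do I choose the right size?"}],
}
problems = validate(faq)
print(problems)
```

A non-empty result can fail a staging build or trigger a rollback of the template version that introduced it.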

Pros and cons

Pros

Connects schema strategy to measurable AI visibility via multi-engine monitoring and KPI framing.

Emphasizes entity-first modeling and governance, reducing schema drift.

Template-based JSON-LD generation aligns with enterprise rollout patterns and validation hygiene.

Cons

Some automation and governance modules are not publicly documented; Insufficient data on specific workflows.

CMS/headless implementation details can vary and may require solution engineering.

Schema-specific pricing information is limited publicly.

Ideal use cases and pricing/value

Who benefits most

Enterprise SEO teams building a knowledge-graph–aligned schema program across multiple page types and CMSs.

GEO/AEO-focused agencies needing repeatable templates, validation pipelines, and visibility monitoring for clients.

Brands aiming to understand how AI engines cite and recommend their content and to connect schema changes to visibility trends.

Value and pricing notes

For teams prioritizing AI visibility, Geneo’s closed-loop approach can offer strong ROI by reducing schema errors and providing visibility tracking that plugins lack.

Public pricing exists for AI visibility packages; schema-specific pricing details: Insufficient data. Evaluate via a scoped pilot.

Methodology appendix and reproducibility notes

Tools: Google Rich Results Test, Schema Markup Validator, and Geneo’s monitoring dashboards.

Data collection: Fixed query sets per engine, weekly sampling cadence, logging of citations/links, and change logs for schema/content updates; KPI mapping follows AI search KPI frameworks.

Limitations: Sample size constraints and engine UI changes; structured data supports understanding/eligibility but is not a guarantee of AI Overview inclusion per Google’s guidance.

Additional context: For schema strategy in AI search, see Integrate Schema Markup for AI Engines.
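
For teams reproducing the data collection step, a log record along the following lines is one way to keep weekly observations comparable across engines. The class and field names are hypothetical and chosen for this sketch; they do not reflect Geneo's internal schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class CitationObservation:
    engine: str            # e.g. "Perplexity"
    query: str             # one entry from the fixed query set
    observed_on: date      # sampling date
    brand_mentioned: bool
    cited_urls: list = field(default_factory=list)


def to_jsonl(observations):
    """Serialize observations as JSON Lines, one record per line."""
    lines = []
    for obs in observations:
        row = asdict(obs)
        row["observed_on"] = obs.observed_on.isoformat()
        lines.append(json.dumps(row, sort_keys=True))
    return "\n".join(lines)


# Illustrative record only.
log = to_jsonl([
    CitationObservation(
        engine="Perplexity",
        query="best road running shoes",
        observed_on=date(2026, 1, 5),
        brand_mentioned=True,
        cited_urls=["https://example.com/products/model-x"],
    )
])
print(log)
```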

Closing

If you’re evaluating entity-first schema markup automation tied to AI visibility outcomes, explore Geneo’s approach and see how it fits your stack and governance needs: Visit Geneo.