How to Fix AI Overviews Misattribution: Step-by-Step

A step-by-step guide to reducing competitor misattributions in Google AI Overviews, covering entity inventory, canonical mapping, schema, off-site corroboration, and a KPI formula.

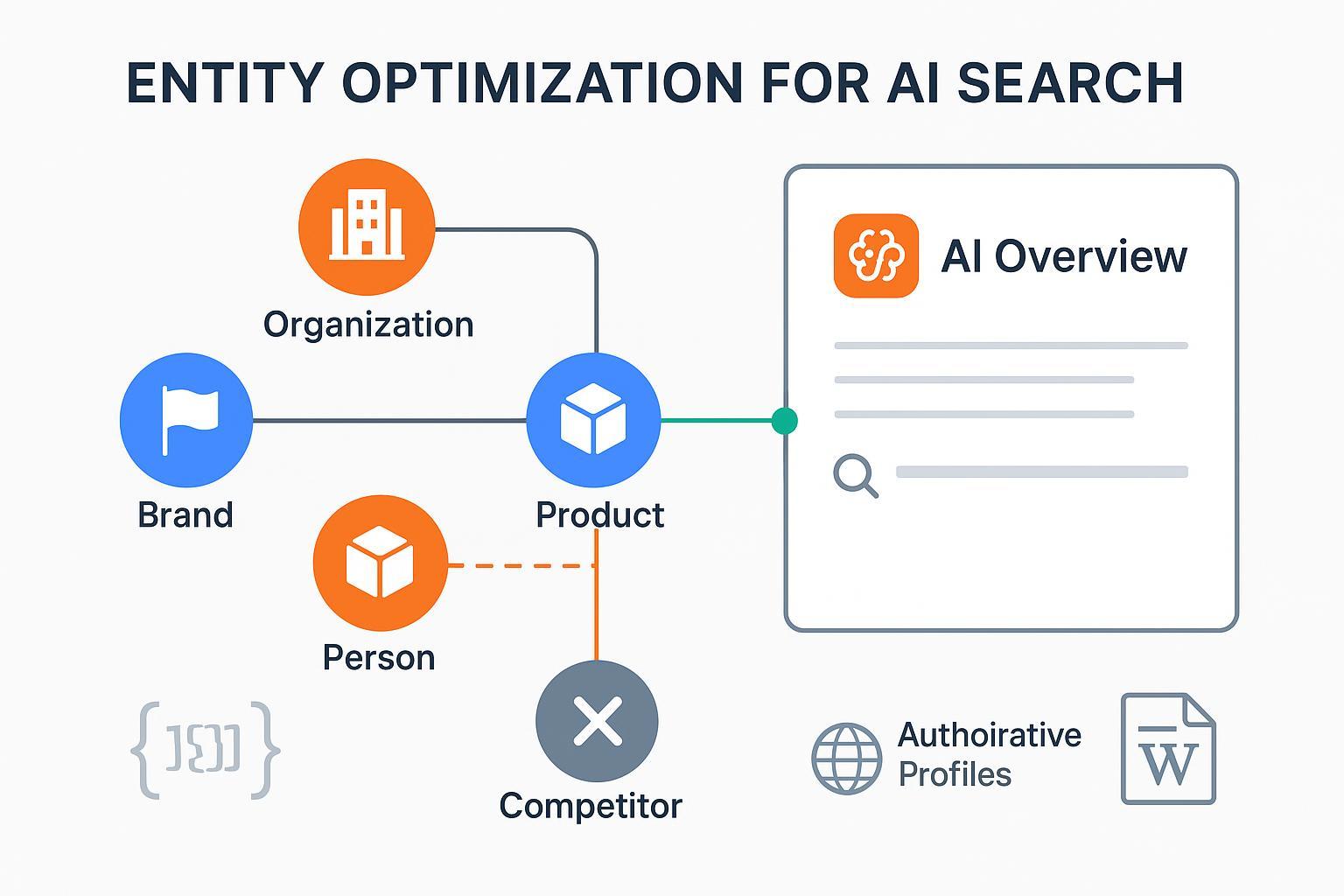

Google’s AI Overviews can cite the wrong source on branded or how-to queries. The anchor scenario we’ll solve: your competitor is cited instead of your client. The fastest path to remediation is tightening your entity model—on-site and off-site—so Google has unambiguous signals about “who” and “what” the content represents.

According to recent industry analyses, AI Overviews often pull citations from top organic results, but ambiguity and volatility can lead to errors. See the practical context in Reusser’s overview of how AI Overviews reshapes SEO (2025).

Step 1: Build your entity inventory

Before touching code, catalog every entity your site presents. Use a simple CSV or Airtable with fields for type, names and aliases, a short disambiguating description, the canonical page, a stable @id anchor, authoritative sameAs links, identifiers for products, and key relationships (for example, Product.manufacturer referencing the Organization @id). Keep one @id per entity across the entire site, keep labels consistent across on-site and off-site profiles, and avoid pages that try to represent multiple primary entities. If two entities share a name (company vs. founder), include a disambiguating description and assign separate @id and sameAs sets.
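The two rules above that are easiest to break (one @id per entity, and a disambiguating description whenever names collide) can be checked mechanically. The following is a minimal sketch, assuming the inventory is exported as CSV; the column names (`entity_id`, `type`, `name`, `description`, `canonical_url`, `same_as`) and the sample rows, including the name "Morgan Hale", are hypothetical and should be adapted to your own sheet.

```python
import csv
import io
from collections import defaultdict

# Hypothetical inventory export; column names and rows are illustrative only.
INVENTORY_CSV = """entity_id,type,name,description,canonical_url,same_as
https://example.com/#org,Organization,Morgan Hale,Analytics software company,https://example.com/,https://www.linkedin.com/company/example/
https://example.com/about/#founder,Person,Morgan Hale,,https://example.com/about/,
https://example.com/#org,Brand,Hale Widgets,Widget product line,https://example.com/widgets/,
"""


def audit_inventory(csv_text):
    """Return a list of human-readable problems found in the inventory."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    problems = []

    # Rule 1: one @id per entity, so an @id must not appear on two rows.
    names_by_id = defaultdict(list)
    for row in rows:
        names_by_id[row["entity_id"]].append(row["name"])
    for entity_id, names in names_by_id.items():
        if len(names) > 1:
            problems.append(f"duplicate @id {entity_id}: {names}")

    # Rule 2: entities that share a name each need a disambiguating description.
    rows_by_name = defaultdict(list)
    for row in rows:
        rows_by_name[row["name"].strip().lower()].append(row)
    for group in rows_by_name.values():
        if len(group) > 1:
            for row in group:
                if not row["description"].strip():
                    problems.append(
                        f"'{row['name']}' ({row['type']}) shares a name "
                        "but has no description"
                    )
    return problems


for problem in audit_inventory(INVENTORY_CSV):
    print(problem)
```

Running this on the sample flags the reused `#org` anchor and the founder row that lacks a description. The same checks work on an Airtable export, since only the column names matter.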

Step 2: Canonical mapping that models “who is who”

Your canonical URLs and internal identifiers are the backbone of disambiguation. Choose a definitive URL for each page and implement rel=canonical on duplicates and variants. Google treats rel=canonical as a hint and may override weak signals, so confirm the Google-selected canonical with URL Inspection in Search Console. See Google’s crawl budget guidance for consolidation principles. Define consistent, site-scoped @id patterns, such as Organization → https://example.com/#org; Brand → https://example.com/#brand; Product → product URL + #id. Connect your internal graph (Organization ⇄ Product/Brand ⇄ Person ⇄ Article) by referencing @id values rather than repeating text labels. For multilingual or multi-regional sites, maintain complete, reciprocal hreflang sets with x-default per Google’s localized versions guidance.
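One way to keep @id patterns consistent is to generate them from a single helper module instead of typing them into each template. The sketch below follows the patterns listed above; the function names (`org_id`, `brand_id`, `product_id`, `ref`) are hypothetical helpers, not part of any library.

```python
import json

SITE = "https://example.com"  # placeholder origin, as used in this article


def org_id(site=SITE):
    """Site-scoped @id for the Organization."""
    return f"{site}/#org"


def brand_id(site=SITE):
    """Site-scoped @id for the Brand."""
    return f"{site}/#brand"


def product_id(product_url):
    """Product @id: the product URL plus '#id'."""
    # Strip any existing fragment so the anchor is applied exactly once.
    return product_url.split("#", 1)[0] + "#id"


def ref(entity_id):
    """Reference another node by @id instead of repeating its text label."""
    return {"@id": entity_id}


product = {
    "@id": product_id("https://example.com/product/widget-123"),
    "@type": "Product",
    "name": "Widget 123",
    "brand": ref(brand_id()),
    "manufacturer": ref(org_id()),
}
print(json.dumps(product, indent=2))
```

Because every template calls the same helpers, a change to the pattern happens in one place and similarly named entities cannot drift onto different anchors.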

Outcome: a clear, machine-readable map that keeps similarly named entities from blurring together.

Step 3: Schema implementation to reduce AI Overviews misattribution

Use schema.org types to describe entities and their relationships. While Google hasn’t published direct guidance that structured data guarantees AI Overview citations, entity-accurate schema reduces ambiguity. Reference: Organization, Product, sameAs, and mainEntityOfPage.

Copy-ready templates (validate with Google’s Rich Results Test and the Schema Markup Validator):

Example A: Organization with @id and sameAs

    {
      "@context": "https://schema.org",
      "@id": "https://example.com/#org",
      "@type": "Organization",
      "name": "Example, Inc.",
      "url": "https://example.com/",
      "logo": "https://example.com/assets/logo.svg",
      "sameAs": [
        "https://www.wikidata.org/wiki/Q123456",
        "https://en.wikipedia.org/wiki/Example",
        "https://www.linkedin.com/company/example/",
        "https://www.crunchbase.com/organization/example"
      ]
    }

Example B: Brand linked from Product with identifiers

    {
      "@context": "https://schema.org",
      "@graph": [
        {
          "@id": "https://example.com/#brand",
          "@type": "Brand",
          "name": "Example Widget"
        },
        {
          "@id": "https://example.com/product/widget-123#id",
          "@type": "Product",
          "name": "Widget 123",
          "sku": "W123",
          "brand": { "@id": "https://example.com/#brand" },
          "manufacturer": { "@id": "https://example.com/#org" },
          "identifier": [
            { "@type": "PropertyValue", "propertyID": "mpn", "value": "MPN-123" }
          ],
          "sameAs": [
            "https://www.wikidata.org/wiki/Q987654"
          ]
        }
      ]
    }

Example C: Article with mainEntityOfPage

    {
      "@context": "https://schema.org",
      "@type": "Article",
      "headline": "How to fix AI Overviews misattribution",
      "mainEntityOfPage": {
        "@type": "WebPage",
        "url": "https://example.com/blog/fix-ai-overviews-misattribution"
      },
      "author": { "@type": "Person", "name": "Jane Doe" }
    }

Example D: FAQPage minimal pattern

    {
      "@context": "https://schema.org",
      "@type": "FAQPage",
      "mainEntityOfPage": "https://example.com/faq/ai-overviews",
      "mainEntity": [
        {
          "@type": "Question",
          "name": "Why does Google cite competitors in AI Overviews?",
          "acceptedAnswer": { "@type": "Answer", "text": "Entity ambiguity and ranking signals." }
        }
      ]
    }

Implementation notes: use @id anchors to avoid duplicating entities and to reference relationships; populate sameAs with authoritative profiles to disambiguate similarly named entities; ensure structured data matches visible content and passes validation. See industry summaries like ALM Corp’s 2026 schema guide for current context.
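Before shipping markup, it can help to confirm that @id anchors are neither duplicated nor pointing at nodes the page never defines. The following is a rough sketch that walks a parsed JSON-LD document; it assumes a dict containing only `@id` is a reference and anything richer is a definition. The function names are hypothetical, and it does not replace the validators mentioned above.

```python
def collect_ids_and_refs(node, defined, referenced):
    """Walk parsed JSON-LD, separating node definitions from bare references."""
    if isinstance(node, dict):
        node_id = node.get("@id")
        if node_id is not None:
            # Assumption: a dict holding only "@id" is a reference.
            if set(node) == {"@id"}:
                referenced.add(node_id)
            else:
                defined.append(node_id)
        for value in node.values():
            collect_ids_and_refs(value, defined, referenced)
    elif isinstance(node, list):
        for item in node:
            collect_ids_and_refs(item, defined, referenced)


def audit_graph(doc):
    """Report @id values defined more than once or referenced but not defined."""
    defined, referenced = [], set()
    collect_ids_and_refs(doc, defined, referenced)
    duplicates = sorted({i for i in defined if defined.count(i) > 1})
    dangling = sorted(referenced - set(defined))
    return {"duplicates": duplicates, "dangling": dangling}


# Trimmed copy of Example B from this article.
EXAMPLE_B = {
    "@context": "https://schema.org",
    "@graph": [
        {"@id": "https://example.com/#brand", "@type": "Brand", "name": "Example Widget"},
        {
            "@id": "https://example.com/product/widget-123#id",
            "@type": "Product",
            "name": "Widget 123",
            "brand": {"@id": "https://example.com/#brand"},
            "manufacturer": {"@id": "https://example.com/#org"},
        },
    ],
}

print(audit_graph(EXAMPLE_B))
```

On Example B this reports `https://example.com/#org` as dangling, because the Organization node lives in Example A. That is a prompt to check whether the Organization block is also emitted on the product page, not necessarily an error.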

Practical workflow: validate and catch misattributions

Disclosure: Geneo is our product. For agencies running multi-client portfolios, Geneo can be used to validate entity accuracy and detect misattributions across engines, including Google AI Overviews. In practice, you’d establish a baseline list of branded/how-to queries, monitor which sources AIO cites for each query and flag cases where a competitor is cited, then compare against your entity inventory to identify gaps (missing sameAs, weak @id, off-site inconsistencies). For hands-on schemas in AI search, see our schema integration notes for AI search engines.
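Whatever tool records the cited sources, the flagging step itself is simple to express. The sketch below assumes the cited URLs for each query have already been collected (by hand or by a monitoring tool) and that a result counts as misattributed when a competitor is cited and the client is not. That rule, the domain names, and the function names are illustrative assumptions.

```python
from urllib.parse import urlparse


def cites_any(cited_urls, domains):
    """True if any cited URL sits on one of the domains or their subdomains."""
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in domains):
            return True
    return False


def classify_citations(cited_urls, client_domains, competitor_domains):
    """Label one observation as 'correct', 'misattributed', or 'uncited'."""
    if cites_any(cited_urls, client_domains):
        return "correct"
    if cites_any(cited_urls, competitor_domains):
        return "misattributed"
    return "uncited"


# Hypothetical observation for one branded query.
label = classify_citations(
    ["https://www.rival.example/guide", "https://en.wikipedia.org/wiki/Example"],
    client_domains=["client.example"],
    competitor_domains=["rival.example"],
)
print(label)
```

Each "misattributed" label is then a cue to look up the affected entity in the inventory and check for the gaps listed above.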

Step 4: Off-site corroboration that closes the loop

Strengthen identity by aligning with authoritative external references. In Wikidata, create or update the item with precise labels, descriptions, aliases, and key properties such as P856 (official website) and P159 (headquarters location); each property is documented on its Wikidata property page. If notability is met, use Wikipedia carefully and follow its conflict-of-interest policy and corporate notability guidelines; propose edits on talk pages when you have a COI. Normalize names and URLs on LinkedIn, Crunchbase, G2, Capterra, and Trustpilot; cross-link to these profiles from your site and include them in sameAs.
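A quick way to spot off-site label drift is to compare the name shown on each profile with the canonical name from the inventory. The sketch below assumes the profile names have been copied by hand into a dict; the normalization (case-folding and ignoring punctuation) is a deliberately lenient assumption, and you may prefer an exact match.

```python
import re
import unicodedata


def normalize_label(label):
    """Case-fold, drop punctuation, and collapse whitespace for comparison."""
    text = unicodedata.normalize("NFKC", label).casefold()
    text = re.sub(r"[^\w\s]", " ", text)  # replace punctuation with spaces
    return " ".join(text.split())


def inconsistent_profiles(canonical_name, profiles):
    """Return the profile sites whose displayed name differs from the canonical one."""
    expected = normalize_label(canonical_name)
    return sorted(
        site for site, name in profiles.items()
        if normalize_label(name) != expected
    )


# Hypothetical names as displayed on each external profile.
profiles = {
    "LinkedIn": "Example Inc",
    "Crunchbase": "Example, Inc.",
    "G2": "Example Software",
}
print(inconsistent_profiles("Example, Inc.", profiles))
```

Here only the G2 listing is flagged, since "Example Inc" and "Example, Inc." normalize to the same label.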

Outcome: when Google re-evaluates entities, it finds consistent labels and authoritative confirmations that reduce ambiguity.

Step 5: Monitoring and KPI — measure the % reduction in misattributions

Anchor KPI: % reduction in misattributions within Google AI Overviews. Start with baseline sampling: choose N branded/how-to queries where your client should be cited; for each query, record whether AIO cites your client or a competitor. Misattribution rate = misattributed_queries / N. After remediation, re-run the same set (or a matched set) and recompute. KPI = ((baseline_rate − post_fix_rate) / baseline_rate) × 100%.
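The formula above translates directly into a few lines of code. The sketch below uses made-up sample counts (12 of 40 queries misattributed at baseline, 6 of 40 after remediation) purely for illustration; the function names are hypothetical.

```python
def misattribution_rate(results):
    """results: one boolean per sampled query, True when misattributed."""
    if not results:
        raise ValueError("need at least one sampled query")
    return sum(results) / len(results)


def percent_reduction(baseline_rate, post_fix_rate):
    """KPI = ((baseline_rate - post_fix_rate) / baseline_rate) * 100."""
    if baseline_rate == 0:
        raise ValueError("baseline rate is zero, so the reduction is undefined")
    return (baseline_rate - post_fix_rate) / baseline_rate * 100


# Illustrative numbers only: N = 40 queries in both runs.
baseline = [True] * 12 + [False] * 28   # 12 misattributed at baseline
post_fix = [True] * 6 + [False] * 34    # 6 misattributed after remediation

kpi = percent_reduction(misattribution_rate(baseline), misattribution_rate(post_fix))
print(f"Misattribution reduction: {kpi:.1f}%")
```

With these counts the rate falls from 0.30 to 0.15, a 50% reduction. A negative result means misattributions increased, and a zero baseline leaves the KPI undefined, which is why the helper raises instead of returning a number.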

Logging tips: run tests at multiple times/days due to AIO variability; store timestamps, cited sources, and entity labels; classify each result as correct or misattributed and note suspected causes (missing identifiers, off-site mismatch). Use a simple sheet or database; if you need a broader KPI framework, see AI search KPI frameworks for visibility, sentiment, and conversion.
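Because the same query can return different citations on different runs, it helps to log each run separately and settle on one label per query afterwards. The following is a minimal sketch of such a log, assuming a majority vote across runs with ties counted as misattributed; both the voting rule and the field names are assumptions rather than a prescribed method.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Observation:
    """One logged run of one query."""
    query: str
    label: str                 # "correct" or "misattributed"
    cited_sources: list
    suspected_cause: str = ""  # e.g. "missing sameAs", "off-site mismatch"
    observed_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def majority_label(observations):
    """Collapse repeated runs into one label per query."""
    counts_by_query = defaultdict(Counter)
    for obs in observations:
        counts_by_query[obs.query][obs.label] += 1
    labels = {}
    for query, counts in counts_by_query.items():
        # Ties resolve to "misattributed" so uncertain queries stay under review.
        if counts["misattributed"] >= counts["correct"]:
            labels[query] = "misattributed"
        else:
            labels[query] = "correct"
    return labels


# Hypothetical log entries for two queries.
log = [
    Observation("example widget setup", "correct", ["https://example.com/docs"]),
    Observation("example widget setup", "misattributed", ["https://rival.example/guide"], "missing sameAs"),
    Observation("example widget setup", "correct", ["https://example.com/docs"]),
    Observation("example inc pricing", "misattributed", ["https://rival.example/pricing"]),
]
print(majority_label(log))
```

The per-query labels produced here are the inputs to the misattribution rate, so the KPI reflects repeated sampling instead of a single run.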

Troubleshooting and edge cases

Conflicting @id or missing sameAs: unify @id patterns and audit sameAs entries for authoritative consistency.

Product ambiguity: add identifiers (sku/mpn/gtin) and brand/manufacturer references.

Author vs. company confusion: create distinct Person and Organization entities, model bylines and employer relationships, and avoid mixing credits.

Canonical overrides by Google: strengthen rel=canonical, ensure unique content, and verify via URL Inspection.

Localization pitfalls: maintain complete, reciprocal hreflang sets, validate the rendered HTML, and avoid broken alternates.

Validation errors: run the Schema Markup Validator and Google’s Rich Results Test before shipping changes.
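For the localization pitfall, reciprocity can be checked once the hreflang annotations have been extracted from each rendered page. The sketch below assumes that extraction has already happened and that the result is a dict of `{page_url: {hreflang_code: alternate_url}}`. The function name and data shape are hypothetical, and x-default entries are conservatively not counted as return links.

```python
def hreflang_problems(hreflang_map):
    """Find missing x-default entries and alternates that do not link back."""
    problems = []
    for page, alternates in hreflang_map.items():
        if "x-default" not in alternates:
            problems.append((page, None, "missing x-default"))
        for code, target in alternates.items():
            if target == page:
                continue  # self-reference, nothing to reciprocate
            back = hreflang_map.get(target)
            if back is None:
                problems.append((page, target, "alternate not in crawl set"))
                continue
            # Conservative choice: ignore x-default when looking for a return link.
            return_links = [url for c, url in back.items() if c != "x-default"]
            if page not in return_links:
                problems.append((page, target, "no return link"))
    return problems


EN = "https://example.com/en/"
DE = "https://example.com/de/"

# Hypothetical extraction: the German page omits both the English alternate
# and the x-default entry.
site = {
    EN: {"en": EN, "de": DE, "x-default": EN},
    DE: {"de": DE},
}
for problem in hreflang_problems(site):
    print(problem)
```

In this sample the English page lists the German one, but the German page does not link back and has no x-default, so both issues are reported.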

Next steps

Implement the inventory → canonical mapping → schema → corroboration workflow on one priority client, then scale. Set a 4–6 week cadence to measure the KPI and iterate. If you manage multiple clients and need structured monitoring/reporting, review the GEO optimization workflow for AI citations and Geneo docs for integration options.