2025 Best Practices: Turn Content Into a Profit Center With AI Automation

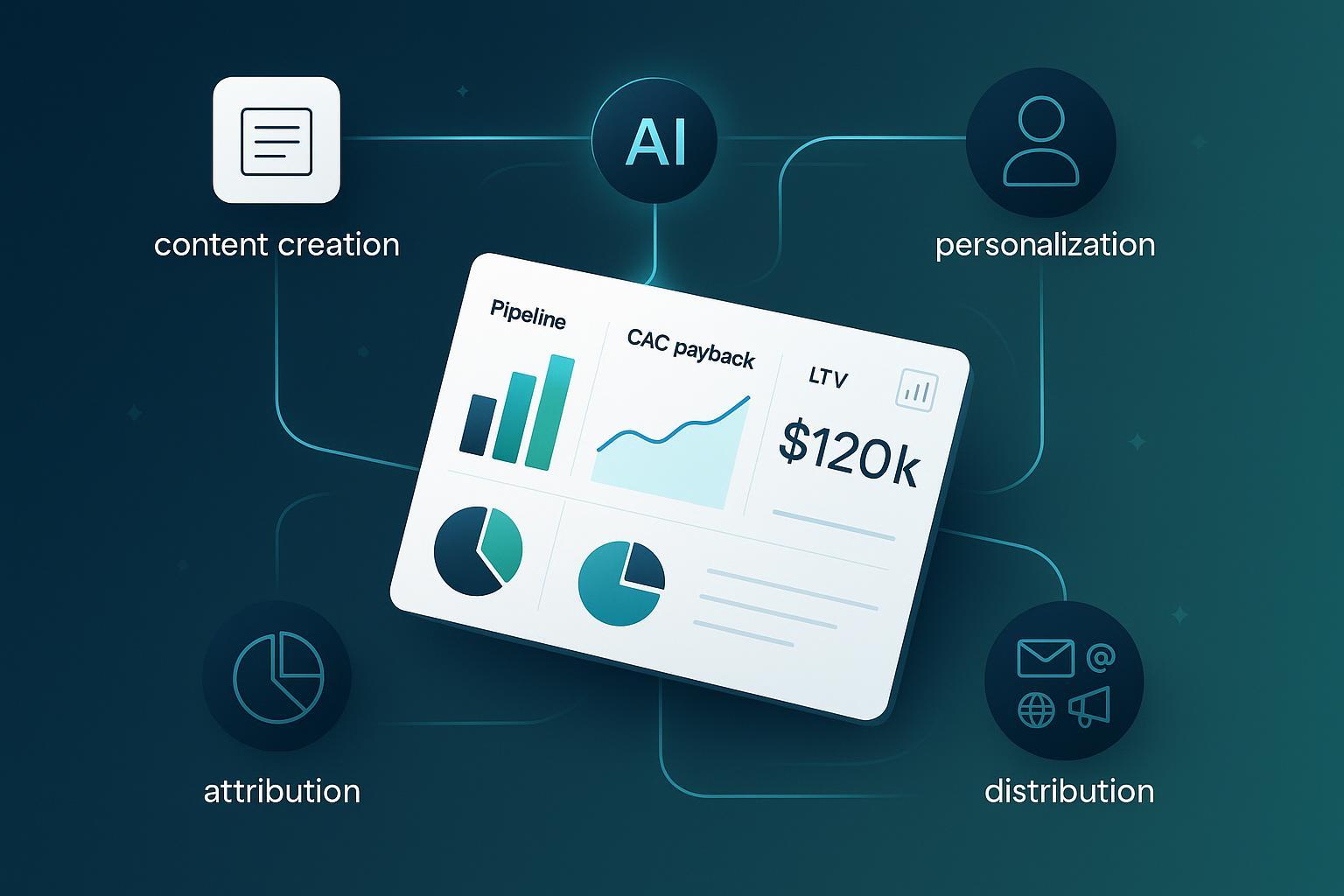

Discover actionable 2025 strategies to transform content from cost center to profit driver using AI-powered marketing automation, with real workflow examples and key profit metrics.

Content has long been treated like a necessary expense: publish regularly, chase rankings, and hope sales notice. In 2025, teams that operationalize AI-powered marketing automation are breaking that cycle and turning content into a measurable profit engine. Adoption is no longer fringe: according to the McKinsey 2025 State of AI global survey, 72% of organizations report using generative AI in at least one function, with marketing and sales among the top use cases. Revenue impact is increasingly self-reported, too. For example, Salesforce’s 2024 SMB AI trends report notes that 91% of SMBs using AI say it boosts their revenue.

What follows is a practitioner playbook: precise steps, guardrails, metrics, and workflows I’ve used to redesign content ops from a cost line into a profit lever. No silver bullets, just reproducible processes that withstand scrutiny.

The Profit-Mapping Framework: 7 Steps to Operationalize Profitable Content

This framework is tool-agnostic; adapt it to your stack (CRM/MAP/CDP/analytics). Each step includes actions and quality gates.

1) Data Readiness and Objective Alignment

Actions:

- Unify your customer data (CRM, MAP, CDP, web/app analytics, commerce) into a consistent identity layer. Define your single source of truth.

- Translate business outcomes into measurable content objectives: pipeline influence, paid signups, LTV uplift, CAC payback.

- Establish segmentation primitives (accounts, roles, intents) and prioritize via predictive scoring.
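A consistent identity layer starts with deciding which key links records across systems. The sketch below is a minimal, deterministic merge keyed on normalized email, assuming both exports carry an email field. The record shapes and field names are hypothetical, and real identity resolution usually adds more keys (account IDs, device IDs) and probabilistic matching.

```python
from collections import defaultdict


def normalize_email(email: str) -> str:
    # Lowercase and trim so "Ana@Example.com " and "ana@example.com" share one key.
    return email.strip().lower()


def unify(*sources: list[dict]) -> dict[str, dict]:
    """Merge records into one profile per normalized email.

    Sources are applied in order, and later sources only fill fields that are
    still missing, so the first source acts as the source of truth on conflicts.
    """
    profiles: dict[str, dict] = defaultdict(dict)
    for source in sources:
        for record in source:
            key = normalize_email(record["email"])
            profile = profiles[key]
            for field_name, value in record.items():
                if field_name != "email":
                    profile.setdefault(field_name, value)
            profile["email"] = key
    return dict(profiles)


# Hypothetical CRM and MAP exports.
crm = [{"email": "Ana@Example.com", "account": "Acme", "role": "VP Marketing"}]
map_export = [
    {"email": "ana@example.com ", "role": "Marketer", "last_asset": "pricing-guide"},
    {"email": "raj@example.com", "last_asset": "roi-whitepaper"},
]

profiles = unify(crm, map_export)
print(profiles["ana@example.com"])
```

Passing the CRM first encodes a "CRM wins on conflicts" rule, which is one way to make the single source of truth explicit in code rather than in a slide.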

Quality gates:

- Revenue-linked KPIs exist for each content program (e.g., “whitepaper → MQL to SQL conversion,” “product page variant → assisted revenue”).

- Cross-functional agreement on data lineage and reporting cadence.
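CAC payback is one of the revenue-linked objectives named above. The following is a minimal sketch using a common convention (payback measured in months of gross profit per customer). The numbers are illustrative only, and which customers count as content-sourced depends on the attribution approach chosen in Step 6.

```python
def cac_payback_months(program_cost, new_customers, monthly_revenue_per_customer, gross_margin):
    """Months of gross profit needed to recover the cost of acquiring one customer.

    CAC     = program_cost / new_customers
    payback = CAC / (monthly revenue per customer * gross margin)
    """
    if new_customers <= 0:
        raise ValueError("need at least one attributed customer")
    cac = program_cost / new_customers
    monthly_gross_profit = monthly_revenue_per_customer * gross_margin
    return cac / monthly_gross_profit


# Illustrative numbers: a $60,000 content program credited with 20 new
# customers paying $500/month at an 80% gross margin.
print(cac_payback_months(60_000, 20, 500, 0.80))  # 7.5
```

Some teams divide by monthly revenue instead of gross profit, which shortens the reported payback; whichever convention you pick, document it with the KPI.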

Reference mindset: use model-based measurement. Think with Google’s 2025 guidance on ROI and AI-powered measurement emphasizes data-driven attribution (DDA) for digital optimization, complemented by marketing mix modeling (MMM) for cross-channel planning.

2) AI-Assisted Production System (with Brand Guardrails)

Actions:

- Build an AI content system for briefs, first drafts, variants, and multimedia. Standardize prompts and templates.

- Create a brand safety hub: style/tone library, terminology, compliance notes, citation policy, and editorial checklist.

- Implement continuous A/B and multivariate testing at the component level (headlines, CTAs, hero copy, schema).
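Component-level testing only works if each visitor sees the same variant on every visit. A common technique is to hash a stable visitor ID together with the experiment name; the sketch below assumes such an ID exists and uses hypothetical experiment and variant names. It is not any particular testing tool’s assignment logic.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one variant of one experiment.

    Salting the hash with the experiment name keeps assignments independent
    across experiments (e.g., a headline test vs. a CTA test on the same page).
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


headline_variants = ["control", "benefit-led", "question-led"]
for visitor in ["v-001", "v-002", "v-003"]:
    print(visitor, assign_variant(visitor, "pricing-page-headline", headline_variants))
```

Because assignment is a pure function of the inputs, no lookup table is needed, and the same logic can run server-side, in email rendering, or in analytics joins.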

Quality gates:

- Every AI-generated output passes human editorial review before publication.

- Versioning and provenance tracking are in place (who approved what, when).
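Provenance is easiest to enforce when approval is bound to the exact text that was reviewed. Here is a minimal sketch, assuming a hypothetical revision schema: the content hash ties a human approval to one version, so any edit after approval blocks publication until it is re-reviewed.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class Revision:
    """One version of an asset plus who approved it (hypothetical schema)."""

    asset_id: str
    body: str
    generated_by: str  # e.g., "ai-draft" or "human"; labels are illustrative
    approved_by: Optional[str] = None
    approved_at: Optional[datetime] = None
    content_hash: str = field(init=False)

    def __post_init__(self) -> None:
        # Hash the exact text so an approval applies to this version only.
        self.content_hash = sha256(self.body)

    def approve(self, editor: str) -> None:
        # Record who approved and when (UTC) for the audit trail.
        self.approved_by = editor
        self.approved_at = datetime.now(timezone.utc)


def can_publish(rev: Revision, body_to_publish: str) -> bool:
    """Gate: a human approved this revision and the text is unchanged since."""
    return rev.approved_by is not None and sha256(body_to_publish) == rev.content_hash


rev = Revision("lp-fintech-v3", "Close your books in half the time.", generated_by="ai-draft")
print(can_publish(rev, rev.body))                     # False: not yet reviewed
rev.approve("editor@example.com")
print(can_publish(rev, rev.body))                     # True
print(can_publish(rev, rev.body + " Now with AI!"))  # False: edited after approval
```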

For teams new to AIGC, align definitions and governance with a foundational primer such as “What is AI-Generated Content (AIGC)?”, and clarify when humans vs. AI should lead. For decision points in complex content, see also “AI vs. Human Writers: Pros and Cons.”

3) Real-Time Personalization and Journey Design

Actions:

- Maintain living profiles in your CDP: behavior, content consumption, intent signals.

- Use AI lead/account scoring to trigger dynamic content across email, web, in-product, and ads.

- Design “micro-journeys” aligned to revenue milestones (e.g., “research → trial → adoption”).
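To make “scoring triggers dynamic content” concrete, here is a minimal sketch that combines a logistic score with a micro-journey stage. The weights, bias, threshold, signal names, and content IDs are all hypothetical. A production “AI score” would come from a model trained on your own won/lost history and could use a different model family entirely.

```python
import math

# Hypothetical weights; in practice learned from historical outcomes, not hand-tuned.
WEIGHTS = {"pricing_page_views": 0.8, "case_study_reads": 0.5, "trial_active": 1.5}
BIAS = -3.0

# Next content to surface at each micro-journey stage (illustrative IDs).
NEXT_CONTENT = {
    "research": "industry-case-study",
    "trial": "onboarding-checklist",
    "adoption": "expansion-roi-calculator",
}


def score(signals: dict[str, float]) -> float:
    """Logistic score in (0, 1) from weighted behavioral signals."""
    z = BIAS + sum(w * signals.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def stage(signals: dict[str, float], paying: bool) -> str:
    if paying:
        return "adoption"
    return "trial" if signals.get("trial_active", 0) else "research"


def next_action(signals: dict[str, float], paying: bool = False, threshold: float = 0.5):
    """Return (stage, score, content); content is None below the threshold."""
    s = score(signals)
    current = stage(signals, paying)
    content = NEXT_CONTENT[current] if s >= threshold else None
    return current, round(s, 3), content


print(next_action({"pricing_page_views": 3, "case_study_reads": 2}))
print(next_action({"trial_active": 1, "pricing_page_views": 2}))
```

Keeping the stage logic separate from the score makes it easy to test each micro-journey against its own revenue milestone.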

Quality gates:

- Personalization logic ties directly to measurable outcomes (e.g., a specific variant increases demo requests by X%).

- Suppression rules prevent over-contact and respect privacy/consent choices.
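Suppression rules are easier to audit when they live in one function every channel must call before sending. The following is a minimal sketch, assuming a hypothetical profile with a set of consented channels and a list of past send timestamps. The cap of 3 touches per rolling 7 days is illustrative, not a recommendation.

```python
from datetime import datetime, timedelta


def may_contact(contact, channel, now, max_touches=3, window=timedelta(days=7)):
    """True only if the contact opted in to `channel` and is under the frequency cap.

    The cap counts sends across all channels inside the rolling window.
    """
    if channel not in contact.get("consented_channels", set()):
        return False  # no consent for this channel: always suppress
    recent = [t for t in contact.get("sent_at", []) if now - t < window]
    return len(recent) < max_touches


now = datetime(2025, 3, 10, 12, 0)
contact = {
    "consented_channels": {"email"},
    "sent_at": [now - timedelta(days=1), now - timedelta(days=3), now - timedelta(days=9)],
}
print(may_contact(contact, "email", now))  # True: 2 sends in the last 7 days
print(may_contact(contact, "sms", now))    # False: no SMS consent
```

Checking consent before the frequency cap means an opt-out always wins, regardless of how quiet the contact has been.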

Guidance: Practitioners increasingly rely on practical approaches like Encharge’s 2025 marketing automation practices and Braze’s AI marketing automation insights to operationalize behavior-driven triggers.

4) Multichannel Orchestration and Sales Handoff

Actions:

- Automate channel selection and timing based on engagement propensity (email/SMS/push/paid/social).

- Sync sales and success handoffs: content signals → CRM tasks → sequences → playbooks.

- Deploy self-optimizing flows that reallocate budget/time toward higher-ROI segments.
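One common way to build a “self-optimizing” allocation is a multi-armed bandit. The sketch below swaps in Thompson sampling with Beta posteriors, which is one technique among several rather than how any specific platform works. The channel conversion rates are invented purely to simulate traffic shifting toward the better-performing channel.

```python
import random


class ChannelBandit:
    """Thompson sampling over channels with Beta(successes + 1, failures + 1) posteriors."""

    def __init__(self, channels, seed=None):
        self.stats = {c: [1, 1] for c in channels}  # [alpha, beta]: uniform prior
        self.rng = random.Random(seed)

    def choose(self) -> str:
        # Draw a plausible conversion rate per channel and send via the highest draw.
        draws = {c: self.rng.betavariate(a, b) for c, (a, b) in self.stats.items()}
        return max(draws, key=draws.get)

    def update(self, channel: str, converted: bool) -> None:
        if converted:
            self.stats[channel][0] += 1
        else:
            self.stats[channel][1] += 1


# Simulation with made-up true rates, only to show allocation shifting.
true_rates = {"email": 0.04, "sms": 0.02, "push": 0.01}
bandit = ChannelBandit(true_rates, seed=7)
env = random.Random(42)
picks = {c: 0 for c in true_rates}
for _ in range(5000):
    c = bandit.choose()
    picks[c] += 1
    bandit.update(c, env.random() < true_rates[c])
print(picks)
```

Unlike a fixed A/B split, the bandit keeps exploring weaker channels a little while sending most volume to the current leader, which suits always-on flows.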

Quality gates:

- Service-level agreements (SLAs) exist for content-driven handoffs (e.g., demo form → sales contact < 30 minutes).

- Orchestration rules documented in runbooks; exceptions monitored.
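An SLA is only useful if breaches are surfaced automatically. Here is a minimal monitoring sketch for the demo-form example, assuming hypothetical handoff records exported from the CRM. Leads still waiting past the deadline count as breaches, so they can be escalated before anyone reaches out.

```python
from datetime import datetime, timedelta

SLA = timedelta(minutes=30)  # e.g., demo form -> first sales contact within 30 minutes


def sla_breaches(handoffs, now):
    """Return lead IDs whose first sales contact missed the SLA.

    Each handoff is a hypothetical dict: lead_id, form_submitted_at,
    first_contact_at (None if sales has not reached out yet).
    """
    breached = []
    for h in handoffs:
        # Uncontacted leads are measured against the current time.
        reference = h["first_contact_at"] or now
        if reference - h["form_submitted_at"] > SLA:
            breached.append(h["lead_id"])
    return breached


now = datetime(2025, 3, 10, 15, 0)
handoffs = [
    {"lead_id": "lead-1", "form_submitted_at": now - timedelta(minutes=90),
     "first_contact_at": now - timedelta(minutes=80)},  # contacted in 10 min
    {"lead_id": "lead-2", "form_submitted_at": now - timedelta(minutes=90),
     "first_contact_at": now - timedelta(minutes=30)},  # contacted in 60 min
    {"lead_id": "lead-3", "form_submitted_at": now - timedelta(minutes=45),
     "first_contact_at": None},                          # waiting 45 min
    {"lead_id": "lead-4", "form_submitted_at": now - timedelta(minutes=10),
     "first_contact_at": None},                          # still within SLA
]
print(sla_breaches(handoffs, now))  # ['lead-2', 'lead-3']
```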

5) Conversion Acceleration (Reduce Friction, Recover Intent)

Actions:

- Use AI chat/assistants to clarify offers, qualify leads, and route conversations.

- Implement dynamic offers/pricing where appropriate; trigger abandonment rescue and reactivation sequences.

Quality gates:

- Measure drop-off reduction (e.g., fewer exits on key pages) and incremental conversions via causal tests.

Context: Case evidence continues to accumulate. Adobe’s marketing team reports an 80% increase in return on media spend and 75% growth in media’s share of digital subscriptions over five years, driven by unified data and AI decisioning per the Adobe Mix Modeler case study (2024).

6) Closed-Loop Measurement: DDA, MMM, and Incrementality

Actions:

- Use DDA (e.g., GA4/Ads) to allocate credit across digital touchpoints and guide channel/campaign optimization.

- Run MMM quarterly to capture macro effects across online/offline, seasonality, and external factors.

- Design incrementality tests (geo experiments, holdouts) to validate causal impact; pair with qualitative self-reported attribution.

- Tie content assets to CRM opportunities/subscriptions; report assisted revenue and CAC payback.
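GA4’s data-driven attribution is Google’s own model, and its internals are not reproduced here. To illustrate what “allocating credit across touchpoints” means, this is a minimal sketch of Shapley-value attribution, a common textbook approach swapped in for illustration. It assumes you have converting paths summarized by the set of channels they touched. It ignores non-converting paths and ordering, and exact enumeration only scales to a handful of channels.

```python
from itertools import combinations
from math import factorial


def shapley_attribution(path_conversions: dict[frozenset, float]) -> dict[str, float]:
    """Shapley-value credit per channel.

    `path_conversions` maps the set of channels on a converting path to the
    number of conversions with exactly that set. The coalition value v(S) is
    the conversions from paths that used only channels in S.
    """
    channels = sorted(set().union(*path_conversions))
    n = len(channels)

    def v(coalition: frozenset) -> float:
        return sum(conv for path, conv in path_conversions.items() if path <= coalition)

    credit = {}
    for ch in channels:
        others = [c for c in channels if c != ch]
        total = 0.0
        for k in range(len(others) + 1):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (v(s | {ch}) - v(s))  # marginal contribution of ch
        credit[ch] = total
    return credit


# Hypothetical path summary: 100 conversions across three channels.
paths = {
    frozenset({"search"}): 40,
    frozenset({"email"}): 20,
    frozenset({"search", "email"}): 30,
    frozenset({"social", "email"}): 10,
}
print(shapley_attribution(paths))
```

The credits always sum to total conversions, which makes the output easy to reconcile with CRM totals. Sparse paths, however, produce unstable credits, which mirrors the data-sparsity pitfall for DDA.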

Quality gates:

- Last-click reports are deprecated for strategy decisions.

- Models are refreshed as behavior shifts, and their assumptions are documented transparently.

Useful references include Think with Google’s overview of MMM for strategic KPIs (2025 primer) alongside the ROI measurement guidance cited earlier.
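MMM is a regression on transformed spend, and two transforms do much of the work: adstock (carryover of past spend) and saturation (diminishing returns). The following is a minimal sketch of both building blocks, assuming geometric adstock and a Hill curve. It is not a full MMM, and the decay and half-saturation values are illustrative; in a real model they are estimated from data.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Carry a share `decay` of last period's adstocked value into this period."""
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    out, carry = [], 0.0
    for x in spend:
        carry = x + decay * carry
        out.append(carry)
    return out


def hill_saturation(x: float, half_saturation: float, shape: float = 1.0) -> float:
    """Diminishing returns: 0 at x = 0, 0.5 at x = half_saturation, approaching 1."""
    return x**shape / (x**shape + half_saturation**shape)


weekly_spend = [0, 10_000, 0, 0, 5_000]           # illustrative weekly spend
adstocked = geometric_adstock(weekly_spend, 0.6)   # lagged effect of each burst
response = [hill_saturation(x, 8_000) for x in adstocked]
print([round(x) for x in adstocked])
print([round(r, 3) for r in response])
```

The adstock step is what lets MMM see lagged effects that click-based DDA misses.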

7) Governance and Risk Management (Human-in-the-Loop)

Actions:

- Adopt the NIST AI Risk Management Framework (Govern–Map–Measure–Manage) and apply its Generative AI Profile (NIST AI 600-1) to content systems; see the NIST AI RMF official page.

- Track regulatory obligations. The EU AI Act entered into force on Aug 1, 2024, and its provisions phase in over time; unacceptable-risk systems have been banned since Feb 2, 2025, per the EU Parliament’s AI Act overview.

- Maintain brand safety standards: editorial review, tone/style libraries, provenance, and post-publication monitoring.

Quality gates:

- Documented human review is mandatory before publication.

- Privacy preferences respected across channels; opt-out/consent flows regularly tested.

Metrics and Attribution: Practical 2025 Guidance

Pick the right tool for the decision you’re making:

- Use DDA when optimizing digital campaigns and budget allocation across tracked touchpoints. Ensure sufficient volume; expect improvements in bidding decisions and cross-channel ROI per Google’s 2025 guidance on AI-powered measurement.

- Use MMM for quarterly planning and upper-funnel/brand investments; isolate lagged and offline effects that DDA cannot see.

- Use incrementality tests to answer causal questions (“Did this content/campaign generate additional conversions?”). Combine geo experiments or holdouts with qualitative insights and post-exposure surveys.
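For the holdout case, this is a minimal sketch of the core arithmetic: compare conversion rates between the exposed group and a randomized holdout, with a normal-approximation confidence interval. It assumes user-level randomization and illustrative counts. Geo experiments need different methods (e.g., matched markets or synthetic controls) and are not covered here.

```python
import math


def incremental_lift(treat_conv, treat_n, ctrl_conv, ctrl_n, z=1.96):
    """Lift of an exposed group over a randomized holdout.

    Returns the absolute rate difference with a normal-approximation CI
    (95% for z = 1.96), the relative lift, and the implied incremental
    conversions in the exposed group.
    """
    p_t = treat_conv / treat_n
    p_c = ctrl_conv / ctrl_n
    diff = p_t - p_c
    se = math.sqrt(p_t * (1 - p_t) / treat_n + p_c * (1 - p_c) / ctrl_n)
    return {
        "abs_lift": diff,
        "ci": (diff - z * se, diff + z * se),
        "rel_lift": diff / p_c,
        "incremental_conversions": diff * treat_n,
    }


# Illustrative: 90,000 exposed (1.5% convert) vs. a 10,000-person holdout (1.2%).
result = incremental_lift(1_350, 90_000, 120, 10_000)
print(result)
```

If the interval’s lower bound is above zero, the program likely drove additional conversions. If it straddles zero, the honest answer is “not yet shown,” whatever the attribution report credits.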

Beware common pitfalls:

- Over-reliance on last-click or static rule-based models.

- Data sparsity leading to unstable DDA results.

- MMM opacity without proper documentation and validation.

- Ignoring qualitative context (e.g., customer voice from interviews) when interpreting model outputs.

Set thresholds and refresh cadence:

- Define success floors (e.g., minimum lift of +8–12% in target segment conversions to scale a variant).

- Refresh DDA weekly/biweekly; MMM quarterly; run incrementality tests per major program change.
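A success floor works best when paired with a significance check, so that a large but noisy lift doesn’t get scaled. Here is a minimal decision-rule sketch, assuming a pooled two-proportion z-test, the lower end of the +8–12% floor (0.08), and alpha = 0.05. All three are choices to adapt, not fixed standards.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-sided z-test; returns (z, p_value) for variant B vs. control A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def should_scale(conv_a, n_a, conv_b, n_b, floor=0.08, alpha=0.05):
    """Scale B only if it clears the relative-lift floor AND the test is significant."""
    rel_lift = (conv_b / n_b) / (conv_a / n_a) - 1
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return rel_lift >= floor and p_value < alpha


print(should_scale(400, 10_000, 460, 10_000))  # +15% lift on 10k per arm
print(should_scale(40, 1_000, 46, 1_000))      # same lift, far less data
```

The two example calls show the same relative lift passing with enough traffic and failing without it, which is the point of requiring both conditions.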

Quick Wins vs. Advanced Moves

Quick wins (2–4 weeks):

- Introduce standardized AI briefs and prompt libraries; implement an editorial checklist with brand guardrails.

- Launch lightweight A/B tests on high-traffic pages and email CTAs; promote winning variants.

- Automate remix pipelines (e.g., segment-specific landing pages) and feed results back into CRM.

Advanced moves (4–12 weeks):

- Integrate a CDP to unify identities and trigger real-time personalization.

- Establish a closed-loop measurement stack (DDA + MMM + incrementality) and adopt transparent modeling documentation.

- Build governance: NIST AI RMF alignment, EU AI Act literacy training, and human-in-the-loop approvals.

For workflow acceleration templates and governance checklists, see Best Practices for 30% Faster AI Content Marketing Campaigns (2025).

Practical Workflow Example: Lead Gen to SQL (with AI Automation)

Scenario: You’re driving demo requests for a B2B product.

- Content production: AI-assisted briefs produce three landing page variants tailored to industry segments. Editorial review confirms accuracy and tone.

- Personalization: CDP segments visitors by intent; on-site content adapts (case studies, pricing notes) based on behavior and firmographic signals.

- Orchestration: Email + paid retargeting trigger when intent signals cross a threshold; chat assistant offers a tailored demo path.

- Measurement: GA4 DDA informs budget shifts toward high-ROI audiences; MMM validates brand lift; a geo holdout measures incremental demos.

Micro-example (tool use): Draft and test segment-specific landing pages using QuickCreator, then push winning variants to WordPress in one click. Editorial guardrails and version tracking reduce rework; integrated SERP/topic recommendations guide on-page optimization. Disclosure: QuickCreator is our product.

Named Success Snapshots (for Confidence and Calibration)

- Adobe (internal): 80% increase in return on media spend and 75% growth in media’s share of digital subscriptions after unifying data and employing AI decisioning—see the Adobe Mix Modeler case study (2024).

- Salesforce (internal Data Cloud story): 165% lift in web engagement and speed-to-lead cut from 20 minutes to 20 seconds with unified data and automation, as described in Salesforce’s official Data Cloud personalization story.

- HubSpot (Aerotech): win rate rose from 15% to 25% (a relative increase of about 67%), average deal size grew by $10,000, the sales cycle shortened from 309 to 135 days, and reps saved 18 hours/week thanks to automation, per HubSpot’s Aerotech case study.

Use these as directional confidence checks; validate lift and payback within your own data and models.

Implementation Checklist (Field-Tested)

Do this:

- Define revenue-linked KPIs for each content initiative.

- Unify identities across CRM/MAP/CDP and validate data quality.

- Standardize AI prompts and editorial guardrails; log provenance.

- Run continuous A/B tests; promote winners quickly.

- Implement DDA for digital optimization; run MMM quarterly; schedule incrementality tests for major changes.

- Establish governance: NIST AI RMF alignment, EU AI Act literacy, human review.

Avoid this:

- Treating content metrics (traffic, likes) as success without revenue linkage.

- Publishing AI outputs without human editorial review.

- Relying solely on last-click models or vanity attribution.

- Ignoring consent/opt-out preferences in personalization.

- Over-automating without brand safety and compliance checks.

Next Steps

Start with one pipeline-linked content program and instrument it end to end (DDA + MMM + incrementality). If you need a fast way to generate and test segment-specific pages with built-in guardrails, consider QuickCreator to prototype and measure quickly. Disclosure: QuickCreator is our product.

Sources and Further Reading

- Adoption and momentum: McKinsey 2025 State of AI global survey.

- Revenue impact signals: Salesforce 2024 SMB AI trends.

- Measurement frameworks: Think with Google ROI and AI-powered measurement (2025) and Think with Google MMM overview for strategic KPIs.

- Governance: NIST AI Risk Management Framework and EU Parliament EU AI Act overview.

- Case evidence: Adobe Mix Modeler case study; Salesforce Data Cloud personalization story; HubSpot Aerotech case study.