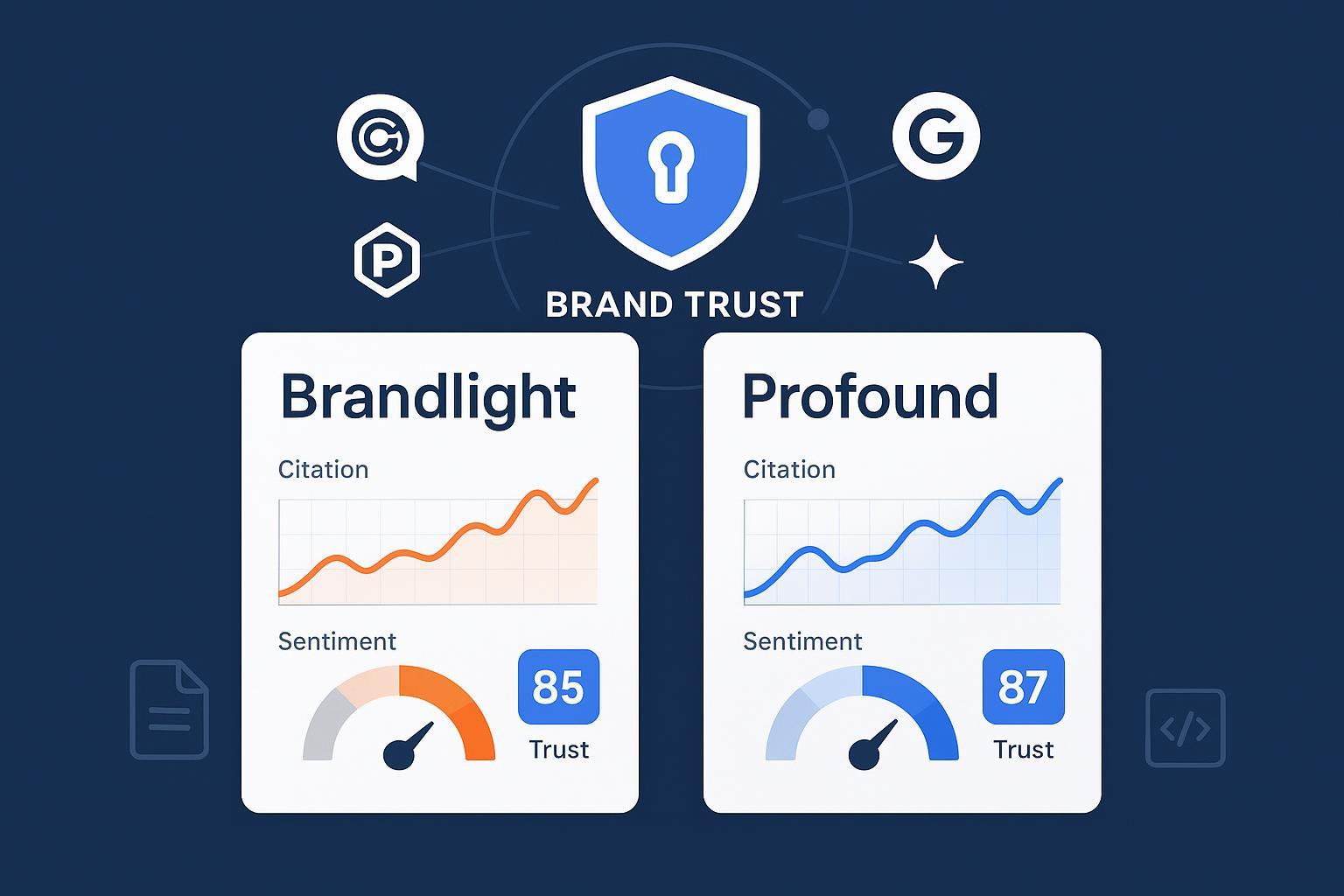

Brandlight vs Profound (2025): AI Brand Trust Signals & Comparison

Brandlight vs Profound in 2025: Compare trust signal accuracy, auditability, and AI answer reporting for agencies. Find the best fit for brand trust in generative search.

If your clients are asking, “How are we showing up in AI answers, and do people trust what they see?”, you’re not alone. In 2025, Brandlight and Profound sit on many shortlists for agencies and brand teams trying to quantify trust in generative search. The question isn’t just who “tracks mentions.” It’s who turns trust signals (citation authority and consistency, sentiment quality, narrative alignment across engines, and auditability) into decisions you can defend in a quarterly business review (QBR).

This comparison focuses on what matters to growth-focused agencies (and the brand leaders who fund them): measurement fidelity, cross-engine coverage, reproducibility, and client-ready reporting. No hype—just what each platform documents, where the blind spots are, and when to choose one approach over the other.

What “brand trust” means inside AI answers

Think of an AI answer like a news segment about your brand. Trust rises when the segment cites authoritative sources, repeats your core story consistently across channels, uses positive (or at least fair) tone, and can be audited later. In generative search, that translates to five practical signal buckets:

Citation authority and consistency: Who gets cited, how often, and whether that pattern holds across engines. Are citations coming from high-authority domains, and do they repeat the same core claims?

Sentiment quality: Net positive vs. negative mentions and the intensity of the language used around your brand.

Narrative coherence: Whether your brand’s key messages and facts (pricing ranges, product names, differentiators) show up the same way across engines.

Cross-engine parity: Visibility and trust signals measured across multiple AI engines to avoid “pseudo trust” created by a single-platform bias.

Auditability and governance: The ability to trace how an answer was captured, who changed what, and how a recommendation was derived—so you can reproduce results and pass legal review.
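To make two of these buckets concrete, here is a minimal Python sketch, assuming you already have captured answers per engine with per-mention sentiment labels from some classifier. The `CapturedAnswer` record, the net-sentiment formula (positive minus negative, divided by all labeled mentions), and the substring check for key facts are illustrative assumptions, not how Brandlight or Profound compute these signals. It also ignores the "intensity of language" part of sentiment quality.

```python
from dataclasses import dataclass, field


@dataclass
class CapturedAnswer:
    """One AI answer captured from one engine (hypothetical record shape)."""
    engine: str
    text: str
    # Per-mention sentiment labels ("positive", "neutral", "negative"),
    # assumed to come from whatever classifier you already use.
    mention_sentiments: list[str] = field(default_factory=list)


def net_sentiment(answers: list[CapturedAnswer]) -> float:
    """(positive - negative) / all labeled mentions, in [-1, 1]; 0.0 if none."""
    labels = [s for a in answers for s in a.mention_sentiments]
    if not labels:
        return 0.0
    return (labels.count("positive") - labels.count("negative")) / len(labels)


def narrative_coherence(answers: list[CapturedAnswer], key_facts: list[str]) -> dict[str, float]:
    """For each key fact, the fraction of engines whose answers mention it.

    Uses naive case-insensitive substring matching; a real pipeline would
    need fuzzier matching (paraphrases, number formats, synonyms).
    """
    engines = {a.engine for a in answers}
    if not engines:
        return {fact: 0.0 for fact in key_facts}
    result = {}
    for fact in key_facts:
        hits = {a.engine for a in answers if fact.lower() in a.text.lower()}
        result[fact] = len(hits) / len(engines)
    return result


# Usage with made-up answers about a fictional brand, "Acme":
answers = [
    CapturedAnswer("chatgpt", "Acme Widgets start at $49 and sync via AcmeSync.", ["positive", "neutral"]),
    CapturedAnswer("perplexity", "Acme Widgets start at $49.", ["positive"]),
    CapturedAnswer("gemini", "Acme sells widgets; pricing varies.", ["negative"]),
]
print(net_sentiment(answers))  # 0.25
print(narrative_coherence(answers, ["$49", "AcmeSync"]))  # {'$49': 0.6666666666666666, 'AcmeSync': 0.3333333333333333}
```

A coherence score below 1.0 for a fact like pricing flags an engine that is telling a different (or no) story about it, which is where narrative work should start.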

Brandlight at a glance (2025)

Brandlight positions itself as a governance-first AI brand visibility and intelligence platform. Its signals hub aggregates AI Presence, Share of Voice, Sentiment, and Narrative Consistency across what the company says are 11 generative engines (e.g., Google AI Overviews, Gemini, ChatGPT, Perplexity). Its help-center articles emphasize role-based access control (RBAC), audit trails, and metadata capture for provenance, which points to an enterprise posture built around reproducibility and legal review. See the 2025 help-center articles “What permissions does Brandlight offer for team setup” and “What metadata Brandlight captures for traceability.”

What it measures and how: A centralized “signals” model (AI presence, share of voice, sentiment, narrative consistency) with governance workflows layered on top. Help-center articles also cover how integrations are monitored over time (“How are Brandlight integrations monitored over time”) and compliance-friendly approval workflows.

Strengths for trust work: Governance-first framing; role/permission controls and auditability; emphasis on consistent narratives across engines; useful for regulated teams that must prove process, not just outcomes.

Constraints to note: As of 2025, Brandlight lacks a public-facing trust/compliance center with third-party certifications; claims are vendor-asserted in support articles. Pricing is not publicly published—expect “contact sales.” Public API/export specifics are sparse on the main site; confirm reporting/BI pathways in a demo.

Who it’s for: Enterprise or compliance-sensitive teams that value traceability and narrative control. Agencies supporting regulated clients may find the governance templates appealing.
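To pin down two of the signals named above, here is a minimal Python sketch of AI Presence and Share of Voice. The definitions are generic assumptions for illustration, not Brandlight's documented formulas: presence is the fraction of captured answers that mention the brand, and share of voice is the brand's mentions divided by mentions of all tracked brands. The input shape (one set of brand names per answer) and the function name are hypothetical.

```python
from collections import Counter


def presence_and_share_of_voice(answers: list[set[str]], brand: str) -> tuple[float, float]:
    """Return (presence, share_of_voice) for `brand`.

    answers: one set of tracked brand names mentioned in each captured AI answer
             (a hypothetical input shape).
    presence: fraction of answers that mention `brand` at all.
    share_of_voice: `brand`'s mentions divided by mentions of all tracked brands,
                    counting each brand at most once per answer.
    """
    if not answers:
        return 0.0, 0.0
    mentions = Counter(name for answer in answers for name in answer)
    presence = sum(brand in answer for answer in answers) / len(answers)
    total = sum(mentions.values())
    share = mentions[brand] / total if total else 0.0
    return presence, share


# Four captured answers for fictional brands; the last mentions no tracked brand.
answers = [{"Acme", "Globex"}, {"Acme"}, {"Globex", "Initech"}, set()]
print(presence_and_share_of_voice(answers, "Acme"))  # (0.5, 0.4)
```

Counting each brand once per answer keeps a single verbose answer from dominating; counting raw mentions is an equally defensible choice, so ask any vendor which convention its dashboards use.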

Profound at a glance (2025)

Profound is an enterprise generative engine optimization / answer engine optimization (GEO/AEO) platform with real-time monitoring across 10+ engines (e.g., ChatGPT, Perplexity, Google AI Overviews and AI Mode, Claude, Copilot). It publishes research on cross-engine citation overlap and volatility and exposes a documented REST API and SDKs for programmatic reporting. In 2025, Profound also states SOC 2 Type II compliance on its official pages, with the report typically available “upon request.” For technical details, see the “Pricing” page and plan notes and the “REST API Introduction” on Profound’s site; for trust-signal research, see its 2025 blog posts on cross-engine “citation overlap strategy” and “AI search volatility.”

What it measures and how: Large-scale citation tracking (hundreds of millions of citations) with overlap/volatility analysis; sentiment monitoring; competitive benchmarking; platform-specific modules (e.g., ChatGPT Shopping); and export options via API/SDKs.

Strengths for trust work: Quantified citation behavior across engines; programmatic access for BI; research-backed understanding of how often engines agree or drift—useful when your goal is to harden citation authority and consistency.

Constraints to note: Although SOC 2 Type II compliance is stated, we could not locate a dedicated public trust center in 2025; request the report to validate the claim. Some methodology details live in blog posts rather than consolidated documentation.

Who it’s for: Agencies and data-savvy brand teams that want to instrument trust measurement at scale, feed dashboards via API, and run experiments tied to citation patterns.

Side-by-side snapshot

| Dimension | Brandlight | Profound |
|---|---|---|
| Cross-engine coverage (2025) | Vendor-asserted coverage of 11 engines; confirm list and capture cadence in demo. | 10+ engines documented across site and blogs; real-time monitoring emphasized. |
| Trust signals depth | Signals hub: AI Presence, Share of Voice, Sentiment, Narrative Consistency; strong on governance framing. | Citation datasets, overlap/volatility research, sentiment, competitive benchmarking; strong on quantified patterns. |
| Auditability & governance | RBAC, audit trails, metadata for traceability described in support docs; compliance posture implied. | Enterprise features, SSO/RBAC; SOC 2 Type II stated on-site; ask for report and data lineage details. |
| Reporting / Exports / API | Articles imply dashboards/exports; public API specifics less visible; confirm BI/white-label paths in demo. | REST API + SDKs publicly documented; CSV/BI exports and agency workflows described. |
| Pricing transparency (2025) | Not publicly listed; contact sales. | Pricing page with plan outlines; details vary by tier. |
| Best-fit scenario | Governance/audit-first teams in regulated contexts prioritizing reproducibility. | Agencies prioritizing quantified citation authority and programmatic reporting. |

Which one strengthens trust faster? Scenario guidance

Governance/audit priority: If your client’s legal or compliance team leads the conversation, Brandlight’s governance-first approach can accelerate approvals. RBAC, audit trails, and metadata capture help you prove how trust signals were collected and transformed. The trade-off is that you’ll need to validate API/export breadth and any white-label pathways if reporting velocity is a must.

Citation authority and cross-engine patterns: If your mandate is “make our brand the cited source more often, and consistently across engines,” Profound’s published overlap/volatility research and programmatic access will likely get you testing faster. You can measure whether a playbook (author pages, citation seeding, source consolidation) actually shifts cross-engine agreement and reduces drift.

Agency reporting velocity: Teams that need to spin up dashboards, run comparative views across many clients, and ship repeatable scorecards may find Profound’s documented API/SDKs faster to wire into BI. Brandlight may still be the right fit where the reporting story must begin with governance proof before outcome charts.

Here’s the deal: “Faster” depends on your blocker. If approvals are slow, governance wins time. If measurement plumbing is slow, an open API wins time. If cross-engine inconsistency is the pain, work where citation overlap can be tracked and influenced.
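One way to operationalize “cross-engine agreement” and “drift” from the citation scenario is Jaccard overlap between sets of cited domains. The Python sketch below assumes you have the cited domains per engine for one query at two points in time (for example, before and after a playbook change); the function names and the mean-pairwise-Jaccard metric are illustrative choices, not Profound’s published method.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two citation sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cross_engine_agreement(cites: dict[str, set[str]]) -> float:
    """Mean pairwise Jaccard overlap of cited domains across engines for one query."""
    pairs = list(combinations(sorted(cites), 2))
    if not pairs:
        return 1.0  # zero or one engine: nothing to disagree with
    return sum(jaccard(cites[x], cites[y]) for x, y in pairs) / len(pairs)


def drift(before: dict[str, set[str]], after: dict[str, set[str]]) -> dict[str, float]:
    """Per-engine drift between two snapshots: 1 - Jaccard (0 = unchanged, 1 = fully replaced)."""
    engines = sorted(before.keys() | after.keys())
    return {e: 1.0 - jaccard(before.get(e, set()), after.get(e, set())) for e in engines}


# Made-up snapshots for one query (example.* domains are placeholders).
before = {"chatgpt": {"acme.example", "reviews.example"}, "perplexity": {"forum.example", "reviews.example"}}
after = {"chatgpt": {"acme.example", "reviews.example"}, "perplexity": {"acme.example", "reviews.example"}}
print(round(cross_engine_agreement(before), 3), cross_engine_agreement(after))  # 0.333 1.0
print({e: round(d, 3) for e, d in drift(before, after).items()})  # {'chatgpt': 0.0, 'perplexity': 0.667}
```

Rising agreement after a playbook change is evidence, not proof, that the change helped: engine-side volatility can move these numbers on its own, so compare against a control query you did not touch.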

What to validate in your demo

Reproducibility: Ask both vendors to trace a specific AI answer back to its capture details (engine, date/time, prompt or prompt pattern used, and any post-processing) and show the audit trail.

Cross-engine baseline: Bring 5–10 queries that matter to your brand; confirm capture for each engine you care about and how often the system refreshes.

Citation authority: For one query, ask each vendor to rank the top 10 citations observed per engine, explain how the system scores authority, and show how it handles short-lived sources.

Sentiment and narrative: Review how sentiment is calculated (lexicon, model, or hybrid) and how narrative consistency is scored across engines and time windows.

API/exports: If you need BI, request a live pull from the API and a sample schema. If you need white-label reports, ask for a templated export to match your client deck.

Governance: For regulated brands, inspect RBAC configurations, change logs, approval workflows, and any metadata captured for legal review.
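For the reproducibility and citation-authority checks, it helps to know what a well-formed capture record could look like. The Python sketch below is a hypothetical yardstick, not either vendor’s schema: a `Capture` record holding the fields the checklist names (engine, timestamp, prompt, post-processing), a SHA-256 fingerprint over a canonical JSON serialization so a stored answer can be re-verified later, and a frequency-ranked top-10 citation list per engine. Raw frequency is only a stand-in for authority; ask vendors how their scoring goes beyond it.

```python
import hashlib
import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Capture:
    """Hypothetical capture record with the fields the demo checklist asks about."""
    engine: str
    captured_at: str  # ISO 8601 timestamp, e.g. "2025-06-01T14:00:00Z"
    prompt: str
    answer_text: str
    citations: tuple[str, ...]
    post_processing: str = "none"


def fingerprint(capture: Capture) -> str:
    """SHA-256 of a canonical JSON serialization; any field change yields a new hash."""
    payload = json.dumps(asdict(capture), sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def top_citations(captures: list[Capture], engine: str, n: int = 10) -> list[tuple[str, int]]:
    """Most frequently cited sources for one engine across captures, highest count first."""
    counts = Counter(url for c in captures if c.engine == engine for url in c.citations)
    return counts.most_common(n)


# Made-up captures for one query.
captures = [
    Capture("chatgpt", "2025-06-01T14:00:00Z", "best widgets", "Acme leads.", ("acme.example", "reviews.example")),
    Capture("chatgpt", "2025-06-02T14:00:00Z", "best widgets", "Acme again.", ("acme.example",)),
]
print(top_citations(captures, "chatgpt"))  # [('acme.example', 2), ('reviews.example', 1)]
print(len(fingerprint(captures[0])))  # 64
```

If a vendor can export something equivalent to these fields, you can recompute the fingerprint yourself during legal review instead of trusting a screenshot.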

Also consider: agency-first scorecards and white-labeling

Disclosure: Geneo is our product. Agencies that want a single, client-facing KPI that turns trust signals (citations, sentiment, E‑E‑A‑T proxies) into a reportable metric often standardize on a Brand Visibility Score with white-label scorecards. For a quick sense of how scorecards read in practice, see Geneo’s query report example and our measurement framework for LLM/answer quality.

Explore a scored report example: the Geneo Query Report for the query “authentic Belizean island vacation experience” shows how a scorecard is structured.

Learn the measurement lens behind GEO: our primer on LLMO metrics (accuracy, relevance, personalization) shows how agencies can report on AI answers beyond traditional SEO. See “LLMO metrics: measuring accuracy, relevance, personalization.”

Ready to see your trust signals on one scorecard?

Book a Geneo demo to validate measurement and visualization differences for your brand’s AI trust signals and walk out with a client-ready scorecard.