Brandlight vs Profound 2026: AI Brand Trust & Repair Speed Comparison

Compare Brandlight vs Profound (2026) for AI brand trust. See negative narrative detection, repair speed, engine coverage, and actionable guidance to choose the best fit.

Generative answers can sway buyer perception before anyone lands on your site. If an AI says your product is outdated or cites a dubious source, trust erodes fast. This how‑to guide focuses on the single most important operational capability for today’s teams: monitoring negative narratives and repairing them quickly across ChatGPT, Google AI Overviews/Gemini, Perplexity, and other answer engines. For readers new to the concept, see the definition of AI visibility in Geneo’s blog: AI visibility explained.

The fast‑response loop for negative narratives

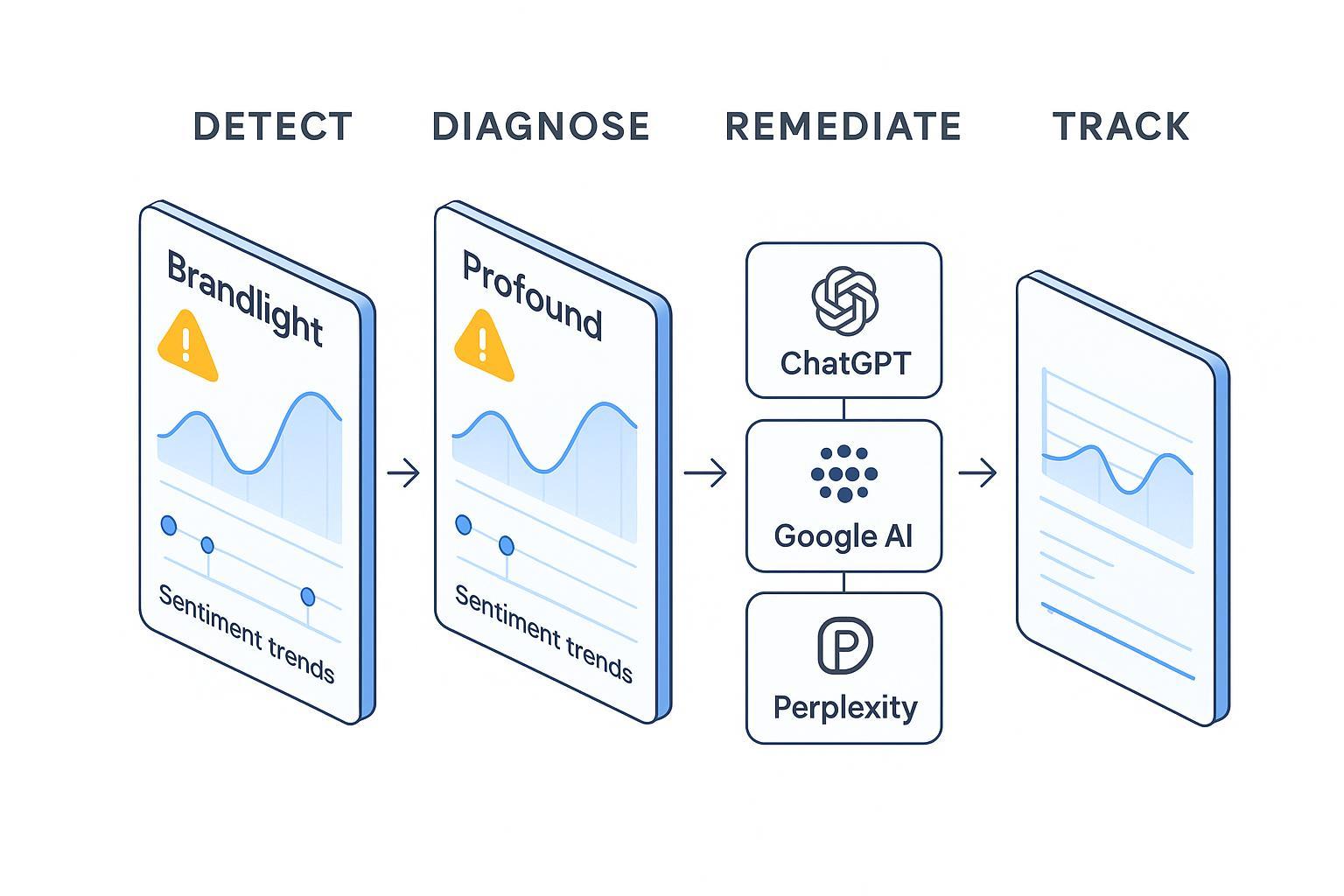

Here’s the deal: to protect brand trust in AI search, run a repeatable loop—detect → diagnose → remediate → track.

Detect

Maintain watchlists for sensitive queries (brand + product, comparisons, “is it safe,” “is it a scam,” etc.).

Poll engines on a fixed cadence (e.g., daily for core queries, hourly during incidents). Track sentiment drift and narrative bias flags.

Incorporate early‑warning sources that frequently seed AI answers: Reddit communities, Wikipedia, niche industry blogs, and government/regulatory pages.

Diagnose

Capture evidence trails: which sources are being cited, how frequently, and by which engines. Map citation clusters (e.g., Reddit → tech subs; Wikipedia → outdated sections).

Record output diffs across engines: Are Perplexity answers more negative than ChatGPT? Does Google AI Overviews skip authoritative sources?

Identify the root cause: missing facts, weak corroboration, skewed community threads, or outdated structured data.

Remediate

Fix source hygiene: update or create authoritative explainer pages; add expert citations and clear facts; improve structured data (schema.org), FAQs, and product specs.

Engage credible communities: correct misinformation on Reddit with transparent, well‑sourced replies; publish clarifications on your site and link them.

Strengthen corroboration: publish third‑party endorsements, research, or regulatory references that answer engines can pick up.

Track

Snapshot before/after outputs by engine; note latency to improvement (hours/days/weeks).

Maintain executive‑grade reporting: trend sentiment, citation quality, and answer share. Tie insights to actions taken.

Validate durability: do fixes hold through model updates and algorithm changes?

What Brandlight and Profound bring to detection and repair (as of Jan 2026)

Below, we summarize each platform’s public positioning and evidence. The focus is negative narrative monitoring and repair speed. Where public documentation is silent, we call it out so buyers can ask the right questions.

Brandlight

Brandlight presents AI brand visibility and reputation workflows with multi‑engine tracking, incident response, and remediation recommendations. The Solutions page (accessed Jan 2026) says teams can “monitor brand mentions … track sentiment … identify key publishers” and receive “real‑time alerts for negative or unauthorized brand references,” with “recommended actions” for remediation: Brandlight Solutions. A strategic partnership with Data Axle adds market validation: PR Newswire: Data Axle and Brandlight.ai partnership (Nov 4, 2025). An anomaly/spike detection article on a Brandlight subdomain suggests autonomous baselining and real‑time anomaly detection: spike detection overview.

Strengths (evidence‑backed)

Incident response and remediation language are explicit on public pages; multi‑engine tracking is repeatedly stated.

Partnership with Data Axle signals enterprise positioning and credibility.

Constraints/gaps (as of Jan 2026)

Public pages do not document alert delivery channels (Slack, email, webhooks) or configurable thresholds.

No public pricing or technical docs were found; engine list is not fully enumerated.

Profound

Profound positions itself with productized modules—Conversation Explorer/Prompt Volumes and Answer Engine Insights—showing cross‑engine visibility, sentiment, and citation behaviors. The Answer Engine Insights page (updated Jan 5, 2026) references monitoring responses from RAG‑based search approaches across engines such as ChatGPT, Perplexity, Microsoft Copilot, and Google AI Overviews. Profound publishes frequent research on citation patterns and sentiment, including quantified analyses of Reddit’s role in AI answers: the data on Reddit and AI search. Pricing is public: Profound pricing.

Strengths (evidence‑backed)

Rich, engine‑specific research corpus with quantified citation behaviors; clear evidence tracing.

Public pricing and case narratives; Conversation Explorer data adds context for monitoring prompt demand.

Constraints/gaps (as of Jan 2026)

Public pages do not list alert notification channels (Slack, email, webhooks) or provide SLA/lateness benchmarks.

Automation specifics for remediation steps are not exposed.

Table: Brandlight vs Profound — negative narrative monitoring and repair (as of Jan 2026)

Dimension | Brandlight | Profound |

|---|---|---|

Detection & alerting posture | Public copy references “real‑time alerts” for negative mentions; anomaly/spike detection article suggests autonomous baselining. Alert channels/thresholds not documented. Sources: Solutions; spike detection. | Demonstrates cross‑engine monitoring and sentiment/citation analyses; frequent research surfaces negativity patterns. Alert channels/SLA details not public. Sources: Answer Engine Insights. |

Diagnosis: citation/evidence tracing | Mentions “source attribution and content traceability,” but limited public samples. Source: Solutions. | Detailed, engine‑specific citation pattern research and RAG‑answer monitoring. Source: Answer Engine Insights. |

Remediation guidance | Public language on “recommended actions” and brand safety workflows; technical implementation depth not exposed. Source: Solutions. | Research‑backed remediation directions (e.g., addressing Reddit citation balance); automation specifics not exposed. Source: Reddit study. |

Reporting & actionability | Executive framing and incident response narratives; few public reporting samples. Source: Solutions. | Product modules (Prompt Volumes, Answer Engine Insights) plus case pages; dashboards emphasized. Source: Profound pricing. |

Pricing transparency | No public pricing (as of Jan 2026). | Public pricing page. Source: Pricing. |

Scenario‑fit recommendations

Rather than a single winner, use scenario logic. Which scenario describes your current need?

Fast crisis triage (hours)

If you need explicit incident response framing and “real‑time alerts” language, Brandlight’s positioning may align with rapid triage. However, ask about alert channels and thresholds to ensure notifications reach the right responders.

If your team relies on deep root‑cause analysis to prioritize fixes, Profound’s research and citation diagnostics can accelerate clarity on where negativity originates (e.g., Reddit threads, outdated Wikipedia sections).

Slow‑burn sentiment drift (weeks)

Profound’s engine‑specific citation and sentiment patterns help you measure drift and plan source‑ecosystem remediation over time.

Brandlight’s remediation recommendations may support programmatic cleanup; validate how those recommendations translate into specific source fixes.

Cross‑engine asymmetry (ChatGPT vs Perplexity vs Google AI Overviews)

Profound’s comparative outputs and RAG‑monitoring lens are useful for mapping differences across engines.

Brandlight’s multi‑engine visibility framing can help keep executives aligned on which engines are trending negative.

Agency/executive reporting across brands

Profound’s modules and public pricing can simplify scoping.

Brandlight’s executive narrative may resonate for leadership updates; confirm reporting exports and white‑label options.

Buyer validation checklist: questions that prove repair speed

When vendors don’t publish alert plumbing or SLAs, you should ask:

Alerting channels and routing

Which outbound channels are supported (Slack, email, Teams, webhooks), and can alerts be routed by query, entity, or engine?

Can you configure thresholds for sentiment drift, citation changes, or engine‑specific anomalies?

Detection cadence and latency

What is the polling cadence by engine, and can we spike polling during incidents?

What’s the typical latency from negative narrative emergence to detection and alert delivery?

Evidence logs and traceability

Do you store per‑engine evidence snapshots with timestamps and citation lists? How long is history retained?

Can we export diffs of answer text and source lists for before/after comparisons?

Remediation mapping and integrations

Do recommendations tie to specific source fixes (e.g., schema additions, page updates, expert citations)?

Are there integrations or workflows (tickets, webhooks) to push actions to CMS/issue trackers?

Reporting cadence and outcome tracking

Can we schedule weekly/monthly executive reports with sentiment trends, citation quality scores, and recovery timelines?

Do dashboards show durability across model updates?

Run the remediation playbook (step‑by‑step)

Use this text checklist to move from crisis to recovery.

Spin up monitoring

Define a “negative‑risk” query set and polling cadence by engine; include community sources. For more background on GEO versus traditional SEO, see Geneo’s primer: Traditional SEO vs GEO.

Detect and log

When a negative narrative appears, capture answer text, sentiment score, and all citations by engine. Tag the incident and route to the right owner.

Diagnose the root cause

Cluster citations; identify weak or biased sources. Note missing facts, outdated pages, or schema gaps. If you need conceptual workflows for remediation planning, consult Geneo’s documentation overview: Geneo docs.

Plan remediation actions

Draft concrete fixes: update product pages with verified specs, add FAQ entries, implement schema.org Product/FAQ, cite third‑party research, and address community threads with transparent corrections.

Execute and publish

Push updates through CMS; engage communities; request expert quotes and third‑party validations. Consider structured data tests and crawlability checks.

Track outcomes

Re‑poll engines; compare before/after; report latency to improvement and durability across updates. Adjust the playbook based on what moved the needle.

Also consider

Disclosure: Geneo is our product. If you’re building GEO/AEO operations and need competitive visibility scoring, multi‑engine monitoring, and white‑label reporting for clients, see Geneo’s conceptual overview of AI visibility: AI visibility explained on Geneo’s blog.

Next steps checklist

Shortlist vendors and run a 2–4 week pilot on your highest‑risk queries.

Instrument alert routing and validate detection latency in practice.

Execute at least one remediation cycle end‑to‑end and measure durability.

Build a lightweight playbook that your team can run on repeat—because the next narrative turn might be one question away.