Agency Playbook: Boost Brand Visibility in AI Search Results

Practical SOPs, tool comparisons, and KPI templates for UK agencies to improve brand visibility in AI search results with white‑label reporting and automation.

When clients ask why their traffic fell even though rankings look fine, the short answer is often AI-generated answers. Google’s AI Overviews, Bing’s Copilot, and Perplexity surface summaries that satisfy intent faster, often without a click. For UK agency owners, winning inside these answers is now table stakes. This article compares practical tools and lays out best‑practice workflows that improve operational efficiency while increasing brand visibility in AI search results.

Key takeaways

AI answers change user behavior; prioritize inclusion and citations to protect discoverability and brand visibility in AI search results.

Focus on eligibility: crawlability, helpful content, structured formats, and freshness. There are no “AI‑only” hacks.

Build an agency SOP that standardizes onboarding, monitoring, optimization, and white‑label reporting.

Track AI‑specific KPIs (Answer Inclusion Rate, AI Citation Share) and automate alerts to reduce manual work.

Treat compliance (GDPR, robots.txt for AI crawlers) as part of delivery, not an afterthought.

Why AI Overviews (and LLM answers) matter for agencies

On informational queries that trigger AI summaries, studies report lower click-through rates, especially for brands that are not cited. Search Engine Land reported pronounced CTR declines on AIO pages across 2024–2025 cohorts, and cited sources tended to fare better than uncited peers. For operational context, see Search Engine Land’s 2024 and 2025 AI Overviews impact coverage and Semrush’s AI Overviews study.

Operationally, this means two things: you need systematic monitoring of inclusion and citations, and you should modernize reporting so clients see their visibility inside AI answers, not just in classic SERPs. Once it is measured and automated, improving brand visibility in AI search results becomes a repeatable process.

Signals that drive inclusion and citations

There’s no secret markup for AI features. Platforms emphasize the same fundamentals that good SEO teams already practice:

Helpful, people‑first content that demonstrates E‑E‑A‑T

Crawlability and index eligibility (Googlebot/Bingbot allowed, correct canonicals, no accidental noindex)

Clear, structured formats (FAQ, HowTo, Lists, descriptive headings)

Freshness: up‑to‑date copy, sitemaps, IndexNow (for Bing)

Robust internal linking and accessible, fast-loading pages
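Two of those eligibility checks, stray noindex directives and wrong canonicals, are easy to spot-check in bulk. A minimal sketch using Python’s standard-library `html.parser`, assuming you already have each page’s HTML as a string; `check_index_signals` is a hypothetical helper name, and the sketch does not look at `X-Robots-Tag` HTTP headers or robots.txt, which need separate checks.

```python
from html.parser import HTMLParser


class IndexSignalParser(HTMLParser):
    """Collects robots meta directives and rel=canonical hrefs from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_directives: list[str] = []
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag and attribute names; attribute values may be None
        attr = {k: (v or "") for k, v in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot", "bingbot"):
            # e.g. content="noindex, follow" -> ["noindex", "follow"]
            self.robots_directives += [d.strip().lower() for d in attr.get("content", "").split(",")]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def check_index_signals(html: str, expected_url: str) -> list[str]:
    """Return human-readable issues; an empty list means none were found in the HTML."""
    parser = IndexSignalParser()
    parser.feed(html)
    issues = []
    if "noindex" in parser.robots_directives or "none" in parser.robots_directives:
        issues.append("page carries a noindex directive")
    if len(parser.canonicals) != 1:
        issues.append(f"expected one canonical, found {len(parser.canonicals)}")
    elif parser.canonicals[0].rstrip("/") != expected_url.rstrip("/"):
        # trailing slashes are ignored; any other difference is flagged
        issues.append(f"canonical points elsewhere: {parser.canonicals[0]}")
    return issues
```

Run it across the client’s priority URLs during onboarding and again after each release, since template changes are a common source of accidental noindex tags.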

Google states that AI features surface links to high‑quality pages and that traffic from AI features is included in Search Console’s performance data under the “Web” search type; see Google’s AI features guidance. Microsoft’s guidance similarly recommends structured data and technical hygiene to help its systems understand content for AI answers; see Microsoft Advertising’s AI answers guidance.

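A quick HowTo schema example in JSON-LD to reinforce clarity. Google stopped showing HowTo rich results in 2023, so treat this markup as a comprehension aid rather than a rich-result trigger: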

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "HowTo",
  "name": "Set up weekly AI visibility monitoring",
  "step": [
    {"@type": "HowToStep", "name": "Define query set", "text": "List 50–100 industry queries across Google, Bing, and Perplexity."},
    {"@type": "HowToStep", "name": "Validate crawlability", "text": "Check robots.txt, canonicals, indexing status."},
    {"@type": "HowToStep", "name": "Schedule reports", "text": "Automate weekly alerts and monthly PDFs."}
  ]
}
</script>
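Rather than hand-editing markup page by page, a template can generate it from content your CMS already stores. A minimal sketch in Python, assuming steps are kept as (name, text) pairs; `howto_jsonld` is a hypothetical helper, and the output should still be validated before publishing (for example with the Schema Markup Validator).

```python
import json


def howto_jsonld(name: str, steps: list[tuple[str, str]]) -> str:
    """Build a schema.org HowTo JSON-LD <script> tag from (name, text) step pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [
            {"@type": "HowToStep", "name": step_name, "text": step_text}
            for step_name, step_text in steps
        ],
    }
    # ensure_ascii=False keeps characters such as en dashes readable in the page source
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so text containing "</script>" cannot close the tag early; "\/" is valid JSON
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Generating markup from one function keeps every page on the same template, so a schema change is made once rather than per page.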

Agency SOP for brand visibility in AI search results: Onboard → Monitor → Optimize → Report

Standardize delivery so your team spends more time improving pages and less time manually checking engines.

Onboarding setup (4–6 hours):

Define a reproducible query set per client (by product/service, UK/EU variants, intent tiers).

Confirm crawl/index eligibility: fix canonicals and stray noindex tags, and allow Googlebot/Bingbot. Decide your stance on AI crawlers.

Map content gaps to structured formats (FAQ/HowTo/Article). Establish update cadence and owners.
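A reproducible query set is easier to maintain when it is generated rather than typed. A minimal sketch, assuming queries are built from service names, regions, and one template per intent tier; the templates, tier names, and `build_query_set` helper are illustrative, not a prescribed taxonomy.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class MonitoredQuery:
    service: str
    region: str
    intent: str
    text: str


def build_query_set(services, regions, templates):
    """Expand services x regions x intent templates into a de-duplicated, sorted query set.

    `templates` maps an intent tier to a format string using {service} and {region}.
    """
    queries = {
        MonitoredQuery(s, r, intent, tpl.format(service=s, region=r))
        for s, r, (intent, tpl) in product(services, regions, templates.items())
    }
    # A fixed sort order keeps the set identical run to run, so month-on-month KPIs compare like with like
    return sorted(queries, key=lambda q: (q.service, q.region, q.intent, q.text))


templates = {
    "informational": "how much does {service} cost in the {region}",
    "commercial": "best {service} providers {region}",
}
query_set = build_query_set(["tax advisory", "payroll"], ["UK", "EU"], templates)
print(len(query_set))  # 8 queries: 2 services x 2 regions x 2 intent tiers
```

Versioning the inputs (services, regions, templates) alongside the reports makes it clear when a KPI change comes from a changed query set rather than from the engines.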

Monitoring cadence (1–2 hours/week):

Track inclusion and citations across engines; note domains cited.

Watch freshness signals (IndexNow, sitemaps, internal linking). Log changes.
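Noting which domains are cited is simplest when each check is logged as a record and counted afterwards. A minimal sketch, assuming your monitoring tool or manual checks produce, per engine and query, the list of cited URLs; the record shape and engine labels are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def cited_domains(citation_urls):
    """Count citations per domain; hostnames are lower-cased and a leading 'www.' is dropped."""
    counts = Counter()
    for url in citation_urls:
        host = (urlsplit(url).hostname or "").removeprefix("www.")
        if host:  # skip entries that are not absolute URLs
            counts[host] += 1
    return counts


def weekly_domain_report(observations):
    """Aggregate cited domains per engine from (engine, query, cited_urls) records."""
    per_engine = {}
    for engine, _query, urls in observations:
        per_engine.setdefault(engine, Counter()).update(cited_domains(urls))
    return per_engine
```

`report["perplexity"].most_common(5)` then lists the five most-cited domains on that engine for the week, which is a quick way to see which competitors are gaining citations.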

Optimize content/tech (varies: 4–10 hours/month):

Improve headings, summaries, and answer sections; add validated schema.

Strengthen topical coverage and internal links; update stale pages.

Resolve accessibility and performance issues impacting comprehension.

Report and automate (1–2 hours/month):

Produce a white‑label PDF and dashboard that highlights AI visibility alongside SEO metrics.

Schedule email delivery; set Slack/Zapier alerts for drops in key KPIs.

Implementation checklist (operational efficiency)

Define query set and competitor cohort

Validate crawl/index eligibility; review robots.txt

Implement and test schema (FAQ/HowTo/Article/Organization)

Enable IndexNow (Bing) and keep sitemaps fresh

Build GA4 + GSC + Looker Studio dashboard; schedule PDFs

Set Slack alerts for KPI thresholds (e.g., 10% MoM AIR drop)

Document data retention and complete a DPIA where needed (ICO guidance)
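For the IndexNow item in the checklist above, a submission is a JSON POST listing changed URLs for one host, authenticated by a key file you host on the site. A sketch that builds (but does not send) such a request with the standard library; the endpoint URL is an assumption to confirm against the IndexNow documentation, and `build_indexnow_request` is a hypothetical helper.

```python
import json
import urllib.request
from urllib.parse import urlsplit

# Assumed shared endpoint; confirm against the current IndexNow documentation
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(key, urls, key_location=None):
    """Build (but do not send) an IndexNow bulk submission for URLs on a single host."""
    hosts = {urlsplit(u).hostname for u in urls}
    if len(hosts) != 1:
        raise ValueError("IndexNow bulk submissions should contain URLs from one host")
    payload = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location:
        # Only needed when the key file is not served at https://<host>/<key>.txt
        payload["keyLocation"] = key_location
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )

# Send with urllib.request.urlopen(req) once the key file is live on the client's site.
```

Building the request separately from sending it makes it easy to log exactly what was submitted for each client, which helps when reconciling re-crawl timing with AIR changes.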

Measurement framework and client‑ready templates

Clients need numbers they can understand and that your team can reproduce. Use clear definitions and simple formulas to quantify brand visibility in AI search results.

Answer Inclusion Rate (AIR) = cited_queries / total_monitored_queries

AI Citation Share = client_citations / total_citations_in_category

Share of AI Mentions = brand_mentions_in_AI / total_AI_answers_monitored

Visibility‑to‑Clicks (est.) = estimated_clicks_from_AI / estimated_AI_impressions
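The formulas translate directly into code. A minimal sketch, assuming each monitoring run yields one record per query and engine (so a query checked on three engines counts three times toward `total_monitored_queries`); the `AnswerObservation` fields are hypothetical and should be mapped to whatever your monitoring tool exports. Visibility-to-Clicks is left out because both of its inputs are estimates from outside the monitoring data.

```python
from dataclasses import dataclass


@dataclass
class AnswerObservation:
    """One monitored query on one engine, as captured in a monitoring run."""
    query: str
    engine: str
    answer_shown: bool       # did the engine show an AI answer at all?
    client_cited: bool       # is a client URL among the answer's citations?
    client_mentioned: bool   # is the brand named in the answer text?
    total_citations: int     # all citations in the answer, any domain
    client_citations: int    # citations pointing at client domains


def _ratio(numerator, denominator):
    # Return 0.0 instead of raising when nothing was observed
    return numerator / denominator if denominator else 0.0


def compute_kpis(obs: list[AnswerObservation]) -> dict[str, float]:
    answers = [o for o in obs if o.answer_shown]
    return {
        # AIR: cited_queries / total_monitored_queries (includes queries with no AI answer)
        "answer_inclusion_rate": _ratio(sum(o.client_cited for o in obs), len(obs)),
        # AI Citation Share: client_citations / total_citations_in_category
        "ai_citation_share": _ratio(
            sum(o.client_citations for o in answers), sum(o.total_citations for o in answers)
        ),
        # Share of AI Mentions: brand_mentions_in_AI / total_AI_answers_monitored
        "share_of_ai_mentions": _ratio(sum(o.client_mentioned for o in answers), len(answers)),
    }
```

Returning 0.0 for an empty denominator keeps dashboards from erroring in a month with no AI answers, but flag such months in commentary rather than reporting them as a genuine zero.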

Context and tooling: Google confirms that traffic from AI features is counted within Search Console’s “Web” search type rather than reported separately, so it helps triangulate patterns but cannot isolate AI traffic on its own; see Google’s AI features doc. For CTR trends and modeling assumptions, industry aggregates such as Semrush and Search Engine Land provide directional context.

Client‑ready snippets you can adapt for monthly PDFs:

Executive summary: “Your brand’s Answer Inclusion Rate rose to X% this month. Competitor citations increased in category Y; we prioritized updates to FAQ pages and refreshed two guides.”

Trends & actions: “We flagged a 12% drop in AI Citation Share for ‘tax advisory’ queries; actions: add HowTo schema, update service page summaries, and resubmit sitemaps to prompt a re‑crawl.”

Next month plan: “Focus on three pages with high impressions but low AI citations; add illustrative examples and anchor links to sub‑answers.”

For extended KPI definitions and reporting examples, see our KPI frameworks explainer.

Tools & workflows for brand visibility in AI search results (compare + best practice)

Below is a concise, operations‑first view of common tools and where they fit. Pair them into simple workflows to minimize manual work.

Category | Tools (examples) | Where it helps | Agency notes |

|---|---|---|---|

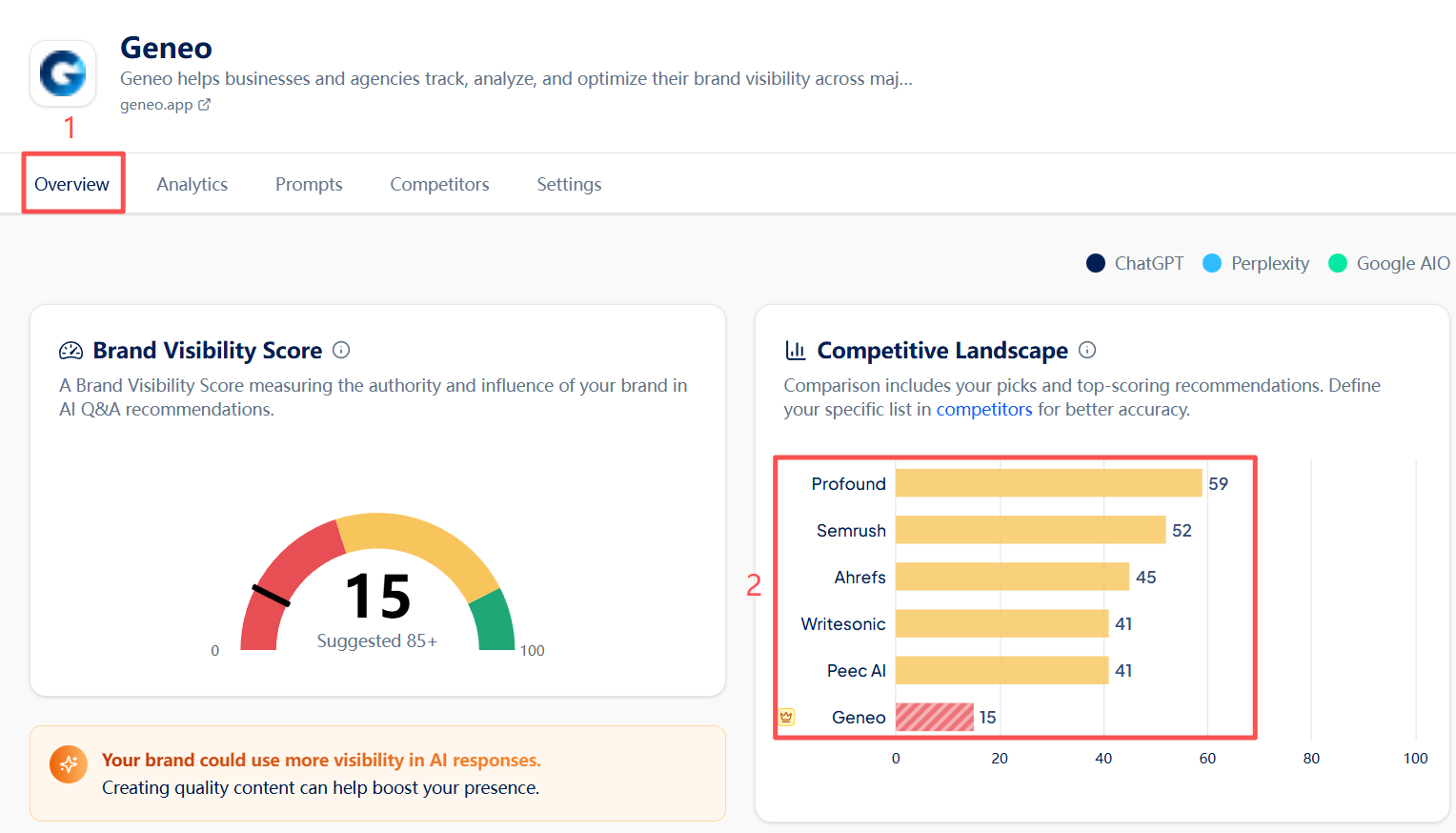

Monitoring & visibility | Geneo; Semrush | Track inclusion, citations, competitor references | White‑label options; validate claims via reproducible query sets |

Structured data & validation | Schema App; Google Rich Results Test | Implement/verify FAQ/HowTo/Article, org/entity clarity | Bake schema into CMS; maintain templates |

Reporting & automation | GA4; GSC; Looker Studio; Slack/Zapier; BigQuery | Dashboards, scheduled PDFs, anomaly alerts | Isolate client data; set alert thresholds; document retention |

Best‑practice pairing recipes:

Monitoring → Reporting: Track inclusion weekly; pipe summary to Looker Studio; email monthly PDF.

Schema → Monitoring: Update templates; validate; watch AIR changes next cycle.

Alerts → Incident workflow: If AIR drops ≥10% MoM, trigger an optimization sprint and a client heads‑up.
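The alert recipe above can be expressed as a small check. A sketch, assuming “10% MoM” means a relative drop (0.40 to 0.36 is a 10% drop), not 10 percentage points; `air_alert` is hypothetical, and the returned dict is the basic `{"text": ...}` body that Slack incoming webhooks accept, which you would POST as JSON to your own webhook URL.

```python
def mom_relative_change(previous: float, current: float) -> float:
    """Relative month-over-month change, e.g. 0.40 -> 0.34 is about -0.15 (a 15% drop)."""
    if previous == 0:
        raise ValueError("previous value is zero; relative change is undefined")
    return (current - previous) / previous


def air_alert(client: str, previous_air: float, current_air: float, threshold: float = 0.10):
    """Return a Slack incoming-webhook payload if AIR fell by at least `threshold`, else None."""
    # Round so float noise (e.g. -0.0999999...) does not miss a drop of exactly the threshold
    change = round(mom_relative_change(previous_air, current_air), 6)
    if change > -threshold:
        return None
    text = (
        f"{client}: Answer Inclusion Rate fell {abs(change):.0%} MoM "
        f"({previous_air:.0%} -> {current_air:.0%}). "
        "Start an optimization sprint and send the client a heads-up."
    )
    return {"text": text}
```

Whichever definition you choose, relative or percentage points, state it in the client report so a flagged drop means the same thing every month.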

Compliance and client communication

UK agencies should embed UK GDPR compliance into generative engine optimization (GEO) delivery. Establish a lawful basis (often legitimate interests, supported by a Legitimate Interests Assessment), minimize data (store summary outputs, not raw chats), and define retention periods in contracts and privacy notices. See the ICO’s guidance on lawfulness in AI: “How do we ensure lawfulness in AI?”

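Decide your stance on AI crawlers. OpenAI documents robots.txt controls for separate user agents, including GPTBot (used for model training) and OAI-SearchBot (used for ChatGPT search features). Allowing the search crawler can support inclusion in AI answers, while blocking it may reduce inclusion; blocking GPTBot alone acts as a training opt-out. Review and log bot activity. See OpenAI’s approach to data and AI.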
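Once the policy is decided, verify that robots.txt actually says what you intend for each bot. A minimal sketch with Python’s `urllib.robotparser`, assuming you have already fetched the robots.txt text; the agent list is illustrative, and the standard-library parser implements the classic robots.txt rules, so its handling of path wildcards and rule precedence can differ from how Google or OpenAI interpret the same file.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens to audit; adjust per client policy
AI_AGENTS = ("GPTBot", "OAI-SearchBot", "Googlebot", "Bingbot")


def crawler_access(robots_txt: str, url: str, agents=AI_AGENTS) -> dict[str, bool]:
    """Report, per user agent, whether the robots.txt rules allow fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}
```

Logging this result alongside each robots.txt change gives you an audit trail for the stance you agreed with the client.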

Client disclosure template (adapt as needed): “We monitor how your brand appears in AI answers (Google, Bing, Perplexity). We collect summary outputs (no personal data) to report inclusion/citations and trends. Processing is based on legitimate interests, with retention limited to 12 months.”
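The 12-month retention promised in the template is easiest to honour with a scheduled pruning job. A minimal sketch, assuming each stored summary output carries a timezone-aware `captured_at` timestamp (a hypothetical field name) and approximating 12 months as 365 days; actually deleting the expired records is left to your storage layer.

```python
from datetime import datetime, timedelta, timezone

# "12 months" approximated as 365 days; align with the wording in your contract
RETENTION = timedelta(days=365)


def prune_expired(records, now=None):
    """Split stored summary records into (kept, expired) by their 'captured_at' timestamp."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - RETENTION
    kept = [r for r in records if r["captured_at"] >= cutoff]
    expired = [r for r in records if r["captured_at"] < cutoff]
    return kept, expired
```

Returning the expired list rather than deleting in place lets you log what was removed, which is useful evidence if a client asks how retention is enforced.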

Practical example — a white‑label monthly snapshot with Geneo

Disclosure: Geneo is our product.

A pragmatic way to reduce manual checks is to standardize a monthly visibility snapshot. Using Geneo, an agency can configure a reproducible query set (e.g., 80 category questions across Google AI Overviews, Bing/Copilot, and Perplexity) and monitor whether the client is cited and which domains are referenced. The snapshot highlights Answer Inclusion Rate, AI Citation Share, and domain‑level references so teams know where to focus.

From there, export a white‑label PDF under the agency’s brand and attach commentary: what changed, which pages were updated, and the plan for next month. Agencies often pair this with a Looker Studio dashboard for ongoing trends. If a drop exceeds your alert threshold (say, a 10% AIR decrease month‑over‑month), trigger the incident workflow: refresh structured content (FAQ/HowTo), fix crawl issues, and request re‑indexing. For setup guides and methodology, see Geneo docs.

Next steps

Want to streamline white‑label AI visibility reporting across clients? Explore the agency features and workflow options at Geneo for Agencies.

Appendix: Incident playbook (condensed)

If visibility drops beyond your threshold, run a tight 5‑step sprint:

Verify: Confirm the drop across engines and rule out measurement noise.

Diagnose: Check crawlability, recent content changes, and competitor citations.

Act: Update structured content, add schema, improve headings/answers, fix technical issues.

Re‑submit: Refresh sitemaps and use IndexNow (Bing) where appropriate.

Communicate: Send a brief client note with actions taken and the follow‑up plan.