Best Answer Engine Optimization Tools Checklist 2025

Comprehensive AEO/GEO tools checklist for agencies: covers multi-engine monitoring, actionable recommendations, competitive benchmarking, compliance and transparent pricing.

What Makes an Answer Engine Optimization Tool the Best? Key Criteria and How Geneo Stands Out

Picking an Answer Engine Optimization (AEO) or Generative Engine Optimization (GEO) platform isn’t just about shiny dashboards. Agencies need to prove AI visibility to clients, act on opportunities, and scale reporting across brands without manual firefighting. The right tool should show where you appear in AI answers today, explain how to improve that presence, and make those improvements repeatable across clients.

Mini glossary: keep terms straight

AI Overviews vs AI Mode: AI Overviews are AI-generated summaries embedded in standard Google Search results. AI Mode is a separate, opt-in conversational search experience with deeper reasoning and follow-ups. Google outlined this distinction in its May 20, 2025 product update; see the official Google Search AI Mode update.

Citations vs mentions: In AI answers, a citation typically includes a clickable source; a mention references a brand or entity without necessarily linking. Your visibility strategy should track both.
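To make the citation/mention split concrete, here is a minimal Python sketch. The `Answer` shape, the `classify_presence` helper, and the `acme.example` domain are illustrative assumptions, not any vendor's schema; real tools may also treat unlinked source names or partial brand matches differently.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class Answer:
    """One captured AI answer (hypothetical shape, not a vendor schema)."""
    text: str
    source_urls: list[str] = field(default_factory=list)


def classify_presence(answer: Answer, brand: str, domain: str) -> str:
    """Return 'citation', 'mention', or 'absent' for a brand in one answer."""
    for url in answer.source_urls:
        host = urlparse(url).hostname or ""
        # Match the domain itself or any subdomain of it.
        if host == domain or host.endswith("." + domain):
            return "citation"
    # No linked source: fall back to a plain, case-insensitive name match.
    if brand.lower() in answer.text.lower():
        return "mention"
    return "absent"
```

Tracking the two outcomes separately lets a report show, for example, that a brand is named often but rarely linked.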

Core criteria for evaluating AEO/GEO tools

1) Engine coverage and methodology

Your shortlist should start with multi-engine monitoring. At a minimum, confirm coverage across ChatGPT, Perplexity, and Google’s AI surfaces (AI Overviews and AI Mode where available). Then dig into how the vendor measures each surface:

Sampling and cadence: Ask for the prompt templates, query clusters, and re-sampling frequency. Tools commonly rely on prompt sampling and interface capture; industry roundups describe varied cadences. For context, Uproer’s overview of AI visibility tools highlights differences in update cycles and methods; see Uproer’s monitoring tools overview.

Cache and variance handling: Because LLM responses can change, verify session isolation, multi-run sampling, and model/version tracking.

Geo/device coverage: Ensure region-aware sampling (US, UK, EU, AU, APAC) and device parity (desktop/mobile). Google’s own notes reflect rollout differences for AI Mode; reference the Google Search AI Mode update for background.

Validation tip: run manual spot-checks for 10–20 queries across engines and regions during a trial to corroborate tool outputs.
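Multi-run sampling is easier to evaluate once you see how repeated runs roll up into a single number. The sketch below is one possible aggregation, assuming each run is logged as an `(engine, query, cited)` tuple; the tuple layout and function name are hypothetical, and vendors may weight runs differently.

```python
from collections import defaultdict
from statistics import mean


def citation_rate_by_engine(runs):
    """Aggregate repeated runs into a per-engine citation rate.

    `runs` is an iterable of (engine, query, cited) tuples, where `cited`
    is True if the brand was cited in that single run.
    """
    per_query = defaultdict(list)
    for engine, query, cited in runs:
        per_query[(engine, query)].append(1.0 if cited else 0.0)

    per_engine = defaultdict(list)
    for (engine, _query), outcomes in per_query.items():
        # Average within a query first so heavily sampled queries
        # do not dominate the engine-level rate.
        per_engine[engine].append(mean(outcomes))

    return {engine: mean(rates) for engine, rates in per_engine.items()}
```

During a trial, you can run the same aggregation over your manual spot-checks and compare the result with what the tool reports.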

2) Metrics depth and insight

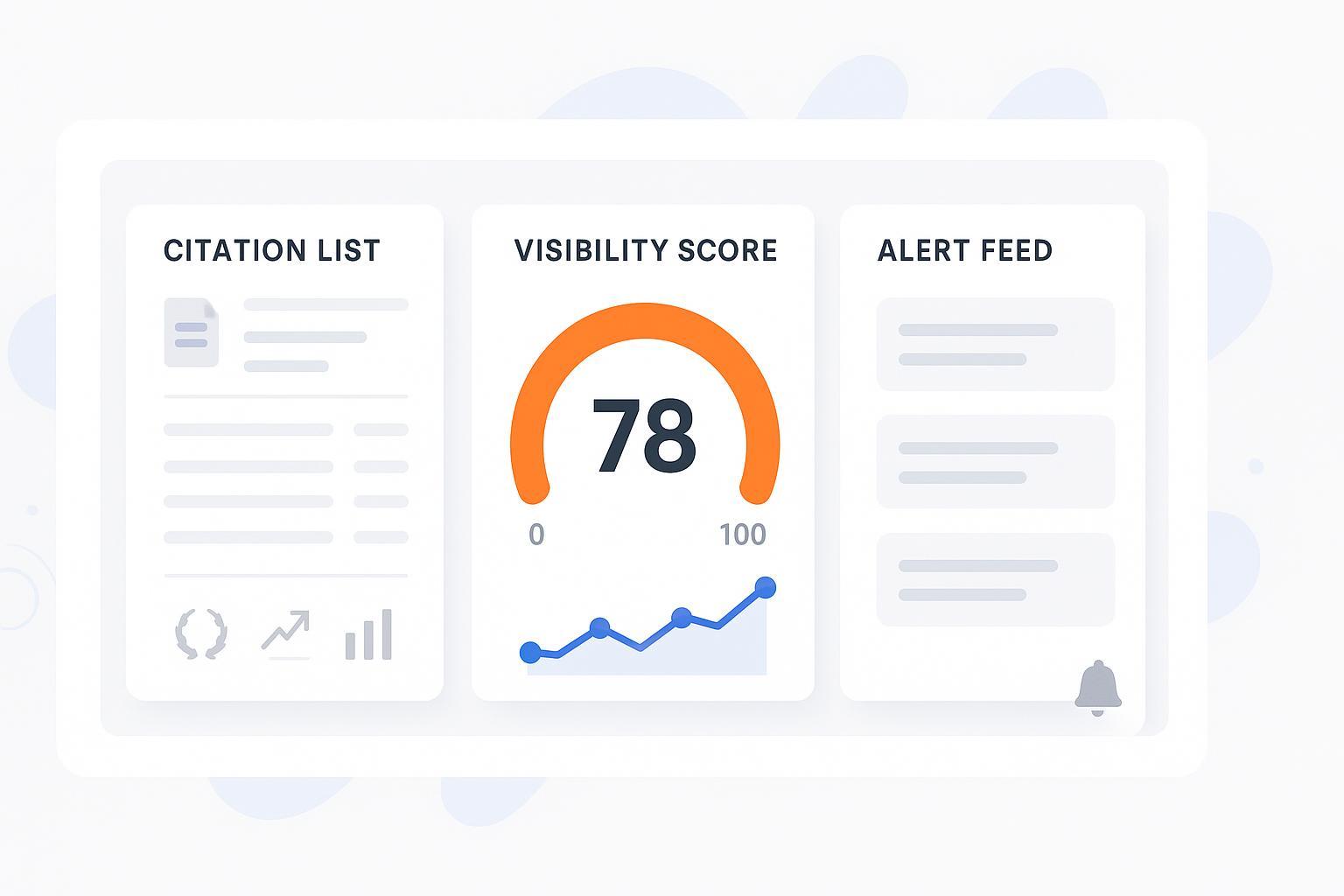

A good platform goes beyond “are you cited?” to “how strongly and where.” Look for:

Visibility/Share of Voice by engine, citation count and positions, volatility trends.

Cohort benchmarking across branded, category, and competitor terms.

KPI frameworks aligned to AI answer quality, such as accuracy, relevance, and personalization. For a primer, see LLMO metrics: measuring accuracy, relevance, personalization.

Ask vendors to show historical trend lines and cohort comparisons that make shifts obvious to clients.
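Share of voice is defined differently across vendors, so it helps to pin down one definition before comparing tools. The following sketch assumes the simplest version, each brand's share of all observed citations per engine, with citations supplied as hypothetical `(engine, brand)` pairs.

```python
from collections import Counter, defaultdict


def share_of_voice(citations):
    """Compute each brand's share of citations per engine.

    `citations` is an iterable of (engine, brand) pairs, one per citation
    observed in the sampled answers.
    """
    counts = defaultdict(Counter)
    for engine, brand in citations:
        counts[engine][brand] += 1

    sov = {}
    for engine, brand_counts in counts.items():
        total = sum(brand_counts.values())
        sov[engine] = {b: n / total for b, n in brand_counts.items()}
    return sov
```

If a vendor's number differs from this count-based share, ask whether they weight by citation position or query volume.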

3) Actionable recommendations (not just data)

Dashboards don’t move rankings; actions do. Your tool should translate findings into prioritized tasks—e.g., entity disambiguation, schema updates, FAQ expansions, author E‑E‑A‑T signals, and source improvements—with clear ties to affected queries and engines.

Workflow integration: Check if tasks can be exported to your PM stack and if recommendations reflect engine-specific evidence.

Prioritization logic: High-impact opportunities should surface first, with rationale you can present to clients.
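When a vendor describes its prioritization logic, it can help to compare it against a simple baseline. This sketch uses an assumed score of reach (affected queries times engines) per day of effort; the `Task` fields and the formula are illustrative, not how any particular tool ranks work.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    affected_queries: int   # how many tracked queries the fix touches
    engines: int            # how many engines show the gap
    effort_days: float      # rough estimate from the delivery team


def priority_score(task: Task) -> float:
    """Illustrative score: reach (queries x engines) per day of effort."""
    # Floor the effort at half a day to avoid dividing by zero.
    return (task.affected_queries * task.engines) / max(task.effort_days, 0.5)


def prioritize(tasks):
    """Return tasks sorted from highest to lowest priority score."""
    return sorted(tasks, key=priority_score, reverse=True)
```

A score this simple is easy to explain to clients, which is the point of asking vendors for their rationale.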

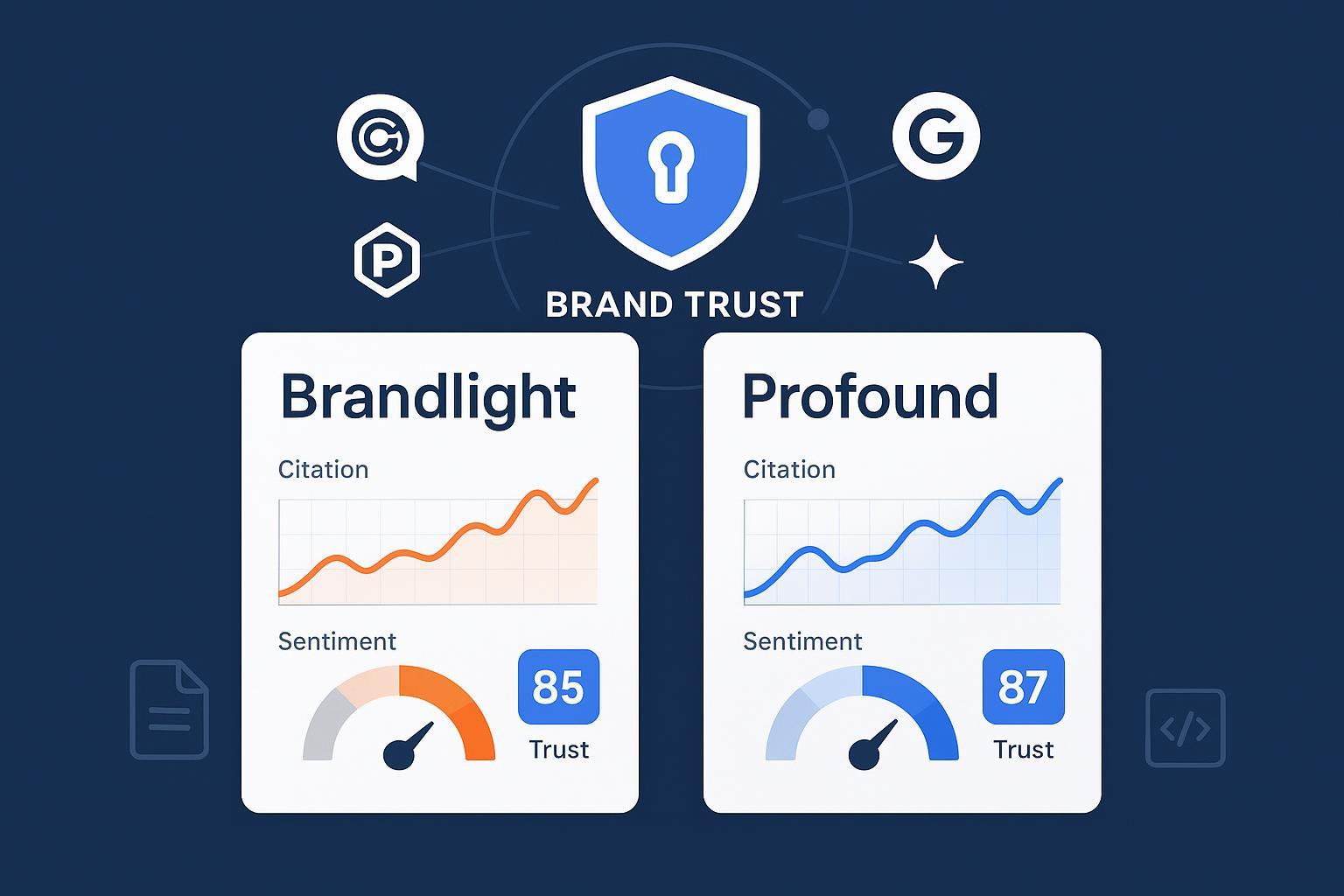

4) Competitive benchmarking and absence alerts

You’re not optimizing in a vacuum. Agencies need to know when competitors overtake citations—and when your brand disappears.

Competitive matrices: Compare cross-engine presence and relative share of voice.

Absence alerts: Tools should alert when a brand stops being cited for target queries, not just when it appears.

Root-cause analysis: Expect guidance on likely causes (e.g., schema regressions, entity confusion, competitor updates) and suggested fixes.
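An absence alert boils down to comparing two snapshots. As a rough sketch, assume each snapshot maps an `(engine, query)` pair to the set of brands cited; that data shape and the function name are assumptions for illustration.

```python
def absence_alerts(previous, current, brand):
    """Return (engine, query) pairs where `brand` was cited before but not now.

    `previous` and `current` map (engine, query) -> set of cited brands.
    Pairs missing from `current` are skipped, since no fresh sample exists.
    """
    alerts = []
    for key, before in previous.items():
        if brand not in before or key not in current:
            continue
        if brand not in current[key]:
            alerts.append(key)
    return sorted(alerts)
```

Because single answers vary, a production check would likely compare multi-run rates rather than one snapshot pair, which is worth asking vendors about.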

5) Governance and scale for agencies

If you run dozens of clients, governance is non-negotiable.

White-label and CNAME: Verify branded domains, template flexibility, and sender domain customization for client-facing emails. See agency-focused features on Geneo’s agency page.

Multi-tenant controls: RBAC, seat management, and client partitions.

Export/API: Full data export (CSV/JSON) with timestamps, plus APIs with sensible rate limits.

SSO and audit logs: Enterprise-grade access controls and traceability.

6) Compliance and security

For regulated or privacy-sensitive brands, demand proofs—not promises.

SOC 2 Type II, GDPR DPA, data residency options, encryption in transit/at rest.

Clear privacy policies, breach notification SLAs, configurable retention.

Trust portals and third-party attestations. As a reference for what good looks like, see Conductor’s security portal.

7) Pricing transparency and total cost of ownership

Agencies need to forecast margins. Look for public pricing with clear inclusions (seats, credits, exports, API limits), stated overage policies, and disclosed fees for any professional services.

Public tiers: Transparent plans simplify procurement and renewal planning.

Hidden costs: Ask about add-ons, regional sampling, or volume limits that could inflate TCO.
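A quick calculation shows how overages change the margin picture. The sketch below assumes a plan with a flat base fee, an included prompt quota, and a per-prompt overage rate; all figures in the usage note are made-up placeholders, not any vendor's pricing.

```python
def monthly_cost(base_fee, included_prompts, used_prompts, overage_per_prompt):
    """Base plan fee plus overage for prompts beyond the included quota."""
    overage = max(0, used_prompts - included_prompts) * overage_per_prompt
    return base_fee + overage


def gross_margin(revenue, cost):
    """Margin as a fraction of revenue."""
    return (revenue - cost) / revenue
```

For instance, with a hypothetical 400 base fee, 1,000 included prompts, 1,500 used, and 0.25 per extra prompt, `monthly_cost(400, 1000, 1500, 0.25)` comes to 525. Billing clients 2,100 for that workload would leave a gross margin of 0.75 before labor.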

Step-by-step evaluation workflow (agency-ready)

1. Define scope and success criteria: Align on target query clusters (branded, category, competitor) and engines. Decide which KPIs matter most (visibility/SoV, citation positions, volatility).

2. Verify engine list and measurement method: Request documentation on sampling templates, cadence, cache handling, and geo/device coverage. Confirm how the tool separates AI Overviews from AI Mode in its reporting.

3. Run a 4–6 week PoC sampling plan: Test 50+ queries across regions. Log timestamps, engines, and response excerpts. Spot-check manually to validate citations and positions.

4. Validate geo/device parity and rollout differences: Use VPNs for US, UK, EU, AU, APAC. Test desktop vs mobile for AI Overviews and AI Mode. Document where behavior diverges.

5. Stress-test absence alerting and root-cause guidance: Simulate content changes on a few pages. Measure how quickly alerts arrive and whether the recommended fixes address the change.

6. Examine recommendations and workflow fit: Ensure tasks map to affected queries and engines, include priority and effort, and export cleanly to your PM tools.

7. Confirm governance and data portability: Provision a white-label/CNAME demo. Check RBAC, SSO, and audit logs, then run a 1,000-row export and confirm it includes timestamp, engine, query, and citation list.

8. Review security, compliance, and pricing clarity: Request SOC 2/GDPR docs, rate limits, overage policies, and any add-on costs. Forecast margins for your client portfolio.
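The export check in the workflow above can be partly automated. Here is a minimal sketch that validates a CSV export, assuming the columns are named `timestamp`, `engine`, `query`, and `citations`; those names are hypothetical, so adjust them to whatever the vendor actually emits.

```python
import csv
import io
from datetime import datetime

# Assumed column names; real exports may label these differently.
REQUIRED = ("timestamp", "engine", "query", "citations")


def validate_export(csv_text):
    """Return a list of (row_number, problem) for a vendor CSV export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED if c not in (reader.fieldnames or [])]
    if missing:
        return [(0, f"missing columns: {', '.join(missing)}")]

    problems = []
    for i, row in enumerate(reader, start=1):
        try:
            # Accepts the ISO 8601 forms that datetime.fromisoformat supports.
            datetime.fromisoformat(row["timestamp"])
        except (TypeError, ValueError):
            problems.append((i, "timestamp is not ISO 8601"))
        if not row["engine"] or not row["query"]:
            problems.append((i, "empty engine or query"))
    return problems
```

An empty result means every row carries a parseable timestamp plus an engine and query, which is the minimum you need to audit a "real-time" claim.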

Comparison matrix: typical tool approaches

| Approach | Coverage focus | Method transparency | Exports/API | White-label depth | Recommendations |
|---|---|---|---|---|---|
| Scrape-first trackers | Strong on visual capture of AI cards/answers | Often partial (interface-based) | Usually CSV; API varies | Basic branding | Limited or none |
| Hybrid monitoring platforms | Balanced cross-engine coverage with mixed sampling | Moderate to high (cadence + cache notes) | CSV/JSON; stable APIs | Templates + CNAME options | Task queues with priorities |
| All-in-one agency suites | Broader martech integrations; AEO/GEO as a module | Varies; depends on suite | Rich exports + connectors | Deep white-label, multi-tenant | Playbooks tied to KPIs |

Practical example: how agencies operationalize cross-engine visibility

Disclosure: Geneo is our product.

An agency tracks a “best [product] for [use case]” query cluster across ChatGPT, Perplexity, and Google AI Overviews. They monitor visibility and share of voice, then use a visibility score to prioritize fixes: tighten schema on weak pages, add FAQs to capture conversational intents, and strengthen entity signals for ambiguous topics. Recommendations are exported into the team’s PM tool, and a white-label report compiles changes, trends, and competitive shifts for the client. For agency-grade features like CNAME and public pricing tiers, see Geneo’s agency page.

Pitfalls and validation tips

Don’t rely on Search Console alone for AI surfaces. As of mid‑2025, Google blends AI Mode data into standard Web totals without a dedicated filter. See Search Engine Land’s coverage (June 16, 2025).

Expect reported AI-summary prevalence to differ by source and timeframe. Studies range from around one‑in‑five searches showing AI summaries in March 2025 (Pew) to much higher prevalence later in the year in specific cohorts. For context, review Pew’s July 2025 short read.

Beware “real-time” claims without audit logs. Require timestamps, engine details, and response excerpts in exports.

Validate absence alerts and root-cause guidance during your PoC; they matter more than pretty charts.

Wrap-up and next step

If you’re evaluating AEO/GEO platforms for multi-engine monitoring, actionable recommendations, agency-grade governance, and pricing clarity, use the workflow above to separate signal from noise. Ready to see your AI visibility baseline and where to improve? Start Free Analysis.