AI Visibility Ultimate Guide for Marketing Agencies

Discover the complete guide to AI visibility—learn actionable strategies for agencies to measure, improve, and report cross-platform AI search exposure. Book a demo now!

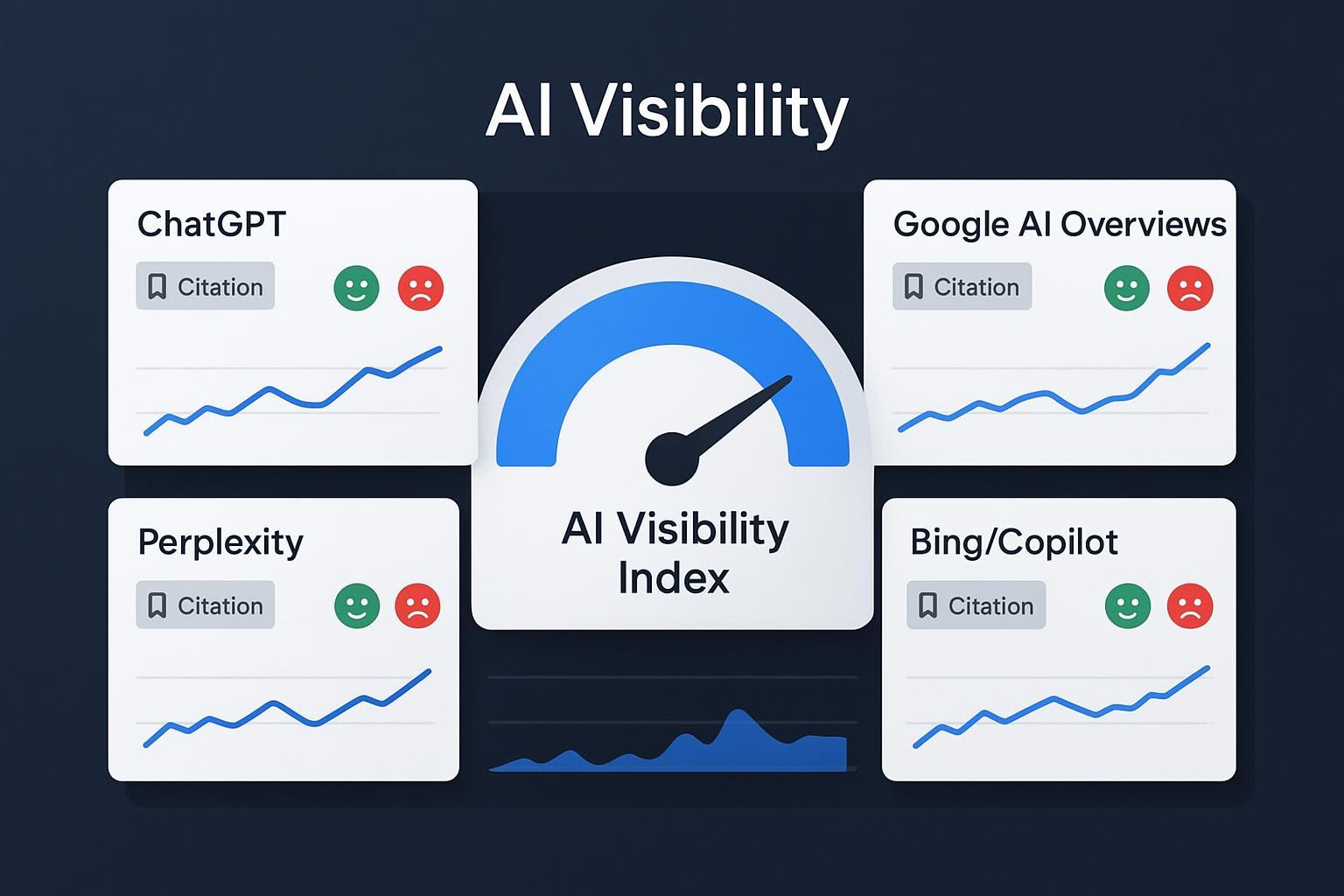

Your client asks two questions you can’t dodge anymore: Why did organic traffic dip even though we held rankings, and how will you prove ROI from AI search? Here’s the short answer: rankings aren’t the only game now. AI answers—on ChatGPT, Perplexity, Google’s AI Overviews/Gemini, and Bing/Copilot—are siphoning attention. Your brand’s presence inside those answers is your new visibility lever.

This guide defines AI visibility in practical terms, shows how to measure it across engines, and gives you an agency-friendly workflow to improve it. Use it to explain traffic changes with evidence, focus your optimization roadmap, and set up reporting clients can trust.

What “AI Visibility” Means (and What It’s Not)

AI visibility is the frequency, prominence, accuracy, and tone of your brand’s inclusion or citation inside AI-generated answers across ChatGPT, Perplexity, Google AI Overviews/Gemini, and Bing/Copilot. Think of it as “share of recommendation” when an answer engine composes a summarized response.

It’s not the same as traditional SEO visibility, which is dominated by position-based rankings and SERP clicks. We’re moving from an index-and-rank paradigm to retrieve-and-generate, where citation and provenance signals matter as much as “position.” If you want a deeper conceptual dive, see our explainer on brand exposure in AI search: What Is AI Visibility? Brand Exposure in AI Search Explained.

Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) are the practices that improve AI visibility by making content and entities easy for models to retrieve, trust, and cite. For a broader industry context, Amsive outlines how AEO is evolving in the AI era in their guide, Answer Engine Optimization in the age of AI search (2025).

Why AI Visibility Matters: The Traffic and CTR Evidence

Across multiple 2024–2025 datasets, queries that trigger Google AI Overviews (AIO) show reduced click-through rates. Brands cited in those AI answers often retain more clicks than those omitted—call it the “citation effect.”

| Study (date) | Scope | Finding on AIO queries | Notes and effect for cited brands |
|---|---|---|---|
| Seer Interactive (Sept 2025) | 3,119 informational queries across 42 orgs | Overall organic CTR fell from 1.76% to 0.61% (−61%) | Cited brands saw ~35% more organic clicks and ~91% more paid clicks vs non-cited brands; see the analysis in Seer's September 2025 update and Search Engine Land's coverage. |
| Digital Content Next (May 2025) | Publisher cohort | Desktop CTR for the #1 result dropped from 7.3% (Mar 2024) to 2.6% (Mar 2025) when AIO appeared | Directional support for significant top-slot CTR compression; see DCN's report. |
| Semrush (Dec 2025) | 10M+ keywords | Substantial CTR reductions and more zero-click behavior where AIO appears (varies by vertical) | Vertical-specific differences matter; see Semrush's AI Overviews study. |

What this means for your client conversations: A page-one rank can coexist with lower clicks if an AIO steals attention. When you’re cited under the AIO, residual clicks and brand lift improve relative to being excluded. Your goal isn’t only “rank”—it’s “be the source.” For a forward view on planning under uncertainty, see our guidance on scenario planning: How to Prepare for a 50% Organic Search Traffic Drop by 2028.

How to Measure AI Visibility Across Engines

You can—and should—measure AI visibility with a reproducible framework. Below is a method agencies can run monthly or quarterly.

Define scope and queries: Pick engines (e.g., ChatGPT, Perplexity, Google AIO/Gemini, Bing/Copilot) and locales. Build a balanced prompt/query set that reflects key intents and funnel stages.

Capture answers consistently: Use compliant APIs or controlled front-end methods to capture answers, citations, and metadata. Store raw outputs and timestamps for audits.
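A minimal sketch of the capture step, assuming you already obtain answer text and source URLs through whatever compliant method you use (no engine API is called here). The `CapturedAnswer` fields, the `sweep.jsonl` file name, and the helper names are hypothetical; the point is an append-only log with timestamps and a content hash so every later score can be traced back to a raw answer.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from pathlib import Path


@dataclass
class CapturedAnswer:
    """One raw answer from one engine for one prompt (hypothetical schema)."""
    engine: str        # e.g. "perplexity"
    locale: str        # e.g. "en-US"
    prompt: str
    answer_text: str
    citations: list[str] = field(default_factory=list)  # source URLs as displayed
    captured_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """SHA-256 of the answer content; the timestamp is excluded on purpose
        so identical answers from different sweeps hash the same."""
        payload = json.dumps(
            [self.engine, self.locale, self.prompt, self.answer_text, self.citations],
            ensure_ascii=False,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_capture(path: Path, record: CapturedAnswer) -> None:
    """Append one record as a JSON line; existing lines are never rewritten."""
    row = {**asdict(record), "sha256": record.fingerprint()}
    with path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(row, ensure_ascii=False) + "\n")


def load_captures(path: Path) -> list[dict]:
    """Read every stored capture back for parsing and audits."""
    with path.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]
```

Append-only JSON Lines keeps each sweep auditable and easy to diff, and a matching hash across sweeps is a quick signal that an engine's answer has not changed.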

Extract structured signals: Parse each answer for brand mentions, citation URLs, presence of links, and position in the answer. Score prominence (primary recommendation vs supporting citation). Evaluate sentiment around your brand, and flag hallucinations/misattributions. Track "grounding rate" (the share of answers backed by valid sources, as opposed to ungrounded answers).
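The extraction step might look like the following sketch. It assumes you maintain a list of brand names and owned domains per client. Mention detection is plain word-boundary matching, "prominence" uses a hypothetical rule (a mention in the first 20% of the answer counts as primary), and `grounded` only checks that some source is shown, not that the source actually supports the claim. Sentiment scoring and hallucination review are left out because they usually need a classifier or a human reviewer.

```python
import re
from urllib.parse import urlparse


def host_of(url: str) -> str:
    """Lower-cased host without a leading 'www.' (a simplification, not a public-suffix lookup)."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def extract_signals(answer_text: str, citations: list[str],
                    brand_names: list[str], brand_domains: list[str]) -> dict:
    """Pull basic visibility signals for one brand out of one captured answer."""
    text = answer_text.lower()
    # Character offset of the earliest brand-name mention (None if absent).
    offsets = [m.start() for name in brand_names
               for m in re.finditer(r"\b" + re.escape(name.lower()) + r"\b", text)]
    first_mention = min(offsets) if offsets else None

    # 1-based positions of citations pointing at the brand's own domains or subdomains.
    own = {d.lower() for d in brand_domains}
    cited_positions = [i + 1 for i, url in enumerate(citations)
                       if (h := host_of(url)) in own or any(h.endswith("." + d) for d in own)]

    # Hypothetical prominence rule: mentioned in the first 20% of the answer = primary.
    if first_mention is None:
        prominence = "absent"
    elif first_mention <= 0.2 * max(len(text), 1):
        prominence = "primary"
    else:
        prominence = "supporting"

    return {
        "mentioned": first_mention is not None,
        "cited": bool(cited_positions),
        "best_citation_position": min(cited_positions) if cited_positions else None,
        "prominence": prominence,
        "grounded": bool(citations),  # at least one source shown; validity not checked
    }
```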

Build composite metrics and benchmark competitors: Compute AI Share of Voice (AI SOV: % of tested prompts where your brand is cited or mentioned), citation frequency and average citation position, a prominence-weighted citation score, a sentiment/representation score, and a grounding accuracy rate. Repeat identical steps for 3–5 key competitors and calculate relative SOV and trend deltas. Finally, link visibility changes to GA4/GSC and CRM/marketing automation data to identify likely lift windows. For a practical approach to readiness and monitoring, see Conductor's 2025 guidance on measuring AI search readiness.
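Once each (prompt, brand) pair has signals, most of the composite metrics above reduce to simple aggregation. This sketch assumes one row per brand per tested prompt, with `prompt`, `brand`, `mentioned`, `cited`, `position`, and `prominence` keys; the prominence weights (1.0 / 0.5 / 0.0) are illustrative, not an industry standard.

```python
from collections import defaultdict
from statistics import mean

# Illustrative weights for the prominence-weighted score; tune per client.
PROMINENCE_WEIGHTS = {"primary": 1.0, "supporting": 0.5, "absent": 0.0}


def brand_metrics(rows: list[dict]) -> dict[str, dict]:
    """Aggregate per-brand metrics.

    Each row describes one brand in one tested prompt, with keys:
    prompt, brand, mentioned (bool), cited (bool), position (int | None), prominence.
    """
    by_brand: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        by_brand[row["brand"]].append(row)

    out: dict[str, dict] = {}
    for brand, brand_rows in by_brand.items():
        tested = {r["prompt"] for r in brand_rows}
        visible = {r["prompt"] for r in brand_rows if r["mentioned"] or r["cited"]}
        positions = [r["position"] for r in brand_rows
                     if r["cited"] and r["position"] is not None]
        out[brand] = {
            # AI SOV: share of tested prompts where the brand is cited or mentioned.
            "ai_sov": len(visible) / len(tested),
            "citations": sum(1 for r in brand_rows if r["cited"]),
            "avg_citation_position": mean(positions) if positions else None,
            "prominence_score": mean(PROMINENCE_WEIGHTS[r["prominence"]] for r in brand_rows),
        }

    # Relative SOV: each brand's share of the combined SOV of the benchmark set.
    total = sum(m["ai_sov"] for m in out.values()) or 1.0
    for m in out.values():
        m["relative_sov"] = m["ai_sov"] / total
    return out
```

Running the same function over your brand and 3–5 competitors from the same sweep keeps the comparison apples to apples; trend deltas are then just the difference between two sweeps' outputs.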

Platform Differences You Should Plan Around

Answer engines vary in how they select, display, and refresh sources, and in how transparent they are about it. Google (AI Overviews/AI Mode) lets site owners influence display with preview controls such as nosnippet, data-nosnippet, max-snippet, and noindex; see Google's May 2025 guidance in AI features and your website and its companion blog post on succeeding in AI search. OpenAI's announcement, Introducing ChatGPT Search, describes timely answers with visible links and a Sources sidebar so users can go straight to the source. Perplexity is built around retrieval, with inline citations and a multi-pass "Deep Research" mode that emphasizes verifiable sources; for a summary, see Deep Research and retrieval.
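To check which Google preview controls a page actually sets, a small HTML audit is enough for a first pass. This sketch uses Python's standard-library `html.parser`: it reads `<meta name="robots">` and `<meta name="googlebot">` directives and counts elements carrying `data-nosnippet`. It does not inspect the `X-Robots-Tag` HTTP header, which can also carry these directives, so treat it as a partial check.

```python
from html.parser import HTMLParser


class PreviewControlAudit(HTMLParser):
    """Collect robots meta directives and count data-nosnippet elements."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()
        self.data_nosnippet_elements = 0

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)  # boolean attributes arrive with value None
        name = (attr_map.get("name") or "").lower()
        # "robots" applies to all crawlers; "googlebot" targets Google's crawler only.
        if tag == "meta" and name in {"robots", "googlebot"}:
            for part in (attr_map.get("content") or "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())
        if "data-nosnippet" in attr_map:
            self.data_nosnippet_elements += 1


def audit_preview_controls(html: str) -> dict:
    """Summarize the HTML-level preview controls found on one page."""
    parser = PreviewControlAudit()
    parser.feed(html)
    parser.close()
    d = parser.directives
    max_snippet = next(
        (x.split(":", 1)[1].strip() for x in d if x.startswith("max-snippet:")), None
    )
    return {
        "noindex": "noindex" in d,
        "nosnippet": "nosnippet" in d,
        "max_snippet": max_snippet,
        "data_nosnippet_elements": parser.data_nosnippet_elements,
    }
```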

Industry testing also notes inconsistencies in attribution quality across AI search tools. The Tow Center for Digital Journalism, publishing in Columbia Journalism Review, compared eight engines and found poor citation accuracy for news queries; see the March 2025 analysis, We compared eight AI search engines; they're all bad at citing news. The implication for agencies: reinforce provenance and freshness, monitor for misattribution, and document incident response. If you want a closer look at engine-by-engine differences and monitoring tactics, we break them down here: ChatGPT vs Perplexity vs Gemini vs Bing: AI Search Monitoring Comparison.

The 80/20 Optimization Checklist for AEO/GEO

If you only do a handful of things, prioritize these levers. They consistently move the needle across engines.

Answer-first content shape: Lead pages with a crisp 50–80-word direct answer, then support with evidence.

Structured data (JSON-LD): Implement FAQPage, HowTo, Organization, Person, Product, Article/NewsArticle, Dataset, BreadcrumbList, and Speakable where appropriate.

Authority and provenance: Show author credentials and expertise; cite primary sources; publish original data. For why some brands get named more often, see: Why ChatGPT Mentions Certain Brands.

Extraction-friendly formatting: Use question-based H2/H3s, short paragraphs, and tidy tables for facts.

Freshness and change logs: Maintain visible dates and revision notes on time-sensitive pages.

Technical accessibility: Ensure fast loads, schema validity, and crawlability; avoid JS-only critical text.
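Two of the checklist items lend themselves to quick automation. The sketch below checks whether a lead answer falls in the 50–80-word band and builds schema.org `FAQPage` JSON-LD from question/answer pairs. The function names are hypothetical, and valid markup is a prerequisite rather than a guarantee of any particular display in search or AI answers.

```python
import json


def answer_first_ok(answer: str, low: int = 50, high: int = 80) -> bool:
    """True if the lead answer's word count is inside the suggested band."""
    return low <= len(answer.split()) <= high


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Paste the result into a <script type="application/ld+json"> element on the page.
    return json.dumps(data, indent=2, ensure_ascii=False)
```

Run the generated markup through a schema validator before shipping; the Q&A text should match what is visibly on the page.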

A Practical Agency Workflow (With a Neutral Tool Example)

Here’s an end-to-end process you can adopt and scale, whether you prefer internal scripts, enterprise suites, or specialized tools.

Scope and governance: Define use cases, topics, engines, locales, and a risk policy for hallucinations and sensitive content.

Synthetic sweeps and capture: Run controlled weekly or monthly sweeps across your query set. Store answers, citations, and metadata in a repository.

Parsing and scoring: Extract mentions and URLs; score prominence, sentiment, and grounding. Generate an AI Visibility Index combining AI SOV, prominence-weighted scores, sentiment share, and grounding rate. Replicate these steps for 3–5 competitors to identify gaps by topic, engine, and intent. Convert findings into a prioritized content backlog: target topics where visibility is weak, rework pages that appear but lack prominence, and pitch net-new assets to fill gaps.
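The guide names the components of the AI Visibility Index but not their weighting, so the weights below are placeholders to tune per client. This sketch expects each component already scaled to 0..1 and returns a 0–100 score; the hypothetical `gap_backlog` helper then ranks (topic, engine) cells by how far you trail the strongest competitor, which maps onto the prioritized backlog described above.

```python
# Placeholder weights (sum to 1.0); not a standard, adjust per client.
WEIGHTS = {
    "ai_sov": 0.40,
    "prominence": 0.25,
    "positive_sentiment_share": 0.20,
    "grounding_rate": 0.15,
}

Cell = tuple[str, str]  # (topic, engine)


def visibility_index(components: dict[str, float]) -> float:
    """Weighted 0-100 index; every component must already be scaled to [0, 1]."""
    missing = WEIGHTS.keys() - components.keys()
    if missing:
        raise KeyError(f"missing components: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= components[name] <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {components[name]}")
    return 100 * sum(weight * components[name] for name, weight in WEIGHTS.items())


def gap_backlog(ours: dict[Cell, float], best_rival: dict[Cell, float]) -> list[tuple[Cell, float]]:
    """Rank (topic, engine) cells by how far we trail the strongest competitor's SOV."""
    gaps = [(cell, rival - ours.get(cell, 0.0)) for cell, rival in best_rival.items()]
    return sorted((g for g in gaps if g[1] > 0), key=lambda g: g[1], reverse=True)
```

Keeping the weights in one visible constant makes it easy to document them in the client report and to hold them fixed across sweeps so trend lines stay comparable.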

Optimization, implementation, and measurement: Apply the 80/20 checklist (answer-first, schema, authority signals, extraction-friendly structure). Update or publish, then re-measure on the next sweep. Correlate index changes with GA4/GSC and CRM metrics. Use branded search lift and citation-to-CTR parameters to estimate impact ranges. Monitor for hallucinations/misattributions and escalate with evidence when needed.

Neutral example: You can operationalize this with traditional SEO stacks, homegrown scripts, or dedicated platforms. One option is Geneo (Disclosure: Geneo is our product), which can be used to monitor multi-engine AI visibility (e.g., ChatGPT, Google AI Overviews, Perplexity), benchmark competitors, analyze sentiment, and generate a prioritized content roadmap with rewrite suggestions. Alternatives include suites like Semrush's AI tooling, enterprise platforms like seoClarity/ArcAI, knowledge-graph-centric approaches like Yext, or fully in-house crawlers when engineering resources allow.

Reporting, ROI, and Risk Governance

Clients don’t just want dashboards; they want a narrative that ties exposure to outcomes. A pragmatic monthly report covers baseline vs current AI Visibility Index by engine/topic, competitive AI Share of Voice and momentum, top cited pages and queries, gaps by engine and intent, sentiment/representation quality, and downstream changes in branded search, direct traffic, and conversions.

Two attribution approaches help connect visibility to business value. First, Branded Search Lift Attribution: track changes in AI citation share and correlate them with branded search volume and revenue/leads over a defined window (e.g., 7–28 days). A practical formula is: Branded Search Lift Revenue = (Current Branded Search Revenue − Baseline Branded Search Revenue) × AI Attribution Factor, where the AI Attribution Factor is the share of the change (between 0 and 1) that you credit to AI visibility. Choose the factor after you assess correlation strength and triangulate with other signals. Industry practice and measurement roundups discuss this pattern; see presenceAI's overview on AI search attribution models and NAV43's guide to measuring AI SEO and visibility.
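The branded search lift formula translates directly into code. In this sketch the attribution factor is treated as a share between 0 and 1, and the revenue figures in the usage loop are hypothetical; running it over a few candidate factors makes the resulting range explicit in the report instead of implying false precision.

```python
def branded_search_lift_revenue(current: float, baseline: float,
                                ai_attribution_factor: float) -> float:
    """(Current branded search revenue - baseline) x AI attribution factor."""
    if not 0.0 <= ai_attribution_factor <= 1.0:
        raise ValueError("the attribution factor is a share between 0 and 1")
    return (current - baseline) * ai_attribution_factor


# Report a range rather than a single number; revenue inputs are hypothetical.
for factor in (0.1, 0.25, 0.4):
    lift = branded_search_lift_revenue(120_000, 100_000, factor)
    print(f"factor {factor:.2f}: {lift:,.0f}")
```

A negative result is meaningful too: it flags a period where branded revenue fell below baseline despite (or because of) visibility changes.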

Second, Citation-to-CTR Lift: on AIO-triggering queries, parameterize the expected CTR difference between queries where you're cited and those where you're not. For directional inputs, Seer's 2025 update (cited earlier) quantifies CTR compression and a relative advantage for cited brands; treat those figures as starting assumptions to check against the client's own data, not as fixed benchmarks.
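A citation-to-CTR model can be as simple as impressions × CTR, with CTR scaled up when the brand is cited. This sketch assumes the cited-vs-non-cited difference behaves like a constant multiplier, which is a simplification. The 0.61% CTR and ~35% advantage in the usage lines echo the directional Seer figures quoted above and are placeholders, not client benchmarks; the queries and impression counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AioQuery:
    query: str
    monthly_impressions: int
    cited: bool  # is the brand cited in the AI Overview for this query?


def expected_clicks(q: AioQuery, base_ctr: float, cited_uplift: float) -> float:
    """Impressions x CTR, with CTR multiplied by (1 + cited_uplift) when cited."""
    ctr = base_ctr * (1 + cited_uplift) if q.cited else base_ctr
    return q.monthly_impressions * ctr


def citation_upside(queries: list[AioQuery], base_ctr: float, cited_uplift: float) -> float:
    """Extra monthly clicks if every currently uncited query gained a citation."""
    return sum(
        expected_clicks(AioQuery(q.query, q.monthly_impressions, True), base_ctr, cited_uplift)
        - expected_clicks(q, base_ctr, cited_uplift)
        for q in queries
        if not q.cited
    )


# Hypothetical queries; base_ctr and cited_uplift are directional placeholders.
queries = [
    AioQuery("best crm for agencies", 12_000, cited=False),
    AioQuery("crm pricing comparison", 8_000, cited=True),
]
print(round(citation_upside(queries, base_ctr=0.0061, cited_uplift=0.35), 1))
```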

Treat AI visibility like a new channel with real brand risk. Build a lightweight governance loop: form a small governance group (SEO, legal, PR, product, security) with clear incident SLAs; make provenance obvious with valid schema, expert bylines, and citations to primary sources; use Google preview controls (nosnippet, data-nosnippet, max-snippet, noindex) for sensitive or easily misquoted content; instrument synthetic sweeps on high-risk topics; and follow an incident path of evidence capture → verification → on-site correction → platform feedback/legal escalation as needed → verification sweep → post-incident review.
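The incident path can be enforced as a small state machine so that no step is skipped and every transition is logged. The stage names come from the path above; the allowed transitions, including closing a false alarm after verification and looping back to escalation when a verification sweep still shows the error, are assumptions to adapt to your own SLAs.

```python
from enum import Enum


class Stage(Enum):
    EVIDENCE_CAPTURE = "evidence capture"
    VERIFICATION = "verification"
    ONSITE_CORRECTION = "on-site correction"
    ESCALATION = "platform feedback / legal escalation"
    VERIFICATION_SWEEP = "verification sweep"
    POST_INCIDENT_REVIEW = "post-incident review"
    CLOSED = "closed"


# Assumed transitions: escalation is optional ("as needed"), a false alarm can close
# after verification, and a failed verification sweep loops back to escalation.
NEXT: dict[Stage, set[Stage]] = {
    Stage.EVIDENCE_CAPTURE: {Stage.VERIFICATION},
    Stage.VERIFICATION: {Stage.ONSITE_CORRECTION, Stage.CLOSED},
    Stage.ONSITE_CORRECTION: {Stage.ESCALATION, Stage.VERIFICATION_SWEEP},
    Stage.ESCALATION: {Stage.VERIFICATION_SWEEP},
    Stage.VERIFICATION_SWEEP: {Stage.POST_INCIDENT_REVIEW, Stage.ESCALATION},
    Stage.POST_INCIDENT_REVIEW: {Stage.CLOSED},
    Stage.CLOSED: set(),
}


class Incident:
    """Tracks one misattribution or hallucination incident through the path."""

    def __init__(self, summary: str) -> None:
        self.summary = summary
        self.stage = Stage.EVIDENCE_CAPTURE
        self.history = [self.stage]

    def advance(self, to: Stage) -> None:
        """Move to the next stage, rejecting transitions the path does not allow."""
        if to not in NEXT[self.stage]:
            raise ValueError(f"cannot move from {self.stage.value} to {to.value}")
        self.stage = to
        self.history.append(to)
```

The `history` list doubles as the audit trail for the post-incident review and for SLA reporting.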

Next Steps for Agencies

You don’t need to overhaul everything at once. Start with a tight rollout:

Week 1: Align on engines, locales, and a 100–200 prompt set. Define governance basics.

Week 2: Run a baseline sweep and compute your AI Visibility Index. Identify three priority topics per engine.

Weeks 3–4: Ship optimizations and one net-new asset per priority. Set up dashboards for AI SOV, sentiment, and grounding rate.

Month 2: Refresh sweeps, measure deltas, and expand competitive benchmarking.

If you want to accelerate setup and get an agency-ready measurement and content roadmap out of the box, book a short product demo. We’ll show how multi-engine monitoring, sentiment, and prioritized rewrite suggestions slot into your existing SEO/reporting stack.

Ready to turn AI answers into your next growth channel? Book a demo.