AI Visibility Audit for Brands: The Ultimate Guide

Master AI visibility audits for brands with key definitions, metrics, and a step-by-step checklist. Learn GEO/AEO frameworks and competitive benchmarking. Start your audit now!

Why your brand’s AI visibility now determines discovery

If an answer engine gives people everything they need, they click less. That’s not a hunch; it shows up in user behavior when Google displays AI Overviews. In July 2025, the Pew Research Center reported that users who saw an AI summary were less likely to click through to links than users who did not, which raises the stakes for being cited or mentioned inside the summary itself; see Pew’s report, Google users are less likely to click on links when an AI summary appears (2025).

At the same time, measurement thinking has shifted. Rankings are no longer a sufficient proxy for exposure in AI-synthesized results. Industry guidance emphasizes tracking mentions, citations, and prominence across engines and mapping those signals to business outcomes; Search Engine Land’s 2025 guidance on how to measure brand visibility in AI search lays out this perspective.

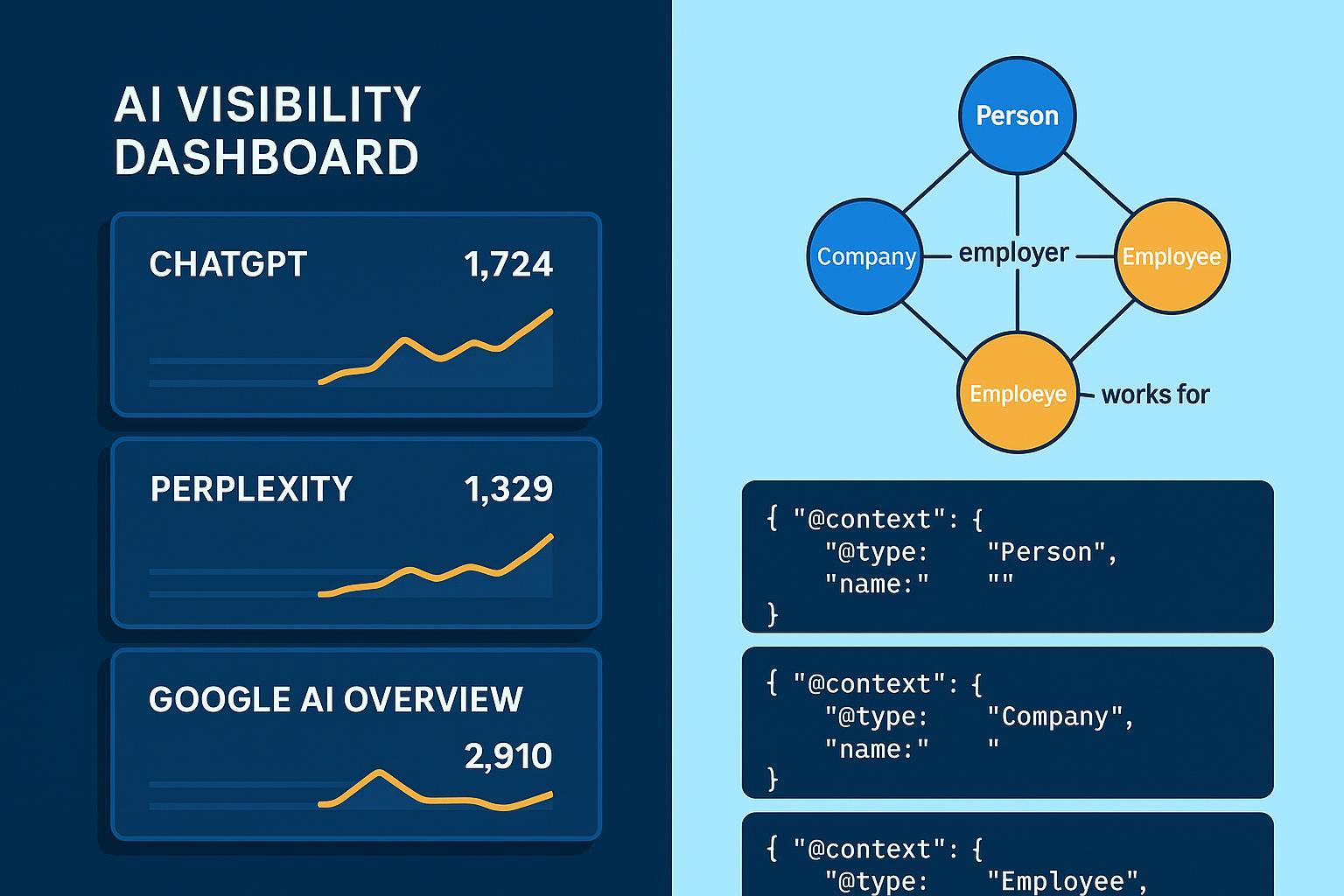

Here’s the deal: an AI visibility audit shows whether your brand is present, credited, and competitively positioned inside AI answers on ChatGPT, Perplexity, Google AI Overviews, and Bing Copilot, and what to fix next.

Glossary: GEO, AEO, and how engines attribute sources

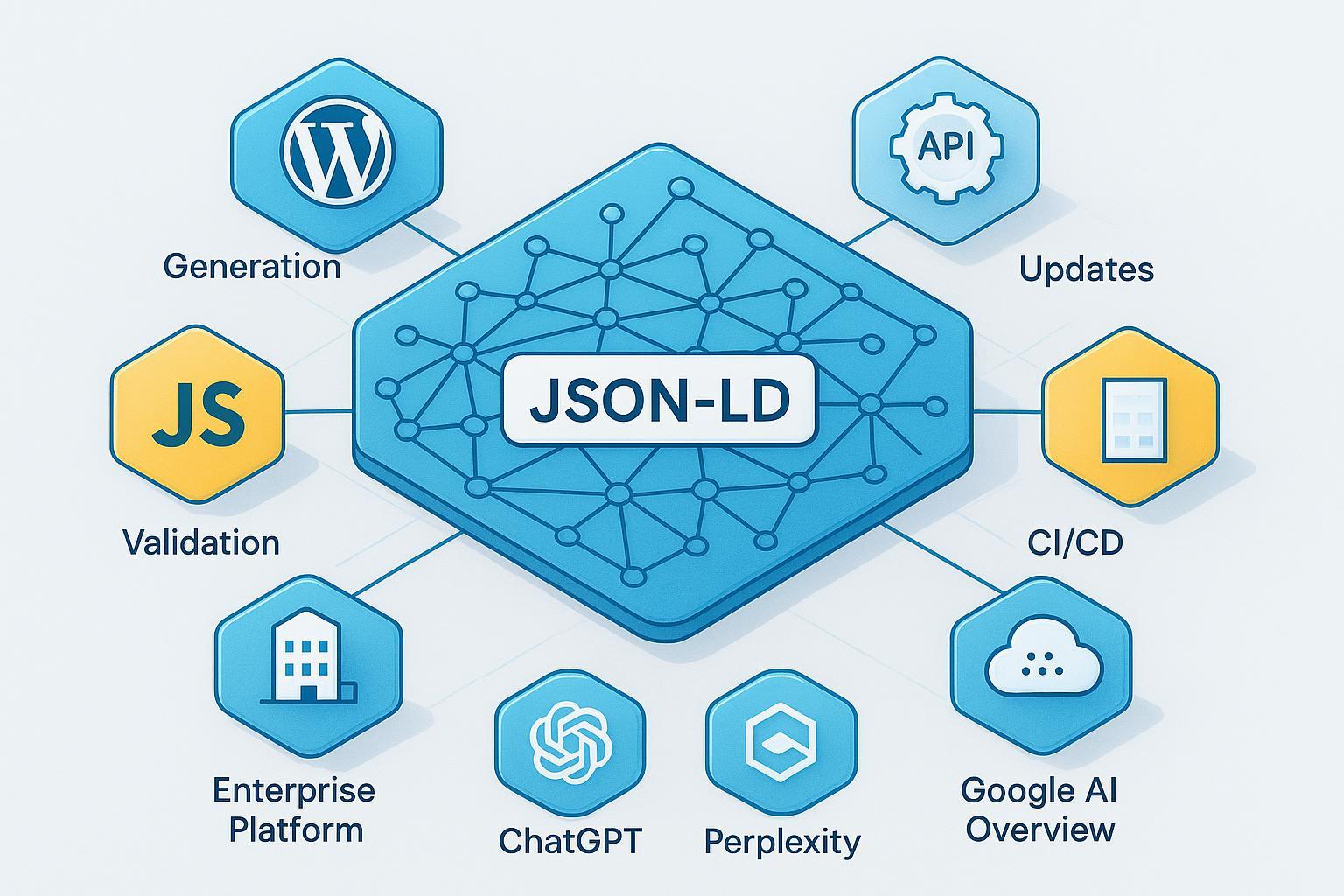

Generative Engine Optimization (GEO): The practice of aligning your content, entities, and brand signals so your brand appears, and is cited, within synthesized AI answers across engines. It emphasizes entity clarity, supporting evidence, and iterative testing. For a deeper conceptual primer, see our explainer on AI visibility and brand exposure in AI search.

Answer Engine Optimization (AEO): The practice of making content extraction-friendly for answer engines through structured Q&A, schema, and evidence that supports concise, direct answers. Several guides frame AEO as a stepping stone toward broader GEO practices; Conductor’s academy definition of answer engine optimization (2025) provides a balanced overview of AEO’s scope.

Platform behaviors that shape what you can measure:

ChatGPT: Citation display varies by mode. With browsing or search enabled, sources may appear inline or as a reference list, but whether they appear at all can vary across sessions. Practitioner testing reports suggest that encyclopedic and community sources are cited frequently.

Perplexity: Consistently exposes a sources panel and provides a structured search_results array in the API, with titles, URLs, and dates, which makes it well suited to verifiable audits. See Perplexity’s documentation on the chat completions guide and search control (2025–2026).

Google AI Overviews (AIO): Third-party tracking shows that AIO appears on a substantial share of queries and that citation visibility correlates with authority, structure, and freshness. SISTRIX’s analysis of the most-cited websites in AIO (2025) highlights patterns in what gets cited.

Bing Copilot: Microsoft positions citations prominently to support publisher attribution and verifiability. Its 2025 announcement, Introducing Copilot Search in Bing, discusses the design intent behind source linking.
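Because Perplexity returns sources as structured data, citation capture is the easiest part of an audit to script. The sketch below is a minimal, hedged example. It assumes you have already called the API and parsed the JSON response into a dict, and that `search_results` entries carry `title`, `url`, and `date` fields as Perplexity's documentation describes (verify against the current API reference). The helper names `extract_citations` and `cites_domain`, the sample payload, and the `.example` domains are hypothetical.

```python
from urllib.parse import urlparse


def extract_citations(response: dict) -> list:
    """Pull title, URL, date, and bare domain from a Perplexity-style response.

    Assumes a top-level "search_results" list, per Perplexity's chat
    completions docs; any missing field becomes None.
    """
    citations = []
    for result in response.get("search_results") or []:
        url = result.get("url")
        domain = urlparse(url).netloc.lower() if url else None
        # Strip a leading "www." so www.example.com and example.com match.
        if domain and domain.startswith("www."):
            domain = domain[4:]
        citations.append(
            {
                "title": result.get("title"),
                "url": url,
                "date": result.get("date"),
                "domain": domain,
            }
        )
    return citations


def cites_domain(response: dict, domain: str) -> bool:
    """True if any cited source is on `domain` or one of its subdomains."""
    domain = domain.lower()
    return any(
        c["domain"] == domain or (c["domain"] or "").endswith("." + domain)
        for c in extract_citations(response)
    )


# Hypothetical payload shaped like a Perplexity response (not real output).
sample = {
    "choices": [{"message": {"content": "Acme and Globex are popular choices..."}}],
    "search_results": [
        {"title": "Acme pricing", "url": "https://www.acme.example/pricing", "date": "2025-05-01"},
        {"title": "Review roundup", "url": "https://news.example.org/best-tools"},
    ],
}

print([c["domain"] for c in extract_citations(sample)])
print(cites_domain(sample, "acme.example"))
```

Matching on subdomains means a citation to `docs.acme.example` still counts as credit to `acme.example`; tighten this if you track product subdomains separately.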

If you think of traditional SEO as competing for ranked blue links, GEO/AEO is competing for narrative inclusion and credit inside the answer. To understand how this relates to old-school SEO practices, here’s a side-by-side discussion of traditional SEO vs. GEO.

The metrics that matter (and how to measure them)

Below are the core KPIs practitioners use to evaluate AI visibility. They prioritize cross-engine share of mentions, citation/link visibility and authority, and gaps vs. top competitors. We add intent coverage to ensure your audit sees the full funnel—brand, category, and non-brand scenarios.

| Metric | What it means | How to measure | When it matters |
|---|---|---|---|
| Brand visibility score | Percent of AI answers in your tracked space that include your brand | Answers mentioning your brand ÷ total answers in your prompt set; compute per engine and overall | Baseline presence; weekly trend tracking; executive rollups |
| Share of voice (SOV) | Your mentions relative to peers across engines | Your mentions ÷ (your mentions + mentions of 3–5 key competitors) | Competitive positioning and gap analysis |
| Citation rate | Percent of answers mentioning you that also link to your domain/content | Answers that include a link to your domain ÷ total answers mentioning you | Demonstrating credit/attribution, not just mentions |
| Prominence | Placement and emphasis of your brand within the answer | Score top vs. bottom of answer, headline vs. footnote, and list position | Prioritizing fixes for “buried” mentions |
| Sentiment/stance | Polarity of how your brand is framed | Manual or model-assisted labeling of answer tone | Risk monitoring and messaging adjustments |
| Intent coverage | Presence across brand, category, and non-brand scenarios | Coverage rate per intent cluster, reviewed alongside prominence and citation authority | Ensures you don’t win only on branded queries |
| Citation authority mix | Reputation profile of domains that cite you (news, .gov/.edu, expert blogs) | Classify citing sources; monitor recency and bylines | Guides PR/earned media to raise citation eligibility |
| Trend velocity/volatility | Speed and variability of change week over week | Week-over-week deltas in the above metrics, per engine | Resource allocation and experiment cadence |
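The first three formulas in the table reduce to simple ratios over a log of captured answers. The sketch below assumes each answer is recorded as a small dict holding the engine, the set of tracked brands it mentions, and whether it links to your domain; the brand names and field names are hypothetical placeholders, and per-engine breakdowns are the same functions applied to a filtered list.

```python
from collections import Counter

# Hypothetical answer log: one dict per AI answer captured during the audit.
answers = [
    {"engine": "perplexity", "mentions": {"Acme", "Globex"}, "links_to_us": True},
    {"engine": "perplexity", "mentions": {"Globex"}, "links_to_us": False},
    {"engine": "chatgpt", "mentions": {"Acme", "Initech"}, "links_to_us": False},
    {"engine": "chatgpt", "mentions": set(), "links_to_us": False},
]

BRAND = "Acme"
COMPETITORS = {"Globex", "Initech"}


def visibility_score(rows, brand=BRAND):
    """Answers mentioning the brand / total answers in the prompt set."""
    return sum(brand in r["mentions"] for r in rows) / len(rows) if rows else 0.0


def share_of_voice(rows, brand=BRAND, competitors=COMPETITORS):
    """Brand mentions / (brand mentions + tracked competitor mentions)."""
    counts = Counter(b for r in rows for b in r["mentions"] if b == brand or b in competitors)
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


def citation_rate(rows, brand=BRAND):
    """Answers mentioning the brand that also link to it / answers mentioning it."""
    mentioned = [r for r in rows if brand in r["mentions"]]
    return sum(r["links_to_us"] for r in mentioned) / len(mentioned) if mentioned else 0.0


def by_engine(rows, metric):
    """Apply a metric separately to each engine's answers."""
    engines = sorted({r["engine"] for r in rows})
    return {e: metric([r for r in rows if r["engine"] == e]) for e in engines}


print(visibility_score(answers))  # 2 of 4 answers mention Acme
print(share_of_voice(answers))  # 2 of 5 tracked mentions are Acme
print(citation_rate(answers))  # 1 of 2 Acme mentions carries a link
print(by_engine(answers, visibility_score))
```

Because mentions are stored as sets, a brand named three times in one answer still counts once; counting per-answer presence rather than raw name occurrences is a design assumption you may want to revisit.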

Two practical notes. First, Perplexity’s API sources offer the cleanest ground truth for citations. Second, ranking dashboards are no substitute for measuring visibility in synthesized answers. As Search Engine Land argues in How to measure brand visibility in AI search (2025), mapping these KPIs to pipeline metrics is what makes the story resonate with stakeholders.

A beginner-friendly AI visibility audit checklist

Use this single sequence to complete your first audit in one to two working days:

Define objectives: what stakeholders need to learn (e.g., baseline presence vs. three competitors; 90‑day plan to close gaps).

Select engines and markets: ChatGPT, Perplexity, Google AI Overviews, Bing Copilot; choose the country/language you operate in first.

Build a prompt set: 50–100 prompts spanning brand, category, and non‑brand scenarios; include comparative queries (“best…”, “vs”, “top X…”). Keep structure consistent across engines.

Create a data schema: fields for engine, timestamp, prompt ID, brand/competitor mentions, cited domains/URLs, sentiment, prominence, and notes.

Execute runs: use fresh sessions; capture screenshots or raw JSON if available (Perplexity search_results); note modes (e.g., ChatGPT browsing on/off; AIO present or absent).

Compute metrics: visibility score, SOV, citation rate, prominence, sentiment, intent coverage; segment by engine and intent.

Benchmark vs. 3–5 competitors: compare SOV, citation density, authority mix of citing domains, and coverage by intent cluster.

Diagnose gaps: find missing intents, low‑authority citation patterns, and instances where you’re mentioned but not linked.

Recommend fixes: strengthen structured content (FAQs, “how‑to” and “best” pages), clarify entities, add schema, and publish evidence pages (pricing, methodology, credentials) that engines can cite.

Report and schedule: produce an executive one‑pager with KPIs and a 90‑day plan; set a weekly cadence for re‑runs to track volatility.
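The prompt-bank and data-schema steps in this checklist can be sketched in a few lines. This is a minimal illustration under stated assumptions: the templates, brand names, and category are hypothetical, the extra `mode` column is added to record the run conditions noted in the Execute runs step, and storing mentions and URLs as semicolon-separated strings is just one way to keep the CSV flat for spreadsheet use.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from datetime import datetime, timezone

# Hypothetical prompt templates per intent cluster; replace with your own.
TEMPLATES = {
    "brand": ["What is {brand}?", "Is {brand} worth it?"],
    "category": ["Best {category} tools", "{brand} vs {competitor}"],
    "non_brand": ["How do I choose a {category} tool for a small team?"],
}


def build_prompt_set(brand, category, competitors):
    """Expand templates into prompts with stable IDs like 'category-003'."""
    prompts = []
    for intent, templates in TEMPLATES.items():
        n = 0
        for template in templates:
            # Comparative templates produce one prompt per competitor.
            targets = competitors if "{competitor}" in template else [None]
            for competitor in targets:
                n += 1
                text = template.format(brand=brand, category=category, competitor=competitor)
                prompts.append({"prompt_id": f"{intent}-{n:03d}", "intent": intent, "text": text})
    return prompts


@dataclass
class AuditRecord:
    """One captured answer; fields mirror the schema step of the checklist."""
    engine: str
    prompt_id: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    mentions: str = ""  # semicolon-separated brand/competitor names
    cited_urls: str = ""  # semicolon-separated URLs
    sentiment: str = ""  # e.g. positive / neutral / negative
    prominence: str = ""  # e.g. top / middle / bottom
    mode: str = ""  # hypothetical extra column, e.g. "browsing on", "AIO absent"
    notes: str = ""


def to_csv(records):
    """Serialize records to CSV text with a fixed column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(AuditRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buf.getvalue()


prompts = build_prompt_set("Acme", "project management", ["Globex", "Initech"])
print(len(prompts), prompts[3]["prompt_id"], prompts[3]["text"])
rows = [AuditRecord(engine="perplexity", prompt_id=p["prompt_id"]) for p in prompts[:2]]
print(to_csv(rows).splitlines()[0])
```

Keeping prompt IDs stable across weekly re-runs is what makes the later trend and volatility comparisons line up prompt by prompt.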

Example workflow: from prompt bank to first report

Let’s say you run a 10‑query pilot across four engines to validate the process before scaling to 50–100 prompts. You choose three brand queries, four category queries, and three non‑brand scenario queries. You capture each answer, record mentions for you and three competitors, log citations with URLs, and score prominence (top/middle/bottom and list position when applicable).
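Prominence scoring in the pilot can start as a simple heuristic. The sketch below assumes answers are captured as plain text; it buckets the brand's first mention by its relative character offset (first, middle, or last third) and reports its rank among list-style lines. The thirds cutoff and the list-detection regex are illustrative choices rather than a standard, and the sample answer and brand names are hypothetical.

```python
import re


def prominence(answer: str, brand: str):
    """Return (bucket, list_position) for the brand's first mention.

    bucket is "top", "middle", "bottom", or None if the brand is absent;
    list_position is the 1-based rank among list-item lines, or None.
    """
    match = re.search(re.escape(brand), answer, flags=re.IGNORECASE)
    if match is None:
        return None, None

    # Bucket the first mention by its relative character offset in the answer.
    relative = match.start() / max(len(answer), 1)
    if relative < 1 / 3:
        bucket = "top"
    elif relative < 2 / 3:
        bucket = "middle"
    else:
        bucket = "bottom"

    # Treat lines starting with "1.", "1)", "-", or "*" as ranked list items.
    items = [ln for ln in answer.splitlines() if re.match(r"\s*(\d+[.)]|[-*])\s+", ln)]
    list_position = next(
        (rank for rank, ln in enumerate(items, start=1) if brand.lower() in ln.lower()),
        None,
    )
    return bucket, list_position


answer = (
    "Top picks for small teams:\n"
    "1. Globex - strong integrations\n"
    "2. Acme - simple pricing\n"
    "3. Initech - enterprise focus"
)
for brand in ("Globex", "Acme", "Initech", "Hooli"):
    print(brand, prominence(answer, brand))
```

Character offsets are a crude proxy for reading order; for long answers with headings, you may prefer to score by paragraph or section index instead.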

Within a day, you’ll have a small but directional matrix: perhaps your brand appears in two of the four category answers on Perplexity (50%), each with a direct link, but shows up only as a bottom‑of‑answer mention in ChatGPT for brand queries, and isn’t cited in Google AIO despite being mentioned. That’s enough signal to set hypotheses: improve evidence pages for category topics; strengthen bylines and recency; develop a clean FAQ page targeting recurring non‑brand questions.
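The mentioned-but-not-linked pattern in this example is the same one the Diagnose gaps step looks for, and it can be flagged automatically once the pilot matrix exists. A minimal sketch, assuming each captured answer has been reduced to a hypothetical `(engine, intent, mentioned, linked)` tuple for your brand:

```python
from collections import defaultdict

# Hypothetical pilot rows: (engine, intent, mentioned, linked) for one brand.
pilot = [
    ("perplexity", "category", True, True),
    ("perplexity", "category", True, True),
    ("perplexity", "category", False, False),
    ("google_aio", "category", True, False),
    ("google_aio", "brand", True, False),
    ("chatgpt", "brand", True, False),
]


def mention_link_gaps(rows):
    """Per engine, count answers that mention the brand without linking to it."""
    stats = defaultdict(lambda: {"mentioned": 0, "unlinked": 0})
    for engine, _intent, mentioned, linked in rows:
        if mentioned:
            stats[engine]["mentioned"] += 1
            if not linked:
                stats[engine]["unlinked"] += 1
    # Engines where every mention is unlinked are the clearest attribution gaps.
    flagged = sorted(
        e for e, s in stats.items() if s["mentioned"] and s["unlinked"] == s["mentioned"]
    )
    return dict(stats), flagged


stats, flagged = mention_link_gaps(pilot)
print(stats)
print(flagged)
```

Engines where every mention lacks a link are natural first targets for evidence pages and other citation-worthy content.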

For a tangible artifact of what an audit output can look like, see this example AI visibility query report page that captures sources and synthesis logic.

Disclosure: Geneo is our product. In a full audit, some teams prefer to automate cross‑engine monitoring and reporting. A platform like Geneo can be used to aggregate mentions across ChatGPT, Perplexity, and Google AI Overviews, compute a brand visibility score, benchmark competitors, and export white‑label reports—handy for agencies or stakeholders who want a consistent weekly view. Alternatives include building an internal sheet + script workflow using Perplexity’s API for citations and manual sampling on other engines, or evaluating third‑party monitoring tools that cover your target engines and support clean exports. Choose based on engine coverage, verifiable citations, export flexibility, and cost—parity-based criteria that keep you objective.

Competitive benchmarking: quantify the gap you need to close

To translate raw mentions into strategy, benchmark against three to five direct competitors. Start with intent coverage: compute presence and prominence across brand, category, and non‑brand scenarios per engine. A simple heatmap (engines on rows, intents on columns) will immediately show where you’re absent or buried.
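The engine-by-intent heatmap can be produced without a BI tool. This sketch assumes a hypothetical list of `(engine, intent, mentioned)` rows and prints presence rates as a text grid; in practice you would feed it your full prompt-set results and might add a second grid for average prominence.

```python
from collections import defaultdict

# Hypothetical rows: (engine, intent, brand_mentioned) for each captured answer.
rows = [
    ("chatgpt", "brand", True), ("chatgpt", "category", False), ("chatgpt", "non_brand", False),
    ("perplexity", "brand", True), ("perplexity", "category", True), ("perplexity", "non_brand", False),
    ("google_aio", "brand", True), ("google_aio", "category", True), ("google_aio", "non_brand", True),
]

INTENTS = ["brand", "category", "non_brand"]


def coverage_matrix(rows):
    """Presence rate per (engine, intent) cell; None where there is no data."""
    hits, totals = defaultdict(int), defaultdict(int)
    for engine, intent, mentioned in rows:
        totals[(engine, intent)] += 1
        hits[(engine, intent)] += int(mentioned)
    engines = sorted({engine for engine, _, _ in rows})
    return {
        e: {i: (hits[(e, i)] / totals[(e, i)] if totals[(e, i)] else None) for i in INTENTS}
        for e in engines
    }


def render(matrix):
    """Format the matrix as a plain-text heatmap; '--' marks empty cells."""
    lines = ["engine".ljust(12) + "".join(i.ljust(11) for i in INTENTS)]
    for engine, cells in matrix.items():
        row = "".join(("--" if v is None else f"{v:.0%}").ljust(11) for v in cells.values())
        lines.append(engine.ljust(12) + row)
    return "\n".join(lines)


print(render(coverage_matrix(rows)))
```

Rows with low percentages in the category and non-brand columns are where you are absent from the unbranded discovery that the audit is meant to surface.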

Next, examine the authority mix of domains that get cited in answers where your category appears. Classify citing sources (e.g., top-tier news, .gov/.edu, industry analysts, expert blogs) and note recency, named authors, and credentialed bylines. This guides your PR and content strategy toward sources that engines already favor. External studies have analyzed AI Overviews’ citation patterns; SISTRIX’s review of what the most-cited sites are doing right (2025) is a useful orientation on structure and recency factors.
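A first pass at the authority mix can be automated by bucketing citing domains. The sketch below is deliberately coarse and rests on loud assumptions: the news and analyst allowlists are hypothetical placeholders you would maintain yourself, the `.gov`/`.edu` suffix test is US-centric, and the sample URLs are illustrative only.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical allowlists; maintain your own per category and market.
NEWS = {"reuters.com", "apnews.com"}
ANALYSTS = {"gartner.com", "forrester.com"}


def _on_domain(host: str, domains: set) -> bool:
    """True if host equals one of the domains or is a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def classify_domain(url: str) -> str:
    """Bucket a citing URL into a coarse authority class."""
    host = urlparse(url).netloc.lower().removeprefix("www.")  # Python 3.9+
    # US-centric suffix check; extend for .gov.uk, .ac.uk, etc. as needed.
    if host.endswith(".gov") or host.endswith(".edu"):
        return "gov_edu"
    if _on_domain(host, NEWS):
        return "news"
    if _on_domain(host, ANALYSTS):
        return "analyst"
    return "other"


def authority_mix(urls) -> dict:
    """Share of citations in each class, keyed by class name."""
    counts = Counter(classify_domain(u) for u in urls)
    total = sum(counts.values())
    return {cls: counts[cls] / total for cls in sorted(counts)} if total else {}


cited = [
    "https://www.reuters.com/technology/example",
    "https://nces.ed.gov/fastfacts/",
    "https://blog.example.com/best-tools",
    "https://www.gartner.com/en/documents/example",
    "https://cs.stanford.edu/people/",
]
print(authority_mix(cited))
```

Recency, named authors, and credentialed bylines are not visible from a URL alone, so those fields still need manual review or a page fetch.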

Finally, profile volatility. Some intents are stable week to week; others swing wildly as models and indexes refresh. Logging deltas in SOV, citation rate, and prominence by engine helps you decide where to double down and where to wait for steadier signals before investing heavily.
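Volatility can be quantified as the spread of week-over-week changes. A minimal sketch, assuming a hypothetical dict of weekly SOV fractions per engine and an arbitrary 0.05 threshold for labeling a series volatile; tune the threshold to your own baseline noise.

```python
from statistics import pstdev

# Hypothetical weekly SOV per engine (fractions), oldest week first.
weekly_sov = {
    "perplexity": [0.30, 0.32, 0.31, 0.33],
    "chatgpt": [0.20, 0.35, 0.15, 0.30],
}


def wow_deltas(series):
    """Week-over-week changes, rounded to hide floating-point noise."""
    return [round(b - a, 4) for a, b in zip(series, series[1:])]


def volatility(series):
    """Population standard deviation of the week-over-week deltas."""
    deltas = wow_deltas(series)
    return pstdev(deltas) if len(deltas) > 1 else 0.0


def classify(series, threshold=0.05):
    """Label a series 'volatile' if its deltas spread more than the threshold."""
    return "volatile" if volatility(series) > threshold else "stable"


for engine, series in weekly_sov.items():
    print(engine, wow_deltas(series), classify(series))
```

The same functions apply to citation rate or prominence series; running them per engine and intent shows which cells are steady enough to invest in.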

Turning audit data into an executive-ready brief

Executives don’t need every screenshot; they need proof that visibility is moving and that it ties to outcomes. Structure your brief in three layers:

Dashboard: a single page with brand visibility score, SOV, citation rate, prominence, and sentiment, broken out by engine and intent, with month-over-month deltas. If you want to go deeper on connecting AI visibility to traffic and demand, see our guide to AI traffic tracking best practices.

Pipeline linkage: overlay visibility trends with demo requests, qualified leads, and sourced opportunities. Industry guidance recommends connecting AI visibility to revenue to justify investment; see Search Engine Land’s guidance on measuring brand visibility and proving business impact (2025).

90‑day plan: pick a small slate of experiments tied to the gaps you found. For example, publish three evidence pages (methodology, pricing, security), refresh two evergreen listicles with clear bylines and updated references, and pitch one analyst or top-tier media placement per month. Include a risk log and milestones.

To contextualize the stakes, frame the narrative with two external perspectives:

Discovery shift: McKinsey describes AI assistants and AI search as the new front door to the internet (2025), implying that brands missing from AI answers risk losing a material share of discovery.

Trust as an adoption driver: Edelman’s Trust Barometer and 2025 flash polling show that higher trust correlates with willingness to use AI experiences—relevant when you consider bylines, citations, and the credibility signals that answers tend to reward.

For stakeholders who need a conceptual bridge from SEO to GEO, here’s a comparison of traditional SEO vs. GEO. And if you’re preparing a proposal to leadership or clients, this guide to pitching GEO services to non‑technical decision makers outlines how to translate the audit into an outcomes-focused plan.

Next steps

Run the full 50–100 prompt audit across your priority engines and markets. Schedule a weekly cadence; track volatility and revisit your prompt set quarterly. If you prefer a turnkey approach with aggregation and white‑label reporting, Geneo can help streamline monitoring and competitive benchmarking without locking you into any single engine’s dashboard.

For background and concept alignment, review the definition guide on AI visibility and brand exposure in AI search, and the comparison of traditional SEO vs. GEO to align teams on terminology.

For analysts, adopt Perplexity’s API for consistent citation capture, and follow OpenAI’s GPT‑4.1 prompting guidance to keep your prompt set structured and replicable for audits.

For ongoing measurement hygiene, standardize data schemas and keep a living register of your evidence pages (methodology, pricing, credentials) so they’re easy to update and cite.

AI visibility and brand exposure in AI search

AI traffic tracking best practices

Pitching GEO services to non-technical decision makers

Perplexity chat completions guide

Perplexity search control guide

Introducing Copilot Search in Bing

SISTRIX on most-cited AI Overview sites

Search Engine Land on measuring AI visibility

Pew Research on AI Overviews and link clicks