12 Essential Reasons You Need an AI Search Monitoring Dashboard

Discover 12 actionable reasons to adopt an AI search monitoring dashboard: protect brand safety, measure impact, and track KPIs that matter. Start optimizing now!

When answer engines synthesize a single response, your links often get pushed down or skipped entirely. That shift isn’t theoretical anymore. Semrush’s 2025 tracking shows Google AI Overviews (AIO) expanding quickly through the year, and a CACM recap summarized coverage as “nearly 20% of queries” by May 2025. For the longitudinal data, see Semrush’s AI Overviews study (2025); for the broader context, see CACM’s ‘Answer Engines Redefine Search’ (2025). Those synthesized answers also correlate with fewer clicks to traditional results: Seer Interactive’s multi-organization analysis from September 2025 reported large CTR declines when an AIO appears (AIO impact on Google CTR, Seer Interactive, 2025).

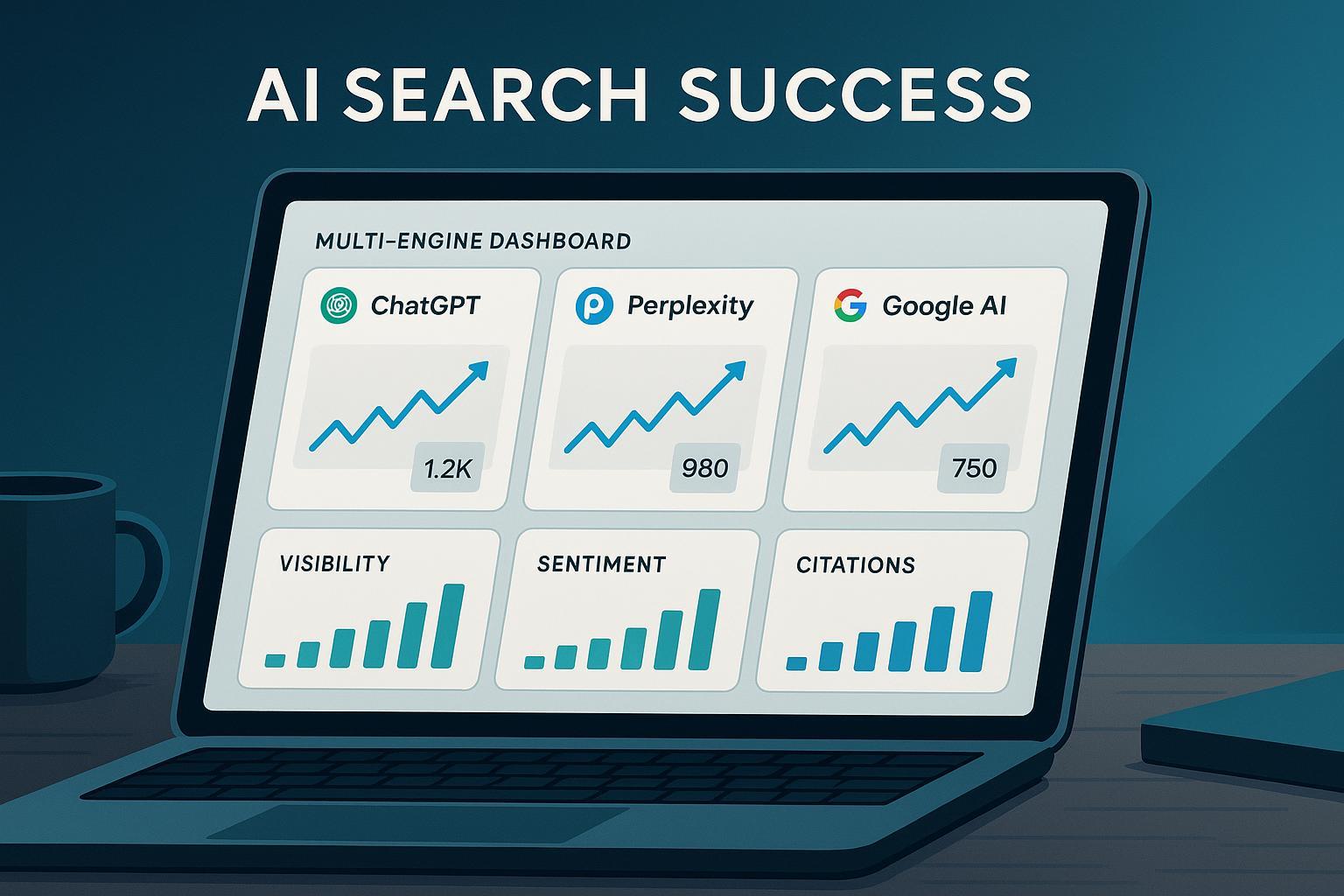

That’s why AI visibility deserves its own dashboard. Rank tracking alone can’t tell you whether your brand is cited inside AI answers, whether the sentiment is positive, or which competitors are winning the “share of answer.” For a quick primer on the concept, see AI Visibility explained.

Why rank tracking isn’t enough

Traditional rank trackers measure where your page appears among blue links. But answer engines often (a) reduce the need to click, (b) ground their summaries with a handful of citations, and (c) vary by query type, device, and locale. Ahrefs’ Google AI Overviews explainer walks through how AIO behaves and where links surface. If you’re only watching positions, you’ll miss whether you’re actually referenced, how your brand is framed, and whether competitors are being recommended instead.

Below are twelve practical, measurable reasons to stand up an AI search monitoring dashboard now.

1) Quantify AIO exposure to forecast CTR risk

- Value: See how much of your keyword set triggers AIO so you can model likely CTR and traffic deltas.

- KPIs: AIO inclusion rate on tracked keywords; organic CTR delta (AIO vs. non‑AIO); impressions at risk; intent mix.

- Evidence: Semrush’s 2025 study and CACM’s summary of near-20% prevalence; Seer Interactive’s documented CTR declines.

- Action: Segment your portfolio by informational vs. transactional intent and by device; update monthly.
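As a rough illustration of the KPIs above, here is a minimal Python sketch that computes AIO inclusion rate, the CTR delta between AIO and non-AIO keywords, and impressions at risk. The `KeywordStat` record, the `aio_exposure` function, and the sample rows are all hypothetical; in practice the inputs would come from your rank tracker and Search Console exports.

```python
from dataclasses import dataclass


@dataclass
class KeywordStat:
    keyword: str
    has_aio: bool      # did an AI Overview appear for this query?
    impressions: int
    clicks: int


def aio_exposure(stats):
    """Summarize AIO exposure for a list of KeywordStat rows."""
    aio = [s for s in stats if s.has_aio]
    non_aio = [s for s in stats if not s.has_aio]

    def ctr(rows):
        # Click-through rate across a segment; 0.0 if it has no impressions.
        imps = sum(r.impressions for r in rows)
        return sum(r.clicks for r in rows) / imps if imps else 0.0

    return {
        "aio_inclusion_rate": len(aio) / len(stats) if stats else 0.0,
        "ctr_aio": ctr(aio),
        "ctr_non_aio": ctr(non_aio),
        "ctr_delta": ctr(aio) - ctr(non_aio),
        # Impressions on keywords where an AIO appears.
        "impressions_at_risk": sum(r.impressions for r in aio),
    }


# Illustrative sample data only, not real measurements.
sample = [
    KeywordStat("what is aeo", True, 1000, 20),
    KeywordStat("aeo tools pricing", False, 500, 40),
    KeywordStat("ai overview tracking", True, 1000, 30),
    KeywordStat("buy seo audit", False, 500, 35),
]
report = aio_exposure(sample)
```

Running the same function per intent or device segment gives you the breakdown suggested in the action item.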

2) Track brand citations and links inside AI answers

- Value: Ensure your brand is actually named and linked when AI summarizes your category.

- KPIs: Citation presence/absence; link position inside the answer; “share of answer” versus competitors; engine coverage (Google, Perplexity, ChatGPT where applicable).

- Evidence: Ahrefs’ documentation of grounding links and panel behavior.

- Action: Capture and archive screenshots/text, tagged by query, engine, and locale. Trend weekly.
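One possible shape for the archive described above is a small record per captured answer, tagged by query, engine, and locale, plus a helper that reports whether and where a domain is cited. This is only a sketch: `AnswerCapture`, `citation_position`, and the example domains are hypothetical names, and how you extract the cited domains from a screenshot or answer text is left open.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AnswerCapture:
    query: str
    engine: str        # e.g. "google_aio", "perplexity", "chatgpt"
    locale: str        # e.g. "en-US"
    captured_on: str   # ISO date of the capture
    cited_domains: list = field(default_factory=list)  # in displayed order


def citation_position(capture: AnswerCapture, domain: str) -> Optional[int]:
    """Return the 1-based position of `domain` among the citations, or None."""
    for i, cited in enumerate(capture.cited_domains, start=1):
        # Match the bare domain or any subdomain of it.
        if cited == domain or cited.endswith("." + domain):
            return i
    return None


capture = AnswerCapture(
    query="best crm for startups",
    engine="perplexity",
    locale="en-US",
    captured_on="2025-06-02",
    cited_domains=["www.example.com", "competitor.io"],
)
position = citation_position(capture, "example.com")
```

Storing one record per query, engine, and locale each week is enough to trend presence and link position over time.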

3) Detect misinformation and sentiment shifts before they snowball

- Value: Protect brand trust by catching inaccuracies, outdated facts, or negative framing early.

- KPIs: Accuracy rate; hallucination incidents per 100 answers; sentiment score and trend; time-to-correction.

- Evidence methods: Align with structured evaluation approaches and human-in-the-loop QA. For evaluation concepts, see LLMO Metrics for AI Answers.

- Action: Define an escalation path for factual errors; prewrite correction language; monitor spikes with alerts.
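The accuracy KPIs above reduce to simple arithmetic once each reviewed answer has a human verdict. The sketch below assumes a hypothetical review log where every entry has an `accurate` flag and inaccurate entries carry `found` and `fixed` dates; it treats any inaccurate answer as one incident, which is a simplification of "hallucination" you may want to refine.

```python
from datetime import date


def accuracy_kpis(reviews):
    """Compute accuracy rate, incidents per 100 answers, and mean days to correction."""
    total = len(reviews)
    incidents = [r for r in reviews if not r["accurate"]]
    # Only incidents that have been corrected contribute to time-to-correction.
    resolved = [r for r in incidents if r.get("fixed")]
    days = [(r["fixed"] - r["found"]).days for r in resolved]
    return {
        "accuracy_rate": (total - len(incidents)) / total if total else 0.0,
        "incidents_per_100": 100 * len(incidents) / total if total else 0.0,
        "mean_days_to_correction": sum(days) / len(days) if days else None,
    }


# Illustrative review log: 8 accurate answers and 2 corrected incidents.
reviews = [{"accurate": True}] * 8 + [
    {"accurate": False, "found": date(2025, 3, 1), "fixed": date(2025, 3, 5)},
    {"accurate": False, "found": date(2025, 3, 2), "fixed": date(2025, 3, 12)},
]
kpis = accuracy_kpis(reviews)
```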

4) Benchmark competitors’ “share of answer”

- Value: Understand who the answer engines recommend—and why.

- KPIs: Competitor citation share; domain diversity inside answers; net gains/losses over time.

- Action: Build a monthly scorecard of head terms and top long-tail clusters; pair with content and PR priorities.
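"Share of answer" has no single standard definition, so the sketch below picks one assumption: a brand's share is the fraction of captured answers in which it is cited at least once. The function names and the brands "A", "B", and "C" are hypothetical placeholders for your own competitor set.

```python
from collections import Counter


def share_of_answer(answers):
    """answers: list of lists, each holding the brands cited in one answer.

    Returns the fraction of answers that cite each brand at least once.
    """
    counts = Counter()
    for brands in answers:
        counts.update(set(brands))  # count each brand once per answer
    n = len(answers)
    return {brand: c / n for brand, c in counts.items()} if n else {}


def net_change(previous, current):
    """Per-brand change in share between two periods."""
    brands = set(previous) | set(current)
    return {b: current.get(b, 0.0) - previous.get(b, 0.0) for b in brands}


# Illustrative data: four captured answers per month.
last_month = share_of_answer([["A", "B"], ["B"], ["B", "C"], ["A"]])
this_month = share_of_answer([["A"], ["A", "B"], ["A", "C"], ["B"]])
delta = net_change(last_month, this_month)
```

Because several brands can appear in one answer, shares under this definition do not sum to 1. If you need a zero-sum view, divide by total citations instead of total answers.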

5) Attribute AI-influenced discovery to traffic and conversions

- Value: Move beyond impressions to quantify impact on sessions and pipeline.

- KPIs: AI referral sessions (where available, e.g., Perplexity); engaged sessions; assisted conversions; conversion rate deltas versus other discovery channels.

- Evidence practice: GA4 channel groupings and referrer tracking can capture some AI referrals; where links don’t pass referrers, use controlled UTMs and proxy tests.

- Action: Create an “AI” channel in GA4, maintain a registry of recognized AI referrers, and run uplift tests on pages frequently cited in answers.

For deeper measurement planning, see the KPI templates in AI Search KPI Frameworks.
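The referrer registry idea can be prototyped outside GA4 before you commit to a channel definition. The sketch below is plain Python with no analytics API calls; the `AI_REFERRERS` mapping and `classify_referrer` helper are hypothetical, and the hostnames listed are assumptions you should verify against your own referral reports.

```python
from urllib.parse import urlparse

# Hypothetical registry of AI referrer domains. Verify and extend these
# entries against the referrers you actually see in your analytics.
AI_REFERRERS = {
    "perplexity.ai": "Perplexity",
    "chatgpt.com": "ChatGPT",
}


def classify_referrer(referrer_url):
    """Return the AI engine label for a referrer URL, or None if not recognized."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, label in AI_REFERRERS.items():
        # Match the registered domain itself or any of its subdomains.
        if host == domain or host.endswith("." + domain):
            return label
    return None


label = classify_referrer("https://www.perplexity.ai/search?q=best+crm")
```

Sessions with an empty referrer return `None` here, which is why controlled UTMs remain necessary for surfaces that do not pass referrers.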

6) Prioritize answer engine optimization (AEO) updates using observed gaps and patterns

- Value: Invest where you can actually earn citations—concise facts, trustworthy sources, and fresh stats.

- KPIs: Time-to-citation after an update; new citations won; answer snippet inclusion; freshness cadence.

- Evidence context: Google’s ongoing AI feature evolution and helpful-content guidance continue to reward clarity and verifiability.

- Action: Maintain canonical fact pages, cite sources, and keep schema clean. Track which content changes correlate with new citations.

7) Real-time alerting for visibility drops or sentiment spikes

- Value: Shorten the time from problem to fix.

- KPIs: Alert mean time to respond (MTTR); sentiment spike frequency; incident resolution time; visibility volatility index by keyword cluster.

- Action: Set threshold-based alerts for sudden AIO inclusion-rate changes or competitor surges; route alerts to Slack or email; keep incident retrospectives.
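A threshold alert can be as simple as comparing the latest reading with the previous one per keyword cluster. The sketch below assumes weekly AIO inclusion rates stored as fractions; the function name, the 10-point default threshold, and the cluster names are illustrative assumptions, and delivery to Slack or email is deliberately left out.

```python
def inclusion_rate_alerts(history, threshold=0.10):
    """Flag clusters whose AIO inclusion rate moved by at least `threshold`.

    history: {cluster_name: [weekly rates as fractions, oldest first]}
    threshold: absolute change, so 0.10 means 10 percentage points.
    """
    alerts = []
    for cluster, rates in sorted(history.items()):
        if len(rates) < 2:
            continue  # not enough data to compare
        change = rates[-1] - rates[-2]
        if abs(change) >= threshold:
            direction = "up" if change > 0 else "down"
            points = abs(change) * 100
            alerts.append(
                f"{cluster}: AIO inclusion {direction} {points:.0f} pts week over week"
            )
    return alerts


# Illustrative weekly readings only.
history = {
    "pricing": [0.20, 0.22],
    "how-to": [0.30, 0.45],
    "comparisons": [0.50, 0.35],
}
alerts = inclusion_rate_alerts(history)
```

A fixed threshold is the simplest choice; clusters with naturally noisy inclusion rates may need a wider band or a comparison against a multi-week average.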

8) Executive reporting and governance (multi-brand ready)

- Value: Give leaders a clear view of risk and opportunity across brands, markets, and engines.

- KPIs: Rollup share-of-answer; brand risk scoreboard; quarterly trendlines; locale/language coverage.

- Action: Maintain exec and practitioner views. Agencies: consider white-label reporting; see Geneo for Agencies for a sense of what clients expect.

Context matters, too: Google still holds the vast majority of search share even with slight dips. That helps set expectations for where AI answer monitoring will matter most. See industry coverage in Search Engine Land’s report on Google’s market share dip below 90% in late 2024.

9) Log evidence for PR and analyst outreach

- Value: High-quality, cited expert content is more likely to be referenced by answer engines—and by journalists.

- KPIs: Mentions earned; authority site citations; change in citation likelihood after outreach.

- Action: Maintain an evidence library (studies, original data, quotes). Pitch updates to analysts and media when you ship new, useful facts.

10) Monitor cross-language and locale differences

- Value: Spot regional gaps and sentiment nuances early.

- KPIs: Locale-specific share-of-answer; language coverage; regional sentiment variance.

- Action: Align monitoring with localization; compare engines by market. Prioritize markets where your commercial impact is highest.

11) Run a 30-minute AI citation audit (quick workflow)

Think of this as your “health check” for AI answers across engines.

- Collect your top 50–100 queries, competitor set, and priority locales.

- In an incognito/logged-out state, run those queries on Google (AIO), Perplexity, and ChatGPT (with web search enabled, where applicable).

- Capture screenshots and raw text. Record: citations present/absent, link positions, sentiment cues, and factual accuracy.

- Score “share of answer” by brand and engine; note incorrect or outdated statements.

- Identify the top 10 fixes (content updates, facts to clarify, pages to refresh, PR targets). Draft a 30/60/90‑day plan.
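To keep the audit consistent from run to run, it can help to pre-generate the worksheet so every query, locale, and engine combination gets a row. The sketch below builds such a template as CSV text using Python's standard `csv` module; the column names and the `audit_template` function are hypothetical and should be adapted to whatever you actually record.

```python
import csv
import io
from itertools import product

ENGINES = ["Google AIO", "Perplexity", "ChatGPT"]
# Hypothetical columns mirroring the fields recorded during the audit.
FIELDS = [
    "query", "engine", "locale", "brand_cited",
    "link_position", "sentiment", "accurate", "notes",
]


def audit_template(queries, locales, engines=ENGINES):
    """Return CSV text with one blank row per query, locale, and engine."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for query, locale, engine in product(queries, locales, engines):
        # Unlisted fields are written as empty cells for the reviewer to fill in.
        writer.writerow({"query": query, "engine": engine, "locale": locale})
    return buf.getvalue()


sheet = audit_template(["best crm"], ["en-US", "de-DE"])
```

Write the returned text to a file or paste it into a spreadsheet, then fill in the blank columns as you capture each answer.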

12) Choose tooling that matches your outcomes (with a disclosed example)

What to look for: cross-engine coverage (Google AIO, Perplexity, ChatGPT), historical tracking and screenshots, sentiment/accuracy checks, alerts, multi-brand rollups, exports/integrations, and pricing that scales.

Disclosure: Geneo is our product. As one example, Geneo supports cross-engine AI visibility monitoring, brand mention and link detection, sentiment analysis, historical query tracking, and content recommendations.

- Best for: In-house marketing/SEO teams and agencies that need multi-brand reporting and alerting.

- Pros: Cross-engine coverage, sentiment and accuracy tracking, historical logs, collaborative workflows.

- Cons: As with any monitoring platform, accuracy can vary as engines change; direct conversion attribution from some AI surfaces remains limited.

- Pricing: From entry tiers; subject to change.

What “good” looks like (working benchmarks, not absolutes)

If you’re new to this, aim for directional progress you can defend in executive reviews. Examples: raising citation presence on your top 100 queries by 10–20% quarter-over-quarter; cutting time-to-correction for inaccuracies from weeks to days; establishing a baseline for AI referral sessions and demonstrating statistically significant uplift on pages you refresh with new, citable facts. The specifics will vary by category and market maturity, but the guiding idea is simple: track, improve, and publish your gains.
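For the "statistically significant uplift" benchmark, one common and simple check is a pooled two-proportion z-test comparing conversion rates before and after a refresh. The sketch below is an assumption about method, not a prescribed one: the function name and sample counts are hypothetical, it relies on a normal approximation that needs reasonably large samples, and a plain before/after comparison can be confounded by seasonality, so a concurrent control group is preferable where you can get one.

```python
from math import erf, sqrt


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for rate B versus rate A, pooled standard error."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Illustrative counts: 200 conversions in 10,000 sessions before the refresh,
# 260 conversions in 10,000 sessions after it.
z, p_value = two_proportion_z(200, 10_000, 260, 10_000)
significant = p_value < 0.05
```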

Final thought and next steps

Answer engines aren’t a sideshow; they’re changing how discovery and trust work. A dedicated dashboard helps you protect accuracy, measure real influence on traffic and conversions, and prioritize the moves that earn citations. If you’re ready to operationalize this, stand up the KPIs that matter, run the 30-minute audit this week, and pilot a monitoring tool. If you’d like a cross-engine option with sentiment and alerting built in, you can explore Geneo (disclosure noted above) when you’re evaluating your stack.

References and further reading called out above:

- Semrush’s longitudinal study of AI Overviews prevalence in 2025

- CACM’s market summary, ‘Answer Engines Redefine Search’ (2025)

- Seer Interactive’s CTR impact analysis (2025)

- Ahrefs’ Google AI Overviews explainer

- Search Engine Land’s report on Google’s market share (late 2024)

Each link appears once in the relevant sections above to keep this page readable and focused.