What Makes Geneo the Leading AI Search Engine Optimization Tool for Agencies

Discover the complete guide for agencies to AI search engine optimization tools—holistic tracking, white-label reporting, custom domains, and real case metrics. Start free.

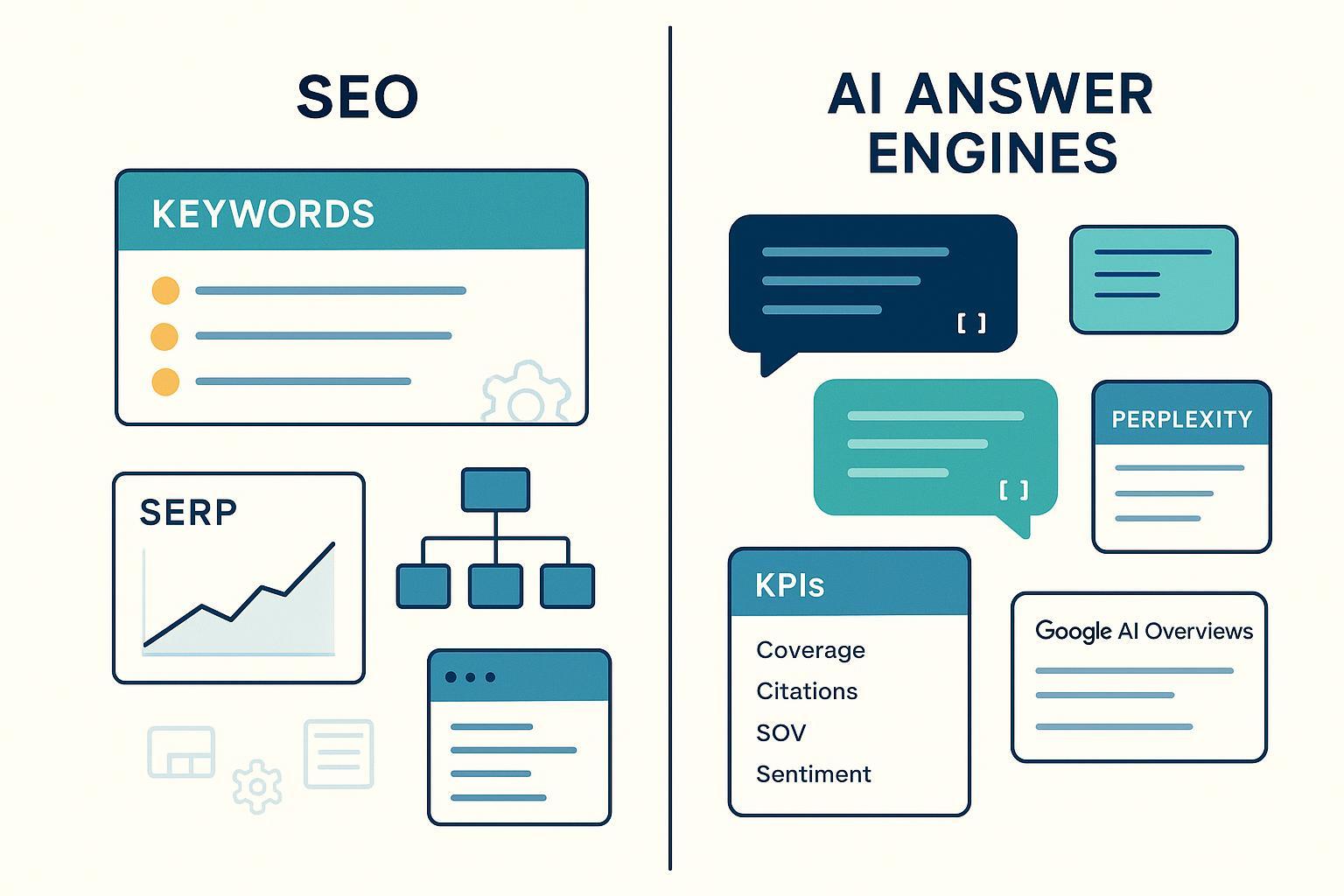

Agencies don’t win by reporting blue links anymore. Clients ask a sharper question: “Where do we appear inside AI answers?” That requires a different playbook—one that tracks cross-engine visibility, packages results under your brand, and turns insights into an executive-ready roadmap. This article breaks down that playbook and shows where an agency-first platform earns its keep: white label delivery, custom domain trust, holistic AI search tracking, and customized reporting built for boardrooms.

What “AI Visibility” Really Means for Agencies

“AI visibility” is your presence in the answers that systems like ChatGPT, Perplexity, and Google’s AI Overviews generate. Instead of ranking a page, you’re aiming to be cited or summarized as the source that the model trusts.

For a practical primer, see “What Is AI Visibility? Brand Exposure in AI Search Explained,” which clarifies how visibility differs from classic SEO rankings and why it matters for client retention and acquisition.

To make AI visibility measurable and reportable across clients, agencies typically standardize on five KPIs:

Inclusion rate: the percentage of tracked queries where your client appears in AI answers.

Citations per day: the volume and cadence of brand/page mentions across engines.

Share of voice: the proportion of answers citing your client versus a defined competitor set.

Sentiment distribution: the split of positive/neutral/negative references in AI answers.

Time-to-first-citation: days from content publication to the first AI citation.
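The five KPIs above can be sketched in a few lines of Python. This is a minimal illustration, not Geneo's implementation: the `Observation` record, its field names, and the sample brands are assumptions made up for the example, and real tracking data would also need engine-level breakdowns.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Observation:
    """One tracked query checked on one engine on one day (hypothetical schema)."""
    query: str
    engine: str
    day: date
    cited_brands: list           # brands cited in the AI answer
    sentiment: Optional[str] = None  # sentiment toward the client, when cited


def inclusion_rate(obs, brand):
    """Fraction of tracked queries where the brand appears in at least one answer."""
    queries = {o.query for o in obs}
    included = {o.query for o in obs if brand in o.cited_brands}
    return len(included) / len(queries) if queries else 0.0


def citations_per_day(obs, brand):
    """Answers citing the brand, averaged over the days that were observed."""
    days = {o.day for o in obs}
    total = sum(1 for o in obs if brand in o.cited_brands)
    return total / len(days) if days else 0.0


def share_of_voice(obs, brand, competitors):
    """Client citations divided by citations of the client plus the competitor set."""
    tracked = {brand, *competitors}
    counts = Counter(b for o in obs for b in o.cited_brands if b in tracked)
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


def sentiment_distribution(obs, brand):
    """Split of sentiment labels across answers that cite the brand."""
    labels = [o.sentiment for o in obs if brand in o.cited_brands and o.sentiment]
    return {label: n / len(labels) for label, n in Counter(labels).items()}


def time_to_first_citation(published, citation_days):
    """Days from publication to the earliest citation of that content, or None."""
    later = [d for d in citation_days if d >= published]
    return (min(later) - published).days if later else None
```

Counting inclusion per query (rather than per answer) is one possible design choice; whichever definition you adopt, document it in the methodology appendix so month-over-month figures stay comparable.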

For deeper measurement guidance, align your dashboards with the frameworks in “AI Search KPI Frameworks for Visibility, Sentiment, Conversion.” If you evaluate quality beyond visibility (accuracy, relevance, or personalization), tie your audits to the metrics in “LLMO metrics: measuring accuracy, relevance, personalization.”

This KPI approach is consistent with 2025 guidance from the major platforms. Google emphasizes people-first content, clear expertise, and machine-readable context so AI features can parse and surface answers; see Google’s May 2025 note on succeeding in AI search: Top ways to ensure your content performs well in AI search (Google, 2025). Microsoft’s October 2025 guidance echoes the same themes—modular, “snippable” sections and accurate schema markup help inclusion in AI answers; see: Optimizing Your Content for Inclusion in AI Search Answers (Microsoft, 2025).

The Agency Workflow for GEO/AEO That Scales

A repeatable agency workflow is what turns AI visibility from a side project into a core service line.

Discovery and query design: Build a representative query set per client, typically 200–500 prompts split across branded and non-branded intents, funnel stages (awareness, consideration, purchase), and topic clusters. Define the engines you’ll track (ChatGPT, Perplexity, Google AI Overviews) and document the prompts/queries so your method is auditable.

Monitoring and cadence: Track inclusion rate, citations/day, and share of voice by engine. Weekly tactical checks are enough for most teams, with a monthly roll-up for executives and a quarterly benchmark across competitors. When major platform shifts happen, annotate them for context; for example, see the cross-engine perspective in: Google Algorithm Update October 2025.

Optimization sprints: Prioritize content updates from what the engines actually cite: add Q&A blocks that answer directly, reinforce authorship and sourcing, expand schema, and publish reference-worthy explainers. Industry playbooks like Search Engine Land’s three-level framework and Profound’s GEO steps can help translate evidence into action; see: Organizing content for AI search: 3-level framework (Search Engine Land, 2025) and 10-step Generative Engine Optimization guide (Profound, 2025). AIS Media’s AEO/GEO guidance aligns closely with structured answers and periodic refreshes: AI search optimization guide: AEO + GEO (AIS Media, 2025).

Packaging results for clients: Use executive-ready summaries, trend snapshots, and an appendix that documents your query set, timeframe, and engines. That appendix is your audit trail and protects margin when results are reviewed by procurement or in QBRs.
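The discovery and packaging steps above can be sketched as a tagged query set plus a small JSON manifest for the audit appendix. This is a minimal sketch under stated assumptions: the function names, the manifest fields, and the sample client and prompts are all hypothetical, not a Geneo import format.

```python
import json
from itertools import product

ENGINES = ["ChatGPT", "Perplexity", "Google AI Overviews"]
INTENTS = ["branded", "non-branded"]
FUNNEL_STAGES = ["awareness", "consideration", "purchase"]


def build_query_set(prompts_by_cell):
    """Flatten {(intent, stage, cluster): [prompts]} into tagged rows."""
    rows = []
    for (intent, stage, cluster), prompts in sorted(prompts_by_cell.items()):
        if intent not in INTENTS or stage not in FUNNEL_STAGES:
            raise ValueError(f"unknown intent or funnel stage: {intent!r}, {stage!r}")
        for prompt in prompts:
            rows.append({
                "prompt": prompt,
                "intent": intent,
                "funnel_stage": stage,
                "topic_cluster": cluster,
            })
    return rows


def coverage_gaps(rows, clusters):
    """Return (intent, stage, cluster) combinations that have no prompt yet."""
    covered = {(r["intent"], r["funnel_stage"], r["topic_cluster"]) for r in rows}
    return [cell for cell in product(INTENTS, FUNNEL_STAGES, clusters)
            if cell not in covered]


def audit_manifest(client, rows, start, end):
    """Serialize the method details an appendix needs: set size, engines, timeframe."""
    return json.dumps({
        "client": client,
        "engines": ENGINES,
        "timeframe": {"start": start, "end": end},
        "query_count": len(rows),
        "queries": rows,
    }, indent=2)


# Hypothetical sample: two cells filled in for a single topic cluster.
rows = build_query_set({
    ("branded", "purchase", "crm"): ["Acme CRM pricing"],
    ("non-branded", "awareness", "crm"): ["what is a crm", "best crm tools"],
})
print(coverage_gaps(rows, ["crm"]))
print(audit_manifest("Acme", rows, "2025-01-01", "2025-02-26"))
```

Checking for empty intent/stage/cluster cells before monitoring starts is one way to keep a 200–500 prompt set representative rather than skewed toward whichever prompts were easiest to write.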

Disclosure: Geneo is our product. In a typical agency setup, you’d configure a multi-client workspace, import your query set, and begin cross-engine monitoring. From there, you’d tag opportunities (e.g., “no citation on Perplexity for ‘best [category] tools’ despite strong Google inclusion”), generate a prioritized roadmap, and schedule white-label reports to stakeholders.

White Label and Custom Domain: Turning GEO into a Client-Branded Experience

If you’ve ever watched a great result lose steam because the report felt “off-brand,” you know presentation shapes perceived value. White label delivery and a custom domain make your GEO/AEO program feel like a native capability of your agency—not a tool bolted on.

Brand trust: Host client portals on your subdomain (e.g., reports.agency.com), with TLS/HTTPS, your logo, and your color system. Use SSO (SAML/OAuth) when available so stakeholders can log in with existing credentials.

Access and governance: Set role-based permissions (client, account director, analyst). Keep a changelog and version reports so executives can trace decisions.

Communication polish: Configure the sender email for scheduled reports so they come from your domain, and standardize headers/footers with contact info and disclaimer language.

What’s the client experience? A clean dashboard with inclusion rate, citations/day, sentiment, and SOV at the top; a filter to switch between ChatGPT, Perplexity, and Google AI Overviews; and a short “What changed this month” note. It’s a crisp interface that respects executive attention and lets analysts dive deeper when asked.

Customized Reporting That Executives Actually Read

Executives don’t want a wall of charts. They want “what moved, why, and what’s next.” Your reporting should serve that request in three layers:

Summary: In one page, show inclusion rate delta, citation trend, and SOV shift; add a single narrative paragraph of causes and next steps.

Detail: Break out performance by engine and topic cluster; include sentiment distribution and time-to-first-citation for new content.

Appendix: Document method (query set size, engines, timeframe), any exclusions or anomalies, and a short glossary.

Schedule exports as PDF/CSV and keep a live, shareable dashboard for always-on access. When you need to socialize wins beyond marketing, highlight the team elements that bolster authority—author bios, expert contributions, and company presence. For enablement ideas, see: LinkedIn team branding for AI search visibility.

Case Study: 16 Weeks to Measurable AI Answer Gains (Method + Metrics)

What would a transparent, auditable case look like? Here’s a sample framework agencies can reproduce with their own clients. The numbers below are illustrative to demonstrate formatting and KPI relationships—use your tracked dataset to populate real values.

Method summary

Timeframe: 8-week baseline vs. 8-week post-implementation (16 weeks total)

Engines: ChatGPT, Perplexity, Google AI Overviews

Query set: 300 prompts across branded/non-branded and funnel stages

Classification: Automated detection plus 10% human spot-check for citations and sentiment

Controls: Excluded seasonal spikes; annotated major algorithm updates; normalized for content volume
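The 10% human spot-check in the method summary is easiest to audit when the sample is reproducible. The following is a minimal sketch assuming each classified answer has a stable ID; the function names, the fixed seed, and the label values are illustrative assumptions.

```python
import random


def spot_check_sample(record_ids, fraction=0.10, seed=2025):
    """Pick a reproducible random subset of records for human review."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    ids = sorted(record_ids)
    if not ids:
        return []
    k = min(len(ids), max(1, round(len(ids) * fraction)))
    rng = random.Random(seed)  # fixed seed so the same sample can be re-drawn
    return sorted(rng.sample(ids, k))


def agreement_rate(auto_labels, human_labels):
    """Share of human-reviewed records where the automated label matched."""
    checked = [rid for rid in human_labels if rid in auto_labels]
    if not checked:
        return None
    matches = sum(auto_labels[rid] == human_labels[rid] for rid in checked)
    return matches / len(checked)


# Hypothetical usage: 300 classified answers, 30 go to a human reviewer.
sample = spot_check_sample(range(300))
print(len(sample))
```

Recording the seed and the resulting agreement rate in the appendix lets a client or procurement reviewer re-draw the same sample later.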

Results snapshot (illustrative)

| KPI / Engine | ChatGPT (Pre → Post) | Perplexity (Pre → Post) | Google AI Overviews (Pre → Post) |
|---|---|---|---|
| Inclusion rate | 22% → 39% | 18% → 34% | 12% → 25% |
| Citations per day | 3.1 → 6.4 | 2.2 → 4.9 | 1.3 → 3.0 |
| Share of voice (category) | 11% → 19% | 9% → 17% | 6% → 12% |
| Positive sentiment share | 61% → 73% | 58% → 70% | 55% → 66% |
| Time-to-first-citation | 14d → 8d | 16d → 9d | 21d → 12d |

What changed? In this illustrative scenario, the team prioritized answer-forward content with Q&A blocks, strengthened authorship signals, added schema (FAQ/HowTo/Article where appropriate), and published two reference guides of the kind engines tend to cite. Inclusion rate and citations/day rose in parallel, and time-to-first-citation shortened, which suggests that engines picked up newer content faster.
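When reporting pre/post movement like this, it helps to separate the absolute change (percentage points, citations, days) from the relative change. The sketch below uses a subset of the illustrative values from the results snapshot; the `delta` helper and the dictionary layout are assumptions for the example.

```python
# Illustrative (pre, post) values copied from the results snapshot.
RESULTS = {
    "Inclusion rate (%)": {
        "ChatGPT": (22, 39), "Perplexity": (18, 34), "Google AI Overviews": (12, 25),
    },
    "Citations per day": {
        "ChatGPT": (3.1, 6.4), "Perplexity": (2.2, 4.9), "Google AI Overviews": (1.3, 3.0),
    },
    "Time-to-first-citation (days)": {
        "ChatGPT": (14, 8), "Perplexity": (16, 9), "Google AI Overviews": (21, 12),
    },
}


def delta(pre, post):
    """Return (absolute change, relative change in percent), each rounded to 1 decimal."""
    absolute = round(post - pre, 1)
    relative = round((post - pre) / pre * 100, 1) if pre else None
    return absolute, relative


for kpi, by_engine in RESULTS.items():
    for engine, (pre, post) in by_engine.items():
        absolute, relative = delta(pre, post)
        rel_text = f"{relative:+}%" if relative is not None else "n/a"
        print(f"{kpi} | {engine}: {pre} -> {post} ({absolute:+}, {rel_text})")
```

For example, ChatGPT inclusion moving from 22% to 39% is a gain of 17 percentage points, or roughly 77% in relative terms. Stating which of the two a summary uses avoids overstating or understating the same result.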

Limitations and notes: These figures are for demonstration only; your results will vary by category, authority, and cadence of publishing. Always attach a methodology appendix with your exact dataset, timeframe, and rules. When you brief stakeholders, contrast engine behavior. For example, Perplexity may reward reference-rich explainers; Google AI Overviews can hinge more on site authority plus structured data.

For independent best practices you can cite alongside your results, tie your recommendations to 2025 platform notes and industry frameworks: Google’s guidance on people-first content and structured data, Microsoft’s emphasis on modular, snippable sections, and playbooks such as Search Engine Land’s framework or AIS Media’s AEO/GEO guide (links above).

A Fair Look at Alternatives (and How to Choose)

The market generally splits into two paths. Some teams extend traditional SEO suites (Semrush, Ahrefs, Moz) that now include limited AI monitoring. Others adopt dedicated AI visibility trackers built for ChatGPT, Perplexity, and Google AI Overviews. Rather than tallying features, pressure-test how each option fits agency realities:

Engines covered and update cadence: Are ChatGPT, Perplexity, and Google AI Overviews all tracked with timely detection?

White label depth: Can you control portal branding, sender email, and report headers/footers across many clients?

Custom domain and access: Does it support HTTPS on your subdomain and role-based permissions? Is SSO available?

Reporting customization: Can you build KPI-specific templates, schedule exports, and produce exec-ready summaries without a data team?

Data portability: Are CSV/JSON exports and APIs available so analysts can run their own models?

The selection isn’t about hype—it’s about whether the platform reduces analyst toil, sharpens your roadmap, and helps you look great in front of clients.

Next Steps for Agencies

Define a standard query set per client (200–500 prompts), split by funnel stage and intent, across ChatGPT, Perplexity, and Google AI Overviews.

Stand up white-label reporting on your subdomain with role-based access, scheduled PDF/CSV reports, and a change log.

Anchor optimization sprints to what AI engines actually cite; prioritize answer-forward content, authorship, sourcing, and schema.

Report the five KPIs monthly and benchmark quarterly; include a one-page summary and an auditable appendix.

Ready to put an agency-first workflow around AI search visibility? Start free to configure multi-client workspaces, white-label dashboards on your domain, holistic cross-engine tracking, and customized executive reports.