How to Get Content Cited by ChatGPT & Perplexity: Agency Best Practices

An advanced agency guide to creating AI-optimized content that gets cited by ChatGPT and Perplexity. Includes schema workflows, authority signals, and measurement tactics.

If your clients ask, “Why aren’t we showing up in AI answers?”, they aren’t just chasing clicks. They’re chasing visibility where decisions now start. Being cited inside ChatGPT or Perplexity means your content is deemed trustworthy enough to stand beside the answer itself. That reputation effect can influence brand recall and downstream conversions—even when no click happens.

This guide lays out a pragmatic, evidence‑backed playbook for agencies: how to structure pages for extraction, what authority signals matter, where freshness actually helps, how ChatGPT and Perplexity differ, and how to measure wins beyond blue links.

ChatGPT vs. Perplexity: how citations appear and what they favor

ChatGPT’s web answers include source cards or a Sources sidebar when browsing is used, with emphasis on a few named references. OpenAI’s materials show these references appear when ChatGPT searches or browses the web, especially in modes that expose sources in‑line for verification (Introducing ChatGPT search).

Perplexity performs real‑time searches and highlights citations prominently, typically listing multiple sources per response; Pro Search expands depth and focus, with visible numbered references next to claims (How does Perplexity work?).

Across 2024–2025 industry analyses, two patterns stand out. First, Perplexity tends to cite more sources per answer than ChatGPT across test sets, increasing opportunities for inclusion (AI engines often cite third‑party content, Search Engine Journal, 2024). Second, video—especially YouTube—shows up disproportionately in AI answers across platforms (YouTube dominates AI search citations, Search Engine Land, 2025). For planning, that means aiming for clear, extractable facts and unique angles to earn a place in ChatGPT’s tighter source lists, while ensuring your pages and videos present corroborated, skimmable evidence to be among Perplexity’s broader citations.

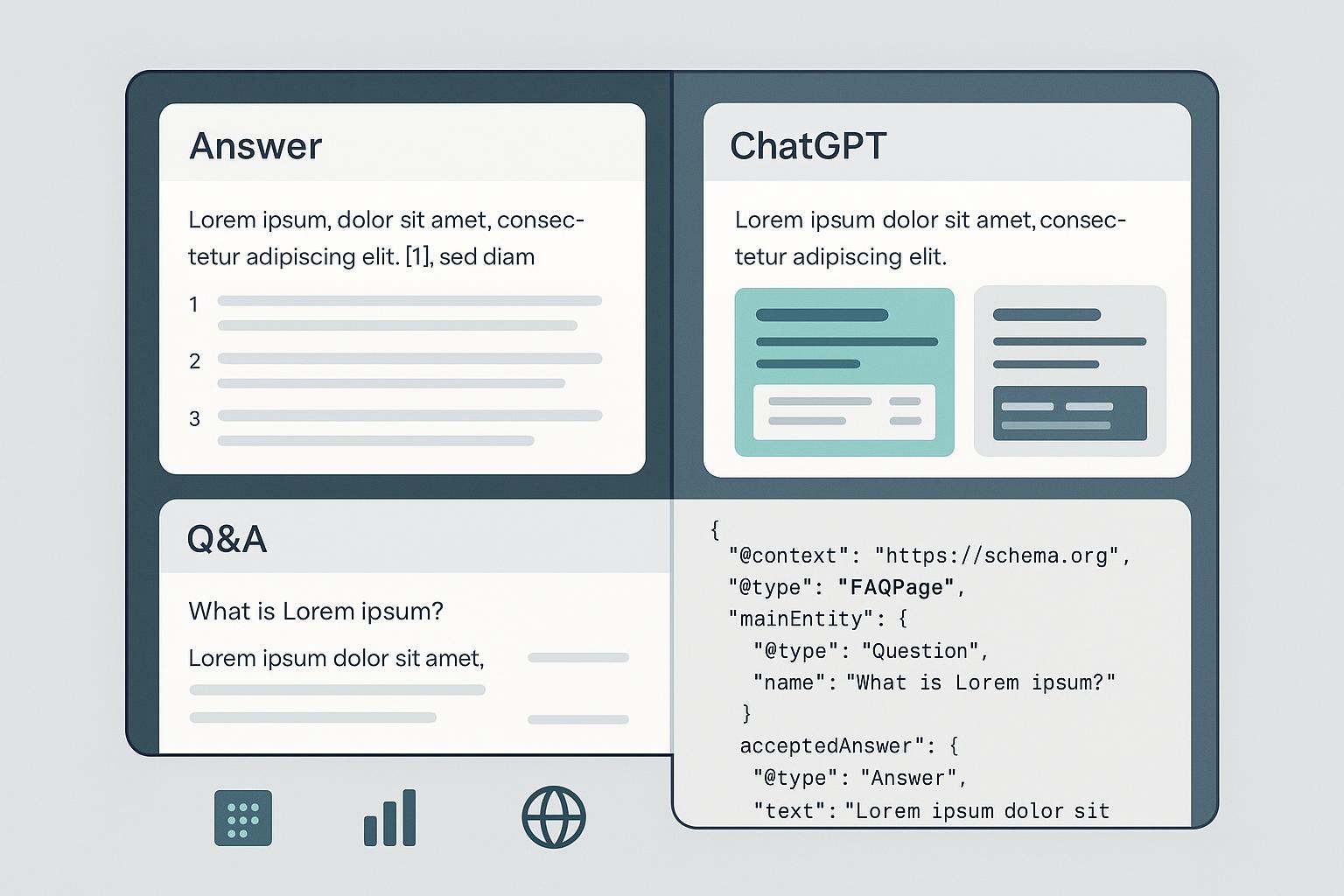

Build AI‑citable pages: structure, Q&A sections, and schema

Generative engines extract answers; they don’t just rank pages. Give them clean “grab points.” Lead with the outcome: open with a direct answer, then backfill with reasoning, methods, and references. Short, unambiguous statements near the top make extraction easier. Add an on‑page Q&A module with a handful of specific questions your audience actually asks, each with a concise stand‑alone answer. Use scannable anchors sparingly (one small table or a short list) to summarize criteria, comparisons, or steps. Finally, mark up Q&A content correctly: for site‑owned FAQs prefer FAQPage, and for community‑style multi‑answer content use QAPage. Pair it with Article or BlogPosting markup for authorship and dates, validate with Google’s Rich Results Test, and ensure the markup mirrors visible content.

Example: minimal Article and FAQPage markup embedded on an article page.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "How to get cited by AI engines",
  "author": {
    "@type": "Person",
    "name": "Ava Patel"
  },
  "datePublished": "2025-06-10",
  "dateModified": "2025-12-15",
  "mainEntityOfPage": {
    "@type": "WebPage",
    "@id": "https://example.com/ai-citations-guide"
  }
}
</script>

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What increases the chance of being cited by AI?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Clear answer-first sections, verifiable facts with citations, and up-to-date content with accurate timestamps often help engines extract and attribute your content."
      }
    },
    {
      "@type": "Question",
      "name": "Should I update pages frequently?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Only when you make meaningful changes. Update timestamps must reflect genuine edits rather than superficial tweaks."
      }
    }
  ]
}
</script>
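One way to keep markup and visible copy in sync is to generate both from the same list of question-and-answer pairs. The following is a minimal Python sketch under that assumption; `build_faq_jsonld` and `to_script_tag` are hypothetical helper names, not part of any library, and the sample pair is illustrative.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage dict from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question.strip(),
                "acceptedAnswer": {"@type": "Answer", "text": answer.strip()},
            }
            for question, answer in qa_pairs
        ],
    }


def to_script_tag(data):
    """Serialize the dict into a JSON-LD script element."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so text inside an answer cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical input: the same pairs that are rendered in the visible Q&A module.
qa_pairs = [
    ("Should I update pages frequently?", "Only when you make meaningful changes."),
]
print(to_script_tag(build_faq_jsonld(qa_pairs)))
```

Rendering the visible Q&A module from the same `qa_pairs` list is what keeps the two from drifting apart; the generated markup should still be run through a validator before publishing.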

Authority that LLMs can trust: E‑E‑A‑T made operational

You can’t will citations into existence, but you can earn them. Make expertise visible with real bylines that map to robust author pages, include original datasets or pilot results, and cite primary sources. Use consistent terminology for topics and organizations, and add “sameAs” links to official profiles where relevant. Build tight internal content clusters to show topical depth. Site‑wide trust still matters: fast pages, HTTPS, clean UX, clear About/Contact pages, and transparent editorial standards. These align with Google’s site‑owner guidance on helpful, reliable content for AI surfaces (AI features and your website, 2025).
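As a rough illustration of the “sameAs” advice, an author page could carry Person markup along these lines. This is a sketch only: `author_jsonld` is a hypothetical helper, and the profile URLs are placeholders rather than real accounts.

```python
import json


def author_jsonld(name, profile_url, same_as):
    """Build schema.org Person markup for an author page."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "url": profile_url,
        # De-duplicate and sort so the output is stable between builds.
        "sameAs": sorted(set(same_as)),
    }


# Placeholder URLs for illustration only.
author = author_jsonld(
    "Ava Patel",
    "https://example.com/authors/ava-patel",
    ["https://www.linkedin.com/in/example", "https://github.com/example"],
)
print(json.dumps(author, indent=2))
```

Using the same name string here, in the byline, and in the Article markup is what ties the signals together.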

Freshness without fluff: when to update and how to signal it

Engines care about current answers, not cosmetic timestamp churn. Make meaningful updates (new data, changed recommendations, altered steps) and reflect them in on‑page content; then update dateModified. Keep sitemaps accurate and only change lastmod when the page content has genuinely changed.
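A publishing script can guard against timestamp churn mechanically. The sketch below assumes you have the old and new body text at hand; `content_fingerprint` and `next_date_modified` are hypothetical names. It only filters out whitespace and letter-case changes, so deciding whether an edit is truly meaningful remains an editorial call.

```python
import hashlib
import re
from datetime import date


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and case, so cosmetic edits don't register."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def next_date_modified(old_text, new_text, current_date_modified, today=None):
    """Return the dateModified value to publish after an edit."""
    if content_fingerprint(old_text) == content_fingerprint(new_text):
        return current_date_modified  # nothing substantive changed, keep the old date
    return (today or date.today()).isoformat()
```

The same returned value can feed both the JSON-LD dateModified field and the sitemap entry so the two never disagree.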

Multi‑format: why video can lift your odds

If YouTube is frequently cited in AI answers, meet engines where they already shop for proof. Produce a concise video walkthrough that complements your written guide—include chapter markers, on‑screen citations, and a text transcript on the page. Use the transcript to reiterate the key answer and data points that an engine might quote. A practical pattern is a 5–8 minute explainer on the exact query, a cleaned transcript, and one compact comparison table or checklist beneath it for fast extraction.

Measurement SOPs: GA4 and indirect visibility audits

You need two vantage points: measurable referrals and visibility without clicks. In GA4, create a custom channel group with an “AI/LLM Traffic” channel that buckets referrals from chatgpt.com and perplexity.ai, and order it above “Referral” so the rule is evaluated first. Use a case‑sensitive regex and test it on a rolling 28‑day window; this approach is outlined in industry guidance on segmenting LLM traffic (Segment LLM traffic in GA4, Search Engine Land, 2024). In Looker Studio, chart sessions, engaged sessions, and conversions for AI/LLM Traffic and compare against Organic Search to set expectations. Separately, run weekly scripted or manual checks for target queries in ChatGPT and Perplexity, logging whether you’re cited, which URL appears, and competing domains, with a rolling archive of screenshots.

| Task | GA4 Field | Example Pattern/Setting |
|---|---|---|
| Channel rule name | Custom channel group | AI/LLM Traffic |
| Include sources | Session source | `^(chatgpt\.com\|perplexity\.ai)$` |
| Medium condition | Session medium | referral |
| Landing page slice | Page path + query string | Filter by priority URLs |
| Validation | Traffic Acquisition report | Compare 28‑day vs. 90‑day |
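Before saving the rule in GA4, it can help to test the include-sources pattern offline against exported source values. The sketch below uses Python's `re` module as a stand-in for GA4's regex engine (an assumption; the two behave the same for a pattern this simple), with the dots escaped so they match literal periods. `classify_session` is a hypothetical helper.

```python
import re

# Full-match pattern on the session source, case-sensitive as in the channel rule.
AI_SOURCE_PATTERN = re.compile(r"^(chatgpt\.com|perplexity\.ai)$")


def classify_session(source, medium):
    """Mimic the channel ordering: the AI/LLM rule is checked before Referral."""
    if medium == "referral" and AI_SOURCE_PATTERN.match(source):
        return "AI/LLM Traffic"
    if medium == "referral":
        return "Referral"
    return "Other"


for source in ["chatgpt.com", "perplexity.ai", "www.perplexity.ai", "news.example"]:
    print(source, "->", classify_session(source, "referral"))
```

Because the pattern is anchored, a subdomain such as `www.perplexity.ai` falls through to Referral; if your exports show such variants, widen the pattern deliberately instead of dropping the anchors.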

Example agency workflow for AI citation monitoring

Disclosure: Geneo is our product.

Weekly, crawl priority topics and refresh Q&A sections only when facts or steps change, publishing brief changelogs on page. Twice weekly, check Perplexity for target queries such as “best [category] in [city]” and “alternatives to [brand],” capturing new or lost citations and noting which sentences appear to be quoted. In ChatGPT’s search mode, test high‑intent queries and track whether your page or video appears in the smaller set of sources. Monthly, review GA4’s AI/LLM Traffic and annotate spikes alongside updates or campaigns, then compare visibility logs to traffic to demonstrate the value of zero‑click exposure.

Agencies often centralize this evidence in white‑label dashboards, combining “are we cited?” checks with rolling metrics such as share of voice and total mentions. For a neutral overview of cross‑engine tracking methods that span ChatGPT, Perplexity, and Google’s AI surfaces, see this comparison of monitoring approaches: ChatGPT vs. Perplexity vs. Gemini vs. Bing monitoring comparison.
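The recurring checks described above reduce to a simple log plus a diff between consecutive runs. Here is a minimal sketch of that idea, assuming each check records every URL the answer cited; `CitationCheck`, `is_ours`, and `diff_citations` are hypothetical names, and how the URLs are collected (manually or by script) is left open.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass(frozen=True)
class CitationCheck:
    checked_on: date
    engine: str            # e.g. "perplexity" or "chatgpt"
    query: str
    cited_urls: frozenset  # every URL the answer cited, ours and competitors'


def is_ours(url, our_domain):
    """True if the URL's host is our domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == our_domain or host.endswith("." + our_domain)


def diff_citations(previous, current, our_domain):
    """Compare two checks of the same engine and query; report our gained and lost URLs."""
    before = {u for u in previous.cited_urls if is_ours(u, our_domain)}
    after = {u for u in current.cited_urls if is_ours(u, our_domain)}
    return {"gained": sorted(after - before), "lost": sorted(before - after)}
```

Storing the competitor URLs alongside your own is what later makes share-of-voice style metrics possible from the same log.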

Troubleshooting and recovery: diagnose drops and fix root causes

When citations slip, confirm the loss with fresh tests in both engines and on multiple accounts, saving timestamped screenshots. Review the competing sources now being cited and identify what they provide that your page lacks: newer data, a cleaner Q&A, or video proof. Validate your structured data, ensuring JSON‑LD matches visible content and removing markup from sections that don’t qualify. Rewrite the first two paragraphs for clarity and specificity if they aren’t crisp enough to quote. Publish a meaningful update if substance is missing (add a small dataset, a procedure diagram, or a short video with transcript) and track post‑update results for a few weeks as engines recrawl.
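The “JSON‑LD matches visible content” check can be partly automated. The sketch below, built on Python's standard `html.parser`, flags FAQ questions or answers that appear in the markup but not in the page's visible text. `PageScanner` and `faq_entries_missing_from_page` are hypothetical names, the match is a plain substring comparison, and it does not account for text hidden by CSS, so treat hits as prompts for manual review.

```python
import json
from html.parser import HTMLParser


class PageScanner(HTMLParser):
    """Collect JSON-LD payloads and visible text from an HTML document."""

    def __init__(self):
        super().__init__()
        self.jsonld, self.text, self._buf = [], [], []
        self._mode = "text"  # "text", "jsonld", or "skip"

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            is_ld = dict(attrs).get("type") == "application/ld+json"
            self._mode, self._buf = ("jsonld" if is_ld else "skip"), []
        elif tag == "style":
            self._mode = "skip"

    def handle_endtag(self, tag):
        if tag == "script" and self._mode == "jsonld":
            self.jsonld.append("".join(self._buf))
        if tag in ("script", "style"):
            self._mode = "text"

    def handle_data(self, data):
        if self._mode == "jsonld":
            self._buf.append(data)
        elif self._mode == "text":
            self.text.append(data)


def normalize(s):
    return " ".join(s.split()).lower()


def faq_entries_missing_from_page(html):
    """Return FAQ questions or answers in JSON-LD that do not appear in the visible text."""
    scanner = PageScanner()
    scanner.feed(html)
    visible = normalize("".join(scanner.text))
    missing = []
    for raw in scanner.jsonld:
        data = json.loads(raw)
        for block in data if isinstance(data, list) else [data]:
            if block.get("@type") != "FAQPage":
                continue
            for item in block.get("mainEntity", []):
                for text in (item["name"], item["acceptedAnswer"]["text"]):
                    if normalize(text) not in visible:
                        missing.append(text)
    return missing
```

An empty list means every marked-up question and answer was found in the visible copy; anything returned is a candidate for either restoring on the page or removing from the markup.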

Internationalization and localization: target markets and languages

Engines answer in the user’s language and often prefer sources that match locale. Create localized variants where intent or regulations differ by region, adjusting examples, pricing norms, screenshots, and citations to local authorities. Local queries matter—Perplexity surfaces highly specific, location‑bound results for “best [service] in [city].” Build city pages with unique Q&A sections and locally verifiable facts, keep hreflang correct, and ensure each locale’s sitemap and lastmod values are accurate and trustworthy.
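Keeping hreflang correct is easier when every locale variant emits the same generated set of alternate links. The following is a minimal sketch; `hreflang_links` is a hypothetical helper and the example.com URLs are placeholders.

```python
from html import escape


def hreflang_links(variants, x_default=None):
    """Render <link rel="alternate"> tags for every locale variant of one page.

    variants maps a language-region code (e.g. "en-us") to that variant's URL.
    Every variant page should carry the full set, including a link to itself.
    """
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(variants.items())
    ]
    if x_default:
        lines.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return "\n".join(lines)


# Placeholder URLs for illustration only.
print(hreflang_links(
    {"en-us": "https://example.com/us/", "de-de": "https://example.com/de/"},
    x_default="https://example.com/",
))
```

Emitting the identical block on each variant keeps the annotations reciprocal, which is the most common place hreflang setups break.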

Next steps

Pilot this with one high‑stakes topic: draft an answer‑first article, add a tight FAQ, embed valid schema, pair it with a short video and transcript, and set up GA4 plus a weekly citation log. If you need a neutral reference on what agencies typically track in AI visibility programs, this overview outlines a common baseline: Geneo (Agency) overview. For additional analytic context around AI traffic and reporting, see this practical guide: Best practices for AI traffic tracking in 2025.

One last thought: are you creating the single clearest answer on the topic, with proof and recency? If not, start there—everything else is just polish.