AI-First Search Engines in 2025: Key Changes & Marketer Moves

Discover how Google AI Overviews, ads inside AI answers, and 2025's new AI search experiences are redefining marketing performance, with expert tips you can act on now.

Updated on 2025‑11‑18

If your dashboards still treat “blue-link rank” as the north star, you’re flying with last year’s map. In 2025, the unit of search has shifted from pages to answers. That shift doesn’t kill SEO or paid search—but it rewires what we optimize, how we measure, and where budgets go.

What actually changed in 2025

Google made two moves that matter. First, AI Overviews scaled globally, summarizing answers with source citations and compressing the classic results around them. Second, Google introduced AI Mode, a more conversational, multimodal search experience that is set to carry ads inside the AI-generated answer. In its May 20, 2025 Search blog post, “AI in Search: Going beyond information to intelligence,” Google called AI Mode “our most powerful AI search” and cited increased usage in markets where AI Overviews appear, such as the U.S. and India: Google Search blog on AI Mode (May 20, 2025). At Google Marketing Live 2025, Google confirmed that ads are eligible to show within AI Overviews and are “coming to AI Mode.” These ads draw on existing Search, Shopping, and Performance Max inventory, with no dedicated placement control: Google Marketing Live 2025 ads & AI roundup.

Outside Google, Microsoft emphasized a citation-forward approach in Copilot—answers show where information comes from with clear, clickable sources—and, as of November 2025, has not announced ads embedded inside Copilot’s AI chat answers. Microsoft’s November 7, 2025 update stresses transparent sourcing: Microsoft Copilot blog on citation-forward answers (Nov 7, 2025).

Perplexity doubled down on retrieval-backed answers with explicit, inline citations and “Deep Research,” which performs dozens of searches and aggregates sources, then surfaces a linked report: Perplexity’s Deep Research announcement (Feb 14, 2025).

What this does to performance

When AI answers appear, clicks tend to fall, for both organic and paid results. The magnitude varies by dataset, timeframe, and query intent. For example, a July 2025 Pew Research Center study of U.S. adults found that users were less likely to click on a link when Google showed an AI summary than when it did not: Pew Research on lower link clicking when AIO appears (July 22, 2025). In practitioner data, Seer Interactive's multi-client analysis of January through September 2025 found that informational queries with AI Overviews saw a 61% decline in organic CTR and a 68% decline in paid CTR relative to non-AIO queries, and that brands cited inside AI Overviews fared better than those left out: Seer's AIO impact update (Nov 4, 2025).

Prevalence also shifted over the year. Early-2025 snapshots of U.S. desktop results showed AI Overviews on a low-teens percentage of queries; by late 2025, some trackers measured roughly 30% on U.S. desktop, with higher rates on informational queries. Figures vary by device, geography, and the query set being tracked. The takeaway: measure performance in separate cohorts (queries with AI answers vs. without) and watch how the mix shifts over time.
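To make the cohort comparison concrete, here is a minimal Python sketch that splits query-level rows into AI-answer and non-AI-answer cohorts, reports each cohort's CTR, and computes the relative change (the same kind of figure as the Seer percentages above). The `QueryRow` fields are a hypothetical schema; map them to whatever your rank tracker or Search Console export provides. The example numbers are illustrative, not real data.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    # Hypothetical export schema: one row per query for the reporting period.
    query: str
    has_ai_answer: bool  # True if an AI Overview was observed for this query
    impressions: int
    clicks: int


def cohort_ctr(rows: list[QueryRow], with_ai: bool) -> float:
    """Aggregate CTR (total clicks / total impressions) for one cohort."""
    cohort = [r for r in rows if r.has_ai_answer == with_ai]
    impressions = sum(r.impressions for r in cohort)
    clicks = sum(r.clicks for r in cohort)
    return clicks / impressions if impressions else 0.0


def relative_ctr_change(rows: list[QueryRow]) -> float:
    """CTR of the AI-answer cohort divided by the non-AI cohort's CTR, minus 1.

    For example, -0.61 means the AI-answer cohort's CTR is 61% lower.
    """
    baseline = cohort_ctr(rows, with_ai=False)
    if baseline == 0:
        raise ValueError("non-AI cohort CTR is zero; relative change is undefined")
    return cohort_ctr(rows, with_ai=True) / baseline - 1


if __name__ == "__main__":
    # Illustrative numbers only, not real data.
    rows = [
        QueryRow("what is a crm", True, 10_000, 100),
        QueryRow("crm pricing", False, 5_000, 200),
        QueryRow("best crm for startups", True, 4_000, 40),
        QueryRow("crm login", False, 5_000, 200),
    ]
    print(f"AI cohort CTR:     {cohort_ctr(rows, True):.2%}")      # 1.00%
    print(f"Non-AI cohort CTR: {cohort_ctr(rows, False):.2%}")     # 4.00%
    print(f"Relative change:   {relative_ctr_change(rows):+.0%}")  # -75%
```

Summing clicks and impressions before dividing, rather than averaging per-query CTRs, keeps low-volume queries with noisy CTRs from carrying as much weight as high-volume ones.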

Think of it this way: the answer card is the new “position zero,” and your objective is inclusion with a credible citation, not just a top blue link. That doesn’t mean blue links are irrelevant; it means they’re no longer the only battleground.

An inclusion playbook that works now (organic + paid)

On-page and content fundamentals

- Refresh cornerstone pages every 30–60 days on high-value topics. Lead with a concise, authoritative summary, then support it with current data, primary-source citations, and an FAQ block that mirrors follow-up questions.

- Add and validate structured data (Article, FAQ, Product, Organization). Make authorship and publisher metadata explicit. Ensure your citations are crawlable and point to canonical, dated sources.

- Publish original, linkable evidence: tables, mini datasets, and visuals that others reference. Retrieval-first engines prefer material they can cite.
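As a minimal sketch of the structured-data step, the Python below builds schema.org JSON-LD for an Article (explicit author, publisher, dates, and cited sources via the `citation` property) and for an FAQPage, then wraps each in a `<script type="application/ld+json">` tag. The function names, page title, people, and URLs are hypothetical placeholders; validate the real output with Google's Rich Results Test or the Schema Markup Validator before shipping.

```python
import json


def article_jsonld(headline, author_name, publisher_name,
                   date_published, date_modified, cited_urls):
    """schema.org Article markup with explicit authorship and dates."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
        "datePublished": date_published,  # ISO 8601, e.g. "2025-11-18"
        "dateModified": date_modified,
        # CreativeWork.citation: the sources this page cites.
        "citation": list(cited_urls),
    }


def faq_jsonld(pairs):
    """schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data):
    """Serialize markup into a JSON-LD <script> tag.

    '</' is escaped as '<\\/' (still valid JSON) so text inside the
    markup cannot close the script element early.
    """
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical page details; replace with your own.
    print(to_script_tag(article_jsonld(
        "CRM pricing benchmarks, Q4 2025", "Jane Doe", "Example Co",
        "2025-09-01", "2025-11-18", ["https://example.com/source-report"],
    )))
    print(to_script_tag(faq_jsonld([
        ("How often is this data refreshed?", "Every 30 to 60 days."),
    ])))
```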

Paid preparedness

Write test plans that ring-fence a small slice of budget to observe how ads perform when they are eligible to appear inside AI Overviews and AI Mode. Until Google provides breakout reporting for those placements, monitor impression share, landing-page scroll depth, and assisted conversions for intents that tend to trigger AI answers. Where sensible, consolidate into robust responsive search ads (RSAs) and Performance Max, but keep enough query-level data to identify which intents frequently trigger AI answers.

A neutral micro-workflow to audit inclusion and sentiment

- Start with a target query list. Run neutral, logged-out checks to see whether AI Overviews appear and which sources are cited.

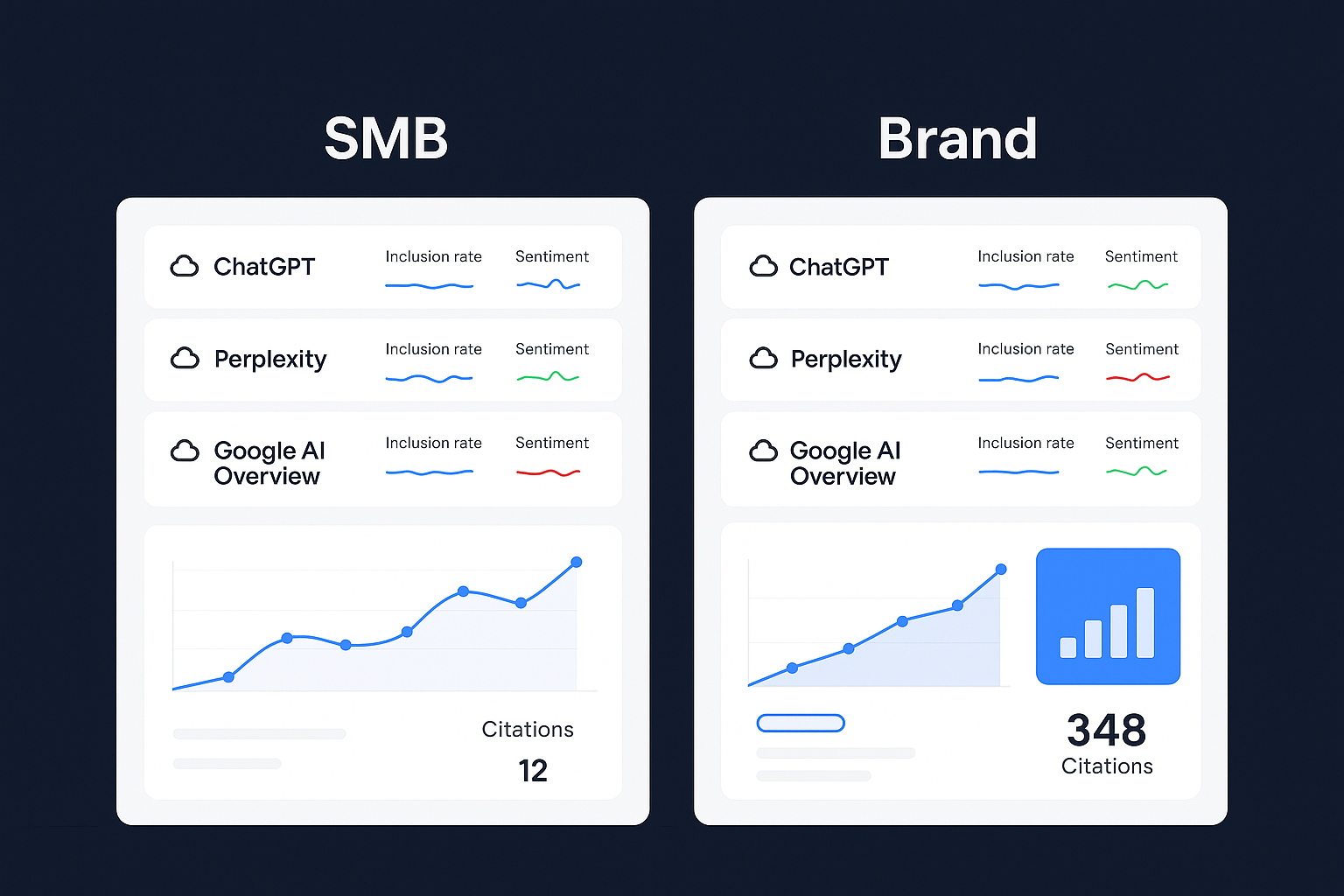

- For cross-engine monitoring and to track whether your pages are cited (and how they’re described), you can use Geneo. Disclosure: Geneo is our product. It helps monitor AI answer inclusion across Google AI Overviews, ChatGPT, Perplexity, and others, and it records sentiment/context in those answers over time.

- When comparing engines and use cases, see this explainer on cross-engine AI visibility monitoring (comparison guide), and review a representative query-level report example with screenshots to understand how inclusion and citations are captured.
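If you log the neutral checks yourself, a simple record per check is enough to start. The minimal Python sketch below stores each logged-out observation (query, date, whether an AI Overview appeared, cited URLs) and tags each query's AI-answer propensity as always, conditional, or rare, the tiers used in the measurement section and the 30-day plan. The `Check` fields and the 0.8/0.2 thresholds are assumptions to tune, not an industry standard.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Check:
    # One neutral, logged-out observation of a query (hypothetical schema).
    query: str
    checked_on: date
    ai_overview_shown: bool
    cited_urls: list[str] = field(default_factory=list)


def propensity_tiers(checks, always_at=0.8, rare_below=0.2):
    """Tag each query by how often an AI Overview appeared across its checks.

    Assumed thresholds: rate >= always_at -> "always",
    rate < rare_below -> "rare", anything in between -> "conditional".
    """
    seen = defaultdict(lambda: [0, 0])  # query -> [times shown, total checks]
    for c in checks:
        seen[c.query][0] += int(c.ai_overview_shown)
        seen[c.query][1] += 1
    tiers = {}
    for query, (shown, total) in seen.items():
        rate = shown / total
        if rate >= always_at:
            tiers[query] = "always"
        elif rate < rare_below:
            tiers[query] = "rare"
        else:
            tiers[query] = "conditional"
    return tiers


if __name__ == "__main__":
    log = [
        Check("what is a crm", date(2025, 11, 3), True, ["https://example.com/crm-guide"]),
        Check("what is a crm", date(2025, 11, 10), True),
        Check("crm login", date(2025, 11, 3), False),
        Check("crm login", date(2025, 11, 10), False),
        Check("crm for startups", date(2025, 11, 3), True),
        Check("crm for startups", date(2025, 11, 10), False),
    ]
    print(propensity_tiers(log))
    # {'what is a crm': 'always', 'crm login': 'rare', 'crm for startups': 'conditional'}
```

Tiers based on only a handful of checks are noisy, so repeating checks across days before locking in a tag is a reasonable default.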

Measurement reset: what to track weekly

- AI answer inclusion rate by query set (always/conditional/rare).

- Citation count and share for your pages vs. competitors inside AI answers.

- Sentiment and context of brand mentions in AI answers.

- Downstream outcomes: assisted conversions and revenue from pages cited inside AI answers vs. those not cited.
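The first two metrics reduce to simple ratios once each week's checks are logged. This minimal Python sketch assumes each observation is a dict recording the query's tier, whether an AI answer appeared, and the cited URLs. It computes the share of AI answers per query set that cite your domain, and each domain's share of all citations. Here "inclusion" means at least one cited URL on your domain, which is one reasonable definition among several; `example.com` and `rival.com` are placeholders.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Host of a cited URL, lowercased, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def inclusion_rate_by_tier(observations, our_domain):
    """Per query set, the share of observed AI answers citing our domain at least once."""
    answers = defaultdict(int)
    included = defaultdict(int)
    for obs in observations:
        if not obs["ai_answer"]:
            continue  # no AI answer appeared, so there was nothing to be included in
        answers[obs["tier"]] += 1
        if any(domain_of(u) == our_domain for u in obs["cited_urls"]):
            included[obs["tier"]] += 1
    return {tier: included[tier] / n for tier, n in answers.items()}


def citation_share(observations):
    """Each domain's share of all citations across observed AI answers."""
    counts = Counter(
        domain_of(u)
        for obs in observations
        if obs["ai_answer"]
        for u in obs["cited_urls"]
    )
    total = sum(counts.values())
    return {d: c / total for d, c in counts.most_common()}


if __name__ == "__main__":
    # One week of hypothetical logged checks.
    week = [
        {"tier": "always", "ai_answer": True,
         "cited_urls": ["https://www.example.com/guide", "https://rival.com/post"]},
        {"tier": "always", "ai_answer": True, "cited_urls": ["https://rival.com/a"]},
        {"tier": "conditional", "ai_answer": False, "cited_urls": []},
        {"tier": "conditional", "ai_answer": True,
         "cited_urls": ["https://example.com/faq"]},
    ]
    print(inclusion_rate_by_tier(week, "example.com"))  # {'always': 0.5, 'conditional': 1.0}
    print(citation_share(week))
```

Sentiment and assisted conversions come from other sources (answer text review and your analytics platform), so they are left out of this sketch.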

Mini change‑log: fast‑moving facts to watch

The facts below move quickly. Keep your own dated tracker and replace entries as new data emerges.

| Date | What changed | Source |
|---|---|---|
| May 20, 2025 | Google details AI Mode; notes increased usage where AI Overviews appear (U.S., India) | Google Search blog: “AI in Search: Going beyond information to intelligence” |
| May 2025 | Ads are eligible inside AI Overviews and are coming to AI Mode; no dedicated placement control exposed | Google Marketing Live 2025 ads & AI roundup |
| Jul 22, 2025 | Users less likely to click links when an AI summary appears | Pew Research Center short read (U.S. adults) |
| Nov 4, 2025 | Informational queries with AIO: −61% organic CTR, −68% paid CTR vs. non‑AIO | Seer Interactive multi‑client update |
| Nov 7, 2025 | Microsoft reiterates citation‑forward Copilot answers; no first‑party ads‑in‑answers announcement | Microsoft Copilot blog |
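If you keep this tracker in code or a notebook instead of a spreadsheet, "replace entries as new data emerges" can be as simple as keying each fact by topic and keeping only the newest dated entry. The minimal Python sketch below assumes that approach; the `TrackerEntry` fields mirror the table columns, and topic keys such as `aio-ctr-impact` are hypothetical labels you would define yourself.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class TrackerEntry:
    topic: str          # hypothetical stable key, e.g. "aio-ctr-impact"
    changed_on: date
    what_changed: str
    source: str


class ChangeTracker:
    """Keep only the most recent entry per topic; older facts are superseded."""

    def __init__(self):
        self._latest: dict[str, TrackerEntry] = {}

    def record(self, entry: TrackerEntry) -> bool:
        """Store the entry if it is newer than the current one for its topic.

        Returns True if the tracker changed.
        """
        current = self._latest.get(entry.topic)
        if current is not None and current.changed_on >= entry.changed_on:
            return False
        self._latest[entry.topic] = entry
        return True

    def rows(self):
        """Entries sorted by date, ready to render as the change-log table."""
        return sorted(self._latest.values(), key=lambda e: e.changed_on)


if __name__ == "__main__":
    tracker = ChangeTracker()
    tracker.record(TrackerEntry(
        "aio-ctr-impact", date(2025, 11, 4),
        "Informational queries with AIO: -61% organic CTR, -68% paid CTR vs. non-AIO",
        "Seer Interactive multi-client update"))
    tracker.record(TrackerEntry(
        "copilot-ads", date(2025, 11, 7),
        "Microsoft reiterates citation-forward Copilot answers",
        "Microsoft Copilot blog"))
    for e in tracker.rows():
        print(f"| {e.changed_on:%b %d, %Y} | {e.what_changed} | {e.source} |")
```

This sketch simply drops superseded entries; keeping them in a separate history list would preserve an audit trail.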

Budget and org implications

Budgets shouldn’t chase vanity clicks; they should chase profitable demand. As AI answers compress clicks, you’ll likely shift some spend toward upper-funnel content that earns inclusion and some toward lower-funnel capture where AI answers still hand off to transactional pages. Expect PMax to absorb more creative and placement variation as Google blends inventory into AI experiences. Without explicit AI-answer placement reporting, run controlled tests—by geo, campaign, or device—and read performance at the query cohort level.
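For the geo variant of these controlled tests, one common pattern is to assign regions to test and control deterministically, so the split is reproducible, and then compare outcomes per query cohort. The minimal Python sketch below hashes region names (SHA-256 with a salt) into groups and computes the relative lift in conversions per 1,000 impressions for one cohort. The row fields, the salt, the 50/50 split, and the choice of metric are assumptions; a real test also needs pre-period balance checks and enough volume for significance, which this sketch does not cover.

```python
import hashlib


def assign_group(region: str, salt: str = "aio-geo-test", test_share: float = 0.5) -> str:
    """Deterministically assign a region to 'test' or 'control' using a salted hash.

    The same region and salt always land in the same group, so the split is
    reproducible across reports. Change the salt to draw a fresh split.
    """
    digest = hashlib.sha256(f"{salt}:{region}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform in [0, 1]
    return "test" if bucket < test_share else "control"


def conversions_per_mille(rows) -> float:
    """Conversions per 1,000 impressions across the given rows."""
    impressions = sum(r["impressions"] for r in rows)
    conversions = sum(r["conversions"] for r in rows)
    return 1000 * conversions / impressions if impressions else 0.0


def cohort_lift(rows, cohort: str) -> float:
    """Relative lift of test over control for one query cohort; NaN if not measurable."""
    in_cohort = [r for r in rows if r["cohort"] == cohort]
    test = [r for r in in_cohort if assign_group(r["region"]) == "test"]
    control = [r for r in in_cohort if assign_group(r["region"]) == "control"]
    control_rate = conversions_per_mille(control)
    if not test or control_rate == 0:
        return float("nan")  # need data in both groups to compare
    return conversions_per_mille(test) / control_rate - 1
```

In practice, the test regions would receive the ring-fenced budget change and the control regions would not; `cohort_lift` then reads the result separately for each AI-answer cohort (for example "always" vs. "rare").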

On the org side, merge SEO and SEM reporting into shared dashboards. Replace single-metric scorecards with a bundle: inclusion rate, citation share, sentiment, and assisted conversions. Incentivize teams to publish and refresh evidence-backed summaries rather than chase incremental ranking gains. Keep stakeholders aligned with a quarterly “state of AI answers” briefing that shows screenshots, mix shifts, and the resulting budget moves.

Next steps: a 30‑day plan

- Week 1: Tag your top 50–100 queries by AI‑answer propensity (always/conditional/rare) using neutral tests. Baseline inclusion and citation share. Identify 5–10 pages to refresh first.

- Week 2: Implement on‑page updates (summary + FAQ + schema + dated sources). Launch a small paid test to observe performance in AI‑answer‑heavy cohorts.

- Week 3: Publish original evidence (a small dataset, visual, or benchmark). Set up weekly reporting for inclusion, citations, sentiment, and assisted conversions.

- Week 4: Review test results. Rebalance budget toward cohorts where inclusion or assisted conversions improve, and schedule the next 60‑day refresh cycle.
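The Week 4 rebalancing step can be written down as an explicit rule so the team applies it consistently. The minimal Python sketch below is one hypothetical rule, not a recommended policy: a cohort gains 10% of its budget if its inclusion rate or assisted conversions improved since the Week 1 baseline, loses 10% if both declined, and otherwise holds steady; budgets are then rescaled so total spend is unchanged. The step size, metric names, and cohort labels are assumptions.

```python
def rebalance(budgets, baseline, current, step=0.10):
    """Nudge each cohort's budget by +/- step based on metric changes, keeping the total fixed.

    budgets:           {cohort: weekly spend}
    baseline, current: {cohort: {"inclusion": float, "assisted_conv": float}}
    """
    adjusted = {}
    for cohort, spend in budgets.items():
        before, after = baseline[cohort], current[cohort]
        improved = (after["inclusion"] > before["inclusion"]
                    or after["assisted_conv"] > before["assisted_conv"])
        declined = (after["inclusion"] < before["inclusion"]
                    and after["assisted_conv"] < before["assisted_conv"])
        if improved:
            adjusted[cohort] = spend * (1 + step)
        elif declined:
            adjusted[cohort] = spend * (1 - step)
        else:
            adjusted[cohort] = spend  # no improvement, no clear decline: hold steady
    total_before, total_after = sum(budgets.values()), sum(adjusted.values())
    if total_after == 0:
        return dict(budgets)
    # Rescale so the overall budget is unchanged; only the mix moves.
    scale = total_before / total_after
    return {c: round(v * scale, 2) for c, v in adjusted.items()}


if __name__ == "__main__":
    # Hypothetical Week 1 baseline vs. Week 4 readings.
    budgets = {"always": 1000.0, "conditional": 1000.0, "rare": 1000.0}
    baseline = {
        "always": {"inclusion": 0.20, "assisted_conv": 12},
        "conditional": {"inclusion": 0.35, "assisted_conv": 9},
        "rare": {"inclusion": 0.50, "assisted_conv": 15},
    }
    current = {
        "always": {"inclusion": 0.30, "assisted_conv": 12},
        "conditional": {"inclusion": 0.35, "assisted_conv": 9},
        "rare": {"inclusion": 0.45, "assisted_conv": 13},
    }
    print(rebalance(budgets, baseline, current))
```

A fixed, small step keeps one noisy week from swinging the budget too far; widening the step only after results repeat across refresh cycles is a more cautious variant.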

If you want a single place to track cross‑engine AI answer inclusion, citations, and sentiment over time, you can try Geneo. Disclosure: Geneo is our product. It can help you operationalize the monitoring and reporting pieces while you focus on content and conversion improvements.

One last question for your team: if answers are the new front door, which pages and proofs make you the most cite‑worthy this quarter?